Description

I recently tried to accelerate the YOLOv5s model with TensorRT.

I successfully converted the PyTorch YOLOv5s model to an ONNX model.

However, I ran into problems when converting the ONNX model to a TensorRT engine to accelerate it.

The specific problem is shown in the trtexec output below:

&&&& RUNNING TensorRT.trtexec # trtexec --onnx=yolov5_1_3_640_640_static.onnx --explicitBatch --saveEngine=yolov5_1_3_640_640_static.engine --workspace=2048 --fp16

[08/19/2020-12:20:09] [I] === Model Options ===

[08/19/2020-12:20:09] [I] Format: ONNX

[08/19/2020-12:20:09] [I] Model: yolov5_1_3_640_640_static.onnx

[08/19/2020-12:20:09] [I] Output:

[08/19/2020-12:20:09] [I] === Build Options ===

[08/19/2020-12:20:09] [I] Max batch: explicit

[08/19/2020-12:20:09] [I] Workspace: 2048 MB

[08/19/2020-12:20:09] [I] minTiming: 1

[08/19/2020-12:20:09] [I] avgTiming: 8

[08/19/2020-12:20:09] [I] Precision: FP16

[08/19/2020-12:20:09] [I] Calibration:

[08/19/2020-12:20:09] [I] Safe mode: Disabled

[08/19/2020-12:20:09] [I] Save engine: yolov5_1_3_640_640_static.engine

[08/19/2020-12:20:09] [I] Load engine:

[08/19/2020-12:20:09] [I] Inputs format: fp32:CHW

[08/19/2020-12:20:09] [I] Outputs format: fp32:CHW

[08/19/2020-12:20:09] [I] Input build shapes: model

[08/19/2020-12:20:09] [I] === System Options ===

[08/19/2020-12:20:09] [I] Device: 0

[08/19/2020-12:20:09] [I] DLACore:

[08/19/2020-12:20:09] [I] Plugins:

[08/19/2020-12:20:09] [I] === Inference Options ===

[08/19/2020-12:20:09] [I] Batch: Explicit

[08/19/2020-12:20:09] [I] Iterations: 10

[08/19/2020-12:20:09] [I] Duration: 3s (+ 200ms warm up)

[08/19/2020-12:20:09] [I] Sleep time: 0ms

[08/19/2020-12:20:09] [I] Streams: 1

[08/19/2020-12:20:09] [I] ExposeDMA: Disabled

[08/19/2020-12:20:09] [I] Spin-wait: Disabled

[08/19/2020-12:20:09] [I] Multithreading: Disabled

[08/19/2020-12:20:09] [I] CUDA Graph: Disabled

[08/19/2020-12:20:09] [I] Skip inference: Disabled

[08/19/2020-12:20:09] [I] Inputs:

[08/19/2020-12:20:09] [I] === Reporting Options ===

[08/19/2020-12:20:09] [I] Verbose: Disabled

[08/19/2020-12:20:09] [I] Averages: 10 inferences

[08/19/2020-12:20:09] [I] Percentile: 99

[08/19/2020-12:20:09] [I] Dump output: Disabled

[08/19/2020-12:20:09] [I] Profile: Disabled

[08/19/2020-12:20:09] [I] Export timing to JSON file:

[08/19/2020-12:20:09] [I] Export output to JSON file:

[08/19/2020-12:20:09] [I] Export profile to JSON file:

[08/19/2020-12:20:09] [I]

Input filename: yolov5_1_3_640_640_static.onnx

ONNX IR version: 0.0.6

Opset version: 11

Producer name: pytorch

Producer version: 1.6

Domain:

Model version: 0

Doc string:

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:222: One or more weights outside the range of INT32 was clamped

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:222: One or more weights outside the range of INT32 was clamped

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:222: One or more weights outside the range of INT32 was clamped

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:222: One or more weights outside the range of INT32 was clamped

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:222: One or more weights outside the range of INT32 was clamped

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:222: One or more weights outside the range of INT32 was clamped

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:222: One or more weights outside the range of INT32 was clamped

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:222: One or more weights outside the range of INT32 was clamped

[08/19/2020-12:20:10] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.
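The two kinds of warnings above come from the ONNX parser narrowing INT64 constants to INT32, clamping any value that does not fit. In PyTorch exports, one common source of such values is an open-ended slice (for example `x[..., 1::2]`), whose `ends` is written as INT64 max; clamped to INT32 max it still means "to the end", so these warnings are not necessarily related to the failure. The sketch below only illustrates what the warnings describe; `cast_int64_to_int32` is a hypothetical helper, not TensorRT's implementation.

```python
# Illustrative sketch only: mimics the narrowing the onnx2trt warnings
# describe (INT64 values cast to INT32, out-of-range values clamped).
# This is NOT TensorRT's actual code; cast_int64_to_int32 is hypothetical.
from typing import List, Tuple

INT32_MIN = -(2 ** 31)
INT32_MAX = 2 ** 31 - 1


def cast_int64_to_int32(values: List[int]) -> Tuple[List[int], bool]:
    """Clamp each INT64 value into the INT32 range.

    Returns the narrowed values and whether any value had to be clamped
    (the condition behind "One or more weights outside the range of
    INT32 was clamped").
    """
    narrowed = []
    clamped = False
    for v in values:
        c = min(max(v, INT32_MIN), INT32_MAX)
        if c != v:
            clamped = True
        narrowed.append(c)
    return narrowed, clamped


if __name__ == "__main__":
    # Slice "ends" of INT64 max, as typically exported for an open-ended slice.
    print(cast_int64_to_int32([2 ** 63 - 1]))  # ([2147483647], True)
    # Small shape/index values pass through unchanged.
    print(cast_int64_to_int32([0, 1, 640]))  # ([0, 1, 640], False)
```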

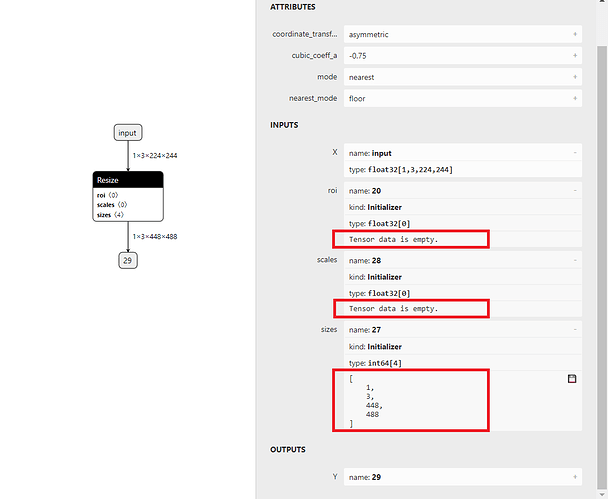

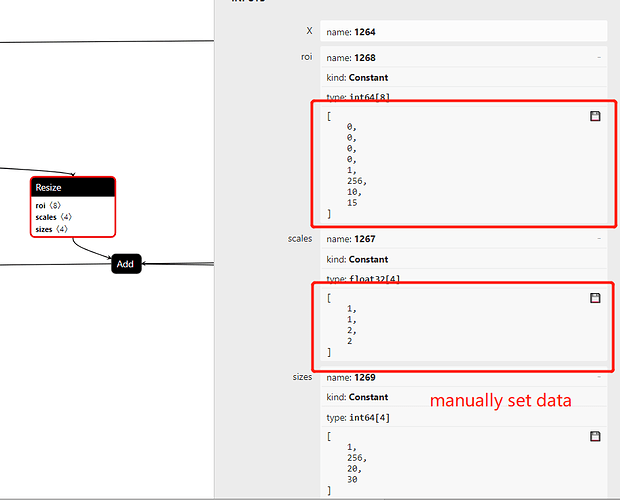

While parsing node number 169 [Resize]:

ERROR: ModelImporter.cpp:124 In function parseGraph:

[5] Assertion failed: ctx->tensors().count(inputName)

[08/19/2020-12:20:10] [E] Failed to parse onnx file

[08/19/2020-12:20:10] [E] Parsing model failed

[08/19/2020-12:20:10] [E] Engine creation failed

[08/19/2020-12:20:10] [E] Engine set up failed

&&&& FAILED TensorRT.trtexec # trtexec --onnx=yolov5_1_3_640_640_static.onnx --explicitBatch --saveEngine=yolov5_1_3_640_640_static.engine --workspace=2048 --fp16
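The failing assertion, `ctx->tensors().count(inputName)`, fires while the parser walks the graph node by node: a non-empty input name of node 169 (`Resize`) was not among the tensors the parser had registered so far (graph inputs, initializers, and outputs of nodes it had already imported). A rough offline check of that property is sketched below. The graph is modeled as plain Python tuples, and `Node` and `find_undefined_inputs` are hypothetical names. A graph can pass this check and still fail in TensorRT 7.0, so treat it only as a first diagnostic.

```python
# Hypothetical diagnostic sketch: reports node inputs that are used before
# anything defines them, i.e. the property the TensorRT ONNX parser asserts
# with ctx->tensors().count(inputName). Not part of TensorRT or onnx.
from typing import Iterable, List, NamedTuple, Tuple


class Node(NamedTuple):
    op_type: str
    inputs: Tuple[str, ...]
    outputs: Tuple[str, ...]


def find_undefined_inputs(
    graph_inputs: Iterable[str],
    initializers: Iterable[str],
    nodes: List[Node],
) -> List[Tuple[int, str, str]]:
    """Return (node_index, op_type, input_name) for every input referenced
    before a graph input, initializer or earlier node output defines it.
    node_index is the 0-based position in the node list."""
    known = set(graph_inputs) | set(initializers)
    problems = []
    for idx, node in enumerate(nodes):
        for name in node.inputs:
            # ONNX marks omitted optional inputs with an empty name.
            if name and name not in known:
                problems.append((idx, node.op_type, name))
        # Outputs become available to all later nodes.
        known.update(node.outputs)
    return problems


if __name__ == "__main__":
    # Toy graph: the Resize node reads "scales", which nothing defines.
    nodes = [
        Node("Conv", ("images", "w"), ("c1",)),
        Node("Resize", ("c1", "roi", "scales"), ("up",)),
    ]
    print(find_undefined_inputs(["images"], ["w", "roi"], nodes))
    # [(1, 'Resize', 'scales')]
```

With the `onnx` package, the inputs could be filled from the real model: load it with `onnx.load(...)`, then pass `[i.name for i in model.graph.input]`, `[t.name for t in model.graph.initializer]` and `[Node(n.op_type, tuple(n.input), tuple(n.output)) for n in model.graph.node]`.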

Environment

TensorRT Version: 7.0.0.11

GPU Type: Tesla V100-SXM2-32GB

Nvidia Driver Version: 418.67

CUDA Version: 10.1

CUDNN Version: 7.6.5

Operating System + Version: Ubuntu 18.04

Python Version (if applicable): 3.6

TensorFlow Version (if applicable):

PyTorch Version (if applicable): 1.6

Baremetal or Container (if container which image + tag):

Relevant Files

Please attach or include links to any models, data, files, or scripts necessary to reproduce your issue. (Github repo, Google Drive, Dropbox, etc.)

Steps To Reproduce

Please include:

- Exact steps/commands to build your repro

- Exact steps/commands to run your repro

- Full traceback of errors encountered
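As a starting point for the repro steps, the failing build command from the log can be scripted. This is a minimal sketch assuming `trtexec` is installed and on `PATH`; `build_trtexec_cmd` is a hypothetical helper, and the flags are exactly those from the run above, plus an optional `--verbose` (the option reported as "Verbose: Disabled" in the log).

```python
# Minimal repro sketch: rebuilds the trtexec command used in this report.
# Assumes trtexec is on PATH; build_trtexec_cmd is a hypothetical helper,
# not part of TensorRT.
import shutil
import subprocess
from typing import List


def build_trtexec_cmd(onnx_path: str, engine_path: str,
                      workspace_mb: int = 2048, fp16: bool = True,
                      verbose: bool = False) -> List[str]:
    """Return the argv for the trtexec invocation shown in the log."""
    cmd = [
        "trtexec",
        "--onnx={}".format(onnx_path),
        "--explicitBatch",
        "--saveEngine={}".format(engine_path),
        "--workspace={}".format(workspace_mb),  # MB in TensorRT 7.0
    ]
    if fp16:
        cmd.append("--fp16")
    if verbose:
        # More detailed parser/builder logging.
        cmd.append("--verbose")
    return cmd


if __name__ == "__main__":
    cmd = build_trtexec_cmd("yolov5_1_3_640_640_static.onnx",
                            "yolov5_1_3_640_640_static.engine")
    print(" ".join(cmd))
    if shutil.which(cmd[0]) is not None:
        # Only runs when trtexec is available.
        completed = subprocess.run(cmd)
        print("trtexec exit code:", completed.returncode)
    else:
        print("trtexec not found on PATH; command printed only")
```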