I have been testing the nvdsanalytics element to determine whether persons are present in a region, but I am getting inconsistent results. My understanding is that nvdsanalytics considers an object to be inside the ROI when the bottom-center coordinate of its bounding box is inside the defined region. However, I see bounding boxes classified as inside the ROI when their bottom line is outside the region, and bounding boxes not detected in the ROI when their bottom line is inside the region.
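To make the expectation concrete, here is a minimal Python sketch of the rule as I understand it: take the bottom-center point of the bounding box and run a point-in-polygon test against the ROI. This is only my assumption of the behavior, not the actual nvdsanalytics implementation; the helper names (`bottom_center`, `point_in_polygon`, `bbox_in_roi`) and the sample box values are hypothetical, and the polygon is the RF ROI from my configuration file.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def bottom_center(left: float, top: float, width: float, height: float) -> Point:
    """Return the bottom-center point of a bounding box given in pixels."""
    return (left + width / 2.0, top + height)


def point_in_polygon(point: Point, polygon: List[Point]) -> bool:
    """Ray-casting test: count polygon edges crossed by a ray going right."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def bbox_in_roi(left: float, top: float, width: float, height: float,
                polygon: List[Point]) -> bool:
    """Assumed rule: the box is in the ROI if its bottom-center point is."""
    return point_in_polygon(bottom_center(left, top, width, height), polygon)


# RF ROI from the analytics configuration file in this post.
ROI_RF = [(0, 643), (1920, 643), (1920, 890), (0, 890)]

# Hypothetical boxes (left, top, width, height), for illustration only.
print(bbox_in_roi(900, 400, 100, 300, ROI_RF))  # bottom edge at y=700
print(bbox_in_roi(900, 300, 100, 300, ROI_RF))  # bottom edge at y=600
```

With this rule, I would expect a box whose bottom edge is at y=700 to be reported inside the ROI and one whose bottom edge is at y=600 to be reported outside, which is not what I consistently observe.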

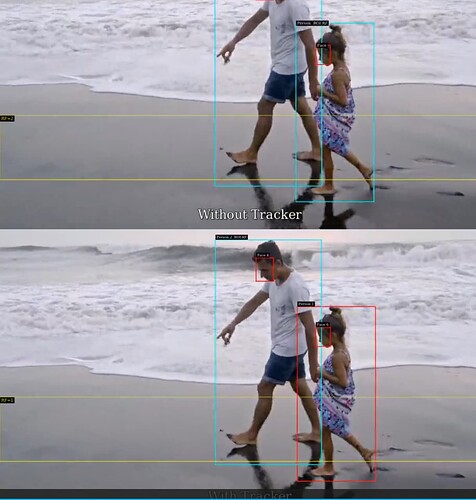

The following example shows how, on some occasions, the man is detected inside the RF ROI when the bottom line of the bounding box is outside the region:

On the other hand, the next video shows that the man is not detected in the ROI even when the bottom line of the bounding box is inside the RF ROI:

I am also getting different results depending on the detector I use. I tested with peoplenet and rtmdet, using the same video and pipeline. Even though I get similar bounding boxes, the analytics ROI detections are different, see below:

- rtmdet

- peoplenet

I tested with the following GStreamer pipeline:

gst-launch-1.0 filesrc location= test-walking.mp4 ! qtdemux ! h264parse ! nvv4l2decoder ! .sink_0 nvstreammux name=m width=1920 height=1080 batch-size=1 live-source=1 ! nvinfer config-file-path= peoplenet/config_infer_primary_peoplenet.txt ! nvdsanalytics config-file=config.txt ! nvvideoconvert ! nvdsosd ! nvvideoconvert ! nvv4l2h264enc ! h264parse ! avimux ! filesink location=/tmp/test.avi

Using the following analytics configuration file:

[property]

enable=1

config-width=1920

config-height=1080

osd-mode=2

display-font-size=12

[roi-filtering-stream-0]

enable=1

roi-RF=0;643;1920;643;1920;890;0;890

inverse-roi=0

class-id=-1
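For reference, this is how I read the `roi-RF` value: a semicolon-separated list of x;y pairs, expressed at the `config-width` x `config-height` resolution. The short Python sketch below parses the configuration into polygon points so the ROI extents can be checked. It is only an illustration of my interpretation; `parse_rois` is a hypothetical helper, not part of DeepStream.

```python
import configparser

# Same content as the analytics configuration file above.
CONFIG_TEXT = """
[property]
enable=1
config-width=1920
config-height=1080
osd-mode=2
display-font-size=12

[roi-filtering-stream-0]
enable=1
roi-RF=0;643;1920;643;1920;890;0;890
inverse-roi=0
class-id=-1
"""


def parse_rois(config_text, section="roi-filtering-stream-0"):
    """Return {label: [(x, y), ...]} for every 'roi-<label>' key in the section."""
    parser = configparser.ConfigParser()
    parser.optionxform = str  # keep key case, so 'roi-RF' is not lowercased
    parser.read_string(config_text)

    rois = {}
    for key, value in parser[section].items():
        if not key.startswith("roi-"):
            continue  # skips 'enable', 'inverse-roi', 'class-id'
        numbers = [int(v) for v in value.split(";") if v.strip()]
        if len(numbers) < 6 or len(numbers) % 2 != 0:
            raise ValueError(f"{key}: expected at least 3 x;y pairs")
        rois[key[len("roi-"):]] = list(zip(numbers[0::2], numbers[1::2]))
    return rois


rois = parse_rois(CONFIG_TEXT)
ys = [y for _, y in rois["RF"]]
print(rois["RF"])
print("vertical extent:", min(ys), "to", max(ys))
```

Under this reading, the RF ROI is a full-width horizontal band between y=643 and y=890 in a 1920x1080 frame, which matches the stream resolution set on nvstreammux.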

Is my understanding of how the analytics element should work wrong? Do I have a configuration error?

Setup information:

• Hardware Platform: Jetson AGX Orin

• DeepStream Version: 7.0

• JetPack Version: 6.0

Melissa Montero

www.ridgerun.ai