Hello,

I am using a Jetson Xavier NX for real-time object detection and tracking, usually with YOLOv8. My current setup includes:

YOLOv8n model converted to TensorRT

Torch, TorchVision, and ONNX installed with CUDA support

OpenCV compiled with CUDA support

Despite this, I am only achieving 12–15 FPS.

I would like to know:

How to properly increase FPS on the Jetson Xavier NX for real-time AI inference and video streaming.

Which settings, optimizations, or modes can maximize performance for TensorRT models on this platform.

Any best practices, recommended configurations, or system-level tweaks specific to Jetson Xavier NX.

I look forward to your help.

Y-T-G

October 6, 2025, 5:54am


Did you convert the model to FP16?
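For reference, if the engine was exported with the Ultralytics package (an assumption; the thread does not say which tool built it), FP16 is selected with the `half=True` export argument. The sketch below keeps the export arguments in a separate helper so they can be checked without a GPU. `fp16_engine_export_args` and `export_fp16_engine` are hypothetical names, and `imgsz=640` is an assumed input size.

```python
from typing import Any, Dict


def fp16_engine_export_args(imgsz: int = 640) -> Dict[str, Any]:
    """Keyword arguments for an Ultralytics TensorRT export in FP16 (assumed setup)."""
    return {
        "format": "engine",  # produce a TensorRT .engine file
        "half": True,        # build the engine in FP16 instead of FP32
        "imgsz": imgsz,      # should match the input size used at inference time
        "device": 0,         # build on the Jetson's GPU; engines are device-specific
    }


def export_fp16_engine(weights: str = "yolov8n.pt", imgsz: int = 640) -> Any:
    """Export a YOLOv8 checkpoint to an FP16 TensorRT engine.

    Assumes `pip install ultralytics`; in recent releases the return value
    is the path of the exported file.
    """
    from ultralytics import YOLO  # imported lazily so this module loads without ultralytics

    model = YOLO(weights)
    return model.export(**fp16_engine_export_args(imgsz))
```

Run the export on the Jetson itself, because a TensorRT engine is tied to the GPU and TensorRT version it was built with; the resulting `yolov8n.engine` can then be loaded with `YOLO("yolov8n.engine")`. If the engine was built with `trtexec` instead, the equivalent is the `--fp16` flag, for example `trtexec --onnx=yolov8n.onnx --fp16 --saveEngine=yolov8n.engine`.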

Hi,

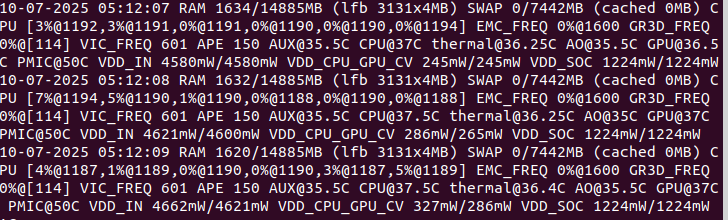

Could you check GPU utilization with tegrastats?

$ sudo tegrastats
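To read the output programmatically, a small parser can pull the GPU load (`GR3D_FREQ`) and the per-core CPU load out of each line. This is a sketch that assumes the common line layout, `CPU [12%@1420,...]` and `GR3D_FREQ 45%` (older JetPack releases append `@<MHz>`); the exact fields vary between JetPack versions, so check it against your own output. `parse_tegrastats` is a hypothetical helper, not part of any NVIDIA tool.

```python
import re
from typing import Dict, List, Optional

# GPU load appears as "GR3D_FREQ 45%" or, on older JetPack releases, "GR3D_FREQ 45%@1109".
_GPU_RE = re.compile(r"GR3D_FREQ (\d+)%")
# Per-core CPU load appears as "CPU [12%@1420,8%@1420,off,...]".
_CPU_RE = re.compile(r"CPU \[([^\]]*)\]")


def parse_tegrastats(line: str) -> Dict[str, object]:
    """Return GPU load and per-core CPU load (percent) from one tegrastats line."""
    gpu_match = _GPU_RE.search(line)
    gpu_util: Optional[int] = int(gpu_match.group(1)) if gpu_match else None

    cpu_util: List[Optional[int]] = []
    cpu_match = _CPU_RE.search(line)
    if cpu_match:
        for core in cpu_match.group(1).split(","):
            core = core.strip()
            if not core:
                continue
            # "off" marks a core disabled by the current power mode.
            cpu_util.append(None if core == "off" else int(core.split("%", 1)[0]))
    return {"gpu_util": gpu_util, "cpu_util": cpu_util}
```

A GPU load well below 100% while one or more CPU cores sit near 100% suggests the GPU is waiting on CPU-side work rather than on TensorRT inference. `tegrastats --interval 1000` prints one line per second, which makes the readings easier to follow.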

It’s common for the pre-processing or post-processing, which run on the CPU, to take a long time to finish.
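One way to confirm this is to time each stage of the per-frame loop separately. The sketch below is a minimal timer that assumes the loop is split into stages such as `preprocess`, `infer`, and `postprocess` (hypothetical names); it reports the mean time per stage and the FPS you would get if the stages ran back to back on one thread. If the inference call launches GPU work asynchronously, synchronize before leaving the `infer` block (for example with `torch.cuda.synchronize()` when using PyTorch); otherwise its GPU time is charged to whichever later stage first waits on the GPU.

```python
import time
from collections import defaultdict
from contextlib import contextmanager
from typing import DefaultDict, Dict, Iterator, List


class StageTimer:
    """Collect wall-clock durations for named pipeline stages."""

    def __init__(self) -> None:
        # stage name -> durations in seconds, one entry per timed call
        self.samples: DefaultDict[str, List[float]] = defaultdict(list)

    @contextmanager
    def stage(self, name: str) -> Iterator[None]:
        """Time the body of a `with` block and record it under `name`."""
        start = time.perf_counter()
        try:
            yield
        finally:
            self.samples[name].append(time.perf_counter() - start)

    def mean_ms(self) -> Dict[str, float]:
        """Mean duration of each recorded stage in milliseconds."""
        return {
            name: 1000.0 * sum(durations) / len(durations)
            for name, durations in self.samples.items()
            if durations
        }

    def sequential_fps(self) -> float:
        """Frames per second if all recorded stages run one after another per frame."""
        total_s = sum(self.mean_ms().values()) / 1000.0
        return 1.0 / total_s if total_s > 0 else float("inf")
```

In the capture loop, wrap each step, e.g. `with timer.stage("preprocess"): blob = preprocess(frame)`, and print `timer.mean_ms()` every few hundred frames. If `preprocess` or `postprocess` dominates, speeding up the TensorRT engine alone will not raise FPS much; moving that work off the CPU, as DeepStream does for decoding and scaling, is the more likely win.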

NVIDIA DeepStream SDK 8.0 / 7.1 / 7.0 / 6.4 / 6.3 / 6.2 / 6.1.1 / 6.1 / 6.0.1 / 6.0 / 5.1 implementation for YOLO models

Thanks.

Thank you.

Thank you!

Okay, I’ll use DeepStream.

I ran this command:

$ sudo tegrastats

Are there other ways to increase the Jetson’s speed or FPS?