I want to stream webcam video over an RTSP URL on a Jetson Nano using Python.

Moving this topic to the proper category.

I cannot give a working solution without knowing more about your webcam.

For RTSP server, trying with a simulated video source:

import gi

gi.require_version('Gst','1.0')

gi.require_version('GstVideo','1.0')

gi.require_version('GstRtspServer','1.0')

from gi.repository import GObject, Gst, GstVideo, GstRtspServer

Gst.init(None)

mainloop = GObject.MainLoop()

server = GstRtspServer.RTSPServer()

mounts = server.get_mount_points()

factory = GstRtspServer.RTSPMediaFactory()

factory.set_launch('( videotestsrc is-live=1 ! video/x-raw,width=320,height=240,framerate=30/1 ! nvvidconv ! nvv4l2h264enc insert-sps-pps=1 idrinterval=30 insert-vui=1 ! rtph264pay name=pay0 pt=96 )')

mounts.add_factory("/test", factory)

server.attach(None)

print("stream ready at rtsp://127.0.0.1:8554/test")

mainloop.run()

Now adding your own camera. I assume your webcam is a USB camera with UVC driver providing video node 0 (/dev/video0). You would get the available modes with:

v4l2-ctl -d0 --list-formats-ext

Choose one of these modes (better to start with a low resolution and framerate). For example, if seeing:

ioctl: VIDIOC_ENUM_FMT

Index : 0

Type : Video Capture

Pixel Format: 'YUYV'

Name : YUYV 4:2:2

Size: Discrete 1280x720

Interval: Discrete 0.033s (30.000 fps)

Then in the gstreamer pipeline you would replace videotestsrc with :

v4l2src device=/dev/video0 ! video/x-raw,format=YUY2,width=1280,height=720,framerate=30/1 ! nvvidconv ! ...
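As a rough sketch of the step above, the v4l2-ctl listing could be turned into the v4l2src part of the pipeline with a few lines of Python 3. The names parse_modes, source_fragment and FOURCC_TO_CAPS are made up for this illustration, the parser only handles the output layout shown above (newer v4l2-ctl versions print it differently), and the MJPG caps are the ones used elsewhere in this thread.

```python
import re
from fractions import Fraction

# Sample listing copied from the post above.
SAMPLE = """\
ioctl: VIDIOC_ENUM_FMT
Index : 0
Type : Video Capture
Pixel Format: 'YUYV'
Name : YUYV 4:2:2
Size: Discrete 1280x720
Interval: Discrete 0.033s (30.000 fps)
"""

# V4L2 fourcc -> GStreamer caps prefix. Only the two formats discussed
# in this thread are mapped; any other fourcc raises KeyError.
FOURCC_TO_CAPS = {
    "YUYV": "video/x-raw,format=YUY2",
    "MJPG": "image/jpeg,format=MJPG",
}


def parse_modes(text):
    """Return a list of (fourcc, width, height, fps) tuples."""
    modes = []
    fourcc = size = None
    for line in text.splitlines():
        m = re.search(r"Pixel Format\s*:\s*'(\w+)'", line)
        if m:
            fourcc, size = m.group(1), None
            continue
        m = re.search(r"Size:\s*Discrete\s+(\d+)x(\d+)", line)
        if m:
            size = (int(m.group(1)), int(m.group(2)))
            continue
        m = re.search(r"Interval:\s*Discrete\s+[\d.]+s\s+\(([\d.]+)\s*fps\)", line)
        if m and fourcc and size:
            # Fraction keeps rates such as 7.5 fps exact (15/2).
            modes.append((fourcc, size[0], size[1], Fraction(m.group(1))))
    return modes


def source_fragment(mode, device="/dev/video0"):
    """Build the 'v4l2src ! caps' part of the pipeline for one mode."""
    fourcc, width, height, fps = mode
    return "v4l2src device={} ! {},width={},height={},framerate={}/{}".format(
        device, FOURCC_TO_CAPS[fourcc], width, height,
        fps.numerator, fps.denominator)


for mode in parse_modes(SAMPLE):
    print(source_fragment(mode))
```

On the Jetson itself, the text passed to parse_modes could come from subprocess.check_output(["v4l2-ctl", "-d0", "--list-formats-ext"], universal_newlines=True) instead of the pasted sample.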

If your webcam sends encoded frames such as MJPG or H264, it would be a bit different.

Hi Honey_Patouceul,

Thanks for your time. I ran this code and tried to open the RTSP URL in VLC player; the console displays “opening in BLOCKING MODE”. I am using a Logitech C270 webcam.

For the sake of understanding, please first tell me whether running with videotestsrc was OK.

If it was OK, please post the code (or the pipeline string, if this was the only modification).

Also post messages from RTSP server and VLC receiver.

I have no Logitech C270, but you may post the output of v4l2-ctl -d0 --list-formats-ext as suggested above for better help.

I can preview the camera using this command: gst-launch-1.0 -v v4l2src device=/dev/video0 ! videoconvert ! xvimagesink

import gi

gi.require_version('Gst','1.0')

gi.require_version('GstVideo','1.0')

gi.require_version('GstRtspServer','1.0')

from gi.repository import GObject, Gst, GstVideo, GstRtspServer

Gst.init(None)

mainloop = GObject.MainLoop()

server = GstRtspServer.RTSPServer()

mounts = server.get_mount_points()

factory = GstRtspServer.RTSPMediaFactory()

factory.set_launch('( v4l2src device=/dev/video0 ! video/x-raw,format=YUY2,width=1280,height=720,framerate=30/1 ! nvvidconv ! nvv4l2h264enc insert-sps-pps=1 idrinterval=1 insert-vui=1 ! rtph264pay name=pay0 pt=96 )')

factory.set_launch('( v4l2src device=/dev/video0 io-mode=2 ! image/jpeg,width=1280,height=720,framerate=30/1 ! nvjpegdec ! video/x-raw ! nvvidconv ! nvv4l2h264enc ! rtph264pay name=pay0 pt=96 )')

mounts.add_factory("/test", factory)

server.attach(None)

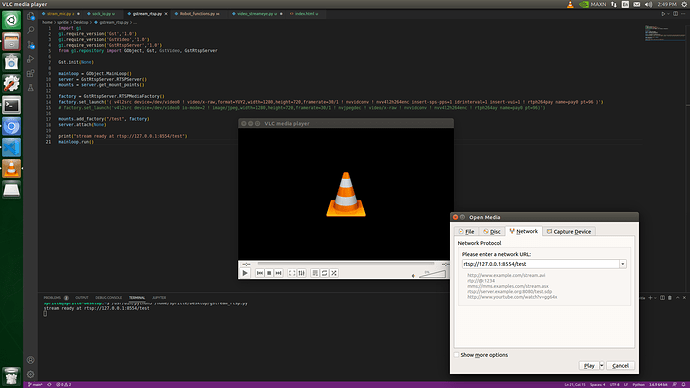

print("stream ready at rtsp://127.0.0.1:8554/test")

mainloop.run()

console.txt (9.3 KB)

You didn’t answer my first question: Does RTSP streaming and receiving work when using videotestsrc as in my original post example?

Some notes:

- There was an error in my original post (edited later). The idrinterval property of nvv4l2h264enc should be 30 (each second @30 fps, or 25 in your case) instead of 1.

- If using MJPG format, for decoding:

  - you may add format=MJPG into caps after v4l2src.

  - with nvjpegdec you would output in I420 format into NVMM memory.

  - you can also use nvv4l2decoder mjpeg=1. This would output NV12 format into NVMM memory.

  - if you don’t need to transcode into H264, you may also just use rtpjpegpay.
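To make the idrinterval note concrete, here is a small sketch that derives the value from the framerate so that an IDR frame is sent about once per second. The helper names idr_interval and h264_encoder are hypothetical; only the nvv4l2h264enc properties already used in this thread appear in the output.

```python
def idr_interval(fps, seconds_between_idr=1.0):
    """Number of frames between IDR frames for the given framerate."""
    return max(1, int(round(fps * seconds_between_idr)))


def h264_encoder(fps, seconds_between_idr=1.0):
    """Encoder fragment with idrinterval matched to the framerate."""
    return "nvv4l2h264enc insert-sps-pps=1 idrinterval={} insert-vui=1".format(
        idr_interval(fps, seconds_between_idr))


print(h264_encoder(30))
print(h264_encoder(25))
```

With idrinterval=1 every frame is an IDR frame, which costs a lot of bitrate; one per second is a compromise that still lets a client that connects late start decoding quickly.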

You didn’t answer my first question: Does RTSP streaming and receiving work when using videotestsrc as in my original post example?

Answer: When I run your code and then try to open the URL in VLC, VLC closes automatically. So I used OpenCV to capture frames instead, but the image comes out corrupted.

Can you please make the pipeline for me? I tried to build one, but it is not working.

Note that with Jetson, you would have to disable a VLC plugin, OMXIL.

Also note, regarding the corrupted frames, that the stream may need the first 10 seconds or so to adjust.

You may also check with gstreamer:

gst-play-1.0 rtsp://127.0.0.1:8554/test

If this doesn’t correctly display the stream after 10 or 15s with videotestsrc, there is a problem with your system or software.
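If no player is at hand, a quick way to see whether the RTSP server answers at all is to send it an OPTIONS request over TCP. The sketch below (Python 3, standard library only) does that; the function names are invented for this example, and a 200 reply only shows that the server is listening, not that the video decodes correctly.

```python
import socket
from urllib.parse import urlparse


def build_options_request(url, cseq=1):
    """Return the bytes of a minimal RTSP OPTIONS request for url."""
    return "OPTIONS {} RTSP/1.0\r\nCSeq: {}\r\n\r\n".format(url, cseq).encode("ascii")


def parse_status(response):
    """Return the numeric status code of an RTSP response, or None."""
    first_line = response.decode("ascii", "replace").split("\r\n", 1)[0]
    parts = first_line.split(" ", 2)
    if len(parts) >= 2 and parts[0].startswith("RTSP/") and parts[1].isdigit():
        return int(parts[1])
    return None


def probe(url, timeout=3.0):
    """Connect to the server named in url and return its OPTIONS status code."""
    parsed = urlparse(url)
    port = parsed.port or 554  # 554 is the default RTSP port
    with socket.create_connection((parsed.hostname, port), timeout=timeout) as sock:
        sock.sendall(build_options_request(url))
        return parse_status(sock.recv(4096))
```

With the server from this thread running, probe("rtsp://127.0.0.1:8554/test") should return 200; a ConnectionRefusedError would mean nothing is listening on that port.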

Now let’s try using your camera just reading and displaying with gstreamer:

# 640x480@30fps Raw video YUYV 4:2:2, aka YUY2 in gstreamer

gst-launch-1.0 -v v4l2src device=/dev/video0 ! video/x-raw,format=YUY2,width=640,height=480,framerate=30/1 ! xvimagesink

# 640x480@30fps MJPG decoded by nvjpegdec, nvvidconv will convert and copy to system memory

gst-launch-1.0 -v v4l2src device=/dev/video0 ! image/jpeg,format=MJPG,width=640,height=480,framerate=30/1 ! nvjpegdec ! 'video/x-raw(memory:NVMM),format=I420' ! nvvidconv ! xvimagesink

# 640x480@30fps MJPG decoded by nvv4l2decoder, nvvidconv will convert and copy to system memory

gst-launch-1.0 -v v4l2src device=/dev/video0 ! image/jpeg,format=MJPG,width=640,height=480,framerate=30/1 ! nvv4l2decoder mjpeg=1 ! 'video/x-raw(memory:NVMM),format=NV12' ! nvvidconv ! xvimagesink

If some of these work, you would try the respective pipelines for rtsp server:

# 640x480@30fps Raw video YUYV 4:2:2, aka YUY2 in gstreamer, encoded into H264 and sent to RTPH264

factory.set_launch('( v4l2src device=/dev/video0 ! video/x-raw,format=YUY2,width=640,height=480,framerate=30/1 ! nvvidconv ! nvv4l2h264enc insert-sps-pps=1 idrinterval=30 insert-vui=1 ! rtph264pay name=pay0 )')

# 640x480@30fps MJPG decoded by nvjpegdec, then encoded into H264 and sent to RTPH264

factory.set_launch('( v4l2src device=/dev/video0 ! image/jpeg,format=MJPG,width=640,height=480,framerate=30/1 ! nvjpegdec ! video/x-raw(memory:NVMM),format=I420 ! nvvidconv ! nvv4l2h264enc insert-sps-pps=1 idrinterval=30 insert-vui=1 ! rtph264pay name=pay0 )')

# 640x480@30fps MJPG decoded by nvv4l2decoder, then encoded into H264 and sent to RTPH264

factory.set_launch('( v4l2src device=/dev/video0 ! image/jpeg,format=MJPG,width=640,height=480,framerate=30/1 ! nvv4l2decoder mjpeg=1 ! video/x-raw(memory:NVMM),format=NV12 ! nvv4l2h264enc insert-sps-pps=1 idrinterval=30 insert-vui=1 ! rtph264pay name=pay0 )')

If reading from camera in MJPG works, the simplest would just be to stream in MJPG (no H264 transcoding):

factory.set_launch('( v4l2src device=/dev/video0 ! image/jpeg,format=MJPG,width=640,height=480,framerate=30/1 ! rtpjpegpay name=pay0 )')
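For convenience, the four pipelines above could be collected in one place and selected by name. This is only a sketch: VARIANTS, launch_string and serve are names chosen for this example, and the set_shared(True) call is an addition that was not in the posts above (it lets several clients share one pipeline, which seems sensible since a camera device can usually be opened only once).

```python
ENCODE_H264 = ("nvv4l2h264enc insert-sps-pps=1 idrinterval={fps} insert-vui=1"
               " ! rtph264pay name=pay0")

# Everything between 'v4l2src ! ' and the closing parenthesis, per variant.
VARIANTS = {
    "raw": "video/x-raw,format=YUY2,{size} ! nvvidconv ! " + ENCODE_H264,
    "mjpg-nvjpegdec": ("image/jpeg,format=MJPG,{size} ! nvjpegdec"
                       " ! video/x-raw(memory:NVMM),format=I420 ! nvvidconv ! "
                       + ENCODE_H264),
    "mjpg-nvv4l2decoder": ("image/jpeg,format=MJPG,{size} ! nvv4l2decoder mjpeg=1"
                           " ! video/x-raw(memory:NVMM),format=NV12 ! "
                           + ENCODE_H264),
    "mjpg-passthrough": "image/jpeg,format=MJPG,{size} ! rtpjpegpay name=pay0",
}


def launch_string(variant, device="/dev/video0", width=640, height=480, fps=30):
    """Return the launch description for RTSPMediaFactory.set_launch()."""
    size = "width={},height={},framerate={}/1".format(width, height, fps)
    body = VARIANTS[variant].format(size=size, fps=fps)
    return "( v4l2src device={} ! {} )".format(device, body)


def serve(launch, mount="/test"):
    """Serve the pipeline on the default port 8554 (needs GStreamer and PyGObject)."""
    import gi
    gi.require_version("Gst", "1.0")
    gi.require_version("GstRtspServer", "1.0")
    from gi.repository import GLib, Gst, GstRtspServer

    Gst.init(None)
    server = GstRtspServer.RTSPServer()
    factory = GstRtspServer.RTSPMediaFactory()
    factory.set_launch(launch)
    factory.set_shared(True)  # assumption: one shared pipeline for all clients
    server.get_mount_points().add_factory(mount, factory)
    server.attach(None)
    print("stream ready at rtsp://127.0.0.1:8554" + mount)
    GLib.MainLoop().run()


print(launch_string("mjpg-passthrough"))
```

On the Jetson, calling serve(launch_string("mjpg-passthrough")) would start the server with the simplest variant; switching to "raw" or one of the H264 variants only changes the name passed in.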

Hello Honey_Patouceul,

Thank you so much for helping !!!

This pipeline works after the vlc plugin is fixed, I am able to stream video over the local network.
