Hi All,

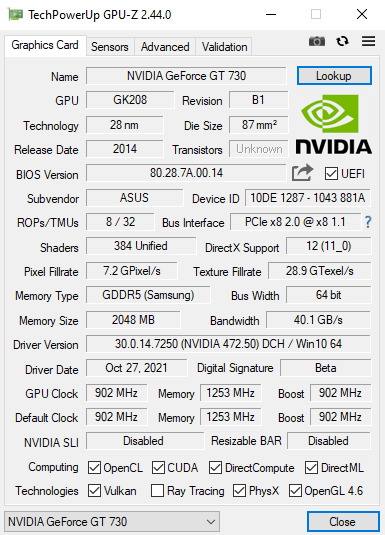

Can somebody suggest the correct driver, CUDA Toolkit and cuDNN versions for an NVIDIA GeForce GT 730 (GK208 B1) installed on Windows 10 64-bit?

I installed NVIDIA driver 472.50, then CUDA Toolkit 11.4.3_472.50_win10 and cuDNN 11.4-windows-x64-v8.2.2.26.

Then I successfully compiled dlib 19.23.99 for Python 3.7, but some dlib/face_recognition functions complain about an incompatible CUDA version.
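
The versions in the two file names above can be cross-checked mechanically. Below is a minimal sketch that assumes the naming patterns of those two files hold. The regexes and the helpers `parse_versions` and `same_cuda_major` are hypothetical. The sketch extracts the toolkit, bundled driver and cuDNN versions, then applies one coarse rule: cuDNN must be built for the same CUDA major version as the installed toolkit. It does not look at the GPU itself.

```python
import re

# Assumed naming patterns, inferred from the two file names quoted above:
#   CUDA installer: <toolkit version>_<bundled driver version>_win10
#   cuDNN archive:  <CUDA major.minor>-windows-x64-v<cuDNN version>
CUDA_INSTALLER = re.compile(r"(?P<toolkit>\d+\.\d+\.\d+)_(?P<driver>\d+\.\d+)_win10")
CUDNN_ARCHIVE = re.compile(r"(?P<cuda>\d+\.\d+)-windows-x64-v(?P<cudnn>[\d.]+)")


def parse_versions(cuda_installer: str, cudnn_archive: str) -> dict:
    """Pull version numbers out of the two file names (hypothetical helper)."""
    cuda = CUDA_INSTALLER.search(cuda_installer)
    cudnn = CUDNN_ARCHIVE.search(cudnn_archive)
    if cuda is None or cudnn is None:
        raise ValueError("file name does not match the assumed pattern")
    return {
        "toolkit": cuda.group("toolkit"),
        "bundled_driver": cuda.group("driver"),
        "cudnn_built_for": cudnn.group("cuda"),
        "cudnn": cudnn.group("cudnn"),
    }


def same_cuda_major(info: dict) -> bool:
    """Coarse check: cuDNN must target the toolkit's CUDA major version."""
    return info["toolkit"].split(".")[0] == info["cudnn_built_for"].split(".")[0]


info = parse_versions("11.4.3_472.50_win10", "11.4-windows-x64-v8.2.2.26")
print(info)
print(same_cuda_major(info))
```

For the two file names in this post, both sides report CUDA 11, so `same_cuda_major` returns True.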

Thanks and regards.

Sudheer

rs277

February 24, 2022, 6:19pm


Your card has a Kepler GPU with Compute Capability 3.5, so it should be OK with both the CUDA and driver versions you’re using.
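
The compatibility point above comes down to comparing the card's compute capability with the oldest architecture each toolkit can still target. Below is a minimal sketch built on a hand-written table. The names `MIN_CC`, `CARDS` and `toolkit_supports` are hypothetical. The table covers only the toolkits and cards mentioned in this thread and ignores upper limits, meaning the newest architecture each toolkit knows about.

```python
from typing import Dict, Tuple

CC = Tuple[int, int]  # compute capability as (major, minor)

# Hypothetical lookup table: lowest compute capability each toolkit can target.
# Only the toolkits discussed in this thread; upper limits are not modeled.
MIN_CC: Dict[str, CC] = {
    "8.0": (2, 0),   # CUDA 8.0 is the last release that can target Fermi (2.x)
    "11.4": (3, 5),  # CUDA 11.x dropped 3.0; 3.5 is deprecated but supported
}

# Compute capabilities of the cards mentioned in this thread.
CARDS: Dict[str, CC] = {
    "GeForce GT 730 (GK208)": (3, 5),
    "GeForce GTX 580": (2, 0),
    "Tesla M2090": (2, 0),
}


def toolkit_supports(toolkit: str, cc: CC) -> bool:
    """True if `toolkit` can still generate code for compute capability `cc`."""
    return cc >= MIN_CC[toolkit]


for card, cc in CARDS.items():
    for toolkit in MIN_CC:
        print(f"{card} (CC {cc[0]}.{cc[1]}) with CUDA {toolkit}: "
              f"{toolkit_supports(toolkit, cc)}")
```

Under these assumptions, the GT 730 passes for both toolkits, while the two Fermi cards are only covered by CUDA 8.0.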

I know nothing about dlib, but this dlib issue may be worth reading if you haven’t already:

Issue opened 01:42PM, 10 Jan 20 UTC; closed 09:00AM, 25 Feb 20 UTC (label: inactive)

## Expected Behavior

I compiled the dlib test pack using CMake and noticed that … CUDA won't be used due to the lack of cuDNN. I then ran "dtest --runall" without a single error (CPU only, no CUDA support).

I then added cuDNN (7.1.4, the last one for CUDA 8.0) and recompiled the test pack, making sure that CUDA would be used:

    -- Found CUDA: C:/Program Files/NVIDIA GPU Computing Toolkit/CUDA/v8.0 (found suitable version "8.0", minimum required is "7.5")
    -- Looking for cuDNN install...
    -- Found cuDNN: C:/Program Files/NVIDIA GPU Computing Toolkit/CUDA/v8.0/lib/x64/cudnn.lib
    -- Building a CUDA test project to see if your compiler is compatible with CUDA...
    -- Checking if you have the right version of cuDNN installed.
    -- Enabling CUDA support for dlib. DLIB WILL USE CUDA
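
The configure output above is what shows that CUDA and cuDNN were picked up. Below is a minimal sketch that summarizes a saved configure log by searching for the same marker lines. The helper `parse_dlib_cmake_log` is hypothetical, and other dlib versions may word these messages differently. A clean configure says nothing about whether the compiled kernels can run on the installed GPU.

```python
import re


def parse_dlib_cmake_log(log: str) -> dict:
    """Summarize the CUDA-related lines of a saved dlib CMake configure log.

    The marker strings are copied from the log excerpt above (assumption:
    other dlib versions may phrase them differently).
    """
    cuda = re.search(r'Found CUDA: .*\(found suitable version "([\d.]+)"', log)
    return {
        "cuda_version": cuda.group(1) if cuda else None,
        "cudnn_found": "-- Found cuDNN:" in log,
        "uses_cuda": "DLIB WILL USE CUDA" in log,
    }


sample = """\
-- Found CUDA: C:/Program Files/NVIDIA GPU Computing Toolkit/CUDA/v8.0 (found suitable version "8.0", minimum required is "7.5")
-- Found cuDNN: C:/Program Files/NVIDIA GPU Computing Toolkit/CUDA/v8.0/lib/x64/cudnn.lib
-- Enabling CUDA support for dlib. DLIB WILL USE CUDA
"""
print(parse_dlib_cmake_log(sample))
```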

I expected "dtest --runall" to pass without a single error with CUDA support as well.

## Current Behavior

Three tests (test_cublas, test_dnn and test_ranking) fail with a similar error:

    Failure message from test: Error while calling cudaOccupancyMaxPotentialBlockSize(&num_blocks,&num_threads,K) in file d:\...\dlib\dlib\cuda\cuda_utils.h:186. code: 8, reason: invalid device function

I believe the kernel function passed to cudaOccupancyMaxPotentialBlockSize (via dlib::cuda::launch_kernel) is considered invalid.

Any ideas how to fix this, or what might be the cause? The workstation I'm working on has one GTX 580 and one Tesla M2090, and other CUDA software runs properly on those devices. I'm stuck with Fermi for a while (which means I'm stuck with CUDA 8.0 and MSVC 2015); however, these versions do not seem to violate dlib's requirements.
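
CUDA's documentation describes "invalid device function" as the requested device function not existing or not being compiled for the proper device architecture. Below is a simplified sketch of NVIDIA's binary and PTX compatibility rules that illustrates this. It ignores special cases. The function `has_compatible_image` and all target lists are hypothetical, because the issue does not say which architectures dlib was compiled for.

```python
from typing import Iterable, Tuple

CC = Tuple[int, int]  # compute capability as (major, minor)


def has_compatible_image(device: CC, sass: Iterable[CC], ptx: Iterable[CC]) -> bool:
    """Simplified CUDA compatibility check (assumption: special cases ignored).

    - A cubin built for sm_XY runs on devices with compute capability X.Z
      where Z >= Y (same major version only).
    - PTX built for compute_XY can be JIT-compiled for any device with
      compute capability >= X.Y.
    """
    major, minor = device
    if any(m == major and n <= minor for m, n in sass):
        return True
    return any((m, n) <= device for m, n in ptx)


# Hypothetical build targets, for illustration only.
print(has_compatible_image((2, 0), sass=[(3, 0)], ptx=[(3, 0)]))  # Fermi device
print(has_compatible_image((3, 5), sass=[(3, 5)], ptx=[]))        # GT 730 (GK208)
```

When the function returns False, the runtime has no kernel it can launch on that device. This matches the error description above.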

## Steps to Reproduce

I believe everything is clear from the Expected Behavior section.

* **Version**: 19.19

* **Where did you get dlib**: dlib.net

* **Platform**: Win10 x64 10.0.17763

* **Compiler**: MSVC 19.0.24215.1 (VS2015 Update 3), CUDA 8.0, cuDNN 7.1.4.