• Hardware Platform (Jetson / GPU): GPU

• DeepStream Version: 6.3

• TensorRT Version: 8.5.3-1+cuda11.8

• NVIDIA GPU Driver Version (valid for GPU only): 525.89.02

• Issue Type( questions, new requirements, bugs): questions

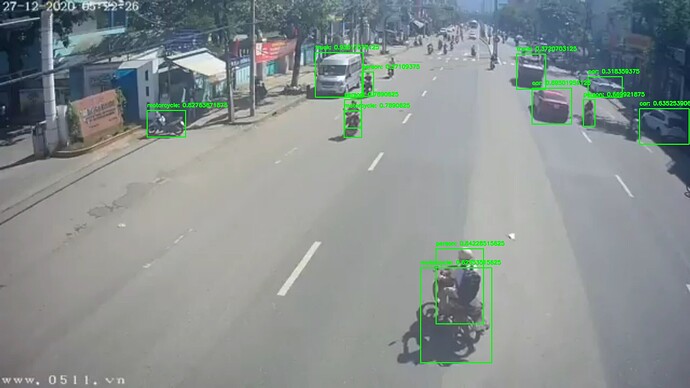

When using tracking NvDCF, the results is better than Deepsort tracking, frame miss object when using pgie:

When using NvDCF:

BaseConfig:

minDetectorConfidence: 0 # If the confidence of a detector bbox is lower than this, then it won't be considered for tracking

TargetManagement:

enableBboxUnClipping: 1 # In case the bbox is likely to be clipped by image border, unclip bbox

maxTargetsPerStream: 150 # Max number of targets to track per stream. Recommended to set >10. Note: this value should account for the targets being tracked in shadow mode as well. Max value depends on the GPU memory capacity

# [Creation & Termination Policy]

minIouDiff4NewTarget: 0.5 # If the IOU between the newly detected object and any of the existing targets is higher than this threshold, this newly detected object will be discarded.

minTrackerConfidence: 0.2 # If the confidence of an object tracker is lower than this on the fly, then it will be tracked in shadow mode. Valid Range: [0.0, 1.0]

probationAge: 3 # If the target's age exceeds this, the target will be considered to be valid.

maxShadowTrackingAge: 30 # Max length of shadow tracking. If the shadowTrackingAge exceeds this limit, the tracker will be terminated.

earlyTerminationAge: 1 # If the shadowTrackingAge reaches this threshold while in TENTATIVE period, the target will be terminated prematurely.

TrajectoryManagement:

useUniqueID: 0 # Use 64-bit long Unique ID when assignining tracker ID. Default is [true]

DataAssociator:

dataAssociatorType: 0 # the type of data associator among { DEFAULT= 0 }

associationMatcherType: 0 # the type of matching algorithm among { GREEDY=0, GLOBAL=1 }

checkClassMatch: 1 # If checked, only the same-class objects are associated with each other. Default: true

# [Association Metric: Thresholds for valid candidates]

minMatchingScore4Overall: 0.0 # Min total score

minMatchingScore4SizeSimilarity: 0.6 # Min bbox size similarity score

minMatchingScore4Iou: 0.0 # Min IOU score

minMatchingScore4VisualSimilarity: 0.7 # Min visual similarity score

# [Association Metric: Weights]

matchingScoreWeight4VisualSimilarity: 0.6 # Weight for the visual similarity (in terms of correlation response ratio)

matchingScoreWeight4SizeSimilarity: 0.0 # Weight for the Size-similarity score

matchingScoreWeight4Iou: 0.4 # Weight for the IOU score

StateEstimator:

stateEstimatorType: 1 # the type of state estimator among { DUMMY=0, SIMPLE=1, REGULAR=2 }

# [Dynamics Modeling]

processNoiseVar4Loc: 2.0 # Process noise variance for bbox center

processNoiseVar4Size: 1.0 # Process noise variance for bbox size

processNoiseVar4Vel: 0.1 # Process noise variance for velocity

measurementNoiseVar4Detector: 4.0 # Measurement noise variance for detector's detection

measurementNoiseVar4Tracker: 16.0 # Measurement noise variance for tracker's localization

VisualTracker:

visualTrackerType: 1 # the type of visual tracker among { DUMMY=0, NvDCF=1 }

# [NvDCF: Feature Extraction]

useColorNames: 1 # Use ColorNames feature

useHog: 0 # Use Histogram-of-Oriented-Gradient (HOG) feature

featureImgSizeLevel: 2 # Size of a feature image. Valid range: {1, 2, 3, 4, 5}, from the smallest to the largest

featureFocusOffsetFactor_y: -0.2 # The offset for the center of hanning window relative to the feature height. The center of hanning window would move by (featureFocusOffsetFactor_y*featureMatSize.height) in vertical direction

# [NvDCF: Correlation Filter]

filterLr: 0.075 # learning rate for DCF filter in exponential moving average. Valid Range: [0.0, 1.0]

filterChannelWeightsLr: 0.1 # learning rate for the channel weights among feature channels. Valid Range: [0.0, 1.0]

gaussianSigma: 0.75 # Standard deviation for Gaussian for desired response when creating DCF filter [pixels]

Using Deepsort with your weight in Gst-nvtracker — DeepStream documentation

BaseConfig:

minDetectorConfidence: 0.0762 # If the confidence of a detector bbox is lower than this, then it won't be considered for tracking

TargetManagement:

preserveStreamUpdateOrder: 0 # When assigning new target ids, preserve input streams' order to keep target ids in a deterministic order over multuple runs

maxTargetsPerStream: 150 # Max number of targets to track per stream. Recommended to set >10. Note: this value should account for the targets being tracked in shadow mode as well. Max value depends on the GPU memory capacity

# [Creation & Termination Policy]

minIouDiff4NewTarget: 0.9847 # If the IOU between the newly detected object and any of the existing targets is higher than this threshold, this newly detected object will be discarded.

minTrackerConfidence: 0.4314 # If the confidence of an object tracker is lower than this on the fly, then it will be tracked in shadow mode. Valid Range: [0.0, 1.0]

probationAge: 2 # If the target's age exceeds this, the target will be considered to be valid.

maxShadowTrackingAge: 68 # Max length of shadow tracking. If the shadowTrackingAge exceeds this limit, the tracker will be terminated.

earlyTerminationAge: 1 # If the shadowTrackingAge reaches this threshold while in TENTATIVE period, the the target will be terminated prematurely.

TrajectoryManagement:

useUniqueID: 0 # Use 64-bit long Unique ID when assignining tracker ID.

DataAssociator:

dataAssociatorType: 0 # the type of data associator among { DEFAULT= 0 }

associationMatcherType: 1 # the type of matching algorithm among { GREEDY=0, CASCADED=1 }

checkClassMatch: 1 # If checked, only the same-class objects are associated with each other. Default: true

# [Association Metric: Mahalanobis distance threshold (refer to DeepSORT paper) ]

thresholdMahalanobis: 12.1875 # Threshold of Mahalanobis distance. A detection and a target are not matched if their distance is larger than the threshold.

# [Association Metric: Thresholds for valid candidates]

minMatchingScore4Overall: 0.1794 # Min total score

minMatchingScore4SizeSimilarity: 0.3291 # Min bbox size similarity score

minMatchingScore4Iou: 0.2364 # Min IOU score

minMatchingScore4ReidSimilarity: 0.7505 # Min reid similarity score

# [Association Metric: Weights for valid candidates]

matchingScoreWeight4SizeSimilarity: 0.7178 # Weight for the Size-similarity score

matchingScoreWeight4Iou: 0.4551 # Weight for the IOU score

matchingScoreWeight4ReidSimilarity: 0.3197 # Weight for the reid similarity

# [Association Metric: Tentative detections] only uses iou similarity for tentative detections

tentativeDetectorConfidence: 0.2479 # If a detection's confidence is lower than this but higher than minDetectorConfidence, then it's considered as a tentative detection

minMatchingScore4TentativeIou: 0.2376 # Min iou threshold to match targets and tentative detection

StateEstimator:

stateEstimatorType: 2 # the type of state estimator among { DUMMY=0, SIMPLE=1, REGULAR=2 }

# [Dynamics Modeling]

noiseWeightVar4Loc: 0.0503 # weight of process and measurement noise for bbox center; if set, location noise will be proportional to box height

noiseWeightVar4Vel: 0.0037 # weight of process and measurement noise for velocity; if set, velocity noise will be proportional to box height

useAspectRatio: 1 # use aspect ratio in Kalman filter's observation

ReID:

reidType: 1 # The type of reid among { DUMMY=0, DEEP=1 }

# [Reid Network Info]

batchSize: 100 # Batch size of reid network

workspaceSize: 1000 # Workspace size to be used by reid engine, in MB

reidFeatureSize: 256 # Size of reid feature

reidHistorySize: 100 # Max number of reid features kept for one object

inferDims: [3, 256, 128] # Reid network input dimension CHW or HWC based on inputOrder

networkMode: 1 # Reid network inference precision mode among {fp32=0, fp16=1, int8=2 }

# [Input Preprocessing]

inputOrder: 0 # Reid network input order among { NCHW=0, NHWC=1 }. Batch will be converted to the specified order before reid input.

colorFormat: 0 # Reid network input color format among {RGB=0, BGR=1 }. Batch will be converted to the specified color before reid input.

offsets: [123.6750, 116.2800, 103.5300] # Array of values to be subtracted from each input channel, with length equal to number of channels

netScaleFactor: 0.01735207 # Scaling factor for reid network input after substracting offsets

keepAspc: 1 # Whether to keep aspc ratio when resizing input objects for reid

# [Output Postprocessing]

addFeatureNormalization: 1 # If reid feature is not normalized in network, adding normalization on output so each reid feature has l2 norm equal to 1

# [Paths and Names]

tltEncodedModel: "/opt/nvidia/deepstream/deepstream/samples/models/Tracker/resnet50_market1501.etlt" # NVIDIA TAO model path

tltModelKey: "nvidia_tao" # NVIDIA TAO model key

modelEngineFile: "/opt/nvidia/deepstream/deepstream/samples/models/Tracker/resnet50_market1501.etlt_b100_gpu0_fp16.engine" # Engine file path

**How to change the parameter for not miss object detection

**