I ran exactly the same code as in the NVIDIA GitHub ONNX TensorRT Jupyter notebook: Using Tensorflow 2 through ONNX.ipynb

However, I get different, incorrect outputs after running inference. After running the Keras model, I obtain:

But after running inference with ONNX TensorRT, I get:

I am using Windows 11 WSL2 Ubuntu 20.04 and 18.04, and I have installed:

cuda-11-6 libcudnn8=8.4.1.50-1+cuda11.6 libcudnn8-dev=8.4.1.50-1+cuda11.6

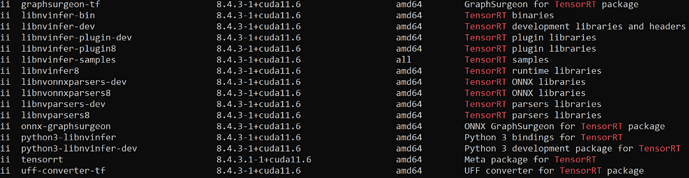

For TensorRT, the following libraries were installed:

Other things I did during installation:

- Copied the trtexec file from /usr/src/tensorrt/bin to /usr/local/bin/. Initially the command was not found, but I was able to execute trtexec after that.

- Copied libcurand.so and libcurand.so.10 from /usr/local/cuda/lib64/ to /usr/lib/x86_64-linux-gnu/. Initially I could not build the wheel for pycuda because of the error "lcurand not found", but I was able to pip install pycuda after that.
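To check whether these two manual copies are actually needed on a given machine, something like the following could be used. It is only a sketch using the Python standard library: the helper name `check_tensorrt_tooling` is hypothetical, and `ctypes.util.find_library` only approximates what the linker sees when pip builds pycuda, so a hit here is a hint rather than proof.

```python
import ctypes.util
import shutil


def check_tensorrt_tooling():
    """Report whether trtexec and libcurand can be located (hypothetical helper)."""
    return {
        # Full path to trtexec if it is on PATH, otherwise None.
        "trtexec": shutil.which("trtexec"),
        # Library file name if the system lookup finds curand, otherwise None.
        "curand": ctypes.util.find_library("curand"),
    }


if __name__ == "__main__":
    for name, location in check_tensorrt_tooling().items():
        print(f"{name}: {location or 'not found'}")
```

If either entry prints "not found" before the copy and a path or file name afterwards, the copy is what made it visible.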

I am not sure why this happens, because I ran the same code with TensorRT on Windows 11 and was able to reproduce the results there. On WSL Ubuntu, however, I get wrong results.
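To put a number on "wrong" rather than comparing the printed predictions by eye, the Keras and TensorRT outputs could be compared along these lines. This is a minimal sketch, not part of the NVIDIA notebook: `compare_outputs` is a hypothetical helper, it expects flat sequences of floats (a NumPy array can be passed as `array.ravel().tolist()`), and the `atol=1e-3` tolerance is an arbitrary assumption that would need adjusting for the precision the engine was built with.

```python
def compare_outputs(reference, candidate, atol=1e-3):
    """Compare two flat score sequences, e.g. Keras vs. TensorRT predictions."""
    if len(reference) != len(candidate):
        raise ValueError("outputs have different lengths")
    if len(reference) == 0:
        raise ValueError("outputs are empty")

    # Largest element-wise deviation between the two runs.
    max_abs_diff = max(abs(r - c) for r, c in zip(reference, candidate))

    # Index of the highest score in each output (the predicted class).
    ref_top = max(range(len(reference)), key=reference.__getitem__)
    cand_top = max(range(len(candidate)), key=candidate.__getitem__)

    return {
        "max_abs_diff": max_abs_diff,
        "same_top_class": ref_top == cand_top,
        "within_tolerance": max_abs_diff <= atol,
    }


if __name__ == "__main__":
    # Made-up scores for illustration only, not real model output.
    keras_scores = [0.125, 0.75, 0.125]
    trt_scores = [0.125, 0.25, 0.625]
    print(compare_outputs(keras_scores, trt_scores))
```

A small `max_abs_diff` with the same top class would point to ordinary precision differences, while a different top class, as in my case, points to a problem in the setup or the input/output handling.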