I have followed all the steps in this thread and still can't get vLLM to start. There have been a few success reports, but I can't see what I am doing wrong. Here are all the steps I performed:

#!/bin/bash

set -e

set -x

export TORCH_CUDA_ARCH_LIST=12.1a # Spark 12.1, 12.0f, 12.1a

export TRITON_PTXAS_PATH=/usr/local/cuda/bin/ptxas

export PATH=/usr/local/cuda/bin:$PATH

export LD_LIBRARY_PATH=/usr/local/cuda/lib64:$LD_LIBRARY_PATH

PACKAGE_NAME="python3-dev"

# Use 'dpkg -s', which checks the package status.

# '> /dev/null 2>&1' is the POSIX-compliant way to silence

# all output (stdout and stderr) and is more reliable than '&>'.

if ! dpkg -s "$PACKAGE_NAME" > /dev/null 2>&1; then

    # '>&2' redirects echo to stderr, which is standard for error messages.

    echo "Error: Required package '$PACKAGE_NAME' is not installed." >&2

    echo "This package is necessary to build Python C-extensions." >&2

    echo "" >&2

    echo "To install it, please run:" >&2

    echo " sudo apt update && sudo apt install $PACKAGE_NAME" >&2

    exit 1

fi

echo "'$PACKAGE_NAME' is installed. Continuing script..."

mkdir -p ~/code

cd ~/code

if [ ! -d "vllm" ]; then

    git clone --recursive https://github.com/vllm-project/vllm.git

fi

if [ ! -d "triton" ]; then

    git clone --recursive https://github.com/triton-lang/triton.git

fi

cd ~

rm -rf .vllm

uv venv .vllm --python 3.12

source .vllm/bin/activate

uv pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu130

uv pip install xgrammar flashinfer-python --prerelease=allow

cd ~/code/vllm

git checkout .

git pull

cat <<'EOF' | patch -p1

diff --git a/CMakeLists.txt b/CMakeLists.txt

index 7cb94f919..f860e533e 100644

--- a/CMakeLists.txt

+++ b/CMakeLists.txt

@@ -594,9 +594,9 @@ if(VLLM_GPU_LANG STREQUAL "CUDA")

   # FP4 Archs and flags

   if(${CMAKE_CUDA_COMPILER_VERSION} VERSION_GREATER_EQUAL 13.0)

-    cuda_archs_loose_intersection(FP4_ARCHS "10.0f;11.0f;12.0f" "${CUDA_ARCHS}")

+    cuda_archs_loose_intersection(FP4_ARCHS "10.0f" "${CUDA_ARCHS}")

   else()

-    cuda_archs_loose_intersection(FP4_ARCHS "10.0a;10.1a;12.0a;12.1a" "${CUDA_ARCHS}")

+    cuda_archs_loose_intersection(FP4_ARCHS "10.0a;10.1a" "${CUDA_ARCHS}")

   endif()

   if(${CMAKE_CUDA_COMPILER_VERSION} VERSION_GREATER_EQUAL 12.8 AND FP4_ARCHS)

     set(SRCS

@@ -668,7 +668,7 @@ if(VLLM_GPU_LANG STREQUAL "CUDA")

   endif()

   if(${CMAKE_CUDA_COMPILER_VERSION} VERSION_GREATER_EQUAL 13.0)

-    cuda_archs_loose_intersection(SCALED_MM_ARCHS "10.0f;11.0f" "${CUDA_ARCHS}")

+    cuda_archs_loose_intersection(SCALED_MM_ARCHS "10.0f" "${CUDA_ARCHS}")

   else()

     cuda_archs_loose_intersection(SCALED_MM_ARCHS "10.0a" "${CUDA_ARCHS}")

   endif()

@@ -716,9 +716,9 @@ if(VLLM_GPU_LANG STREQUAL "CUDA")

   endif()

   if(${CMAKE_CUDA_COMPILER_VERSION} VERSION_GREATER_EQUAL 13.0)

-    cuda_archs_loose_intersection(SCALED_MM_ARCHS "10.0f;11.0f;12.0f" "${CUDA_ARCHS}")

+    cuda_archs_loose_intersection(SCALED_MM_ARCHS "10.0f" "${CUDA_ARCHS}")

   else()

-    cuda_archs_loose_intersection(SCALED_MM_ARCHS "10.0a;10.1a;10.3a;12.0a;12.1a" "${CUDA_ARCHS}")

+    cuda_archs_loose_intersection(SCALED_MM_ARCHS "10.0a;10.1a;10.3a" "${CUDA_ARCHS}")

   endif()

   if(${CMAKE_CUDA_COMPILER_VERSION} VERSION_GREATER_EQUAL 12.8 AND SCALED_MM_ARCHS)

     set(SRCS "csrc/quantization/w8a8/cutlass/moe/blockwise_scaled_group_mm_sm100.cu")

EOF

which python3

python3 use_existing_torch.py

uv pip install -r requirements/build.txt

uv pip uninstall triton

uv pip uninstall triton-kernels

cd ~/code/triton

git pull

uv pip install -e .

cd ~/code/vllm

uv pip install --no-build-isolation -e .

cd ~

mkdir -p tiktoken_encodings

wget -O tiktoken_encodings/o200k_base.tiktoken "https://openaipublic.blob.core.windows.net/encodings/o200k_base.tiktoken"

wget -O tiktoken_encodings/cl100k_base.tiktoken "https://openaipublic.blob.core.windows.net/encodings/cl100k_base.tiktoken"

export TIKTOKEN_ENCODINGS_BASE=${PWD}/tiktoken_encodings

sudo sysctl -w vm.drop_caches=3

export VLLM_USE_FLASHINFER_MXFP4_MOE=1

uv run vllm serve "openai/gpt-oss-120b" --async-scheduling --port 8000 --host 0.0.0.0 --trust_remote_code --swap-space 16 --max-model-len 32000 --tensor-parallel-size 1 --max-num-seqs 1024 --gpu-memory-utilization 0.7

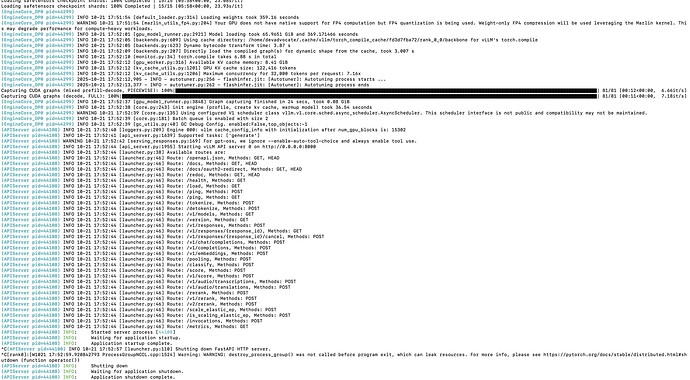

And this is the result when I launch it:

$ uv run vllm serve "openai/gpt-oss-120b" --async-scheduling --port 8000 --host 0.0.0.0 --trust_remote_code --swap-space 16 --max-model-len 32000 --tensor-parallel-size 1 --max-num-seqs 1024 --gpu-memory-utilization 0.7

(APIServer pid=86142) INFO 10-27 13:32:03 [api_server.py:1870] vLLM API server version 0.11.1rc4.dev38+g69f064062.d20251027

(APIServer pid=86142) INFO 10-27 13:32:03 [utils.py:253] non-default args: {'model_tag': 'openai/gpt-oss-120b', 'host': '0.0.0.0', 'model': 'openai/gpt-oss-120b', 'trust_remote_code': True, 'max_model_len': 32000, 'gpu_memory_utilization': 0.7, 'swap_space': 16.0, 'max_num_seqs': 1024, 'async_scheduling': True}

(APIServer pid=86142) INFO 10-27 13:32:06 [model.py:667] Resolved architecture: GptOssForCausalLM

Parse safetensors files: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 15/15 [00:01<00:00, 14.23it/s]

(APIServer pid=86142) INFO 10-27 13:32:08 [model.py:1778] Using max model len 32000

(APIServer pid=86142) The argument `trust_remote_code` is to be used with Auto classes. It has no effect here and is ignored.

(APIServer pid=86142) INFO 10-27 13:32:08 [scheduler.py:211] Chunked prefill is enabled with max_num_batched_tokens=2048.

(APIServer pid=86142) INFO 10-27 13:32:08 [config.py:272] Overriding max cuda graph capture size to 992 for performance.

(EngineCore_DP0 pid=86247) INFO 10-27 13:32:12 [core.py:93] Initializing a V1 LLM engine (v0.11.1rc4.dev38+g69f064062.d20251027) with config: model='openai/gpt-oss-120b', speculative_config=None, tokenizer='openai/gpt-oss-120b', skip_tokenizer_init=False, tokenizer_mode=auto, revision=None, tokenizer_revision=None, trust_remote_code=True, dtype=torch.bfloat16, max_seq_len=32000, download_dir=None, load_format=auto, tensor_parallel_size=1, pipeline_parallel_size=1, data_parallel_size=1, disable_custom_all_reduce=False, quantization=mxfp4, enforce_eager=False, kv_cache_dtype=auto, device_config=cuda, structured_outputs_config=StructuredOutputsConfig(backend='auto', disable_fallback=False, disable_any_whitespace=False, disable_additional_properties=False, reasoning_parser='openai_gptoss', enable_in_reasoning=False), observability_config=ObservabilityConfig(show_hidden_metrics_for_version=None, otlp_traces_endpoint=None, collect_detailed_traces=None), seed=0, served_model_name=openai/gpt-oss-120b, enable_prefix_caching=True, chunked_prefill_enabled=True, pooler_config=None, compilation_config={'level': None, 'mode': 3, 'debug_dump_path': None, 'cache_dir': '', 'backend': 'inductor', 'custom_ops': ['none'], 'splitting_ops': ['vllm::unified_attention', 'vllm::unified_attention_with_output', 'vllm::unified_mla_attention', 'vllm::unified_mla_attention_with_output', 'vllm::mamba_mixer2', 'vllm::mamba_mixer', 'vllm::short_conv', 'vllm::linear_attention', 'vllm::plamo2_mamba_mixer', 'vllm::gdn_attention', 'vllm::sparse_attn_indexer'], 'use_inductor': None, 'compile_sizes': [], 'inductor_compile_config': {'enable_auto_functionalized_v2': False, 'combo_kernels': True, 'benchmark_combo_kernel': True}, 'inductor_passes': {}, 'cudagraph_mode': <CUDAGraphMode.FULL_AND_PIECEWISE: (2, 1)>, 'use_cudagraph': True, 'cudagraph_num_of_warmups': 1, 'cudagraph_capture_sizes': [1, 2, 4, 8, 16, 24, 32, 40, 48, 56, 64, 72, 80, 88, 96, 104, 112, 120, 128, 136, 144, 152, 160, 168, 176, 184, 192, 
200, 208, 216, 224, 232, 240, 248, 256, 272, 288, 304, 320, 336, 352, 368, 384, 400, 416, 432, 448, 464, 480, 496, 512, 528, 544, 560, 576, 592, 608, 624, 640, 656, 672, 688, 704, 720, 736, 752, 768, 784, 800, 816, 832, 848, 864, 880, 896, 912, 928, 944, 960, 976, 992], 'cudagraph_copy_inputs': False, 'full_cuda_graph': True, 'cudagraph_specialize_lora': True, 'use_inductor_graph_partition': False, 'pass_config': {}, 'max_cudagraph_capture_size': 992, 'local_cache_dir': None}

(EngineCore_DP0 pid=86247) /home/codr/.vllm/lib/python3.12/site-packages/torch/cuda/__init__.py:283: UserWarning:

(EngineCore_DP0 pid=86247) Found GPU0 NVIDIA GB10 which is of cuda capability 12.1.

(EngineCore_DP0 pid=86247) Minimum and Maximum cuda capability supported by this version of PyTorch is

(EngineCore_DP0 pid=86247) (8.0) - (12.0)

(EngineCore_DP0 pid=86247)

(EngineCore_DP0 pid=86247) warnings.warn(

[Gloo] Rank 0 is connected to 0 peer ranks. Expected number of connected peer ranks is : 0

[Gloo] Rank 0 is connected to 0 peer ranks. Expected number of connected peer ranks is : 0

[Gloo] Rank 0 is connected to 0 peer ranks. Expected number of connected peer ranks is : 0

[Gloo] Rank 0 is connected to 0 peer ranks. Expected number of connected peer ranks is : 0

[Gloo] Rank 0 is connected to 0 peer ranks. Expected number of connected peer ranks is : 0

[Gloo] Rank 0 is connected to 0 peer ranks. Expected number of connected peer ranks is : 0

(EngineCore_DP0 pid=86247) INFO 10-27 13:32:18 [parallel_state.py:1325] rank 0 in world size 1 is assigned as DP rank 0, PP rank 0, TP rank 0, EP rank 0

(EngineCore_DP0 pid=86247) INFO 10-27 13:32:18 [gpu_model_runner.py:2849] Starting to load model openai/gpt-oss-120b...

(EngineCore_DP0 pid=86247) INFO 10-27 13:32:18 [cuda.py:400] Using Triton backend on V1 engine.

(EngineCore_DP0 pid=86247) INFO 10-27 13:32:18 [mxfp4.py:143] Using Triton backend

Loading safetensors checkpoint shards: 0% Completed | 0/15 [00:00<?, ?it/s]

Loading safetensors checkpoint shards: 7% Completed | 1/15 [00:31<07:27, 31.98s/it]

Loading safetensors checkpoint shards: 13% Completed | 2/15 [01:01<06:34, 30.35s/it]

Loading safetensors checkpoint shards: 20% Completed | 3/15 [01:33<06:12, 31.07s/it]

Loading safetensors checkpoint shards: 27% Completed | 4/15 [02:05<05:46, 31.52s/it]

Loading safetensors checkpoint shards: 33% Completed | 5/15 [02:38<05:19, 31.94s/it]

Loading safetensors checkpoint shards: 40% Completed | 6/15 [03:03<04:28, 29.84s/it]

Loading safetensors checkpoint shards: 47% Completed | 7/15 [03:28<03:44, 28.05s/it]

Loading safetensors checkpoint shards: 53% Completed | 8/15 [03:46<02:55, 25.06s/it]

Loading safetensors checkpoint shards: 60% Completed | 9/15 [04:10<02:28, 24.68s/it]

Loading safetensors checkpoint shards: 67% Completed | 10/15 [04:37<02:06, 25.38s/it]

Loading safetensors checkpoint shards: 73% Completed | 11/15 [05:01<01:39, 24.97s/it]

Loading safetensors checkpoint shards: 80% Completed | 12/15 [05:25<01:13, 24.61s/it]

Loading safetensors checkpoint shards: 87% Completed | 13/15 [05:51<00:50, 25.11s/it]

Loading safetensors checkpoint shards: 93% Completed | 14/15 [06:09<00:22, 22.90s/it]

Loading safetensors checkpoint shards: 100% Completed | 15/15 [06:34<00:00, 23.63s/it]

Loading safetensors checkpoint shards: 100% Completed | 15/15 [06:34<00:00, 26.32s/it]

(EngineCore_DP0 pid=86247)

(EngineCore_DP0 pid=86247) INFO 10-27 13:38:56 [default_loader.py:314] Loading weights took 395.13 seconds

(EngineCore_DP0 pid=86247) INFO 10-27 13:39:08 [gpu_model_runner.py:2914] Model loading took 68.0744 GiB and 402.666436 seconds

(EngineCore_DP0 pid=86247) INFO 10-27 13:39:13 [backends.py:618] Using cache directory: /home/codr/.cache/vllm/torch_compile_cache/e34e3b9aaa/rank_0_0/backbone for vLLM's torch.compile

(EngineCore_DP0 pid=86247) INFO 10-27 13:39:13 [backends.py:634] Dynamo bytecode transform time: 4.63 s

(EngineCore_DP0 pid=86247) [rank0]:W1027 13:39:13.767000 86247 torch/_inductor/utils.py:1558] [0/0] Not enough SMs to use max_autotune_gemm mode

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] EngineCore failed to start.

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] Traceback (most recent call last):

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/code/vllm/vllm/v1/engine/core.py", line 770, in run_engine_core

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] engine_core = EngineCoreProc(*args, **kwargs)

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/code/vllm/vllm/v1/engine/core.py", line 538, in __init__

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] super().__init__(

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/code/vllm/vllm/v1/engine/core.py", line 109, in __init__

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] num_gpu_blocks, num_cpu_blocks, kv_cache_config = self._initialize_kv_caches(

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/code/vllm/vllm/v1/engine/core.py", line 218, in _initialize_kv_caches

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] available_gpu_memory = self.model_executor.determine_available_memory()

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/code/vllm/vllm/v1/executor/abstract.py", line 123, in determine_available_memory

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] return self.collective_rpc("determine_available_memory")

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/code/vllm/vllm/v1/executor/uniproc_executor.py", line 73, in collective_rpc

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] return [run_method(self.driver_worker, method, args, kwargs)]

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/code/vllm/vllm/v1/serial_utils.py", line 459, in run_method

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] return func(*args, **kwargs)

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/.vllm/lib/python3.12/site-packages/torch/utils/_contextlib.py", line 120, in decorate_context

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] return func(*args, **kwargs)

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/code/vllm/vllm/v1/worker/gpu_worker.py", line 284, in determine_available_memory

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] self.model_runner.profile_run()

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/code/vllm/vllm/v1/worker/gpu_model_runner.py", line 3733, in profile_run

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] hidden_states, last_hidden_states = self._dummy_run(

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/.vllm/lib/python3.12/site-packages/torch/utils/_contextlib.py", line 120, in decorate_context

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] return func(*args, **kwargs)

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/code/vllm/vllm/v1/worker/gpu_model_runner.py", line 3464, in _dummy_run

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] outputs = self.model(

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/code/vllm/vllm/compilation/cuda_graph.py", line 126, in __call__

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] return self.runnable(*args, **kwargs)

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/.vllm/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1775, in _wrapped_call_impl

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] return self._call_impl(*args, **kwargs)

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/.vllm/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1786, in _call_impl

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] return forward_call(*args, **kwargs)

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/code/vllm/vllm/model_executor/models/gpt_oss.py", line 705, in forward

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] return self.model(input_ids, positions, intermediate_tensors, inputs_embeds)

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/code/vllm/vllm/compilation/decorators.py", line 408, in __call__

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] output = self.compiled_callable(*args, **kwargs)

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_dynamo/eval_frame.py", line 845, in compile_wrapper

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] raise e.remove_dynamo_frames() from None # see TORCHDYNAMO_VERBOSE=1

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_inductor/compile_fx.py", line 990, in _compile_fx_inner

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] raise InductorError(e, currentframe()).with_traceback(

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_inductor/compile_fx.py", line 974, in _compile_fx_inner

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] mb_compiled_graph = fx_codegen_and_compile(

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_inductor/compile_fx.py", line 1695, in fx_codegen_and_compile

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] return scheme.codegen_and_compile(gm, example_inputs, inputs_to_check, graph_kwargs)

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_inductor/compile_fx.py", line 1505, in codegen_and_compile

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] compiled_module = graph.compile_to_module()

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_inductor/graph.py", line 2319, in compile_to_module

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] return self._compile_to_module()

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_inductor/graph.py", line 2325, in _compile_to_module

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] self.codegen_with_cpp_wrapper() if self.cpp_wrapper else self.codegen()

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_inductor/graph.py", line 2271, in codegen

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] result = self.wrapper_code.generate(self.is_inference)

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_inductor/codegen/wrapper.py", line 1552, in generate

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] return self._generate(is_inference)

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_inductor/codegen/wrapper.py", line 1615, in _generate

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] self.generate_and_run_autotune_block()

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_inductor/codegen/wrapper.py", line 1695, in generate_and_run_autotune_block

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] raise RuntimeError(f"Failed to run autotuning code block: {e}") from e

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] torch._inductor.exc.InductorError: RuntimeError: Failed to run autotuning code block: 'JITFunction' object has no attribute 'constexprs'

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779]

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779] Set TORCHDYNAMO_VERBOSE=1 for the internal stack trace (please do this especially if you're reporting a bug to PyTorch). For even more developer context, set TORCH_LOGS="+dynamo"

(EngineCore_DP0 pid=86247) ERROR 10-27 13:39:13 [core.py:779]

(EngineCore_DP0 pid=86247) Process EngineCore_DP0:

(EngineCore_DP0 pid=86247) Traceback (most recent call last):

(EngineCore_DP0 pid=86247) File "/usr/lib/python3.12/multiprocessing/process.py", line 314, in _bootstrap

(EngineCore_DP0 pid=86247) self.run()

(EngineCore_DP0 pid=86247) File "/usr/lib/python3.12/multiprocessing/process.py", line 108, in run

(EngineCore_DP0 pid=86247) self._target(*self._args, **self._kwargs)

(EngineCore_DP0 pid=86247) File "/home/codr/code/vllm/vllm/v1/engine/core.py", line 783, in run_engine_core

(EngineCore_DP0 pid=86247) raise e

(EngineCore_DP0 pid=86247) File "/home/codr/code/vllm/vllm/v1/engine/core.py", line 770, in run_engine_core

(EngineCore_DP0 pid=86247) engine_core = EngineCoreProc(*args, **kwargs)

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/code/vllm/vllm/v1/engine/core.py", line 538, in __init__

(EngineCore_DP0 pid=86247) super().__init__(

(EngineCore_DP0 pid=86247) File "/home/codr/code/vllm/vllm/v1/engine/core.py", line 109, in __init__

(EngineCore_DP0 pid=86247) num_gpu_blocks, num_cpu_blocks, kv_cache_config = self._initialize_kv_caches(

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/code/vllm/vllm/v1/engine/core.py", line 218, in _initialize_kv_caches

(EngineCore_DP0 pid=86247) available_gpu_memory = self.model_executor.determine_available_memory()

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/code/vllm/vllm/v1/executor/abstract.py", line 123, in determine_available_memory

(EngineCore_DP0 pid=86247) return self.collective_rpc("determine_available_memory")

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/code/vllm/vllm/v1/executor/uniproc_executor.py", line 73, in collective_rpc

(EngineCore_DP0 pid=86247) return [run_method(self.driver_worker, method, args, kwargs)]

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/code/vllm/vllm/v1/serial_utils.py", line 459, in run_method

(EngineCore_DP0 pid=86247) return func(*args, **kwargs)

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/.vllm/lib/python3.12/site-packages/torch/utils/_contextlib.py", line 120, in decorate_context

(EngineCore_DP0 pid=86247) return func(*args, **kwargs)

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/code/vllm/vllm/v1/worker/gpu_worker.py", line 284, in determine_available_memory

(EngineCore_DP0 pid=86247) self.model_runner.profile_run()

(EngineCore_DP0 pid=86247) File "/home/codr/code/vllm/vllm/v1/worker/gpu_model_runner.py", line 3733, in profile_run

(EngineCore_DP0 pid=86247) hidden_states, last_hidden_states = self._dummy_run(

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/.vllm/lib/python3.12/site-packages/torch/utils/_contextlib.py", line 120, in decorate_context

(EngineCore_DP0 pid=86247) return func(*args, **kwargs)

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/code/vllm/vllm/v1/worker/gpu_model_runner.py", line 3464, in _dummy_run

(EngineCore_DP0 pid=86247) outputs = self.model(

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/code/vllm/vllm/compilation/cuda_graph.py", line 126, in __call__

(EngineCore_DP0 pid=86247) return self.runnable(*args, **kwargs)

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/.vllm/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1775, in _wrapped_call_impl

(EngineCore_DP0 pid=86247) return self._call_impl(*args, **kwargs)

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/.vllm/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1786, in _call_impl

(EngineCore_DP0 pid=86247) return forward_call(*args, **kwargs)

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/code/vllm/vllm/model_executor/models/gpt_oss.py", line 705, in forward

(EngineCore_DP0 pid=86247) return self.model(input_ids, positions, intermediate_tensors, inputs_embeds)

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/code/vllm/vllm/compilation/decorators.py", line 408, in __call__

(EngineCore_DP0 pid=86247) output = self.compiled_callable(*args, **kwargs)

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_dynamo/eval_frame.py", line 845, in compile_wrapper

(EngineCore_DP0 pid=86247) raise e.remove_dynamo_frames() from None # see TORCHDYNAMO_VERBOSE=1

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_inductor/compile_fx.py", line 990, in _compile_fx_inner

(EngineCore_DP0 pid=86247) raise InductorError(e, currentframe()).with_traceback(

(EngineCore_DP0 pid=86247) File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_inductor/compile_fx.py", line 974, in _compile_fx_inner

(EngineCore_DP0 pid=86247) mb_compiled_graph = fx_codegen_and_compile(

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_inductor/compile_fx.py", line 1695, in fx_codegen_and_compile

(EngineCore_DP0 pid=86247) return scheme.codegen_and_compile(gm, example_inputs, inputs_to_check, graph_kwargs)

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_inductor/compile_fx.py", line 1505, in codegen_and_compile

(EngineCore_DP0 pid=86247) compiled_module = graph.compile_to_module()

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_inductor/graph.py", line 2319, in compile_to_module

(EngineCore_DP0 pid=86247) return self._compile_to_module()

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_inductor/graph.py", line 2325, in _compile_to_module

(EngineCore_DP0 pid=86247) self.codegen_with_cpp_wrapper() if self.cpp_wrapper else self.codegen()

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_inductor/graph.py", line 2271, in codegen

(EngineCore_DP0 pid=86247) result = self.wrapper_code.generate(self.is_inference)

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_inductor/codegen/wrapper.py", line 1552, in generate

(EngineCore_DP0 pid=86247) return self._generate(is_inference)

(EngineCore_DP0 pid=86247) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(EngineCore_DP0 pid=86247) File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_inductor/codegen/wrapper.py", line 1615, in _generate

(EngineCore_DP0 pid=86247) self.generate_and_run_autotune_block()

(EngineCore_DP0 pid=86247) File "/home/codr/.vllm/lib/python3.12/site-packages/torch/_inductor/codegen/wrapper.py", line 1695, in generate_and_run_autotune_block

(EngineCore_DP0 pid=86247) raise RuntimeError(f"Failed to run autotuning code block: {e}") from e

(EngineCore_DP0 pid=86247) torch._inductor.exc.InductorError: RuntimeError: Failed to run autotuning code block: 'JITFunction' object has no attribute 'constexprs'

(EngineCore_DP0 pid=86247)

(EngineCore_DP0 pid=86247) Set TORCHDYNAMO_VERBOSE=1 for the internal stack trace (please do this especially if you're reporting a bug to PyTorch). For even more developer context, set TORCH_LOGS="+dynamo"

(EngineCore_DP0 pid=86247)

[rank0]:[W1027 13:39:15.693171868 ProcessGroupNCCL.cpp:1524] Warning: WARNING: destroy_process_group() was not called before program exit, which can leak resources. For more info, please see https://pytorch.org/docs/stable/distributed.html#shutdown (function operator())

(APIServer pid=86142) Traceback (most recent call last):

(APIServer pid=86142) File "/home/codr/.vllm/bin/vllm", line 10, in <module>

(APIServer pid=86142) sys.exit(main())

(APIServer pid=86142) ^^^^^^

(APIServer pid=86142) File "/home/codr/code/vllm/vllm/entrypoints/cli/main.py", line 73, in main

(APIServer pid=86142) args.dispatch_function(args)

(APIServer pid=86142) File "/home/codr/code/vllm/vllm/entrypoints/cli/serve.py", line 59, in cmd

(APIServer pid=86142) uvloop.run(run_server(args))

(APIServer pid=86142) File "/home/codr/.vllm/lib/python3.12/site-packages/uvloop/__init__.py", line 96, in run

(APIServer pid=86142) return __asyncio.run(

(APIServer pid=86142) ^^^^^^^^^^^^^^

(APIServer pid=86142) File "/usr/lib/python3.12/asyncio/runners.py", line 194, in run

(APIServer pid=86142) return runner.run(main)

(APIServer pid=86142) ^^^^^^^^^^^^^^^^

(APIServer pid=86142) File "/usr/lib/python3.12/asyncio/runners.py", line 118, in run

(APIServer pid=86142) return self._loop.run_until_complete(task)

(APIServer pid=86142) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(APIServer pid=86142) File "uvloop/loop.pyx", line 1518, in uvloop.loop.Loop.run_until_complete

(APIServer pid=86142) File "/home/codr/.vllm/lib/python3.12/site-packages/uvloop/__init__.py", line 48, in wrapper

(APIServer pid=86142) return await main

(APIServer pid=86142) ^^^^^^^^^^

(APIServer pid=86142) File "/home/codr/code/vllm/vllm/entrypoints/openai/api_server.py", line 1914, in run_server

(APIServer pid=86142) await run_server_worker(listen_address, sock, args, **uvicorn_kwargs)

(APIServer pid=86142) File "/home/codr/code/vllm/vllm/entrypoints/openai/api_server.py", line 1930, in run_server_worker

(APIServer pid=86142) async with build_async_engine_client(

(APIServer pid=86142) File "/usr/lib/python3.12/contextlib.py", line 210, in __aenter__

(APIServer pid=86142) return await anext(self.gen)

(APIServer pid=86142) ^^^^^^^^^^^^^^^^^^^^^

(APIServer pid=86142) File "/home/codr/code/vllm/vllm/entrypoints/openai/api_server.py", line 185, in build_async_engine_client

(APIServer pid=86142) async with build_async_engine_client_from_engine_args(

(APIServer pid=86142) File "/usr/lib/python3.12/contextlib.py", line 210, in __aenter__

(APIServer pid=86142) return await anext(self.gen)

(APIServer pid=86142) ^^^^^^^^^^^^^^^^^^^^^

(APIServer pid=86142) File "/home/codr/code/vllm/vllm/entrypoints/openai/api_server.py", line 232, in build_async_engine_client_from_engine_args

(APIServer pid=86142) async_llm = AsyncLLM.from_vllm_config(

(APIServer pid=86142) ^^^^^^^^^^^^^^^^^^^^^^^^^^

(APIServer pid=86142) File "/home/codr/code/vllm/vllm/utils/func_utils.py", line 116, in inner

(APIServer pid=86142) return fn(*args, **kwargs)

(APIServer pid=86142) ^^^^^^^^^^^^^^^^^^^

(APIServer pid=86142) File "/home/codr/code/vllm/vllm/v1/engine/async_llm.py", line 220, in from_vllm_config

(APIServer pid=86142) return cls(

(APIServer pid=86142) ^^^^

(APIServer pid=86142) File "/home/codr/code/vllm/vllm/v1/engine/async_llm.py", line 142, in __init__

(APIServer pid=86142) self.engine_core = EngineCoreClient.make_async_mp_client(

(APIServer pid=86142) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

(APIServer pid=86142) File "/home/codr/code/vllm/vllm/v1/engine/core_client.py", line 121, in make_async_mp_client

(APIServer pid=86142) return AsyncMPClient(*client_args)

(APIServer pid=86142) ^^^^^^^^^^^^^^^^^^^^^^^^^^^

(APIServer pid=86142) File "/home/codr/code/vllm/vllm/v1/engine/core_client.py", line 807, in __init__

(APIServer pid=86142) super().__init__(

(APIServer pid=86142) File "/home/codr/code/vllm/vllm/v1/engine/core_client.py", line 468, in __init__

(APIServer pid=86142) with launch_core_engines(vllm_config, executor_class, log_stats) as (

(APIServer pid=86142) File "/usr/lib/python3.12/contextlib.py", line 144, in __exit__

(APIServer pid=86142) next(self.gen)

(APIServer pid=86142) File "/home/codr/code/vllm/vllm/v1/engine/utils.py", line 889, in launch_core_engines

(APIServer pid=86142) wait_for_engine_startup(

(APIServer pid=86142) File "/home/codr/code/vllm/vllm/v1/engine/utils.py", line 946, in wait_for_engine_startup

(APIServer pid=86142) raise RuntimeError(

(APIServer pid=86142) RuntimeError: Engine core initialization failed. See root cause above. Failed core proc(s): {}
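
Since the log is long, here is a small sketch for pulling out the line that names the failure. It assumes the output was saved to a file (`vllm.log` is a hypothetical name) and that the root cause is the first line of the form `package.SomeError: message` once the process and logger prefixes are stripped. The function names `strip_vllm_prefix` and `root_cause` are my own and are not part of vLLM.

```shell
# Remove the "(Proc pid=N) " prefix and, when present, the
# "LEVEL MM-DD HH:MM:SS [file.py:NNN] " logger prefix from each line on stdin.
strip_vllm_prefix() {
    sed -E \
        -e 's/^\([A-Za-z0-9_]+ pid=[0-9]+\) ?//' \
        -e 's/^(INFO|WARNING|ERROR) [0-9]{2}-[0-9]{2} [0-9:]{8} \[[^]]+\] ?//'
}

# Print the first stripped line that starts with an exception name,
# e.g. "torch._inductor.exc.InductorError: ...". Later matches are usually
# wrappers such as "Engine core initialization failed".
root_cause() {
    strip_vllm_prefix \
        | grep -E '^[A-Za-z_][A-Za-z0-9_.]*(Error|Exception): ' \
        | head -n 1
}

# Usage (hypothetical file name):
#   root_cause < vllm.log
```

On the log above this should print the `torch._inductor.exc.InductorError` line ending in `'JITFunction' object has no attribute 'constexprs'`, rather than the generic `Engine core initialization failed` message at the very end.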