Hutch

October 25, 2020, 1:09am


Hardware Platform: Jetson Nano

I’ve exported a ResNet18 model trained with the DetectNet_v2 object detection architecture to the Jetson Nano, run tlt-convert on it, and started inference in DeepStream, but I’m getting different detection results than I got with the tlt-infer command in the Jupyter Notebook. In my inference spec file in the notebook, I used the aggregate_cov confidence model. Is there a way to set or configure this in the gst-nvinfer config file? Here is my gst-nvinfer config file:

[property]

gpu-id=0

net-scale-factor=0.0039215697906911373

model-engine-file=../../models/Tank_Level_Detector/tank_model.engine

labelfile-path=../../models/Tank_Level_Detector/labels.txt

batch-size=1

process-mode=1

model-color-format=0

## 0=FP32, 1=INT8, 2=FP16 mode

network-mode=0

num-detected-classes=3

interval=0

gie-unique-id=1

output-blob-names=output_cov/Sigmoid;output_bbox/BiasAdd

force-implicit-batch-dim=1

#parse-bbox-func-name=NvDsInferParseCustomResnet

#custom-lib-path=/path/to/libnvdsparsebbox.so

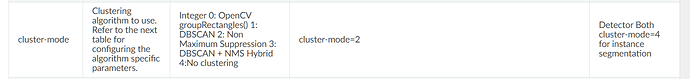

## 0=Group Rectangles, 1=DBSCAN, 2=NMS, 3= DBSCAN+NMS Hybrid, 4 = None(No clustering)

cluster-mode=1

scaling-filter=0

scaling-compute-hw=0

#Use the config params below for dbscan clustering mode

[class-attrs-all]

detected-min-w=4

detected-min-h=4

minBoxes=10

topk=1

# Per class configurations

[class-attrs-0]

pre-cluster-threshold=0.0

eps=0.7

dbscan-min-score=0.05

[class-attrs-1]

pre-cluster-threshold=0.0

eps=0.7

dbscan-min-score=0.05

[class-attrs-2]

pre-cluster-threshold=0.0

eps=0.7

dbscan-min-score=0.05
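For reference, the gst-nvinfer documentation describes its input preprocessing as y = net-scale-factor * (x - mean), applied per pixel and channel. The minimal sketch below implements just that formula, assuming no offsets or mean file are configured (none appear in the config above); it shows that net-scale-factor=0.0039215697906911373 is approximately 1/255, so 8-bit pixel values land in roughly [0, 1]. The function names are hypothetical.

```python
# Minimal sketch of gst-nvinfer's documented preprocessing: y = net_scale_factor * (x - mean).
# Assumption: no per-channel offsets or mean file are set, so the mean defaults to 0 here.

NET_SCALE_FACTOR = 0.0039215697906911373  # value from the config above, approximately 1/255


def normalize_pixel(x, net_scale_factor=NET_SCALE_FACTOR, mean=0.0):
    """Normalize one 8-bit channel value the way the formula describes."""
    return net_scale_factor * (x - mean)


def normalize_rgb(pixel, net_scale_factor=NET_SCALE_FACTOR, offsets=(0.0, 0.0, 0.0)):
    """Apply the formula per channel; offsets stands in for optional per-channel means."""
    return tuple(normalize_pixel(v, net_scale_factor, m) for v, m in zip(pixel, offsets))


print(normalize_rgb((0, 128, 255)))  # roughly (0.0, 0.502, 1.0)
```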

Hi,

Here is an example of deploying a DetectNet_v2 model to DeepStream: https://github.com/NVIDIA-AI-IOT/deepstream_tlt_apps/tree/master/nvdsinfer_detectNet_v2_tlt

Thanks.

Hutch

October 26, 2020, 7:29pm


Yes, here is my training spec sheet:

```
random_seed: 42
dataset_config {
  data_sources {
    tfrecords_path: "/workspace/tlt-experiments/data/tfrecords/kitti_trainval/*"
    image_directory_path: "/workspace/tlt-experiments/data/training"
  }
  image_extension: "png"
  target_class_mapping {
    key: "low"
    value: "low"
  }
  target_class_mapping {
    key: "medium"
    value: "medium"
  }
  target_class_mapping {
    key: "high"
    value: "high"
  }
  validation_fold: 0
}
augmentation_config {
  preprocessing {
    output_image_width: 704
    output_image_height: 480
    min_bbox_width: 1.0
    min_bbox_height: 1.0
    output_image_channel: 3
  }
  spatial_augmentation {
    hflip_probability: 0.0
    zoom_min: 1.0
    zoom_max: 1.0
    translate_max_x: 0.0
    translate_max_y: 0.0
  }
  color_augmentation {
    hue_rotation_max: 25.0
    saturation_shift_max: 0.2
    contrast_scale_max: 0.1
    contrast_center: 0.5
  }
}
postprocessing_config {
  target_class_config {
    key: "low"
    value {
      clustering_config {
        coverage_threshold: 0.00499999988824
        dbscan_eps: .7
        dbscan_min_samples: 0.0500000007451
        minimum_bounding_box_height: 75
      }
    }
  }
  target_class_config {
    key: "medium"
    value {
      clustering_config {
        coverage_threshold: 0.00499999988824
        dbscan_eps: .7
        dbscan_min_samples: 0.0500000007451
        minimum_bounding_box_height: 75
      }
    }
  }
  target_class_config {
    key: "high"
    value {
      clustering_config {
        coverage_threshold: 0.00749999983236
        dbscan_eps: .7
        dbscan_min_samples: 0.0500000007451
        minimum_bounding_box_height: 75
      }
    }
  }
}
model_config {
  pretrained_model_file: "/workspace/tlt-experiments/detectnet_v2/pretrained_resnet18/tlt_pretrained_detectnet_v2_vresnet18/resnet18.hdf5"
  num_layers: 18
  use_batch_norm: true
  objective_set {
    bbox {
      scale: 35.0
      offset: 0.5
    }
    cov {
    }
  }
  training_precision {
    backend_floatx: FLOAT32
  }
  arch: "resnet"
}
evaluation_config {
  validation_period_during_training: 10
  first_validation_epoch: 30
  minimum_detection_ground_truth_overlap {
    key: "low"
    value: 0.80
  }
  minimum_detection_ground_truth_overlap {
    key: "medium"
    value: 0.80
  }
  minimum_detection_ground_truth_overlap {
    key: "high"
    value: 0.80
  }
  evaluation_box_config {
    key: "low"
    value {
      minimum_height: 10
      maximum_height: 9999
      minimum_width: 10
      maximum_width: 9999
    }
  }
  evaluation_box_config {
    key: "medium"
    value {
      minimum_height: 10
      maximum_height: 9999
      minimum_width: 10
      maximum_width: 9999
    }
  }
  evaluation_box_config {
    key: "high"
    value {
      minimum_height: 10
      maximum_height: 9999
      minimum_width: 10
      maximum_width: 9999
    }
  }
  average_precision_mode: INTEGRATE
}
cost_function_config {
  target_classes {
    name: "low"
    class_weight: 2.22
    coverage_foreground_weight: 0.0500000007451
    objectives {
      name: "cov"
      initial_weight: 1.0
      weight_target: 1.0
    }
    objectives {
      name: "bbox"
      initial_weight: 10.0
      weight_target: 10.0
    }
  }
  target_classes {
    name: "medium"
    class_weight: 3.49
    coverage_foreground_weight: 0.0500000007451
    objectives {
      name: "cov"
      initial_weight: 1.0
      weight_target: 1.0
    }
    objectives {
      name: "bbox"
      initial_weight: 10.0
      weight_target: 10.0
    }
  }
  target_classes {
    name: "high"
    class_weight: 3.77
    coverage_foreground_weight: 0.0500000007451
    objectives {
      name: "cov"
      initial_weight: 1.0
      weight_target: 1.0
    }
    objectives {
      name: "bbox"
      initial_weight: 10.0
      weight_target: 10.0
    }
  }
  enable_autoweighting: true
  max_objective_weight: 0.999899983406
  min_objective_weight: 9.99999974738e-05
}
training_config {
  batch_size_per_gpu: 4
  num_epochs: 120
  learning_rate {
    soft_start_annealing_schedule {
      min_learning_rate: 5e-06
      max_learning_rate: 5e-04
      soft_start: 0.10000000149
      annealing: 0.699999988079
    }
  }
  regularizer {
    type: L1
    weight: 3.00000002618e-09
  }
  optimizer {
    adam {
      epsilon: 9.99999993923e-09
      beta1: 0.899999976158
      beta2: 0.999000012875
    }
  }
  cost_scaling {
    initial_exponent: 20.0
    increment: 0.005
    decrement: 1.0
  }
  checkpoint_interval: 10
}
bbox_rasterizer_config {
  target_class_config {
    key: "low"
    value {
      cov_center_x: 0.5
      cov_center_y: 0.5
      cov_radius_x: 1.0
      cov_radius_y: 1.0
      bbox_min_radius: 1.0
    }
  }
  target_class_config {
    key: "medium"
    value {
      cov_center_x: 0.5
      cov_center_y: 0.5
      cov_radius_x: 1.0
      cov_radius_y: 1.0
      bbox_min_radius: 1.0
    }
  }
  target_class_config {
    key: "high"
    value {
      cov_center_x: 0.5
      cov_center_y: 0.5
      cov_radius_x: 1.0
      cov_radius_y: 1.0
      bbox_min_radius: 1.0
    }
  }
  deadzone_radius: 0.400000154972
}
```
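The objective_set block above sets bbox scale: 35.0 and offset: 0.5, and the DeepStream config reads the raw output_cov/Sigmoid and output_bbox/BiasAdd tensors. The sketch below shows one way such grid outputs can be turned into candidate boxes before any clustering. It assumes a 16-pixel output stride and a decoding convention in which each cell's center, (index * stride + offset) / scale, is combined with the four predicted distances and rescaled by scale; neither assumption is established in this thread, so check them against the bbox parser you actually deploy. All names are hypothetical.

```python
# Sketch: turn DetectNet_v2-style grid outputs into candidate boxes for one class.
# Assumptions (not established in this thread): a 16-pixel output stride and the
# centre-offset decoding below. BBOX_SCALE and BBOX_OFFSET come from the
# objective_set { bbox { scale: 35.0 offset: 0.5 } } block of the training spec.

STRIDE = 16         # assumed output stride in pixels
BBOX_SCALE = 35.0   # objective_set.bbox.scale
BBOX_OFFSET = 0.5   # objective_set.bbox.offset


def decode_candidates(cov, bbox, class_id, cov_threshold):
    """Return (x1, y1, x2, y2, coverage) for each grid cell whose coverage >= cov_threshold.

    cov[c][row][col]          -> coverage map, as read from output_cov/Sigmoid
    bbox[c * 4 + k][row][col] -> the four box terms (k = 0..3), from output_bbox/BiasAdd
    """
    candidates = []
    for row, cov_row in enumerate(cov[class_id]):
        cy = (row * STRIDE + BBOX_OFFSET) / BBOX_SCALE  # cell centre in scaled units
        for col, coverage in enumerate(cov_row):
            if coverage < cov_threshold:
                continue  # cell did not fire strongly enough to become a candidate
            cx = (col * STRIDE + BBOX_OFFSET) / BBOX_SCALE
            left, top, right, bottom = (bbox[class_id * 4 + k][row][col] for k in range(4))
            x1 = (cx - left) * BBOX_SCALE
            y1 = (cy - top) * BBOX_SCALE
            x2 = (cx + right) * BBOX_SCALE
            y2 = (cy + bottom) * BBOX_SCALE
            candidates.append((x1, y1, x2, y2, coverage))
    return candidates


# Tiny hypothetical 2x2 grid with one class: only the bottom-right cell fires strongly.
cov = [[[0.1, 0.2], [0.3, 0.9]]]
bbox = [[[1.0, 1.0], [1.0, 1.0]] for _ in range(4)]
print(decode_candidates(cov, bbox, class_id=0, cov_threshold=0.5))
```

With the 704x480 input used here and a 16-pixel stride, the coverage grid would be 44x30 cells. In this sketch, cov_threshold plays the role that coverage_threshold (TLT) and pre-cluster-threshold (gst-nvinfer) appear to play in the posted configs.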

And here is my inference spec sheet used in the Jupyter Notebook:

```
inferencer_config{
  # defining target class names for the experiment.
  # Note: This must be mentioned in order of the networks classes.
  target_classes: "low"
  target_classes: "medium"
  target_classes: "high"
  # Inference dimensions.
  image_width: 704
  image_height: 480
  # Must match what the model was trained for.
  image_channels: 3
  batch_size: 8
  gpu_index: 0
  # model handler config
  tlt_config{
    model: "/workspace/tlt-experiments/detectnet_v2/experiment_dir_retrain/weights/resnet18_detector_pruned.tlt"
  }
}
bbox_handler_config{
  kitti_dump: true
  disable_overlay: false
  overlay_linewidth: 2
  classwise_bbox_handler_config{
    key:"low"
    value: {
      confidence_model: "aggregate_cov"
      output_map: "low"
      confidence_threshold: 100.0
      bbox_color{
        R: 255
        G: 0
        B: 0
      }
      clustering_config{
        coverage_threshold: 0.00
        dbscan_eps: .7
        dbscan_min_samples: 0.05
        minimum_bounding_box_height: 4
      }
    }
  }
  classwise_bbox_handler_config{
    key:"medium"
    value: {
      confidence_model: "aggregate_cov"
      output_map: "medium"
      confidence_threshold: 100.0
      bbox_color{
        R: 0
        G: 255
        B: 0
      }
      clustering_config{
        coverage_threshold: 0.00
        dbscan_eps: .7
        dbscan_min_samples: 0.05
        minimum_bounding_box_height: 4
      }
    }
  }
  classwise_bbox_handler_config{
    key:"high"
    value: {
      confidence_model: "aggregate_cov"
      output_map: "high"
      confidence_threshold: 100.0
      bbox_color{
        R: 0
        G: 0
        B: 255
      }
      clustering_config{
        coverage_threshold: 0.00
        dbscan_eps: .7
        dbscan_min_samples: 0.05
        minimum_bounding_box_height: 4
      }
    }
  }
  classwise_bbox_handler_config{
    key:"default"
    value: {
      confidence_model: "aggregate_cov"
      confidence_threshold: 100.0
      bbox_color{
        R: 255
        G: 255
        B: 0
      }
      clustering_config{
        coverage_threshold: 0.00
        dbscan_eps: .7
        dbscan_min_samples: 0.05
        minimum_bounding_box_height: 4
      }
    }
  }
}
```

My model does very well at inference with the aggregate_cov confidence model, but poorly with mean_cov. I’m trying to replicate these parameters in the DeepStream config files, but I can’t achieve the same results. My labels file is set up the same way as in the example.
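One way to keep the per-class DBSCAN settings in sync is to generate the [class-attrs-N] sections from the TLT clustering_config values. The sketch below uses Python's configparser and the key mapping the posted configs appear to follow (coverage_threshold to pre-cluster-threshold, dbscan_eps to eps, dbscan_min_samples to dbscan-min-score). That mapping is an assumption inferred from the two files above, not an official table, and the dictionary and function names are hypothetical. confidence_model and confidence_threshold are deliberately left unmapped, since the thread does not establish a gst-nvinfer equivalent for them.

```python
import configparser
import io

# Hypothetical copy of the per-class clustering_config values from the TLT inference spec above.
TLT_CLUSTERING = {
    "low": {"coverage_threshold": 0.0, "dbscan_eps": 0.7, "dbscan_min_samples": 0.05},
    "medium": {"coverage_threshold": 0.0, "dbscan_eps": 0.7, "dbscan_min_samples": 0.05},
    "high": {"coverage_threshold": 0.0, "dbscan_eps": 0.7, "dbscan_min_samples": 0.05},
}

# Class order must match labels.txt and the target_classes order used in training.
CLASS_ORDER = ["low", "medium", "high"]

# Assumed TLT -> gst-nvinfer key mapping, inferred from the posted configs (not an official table).
KEY_MAP = {
    "coverage_threshold": "pre-cluster-threshold",
    "dbscan_eps": "eps",
    "dbscan_min_samples": "dbscan-min-score",
}


def build_class_attrs(tlt_clustering, class_order):
    """Return a ConfigParser holding one [class-attrs-<id>] section per class."""
    cfg = configparser.ConfigParser()
    for class_id, name in enumerate(class_order):
        cfg[f"class-attrs-{class_id}"] = {
            KEY_MAP[key]: str(value) for key, value in tlt_clustering[name].items()
        }
    return cfg


def to_nvinfer_text(cfg):
    """Serialize as key=value lines without spaces, matching the style of the config above."""
    buf = io.StringIO()
    cfg.write(buf, space_around_delimiters=False)
    return buf.getvalue()


print(to_nvinfer_text(build_class_attrs(TLT_CLUSTERING, CLASS_ORDER)))
```

The printed sections have the same shape as the per-class blocks in the gst-nvinfer file, so any mismatch between the two specs shows up as a plain text difference.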

@Hutch, I suggest using https://github.com/NVIDIA-AI-IOT/deepstream_tlt_apps/blob/master/pgie_detectnet_v2_tlt_config.txt as a template for reference, and then retrying.

Second,

Python

December 25, 2020, 2:27pm


Hi Morgan, does DeepStream use aggregate or mean cov to draw bounding boxes?

mchi

December 29, 2020, 5:06am


> Python: Aggregate or Mean cov

What do aggregate and mean cov mean?

Python

December 29, 2020, 3:19pm


I meant the bbox confidence models used for inference in the Transfer Learning Toolkit. To make it clearer: I trained my model and ran inference in TLT with the aggregate_cov confidence model and a threshold of 0.9, and it detected objects perfectly and drew the bounding boxes. But when I deployed it to DeepStream, no bounding boxes appeared until I reduced the threshold to 0.1.

I might ask this as a separate topic later. I think it’s clear that DeepStream uses mean_cov or something similar when thresholding bounding boxes; if my guess is wrong, please let me know. Thank you.
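To make the aggregate_cov vs. mean_cov distinction concrete: as the TLT documentation describes confidence_model (paraphrased here, so treat it as an assumption), aggregate_cov scores a cluster by the sum of its candidates' coverage values, while mean_cov uses their mean. The hypothetical cluster below shows how a 0.9 threshold can pass under the first and fail under the second, which is the kind of gap described above. It does not show what DeepStream itself computes; the function names are hypothetical.

```python
# Sketch: scoring one cluster of candidate boxes two ways.
# Assumed semantics (paraphrasing TLT's confidence_model description):
#   aggregate_cov -> sum of the coverage values in the cluster (can exceed 1.0)
#   mean_cov      -> mean of the coverage values (stays within [0, 1] for sigmoid coverages)


def cluster_confidence(coverages, confidence_model):
    if not coverages:
        raise ValueError("a cluster needs at least one candidate")
    if confidence_model == "aggregate_cov":
        return sum(coverages)
    if confidence_model == "mean_cov":
        return sum(coverages) / len(coverages)
    raise ValueError(f"unknown confidence_model: {confidence_model}")


def keep_cluster(coverages, threshold, confidence_model):
    """Keep the cluster if its confidence under the chosen model reaches the threshold."""
    return cluster_confidence(coverages, confidence_model) >= threshold


# Hypothetical cluster: 12 grid cells that each fired with coverage 0.15.
cluster = [0.15] * 12
for model in ("aggregate_cov", "mean_cov"):
    for threshold in (0.9, 0.1):
        print(model, threshold, keep_cluster(cluster, threshold, model))
```

Because the aggregate score grows with the number of cells that fire, a threshold tuned for it is not directly comparable to a threshold on a mean or per-box score.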

mchi

December 31, 2020, 2:00am


It depends on which cluster-mode you are using. TLT uses DBSCAN.

https://docs.nvidia.com/metropolis/deepstream/dev-guide/text/DS_plugin_gst-nvinfer.html
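Since the posted gst-nvinfer config already sets cluster-mode=1 (DBSCAN), it may help to see what DBSCAN over candidate boxes looks like. The sketch below is a generic, score-weighted DBSCAN, not TLT's or DeepStream's implementation. Assumptions: the distance between two boxes is 1 - IoU, eps is compared against that distance, and a box is a core point when the summed coverage of its neighborhood (itself included) reaches min_score, which is one reading of how the gst-nvinfer documentation describes dbscan-min-score. All names are hypothetical.

```python
# Sketch: score-weighted DBSCAN over candidate boxes (x1, y1, x2, y2).
# Assumptions: distance = 1 - IoU; a core point needs summed neighbour scores >= min_score.


def iou(a, b):
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def dbscan_boxes(boxes, scores, eps, min_score):
    """Return a cluster label per box; -1 marks boxes left as noise."""
    n = len(boxes)
    # eps-neighbourhood of each box, including the box itself (distance 0).
    neighbors = [[j for j in range(n) if 1.0 - iou(boxes[i], boxes[j]) <= eps] for i in range(n)]
    is_core = [sum(scores[j] for j in neighbors[i]) >= min_score for i in range(n)]
    labels = [-1] * n
    cluster_id = 0
    for i in range(n):
        if labels[i] != -1 or not is_core[i]:
            continue  # already assigned, or cannot seed a new cluster
        labels[i] = cluster_id
        stack = [i]
        while stack:
            p = stack.pop()
            if not is_core[p]:
                continue  # border points join the cluster but do not expand it
            for q in neighbors[p]:
                if labels[q] == -1:
                    labels[q] = cluster_id
                    stack.append(q)
        cluster_id += 1
    return labels


# Hypothetical candidates: two overlapping boxes and one isolated box.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (100, 100, 110, 110)]
scores = [0.5, 0.5, 0.5]
print(dbscan_boxes(boxes, scores, eps=0.7, min_score=0.9))
```

Raising min_score drops small or weak clusters entirely, while a larger eps merges boxes with lower overlap; this is why the same model can look very different under different clustering settings.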

KGerry

May 13, 2021, 10:04am


I’m having the same issue: using the default Jupyter notebook and the KITTI dataset, tlt-infer works great, but detection in DeepStream is very poor. See this post: "Can't get TLT trained model get to work on Deepstream - Jetson (NX) - #2 by kayccc". I tried the DetectNet config file and every eps and DBSCAN setting I could think of. And yes, I converted the model on the Xavier NX.
