**• Hardware Platform (Jetson / GPU)** Jetson Nano & Jetson TX2

**• DeepStream Version** 5.0

**• JetPack Version (valid for Jetson only)** 4.4

**• TensorRT Version** 7.1

Hi,

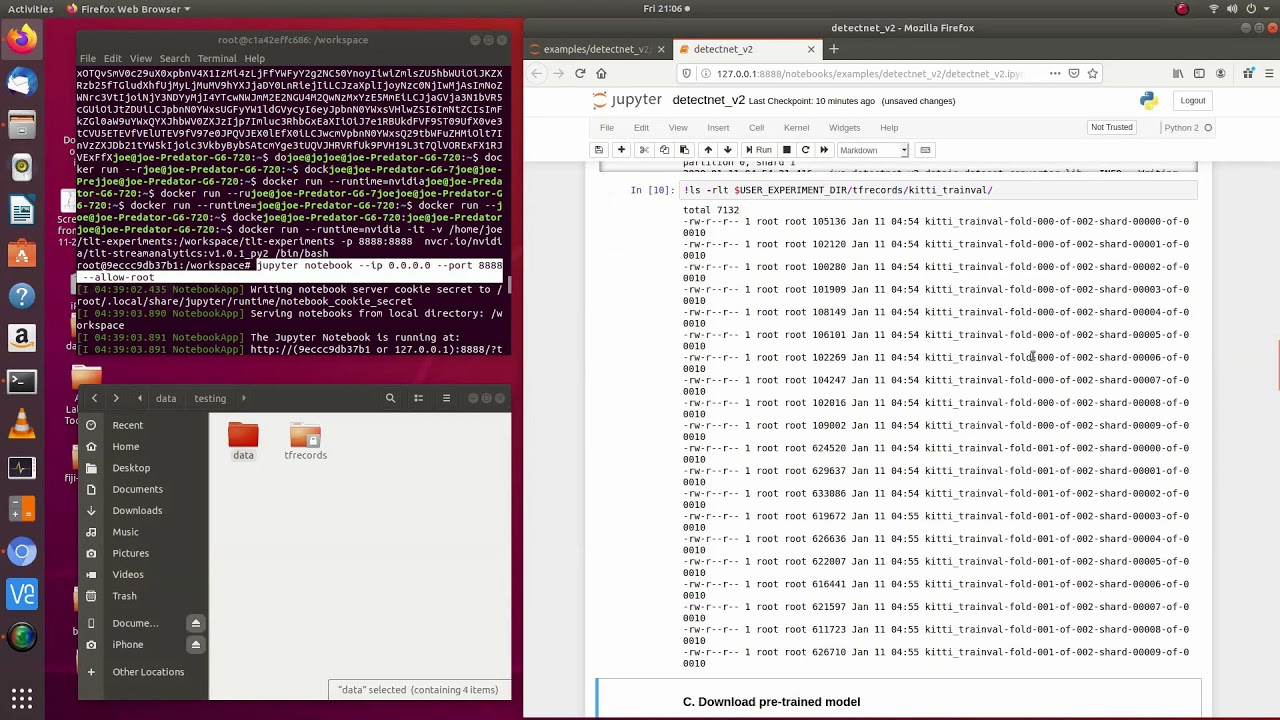

I trained ResNet-18 and ResNet-10 models on the KITTI dataset (the one used in the TLT Jupyter notebook script) with TLT, using the Jupyter notebook under detectnet_v2, and converted the .etlt file to a .trt file with the appropriate tlt-converter. The conversion process is killed on the Nano, but it worked on the TX2. However, the model does not detect anything on a live source or a video, although it does detect objects in pictures in the Jupyter notebook.

I followed the instructions in NVIDIA's Metropolis documentation and also watched and followed this video throughout the whole process:

Thanks

Hi,

Thanks for reporting this to us.

We are checking this internally.

We will share more information with you later.

Moving this topic to the TLT forum for tracking.

@ucuzovaesra

Please provide the link to the video you mentioned in your post. Is it an official video guide?

If possible, please share your DeepStream spec file too.

Thanks, I’m waiting to hear from you soon.

These are the links to the videos that helped me during the process:

All of those videos use the same steps and code as your TLT documentation.

As for the spec file, I did not change anything in the TLT Jupyter notebook scripts or the specs under the detectnet_v2 folder.

Thanks.

@ucuzovaesra

Since the models in the videos you shared above detect objects well, could you please try to narrow down your case by comparing it with the video step by step?

If possible, I suggest you share your DeepStream config files.

Also, the video you shared above covers how to run the TLT 1.0.1 docker and deploy the model into DeepStream 4.0.

This 1.0.1 docker was released last December.

Since then, TLT released the 2.0_dp docker in April and the 2.0 docker just recently. End users should deploy the model into DeepStream 5.0.

From your description, you are deploying the model in DS 5.0, but I am not sure which docker you were using.
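Before sharing the DeepStream config files, a quick self-check of the nvinfer config can help narrow things down. The sketch below is only illustrative: it assumes the config can be read with Python's `configparser`, the list of keys to look for in the `[property]` group is my own assumption about what a detectnet_v2 deployment usually sets rather than an official checklist, and the file names in the sample are hypothetical.

```python
import configparser

# Keys often set in the [property] group of an nvinfer config for a
# TLT detectnet_v2 model. This list is an illustrative assumption,
# not an official checklist.
EXPECTED_KEYS = [
    "net-scale-factor",
    "labelfile-path",
    "num-detected-classes",
    "output-blob-names",
]
# At least one of these should point to the model itself.
MODEL_KEYS = ["tlt-encoded-model", "model-engine-file"]


def check_nvinfer_config(text):
    """Return a list of human-readable problems found in the config text."""
    parser = configparser.ConfigParser(strict=False, interpolation=None)
    parser.read_string(text)
    if not parser.has_section("property"):
        return ["missing [property] group"]
    prop = parser["property"]
    problems = [f"missing key: {key}" for key in EXPECTED_KEYS if key not in prop]
    if not any(key in prop for key in MODEL_KEYS):
        problems.append("no model given (tlt-encoded-model or model-engine-file)")
    # An encoded .etlt model cannot be decoded without its key.
    if "tlt-encoded-model" in prop and "tlt-model-key" not in prop:
        problems.append("tlt-encoded-model set without tlt-model-key")
    return problems


# Hypothetical sample config; file names are placeholders.
SAMPLE = """
[property]
net-scale-factor=0.0039215697906911373
tlt-encoded-model=resnet18_detector.etlt
labelfile-path=labels.txt
num-detected-classes=3
"""

if __name__ == "__main__":
    for problem in check_nvinfer_config(SAMPLE):
        print(problem)
```

A clean result from this check does not mean the config is correct; it only rules out a few omissions. The values themselves (class count, label order, output layer names, model key) still need to match what was used during training and export.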