I tried implementation a ROS2 node following the VPI3.2 sample for Sparse LK Optical Flow,

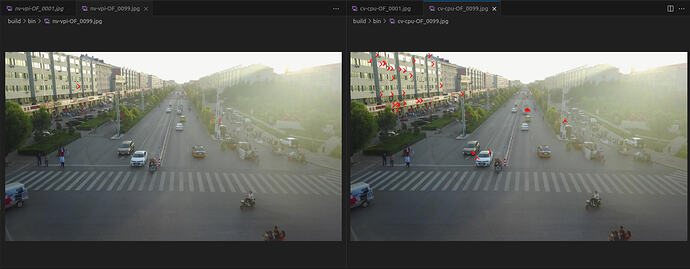

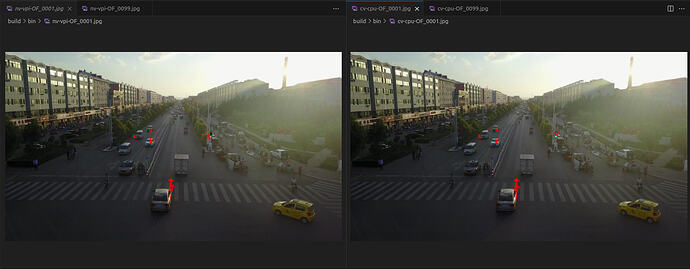

I found that the first few frames of tracking always produce good results but subsequently all fail miserably.

Initially i tried swapping the pyramids, or even regenerating the pyramids each frames but it is still the same. I had tried logging the hash of the inputs (prevPyr, curPyr, prevPts) to the vpiSubmitOpticalFlowPyrLK and it is valid. Changing between CPU and CUDA backend does not work.

Afterwards, I tried comparing with OpenCV side by side with the same input.

These are the observations

Is there any place I should look out for, I don’t think there is any data corruption issue.

Following is the code.

#include <rclcpp/rclcpp.hpp>

#include <cv_bridge/cv_bridge.h>

#include <sensor_msgs/msg/image.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/video/tracking.hpp>

#include <vpi/OpenCVInterop.hpp>

#include <vpi/Array.h>

#include <vpi/Image.h>

#include <vpi/Pyramid.h>

#include <vpi/Status.h>

#include <vpi/Stream.h>

#include <vpi/algo/ConvertImageFormat.h>

#include <vpi/algo/GaussianPyramid.h>

#include <vpi/algo/HarrisCorners.h>

#include <vpi/algo/OpticalFlowPyrLK.h>

#include <algorithm>

#include <numeric>

#include <vector>

#include <mutex>

#include <map>

constexpr int MAX_KEYPOINTS = 200;

constexpr int MAX_HARRIS_CORNERS = 8192;

constexpr float MIN_DIST = 10.0f;

constexpr int MIN_DIST_SQ = 100;

constexpr int WINDOW_SIZE = 21;

constexpr int MAX_ITERATIONS = 30;

constexpr float EPSILON = 0.5f;

#define CHECK_STATUS(STMT) \

do \

{ \

VPIStatus status__ = (STMT); \

if (status__ != VPI_SUCCESS) \

{ \

char buffer[VPI_MAX_STATUS_MESSAGE_LENGTH]; \

vpiGetLastStatusMessage(buffer, sizeof(buffer)); \

std::ostringstream ss; \

ss << vpiStatusGetName(status__) << ": " << buffer; \

RCLCPP_ERROR(rclcpp::get_logger("vpi_node"), "%s", ss.str().c_str()); \

throw std::runtime_error(ss.str()); \

} \

} while (0);

struct TrackState

{

std::vector<int> ids;

std::vector<int> ages;

std::map<int, cv::Point2f> prev_pts_map;

void updateHistory(const std::vector<cv::Point2f> ¤t_points)

{

for (size_t i = 0; i < current_points.size(); ++i)

{

if (i < ids.size())

prev_pts_map[ids[i]] = current_points[i];

}

}

void addTrack(int id, const cv::Point2f &pt)

{

ids.push_back(id);

ages.push_back(0);

prev_pts_map[id] = pt;

}

void incrementAges()

{

for (auto &age : ages)

age++;

}

};

struct VisualData

{

std::vector<cv::Point2f> points;

std::vector<int> ids;

std::vector<int> ages;

std::map<int, cv::Point2f> prev_map;

};

static void SortKeypoints(VPIArray keypoints, VPIArray scores, int max)

{

VPIArrayData ptsData, scoresData;

CHECK_STATUS(vpiArrayLockData(keypoints, VPI_LOCK_READ_WRITE, VPI_ARRAY_BUFFER_HOST_AOS, &ptsData));

CHECK_STATUS(vpiArrayLockData(scores, VPI_LOCK_READ_WRITE, VPI_ARRAY_BUFFER_HOST_AOS, &scoresData));

VPIArrayBufferAOS &aosKeypoints = ptsData.buffer.aos;

VPIArrayBufferAOS &aosScores = scoresData.buffer.aos;

std::vector<int> indices(*aosKeypoints.sizePointer);

std::iota(indices.begin(), indices.end(), 0);

uint8_t *scoreBase = (uint8_t *)aosScores.data;

int32_t scoreStride = aosScores.strideBytes;

std::stable_sort(indices.begin(), indices.end(), [&](int a, int b)

{

uint32_t *scoreA = reinterpret_cast<uint32_t *>(scoreBase + a * scoreStride);

uint32_t *scoreB = reinterpret_cast<uint32_t *>(scoreBase + b * scoreStride);

return *scoreA > *scoreB; });

indices.resize(std::min<size_t>(indices.size(), max));

uint8_t *kptBase = (uint8_t *)aosKeypoints.data;

int32_t kptStride = aosKeypoints.strideBytes;

std::vector<VPIKeypointF32> kpt;

kpt.reserve(indices.size());

for (int idx : indices)

{

kpt.push_back(*reinterpret_cast<VPIKeypointF32 *>(kptBase + idx * kptStride));

}

for (size_t i = 0; i < kpt.size(); ++i)

{

*reinterpret_cast<VPIKeypointF32 *>(kptBase + i * kptStride) = kpt[i];

}

*aosKeypoints.sizePointer = kpt.size();

vpiArrayUnlock(scores);

vpiArrayUnlock(keypoints);

}

class VpiLkNode : public rclcpp::Node

{

public:

VpiLkNode() : Node("vpi_lk_comparison"), initialized_(false), global_id_counter_(0)

{

this->declare_parameter("backend", "cuda");

this->declare_parameter("pyramid_levels", 3);

this->declare_parameter("use_timer", false);

std::string backend_str = this->get_parameter("backend").as_string();

backend_ = (backend_str == "cpu") ? VPI_BACKEND_CPU : VPI_BACKEND_CUDA;

pyr_levels_ = this->get_parameter("pyramid_levels").as_int();

use_timer_ = this->get_parameter("use_timer").as_bool();

sub_ = this->create_subscription<sensor_msgs::msg::Image>(

"image_in", rclcpp::SensorDataQoS(), std::bind(&VpiLkNode::imageCallback, this, std::placeholders::_1));

pub_ = this->create_publisher<sensor_msgs::msg::Image>("tracking_comparison", 10);

debug_pub_ = this->create_publisher<sensor_msgs::msg::Image>("vpi_debug_image", 10);

if (use_timer_)

{

timer_ = this->create_wall_timer(std::chrono::milliseconds(33),

std::bind(&VpiLkNode::timerCallback, this));

}

}

~VpiLkNode()

{

if (stream_)

vpiStreamDestroy(stream_);

if (harris_)

vpiPayloadDestroy(harris_);

if (optflow_)

vpiPayloadDestroy(optflow_);

if (pyrPrevFrame_)

vpiPyramidDestroy(pyrPrevFrame_);

if (pyrCurFrame_)

vpiPyramidDestroy(pyrCurFrame_);

if (imgTempFrame_)

vpiImageDestroy(imgTempFrame_);

if (imgFrame_)

vpiImageDestroy(imgFrame_);

if (curFeatures_)

vpiArrayDestroy(curFeatures_);

if (status_)

vpiArrayDestroy(status_);

if (candidates_)

vpiArrayDestroy(candidates_);

if (candidateScores_)

vpiArrayDestroy(candidateScores_);

}

private:

rclcpp::Subscription<sensor_msgs::msg::Image>::SharedPtr sub_;

rclcpp::Publisher<sensor_msgs::msg::Image>::SharedPtr pub_, debug_pub_;

rclcpp::TimerBase::SharedPtr timer_;

VPIBackend backend_;

bool use_timer_;

int pyr_levels_;

std::mutex data_mutex_;

cv::Mat latest_frame_;

bool has_new_frame_ = false;

int img_width_ = 0;

int img_height_ = 0;

// VPI

bool initialized_;

VPIStream stream_ = NULL;

VPIImage imgTempFrame_ = NULL;

VPIImage imgFrame_ = NULL;

VPIPyramid pyrPrevFrame_ = NULL, pyrCurFrame_ = NULL;

VPIArray curFeatures_ = NULL, status_ = NULL;

VPIArray candidates_ = NULL, candidateScores_ = NULL;

VPIPayload optflow_ = NULL, harris_ = NULL;

VPIOpticalFlowPyrLKParams lkParams_;

TrackState vpi_track_state_;

std::vector<VPIKeypointF32> vpi_prev_features_host_;

cv::Mat prev_frame_bgr_;

// OpenCV

cv::Mat cv_prev_gray_;

std::vector<cv::Point2f> cv_prev_pts_, cv_cur_pts_;

TrackState cv_track_state_;

int global_id_counter_;

void initVpi(int cols, int rows)

{

img_width_ = cols;

img_height_ = rows;

CHECK_STATUS(vpiStreamCreate(backend_, &stream_));

cv::Mat dummy = cv::Mat::zeros(rows, cols, CV_8UC3);

CHECK_STATUS(vpiImageCreateWrapperOpenCVMat(dummy, VPI_BACKEND_ALL, &imgTempFrame_));

CHECK_STATUS(vpiImageCreate(cols, rows, VPI_IMAGE_FORMAT_U8, VPI_BACKEND_ALL, &imgFrame_));

CHECK_STATUS(vpiPyramidCreate(cols, rows, VPI_IMAGE_FORMAT_U8, pyr_levels_, 0.5, backend_, &pyrPrevFrame_));

CHECK_STATUS(vpiPyramidCreate(cols, rows, VPI_IMAGE_FORMAT_U8, pyr_levels_, 0.5, backend_, &pyrCurFrame_));

CHECK_STATUS(vpiArrayCreate(MAX_HARRIS_CORNERS, VPI_ARRAY_TYPE_KEYPOINT_F32, VPI_BACKEND_ALL, &curFeatures_));

CHECK_STATUS(vpiArrayCreate(MAX_HARRIS_CORNERS, VPI_ARRAY_TYPE_U8, VPI_BACKEND_ALL, &status_));

CHECK_STATUS(vpiArrayCreate(MAX_HARRIS_CORNERS, VPI_ARRAY_TYPE_KEYPOINT_F32, VPI_BACKEND_ALL, &candidates_));

CHECK_STATUS(vpiArrayCreate(MAX_HARRIS_CORNERS, VPI_ARRAY_TYPE_U32, VPI_BACKEND_ALL, &candidateScores_));

CHECK_STATUS(vpiCreateOpticalFlowPyrLK(backend_, cols, rows, VPI_IMAGE_FORMAT_U8, pyr_levels_, 0.5, &optflow_));

CHECK_STATUS(vpiInitOpticalFlowPyrLKParams(backend_, &lkParams_));

lkParams_.windowDimension = WINDOW_SIZE;

lkParams_.numIterations = MAX_ITERATIONS;

lkParams_.epsilon = EPSILON;

lkParams_.useInitialFlow = 0;

lkParams_.epsilonType = VPI_LK_ERROR_L1;

lkParams_.termination = VPI_TERMINATION_CRITERIA_ITERATIONS; // | VPI_TERMINATION_CRITERIA_EPSILON;

CHECK_STATUS(vpiCreateHarrisCornerDetector(backend_, cols, rows, &harris_));

initialized_ = true;

}

void imageCallback(const sensor_msgs::msg::Image::ConstSharedPtr msg)

{

try

{

cv::Mat frame = cv_bridge::toCvCopy(msg, "bgr8")->image;

if (use_timer_)

{

std::lock_guard<std::mutex> lock(data_mutex_);

latest_frame_ = frame;

has_new_frame_ = true;

}

else

{

processFrame(frame, msg->header);

}

}

catch (cv_bridge::Exception &e)

{

RCLCPP_ERROR(this->get_logger(), "cv_bridge: %s", e.what());

}

}

void timerCallback()

{

cv::Mat frame;

{

std::lock_guard<std::mutex> lock(data_mutex_);

if (!has_new_frame_)

return;

frame = latest_frame_;

has_new_frame_ = false;

}

std_msgs::msg::Header header;

header.stamp = this->now();

header.frame_id = "camera_frame";

processFrame(frame, header);

}

void processFrame(cv::Mat &cvFrame, const std_msgs::msg::Header &header)

{

if (cvFrame.empty())

return;

if (!initialized_)

initVpi(cvFrame.cols, cvFrame.rows);

cv::Mat gray;

cv::cvtColor(cvFrame, gray, cv::COLOR_BGR2GRAY);

// === FIRST FRAME ===

if (cv_prev_gray_.empty())

{

cv_prev_gray_ = gray.clone();

prev_frame_bgr_ = cvFrame.clone();

detectNewFeatures(cvFrame);

extractVpiFeaturesToHost();

cv_prev_pts_ = cv_cur_pts_;

return;

}

// 1. Build pyramids fresh each frame

CHECK_STATUS(vpiImageSetWrappedOpenCVMat(imgTempFrame_, prev_frame_bgr_));

CHECK_STATUS(vpiSubmitConvertImageFormat(stream_, backend_, imgTempFrame_, imgFrame_, NULL));

CHECK_STATUS(vpiSubmitGaussianPyramidGenerator(stream_, backend_, imgFrame_, pyrPrevFrame_, VPI_BORDER_CLAMP));

CHECK_STATUS(vpiStreamSync(stream_));

CHECK_STATUS(vpiImageSetWrappedOpenCVMat(imgTempFrame_, cvFrame));

CHECK_STATUS(vpiSubmitConvertImageFormat(stream_, backend_, imgTempFrame_, imgFrame_, NULL));

CHECK_STATUS(vpiSubmitGaussianPyramidGenerator(stream_, backend_, imgFrame_, pyrCurFrame_, VPI_BORDER_CLAMP));

CHECK_STATUS(vpiStreamSync(stream_));

publishDebugImage(imgFrame_, header);

// 2. Run optical flow if we have previous features

std::vector<cv::Point2f> vpi_cur_pts;

std::vector<uint8_t> vpi_status_host;

if (!vpi_prev_features_host_.empty())

{

VPIArray prevFeatures = NULL;

int32_t nSize = static_cast<int32_t>(vpi_prev_features_host_.size());

VPIArrayData arraydata;

arraydata.bufferType = VPI_ARRAY_BUFFER_HOST_AOS;

arraydata.buffer.aos.type = VPI_ARRAY_TYPE_KEYPOINT_F32;

arraydata.buffer.aos.capacity = nSize;

arraydata.buffer.aos.sizePointer = &nSize;

arraydata.buffer.aos.data = vpi_prev_features_host_.data();

CHECK_STATUS(vpiArrayCreateWrapper(&arraydata, backend_, &prevFeatures));

CHECK_STATUS(vpiSubmitOpticalFlowPyrLK(stream_, backend_, optflow_,

pyrPrevFrame_, pyrCurFrame_,

prevFeatures, curFeatures_,

status_, &lkParams_));

CHECK_STATUS(vpiStreamSync(stream_));

// Download status

VPIArrayData statusData;

CHECK_STATUS(vpiArrayLockData(status_, VPI_LOCK_READ, VPI_ARRAY_BUFFER_HOST_AOS, &statusData));

int statusN = *statusData.buffer.aos.sizePointer;

uint8_t *statusPtr = (uint8_t *)statusData.buffer.aos.data;

vpi_status_host.assign(statusPtr, statusPtr + statusN);

CHECK_STATUS(vpiArrayUnlock(status_));

// Prune and extract

pruneAndExtractVPI(vpi_status_host, vpi_cur_pts);

vpiArrayDestroy(prevFeatures);

}

vpi_track_state_.incrementAges();

// 3. OpenCV optical flow

std::vector<uchar> cv_status;

std::vector<float> cv_err;

if (!cv_prev_pts_.empty())

{

cv::calcOpticalFlowPyrLK(cv_prev_gray_, gray, cv_prev_pts_, cv_cur_pts_,

cv_status, cv_err,

cv::Size(WINDOW_SIZE, WINDOW_SIZE),

pyr_levels_ - 1,

cv::TermCriteria(cv::TermCriteria::COUNT + cv::TermCriteria::EPS,

MAX_ITERATIONS, EPSILON));

}

PruneTracksCV(cv_cur_pts_, cv_status, cv_track_state_);

cv_track_state_.incrementAges();

// 4. Extract visualization data

VisualData vpiData = extractVpiVisData(vpi_cur_pts);

VisualData cvData = extractCvVisData();

size_t vpi_tracked = vpi_cur_pts.size();

size_t cv_tracked = cv_cur_pts_.size();

// 5. Replenish

if (vpi_cur_pts.size() < static_cast<size_t>(MAX_KEYPOINTS))

{

replenishFeaturesOnCurrentFrame(cvFrame, vpi_cur_pts);

}

RCLCPP_INFO_THROTTLE(this->get_logger(), *this->get_clock(), 1000,

"VPI: %zu tracked -> %zu after replenish, CV: %zu tracked -> %zu after replenish",

vpi_tracked, vpi_cur_pts.size(), cv_tracked, cv_cur_pts_.size());

// 6. Draw & Publish

drawAndPublish(cvFrame, vpiData, cvData, header);

// 7. Update history with tracked + replenished points

vpi_track_state_.updateHistory(vpi_cur_pts);

cv_track_state_.updateHistory(cv_cur_pts_);

// 8. Prepare for next frame

vpi_prev_features_host_.clear();

vpi_prev_features_host_.reserve(vpi_cur_pts.size());

for (const auto &pt : vpi_cur_pts)

{

VPIKeypointF32 kp;

kp.x = pt.x;

kp.y = pt.y;

vpi_prev_features_host_.push_back(kp);

}

// 9. Store current frame as previous for next iteration

cv_prev_gray_ = gray.clone();

cv_prev_pts_ = cv_cur_pts_;

prev_frame_bgr_ = cvFrame.clone();

// RCLCPP_INFO_THROTTLE(this->get_logger(), *this->get_clock(), 1000,

// "VPI: %zu tracked, CV: %zu tracked", vpi_cur_pts.size(), cv_cur_pts_.size());

}

void replenishFeaturesOnCurrentFrame(cv::Mat &cvFrame, std::vector<cv::Point2f> &vpi_pts)

{

VPIHarrisCornerDetectorParams harrisParams;

CHECK_STATUS(vpiInitHarrisCornerDetectorParams(&harrisParams));

harrisParams.strengthThresh = 0.01f;

harrisParams.sensitivity = 0.01f;

harrisParams.minNMSDistance = 8.0f;

CHECK_STATUS(vpiSubmitHarrisCornerDetector(stream_, backend_, harris_, imgFrame_,

candidates_, candidateScores_, &harrisParams));

CHECK_STATUS(vpiStreamSync(stream_));

SortKeypoints(candidates_, candidateScores_, MAX_KEYPOINTS);

VPIArrayData candData;

CHECK_STATUS(vpiArrayLockData(candidates_, VPI_LOCK_READ, VPI_ARRAY_BUFFER_HOST_AOS, &candData));

int candN = *candData.buffer.aos.sizePointer;

auto *candBase = (VPIKeypointF32 *)candData.buffer.aos.data;

size_t startN = vpi_pts.size();

size_t curN = vpi_pts.size();

int rejectedTooClose = 0;

for (int i = 0; i < candN && curN < static_cast<size_t>(MAX_KEYPOINTS); ++i)

{

VPIKeypointF32 candPt = candBase[i];

bool isFar = true;

for (const auto &pt : vpi_pts)

{

float dx = candPt.x - pt.x;

float dy = candPt.y - pt.y;

if ((dx * dx + dy * dy) < MIN_DIST_SQ)

{

isFar = false;

rejectedTooClose++;

break;

}

}

if (isFar)

{

cv::Point2f newPt(candPt.x, candPt.y);

vpi_pts.push_back(newPt);

int new_id = global_id_counter_++;

vpi_track_state_.addTrack(new_id, newPt);

cv_cur_pts_.push_back(newPt);

cv_track_state_.addTrack(new_id, newPt);

curN++;

}

}

CHECK_STATUS(vpiArrayUnlock(candidates_));

// RCLCPP_INFO(this->get_logger(),

// "Replenish: Harris found %d candidates, had %zu pts, added %zu, rejected %d (too close), now %zu",

// candN, startN, curN - startN, rejectedTooClose, vpi_pts.size());

}

void detectNewFeatures(cv::Mat &cvFrame)

{

CHECK_STATUS(vpiImageSetWrappedOpenCVMat(imgTempFrame_, cvFrame));

CHECK_STATUS(vpiSubmitConvertImageFormat(stream_, backend_, imgTempFrame_, imgFrame_, NULL));

CHECK_STATUS(vpiStreamSync(stream_));

VPIHarrisCornerDetectorParams harrisParams;

CHECK_STATUS(vpiInitHarrisCornerDetectorParams(&harrisParams));

harrisParams.strengthThresh = 10.0f;

harrisParams.sensitivity = 0.01f;

harrisParams.minNMSDistance = 8.0f;

CHECK_STATUS(vpiSubmitHarrisCornerDetector(stream_, backend_, harris_, imgFrame_,

candidates_, candidateScores_, &harrisParams));

CHECK_STATUS(vpiStreamSync(stream_));

SortKeypoints(candidates_, candidateScores_, MAX_KEYPOINTS);

VPIArrayData candData, curData;

CHECK_STATUS(vpiArrayLockData(candidates_, VPI_LOCK_READ, VPI_ARRAY_BUFFER_HOST_AOS, &candData));

CHECK_STATUS(vpiArrayLockData(curFeatures_, VPI_LOCK_READ_WRITE, VPI_ARRAY_BUFFER_HOST_AOS, &curData));

int candN = *candData.buffer.aos.sizePointer;

auto *candBase = (VPIKeypointF32 *)candData.buffer.aos.data;

auto *curBase = (VPIKeypointF32 *)curData.buffer.aos.data;

int count = std::min(candN, MAX_KEYPOINTS);

for (int i = 0; i < count; ++i)

{

curBase[i] = candBase[i];

int new_id = global_id_counter_++;

vpi_track_state_.addTrack(new_id, cv::Point2f(candBase[i].x, candBase[i].y));

cv_cur_pts_.push_back(cv::Point2f(candBase[i].x, candBase[i].y));

cv_track_state_.addTrack(new_id, cv::Point2f(candBase[i].x, candBase[i].y));

}

*curData.buffer.aos.sizePointer = count;

CHECK_STATUS(vpiArrayUnlock(candidates_));

CHECK_STATUS(vpiArrayUnlock(curFeatures_));

}

void extractVpiFeaturesToHost()

{

VPIArrayData curData;

CHECK_STATUS(vpiArrayLockData(curFeatures_, VPI_LOCK_READ, VPI_ARRAY_BUFFER_HOST_AOS, &curData));

int n = *curData.buffer.aos.sizePointer;

auto *base = (VPIKeypointF32 *)curData.buffer.aos.data;

vpi_prev_features_host_.clear();

vpi_prev_features_host_.reserve(n);

for (int i = 0; i < n; ++i)

{

vpi_prev_features_host_.push_back(base[i]);

}

CHECK_STATUS(vpiArrayUnlock(curFeatures_));

}

void pruneAndExtractVPI(const std::vector<uint8_t> &status, std::vector<cv::Point2f> &out_pts)

{

VPIArrayData curData;

CHECK_STATUS(vpiArrayLockData(curFeatures_, VPI_LOCK_READ, VPI_ARRAY_BUFFER_HOST_AOS, &curData));

int n = *curData.buffer.aos.sizePointer;

auto *base = (VPIKeypointF32 *)curData.buffer.aos.data;

out_pts.clear();

std::vector<int> nextIds, nextAges;

int trackedCount = 0, lostCount = 0, oobCount = 0;

// Get image dimensions

int imgW = img_width_;

int imgH = img_height_;

for (int i = 0; i < n && i < (int)status.size(); ++i)

{

if (status[i] == 0 && i < (int)vpi_track_state_.ids.size())

{

// Check if point is within image bounds

float x = base[i].x;

float y = base[i].y;

if (x < 0 || x >= imgW || y < 0 || y >= imgH)

{

oobCount++;

continue; // Skip out-of-bounds points

}

out_pts.push_back(cv::Point2f(x, y));

nextIds.push_back(vpi_track_state_.ids[i]);

nextAges.push_back(vpi_track_state_.ages[i]);

trackedCount++;

}

else

{

lostCount++;

}

}

CHECK_STATUS(vpiArrayUnlock(curFeatures_));

RCLCPP_INFO(this->get_logger(), "VPI prune: %d tracked, %d lost, %d OOB",

trackedCount, lostCount, oobCount);

vpi_track_state_.ids = nextIds;

vpi_track_state_.ages = nextAges;

}

void replenishFeatures(cv::Mat &cvFrame, std::vector<cv::Point2f> &vpi_pts)

{

CHECK_STATUS(vpiImageSetWrappedOpenCVMat(imgTempFrame_, cvFrame));

CHECK_STATUS(vpiSubmitConvertImageFormat(stream_, backend_, imgTempFrame_, imgFrame_, NULL));

CHECK_STATUS(vpiStreamSync(stream_));

VPIHarrisCornerDetectorParams harrisParams;

CHECK_STATUS(vpiInitHarrisCornerDetectorParams(&harrisParams));

harrisParams.strengthThresh = 10.0f;

harrisParams.sensitivity = 0.01f;

harrisParams.minNMSDistance = 8.0f;

CHECK_STATUS(vpiSubmitHarrisCornerDetector(stream_, backend_, harris_, imgFrame_,

candidates_, candidateScores_, &harrisParams));

CHECK_STATUS(vpiStreamSync(stream_));

SortKeypoints(candidates_, candidateScores_, MAX_KEYPOINTS);

VPIArrayData candData;

CHECK_STATUS(vpiArrayLockData(candidates_, VPI_LOCK_READ, VPI_ARRAY_BUFFER_HOST_AOS, &candData));

int candN = *candData.buffer.aos.sizePointer;

auto *candBase = (VPIKeypointF32 *)candData.buffer.aos.data;

int curN = vpi_pts.size();

for (int i = 0; i < candN && curN < MAX_KEYPOINTS; ++i)

{

VPIKeypointF32 candPt = candBase[i];

bool isFar = true;

for (const auto &pt : vpi_pts)

{

float dx = candPt.x - pt.x;

float dy = candPt.y - pt.y;

if ((dx * dx + dy * dy) < MIN_DIST_SQ)

{

isFar = false;

break;

}

}

if (isFar)

{

cv::Point2f newPt(candPt.x, candPt.y);

vpi_pts.push_back(newPt);

int new_id = global_id_counter_++;

vpi_track_state_.addTrack(new_id, newPt);

cv_cur_pts_.push_back(newPt);

cv_track_state_.addTrack(new_id, newPt);

curN++;

}

}

CHECK_STATUS(vpiArrayUnlock(candidates_));

}

void PruneTracksCV(std::vector<cv::Point2f> &pts, const std::vector<uchar> &status, TrackState &state)

{

if (status.empty())

return;

size_t writeIdx = 0;

for (size_t i = 0; i < pts.size() && i < status.size(); ++i)

{

if (status[i] == 1 && i < state.ids.size())

{

if (writeIdx != i)

{

pts[writeIdx] = pts[i];

state.ids[writeIdx] = state.ids[i];

state.ages[writeIdx] = state.ages[i];

}

writeIdx++;

}

}

pts.resize(writeIdx);

state.ids.resize(writeIdx);

state.ages.resize(writeIdx);

}

VisualData extractVpiVisData(const std::vector<cv::Point2f> &pts)

{

VisualData data;

data.points = pts;

data.ids = vpi_track_state_.ids;

data.ages = vpi_track_state_.ages;

data.prev_map = vpi_track_state_.prev_pts_map;

return data;

}

VisualData extractCvVisData()

{

VisualData data;

data.points = cv_cur_pts_;

data.ids = cv_track_state_.ids;

data.ages = cv_track_state_.ages;

data.prev_map = cv_track_state_.prev_pts_map;

return data;

}

void publishDebugImage(VPIImage vpiImg, const std_msgs::msg::Header &header)

{

if (debug_pub_->get_subscription_count() == 0)

return;

VPIImageFormat fmt;

vpiImageGetFormat(vpiImg, &fmt);

int w, h;

vpiImageGetSize(vpiImg, &w, &h);

cv::Mat outMat;

if (fmt == VPI_IMAGE_FORMAT_U8)

outMat.create(h, w, CV_8UC1);

else

return;

VPIImageData data;

vpiImageLockData(vpiImg, VPI_LOCK_READ, VPI_IMAGE_BUFFER_HOST_PITCH_LINEAR, &data);

for (int i = 0; i < h; ++i)

{

memcpy(outMat.ptr(i), (uint8_t *)data.buffer.pitch.planes[0].data + i * data.buffer.pitch.planes[0].pitchBytes, w);

}

vpiImageUnlock(vpiImg);

debug_pub_->publish(*cv_bridge::CvImage(header, "mono8", outMat).toImageMsg());

}

void drawAndPublish(const cv::Mat &src, const VisualData &vpiData, const VisualData &cvData,

const std_msgs::msg::Header &header)

{

cv::Mat vpiImg = src.clone();

cv::Mat cvImg = src.clone();

auto draw = [&](cv::Mat &img, const VisualData &d, const std::string &lbl)

{

cv::putText(img, lbl + ": " + std::to_string(d.points.size()), cv::Point(10, 30),

cv::FONT_HERSHEY_SIMPLEX, 0.7, cv::Scalar(0, 255, 0), 2);

for (size_t i = 0; i < d.points.size(); ++i)

{

if (i >= d.ages.size() || i >= d.ids.size())

continue;

double len = std::min(1.0, 1.0 * d.ages[i] / 50.0);

cv::Scalar color(255 * (1 - len), 0, 255 * len);

cv::circle(img, d.points[i], 2, color, 2);

int id = d.ids[i];

auto iter = d.prev_map.find(id);

if (iter != d.prev_map.end())

{

cv::arrowedLine(img, iter->second, d.points[i], cv::Scalar(0, 255, 0), 1, cv::LINE_8, 0, 0.3f);

}

}

};

draw(vpiImg, vpiData, "VPI (CUDA)");

draw(cvImg, cvData, "OpenCV (CPU)");

cv::Mat combined;

cv::hconcat(vpiImg, cvImg, combined);

pub_->publish(*cv_bridge::CvImage(header, "bgr8", combined).toImageMsg());

}

};

int main(int argc, char **argv)

{

rclcpp::init(argc, argv);

rclcpp::spin(std::make_shared<VpiLkNode>());

rclcpp::shutdown();

return 0;

}