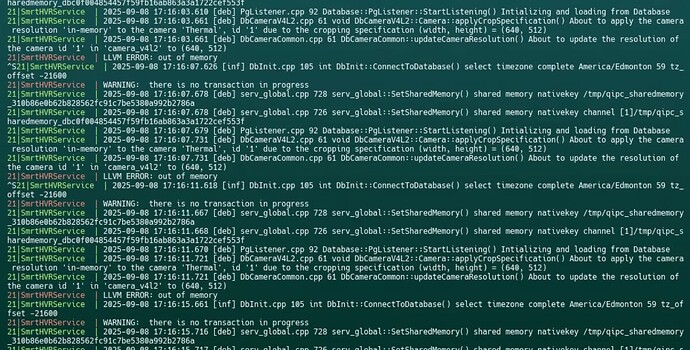

@DaneLLL, thanks for your reply. I rebuilt OpenCV without opencv_world, which meant modifying our C++ projects to include and link the individual OpenCV libraries. I am now stuck converting the YOLOv8 nano model from ONNX format (a 13 MB file) to a TensorRT engine file. The C++ code we have been using since YOLOv4, and more recently with YOLOv8 on JetPack 5.1.2, fails on JetPack 6.2 with an out-of-memory error. I also tried a more up-to-date Python 3 script for the same conversion, but it fails with the same error after a few minutes.

This is the script:

onnx_to_tensorrt.py.txt (8.7 KB)

yolov8n_1_3_640_640.onnx.txt (12.2 MB)

```
python3 onnx_to_tensorrt.py --fp16 --workspace 512 --force
```
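For context, the core of an ONNX-to-engine build with the TensorRT 10 Python API looks roughly like the sketch below. It is an approximation assuming the standard `tensorrt` Python bindings shipped with JetPack 6.2, not the attached script itself, and `mib_to_bytes` and `build_engine` are hypothetical names. The `--workspace 512` option corresponds to a WORKSPACE memory pool limit expressed in bytes.

```python
"""Minimal ONNX -> TensorRT engine build sketch (assumes TensorRT 10.x Python bindings)."""
import sys

try:
    import tensorrt as trt
except ImportError:  # keeps the module importable (and mib_to_bytes usable) without TensorRT
    trt = None


def mib_to_bytes(mib: int) -> int:
    """Convert a size in MiB (as passed to --workspace) to bytes."""
    return mib << 20


def build_engine(onnx_path: str, engine_path: str,
                 workspace_mib: int = 512, fp16: bool = True) -> bool:
    """Parse an ONNX file, build a serialized engine, and write it to engine_path."""
    if trt is None:
        raise RuntimeError("tensorrt Python bindings are not installed")
    logger = trt.Logger(trt.Logger.INFO)
    builder = trt.Builder(logger)
    # TensorRT 10 networks are always explicit-batch, so no creation flags are passed.
    network = builder.create_network(0)
    parser = trt.OnnxParser(network, logger)
    with open(onnx_path, "rb") as f:
        if not parser.parse(f.read()):
            for i in range(parser.num_errors):
                print(parser.get_error(i), file=sys.stderr)
            return False

    config = builder.create_builder_config()
    # Bounds the temporary (scratch) memory TensorRT may use inside a layer's
    # implementation; it does not bound the total memory of the builder process.
    config.set_memory_pool_limit(trt.MemoryPoolType.WORKSPACE, mib_to_bytes(workspace_mib))
    if fp16:
        config.set_flag(trt.BuilderFlag.FP16)

    serialized = builder.build_serialized_network(network, config)
    if serialized is None:  # build failed, e.g. with an out-of-memory error
        return False
    with open(engine_path, "wb") as f:
        f.write(serialized)
    return True
```

Usage would be along the lines of `build_engine("yolov8n_1_3_640_640.onnx", "yolov8n_1_3_640_640_fp16_jp62.engine", workspace_mib=512)`. Because the workspace limit only caps per-layer scratch memory, lowering it is not expected to shrink the memory the builder itself needs for its CUDA context and kernel library.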

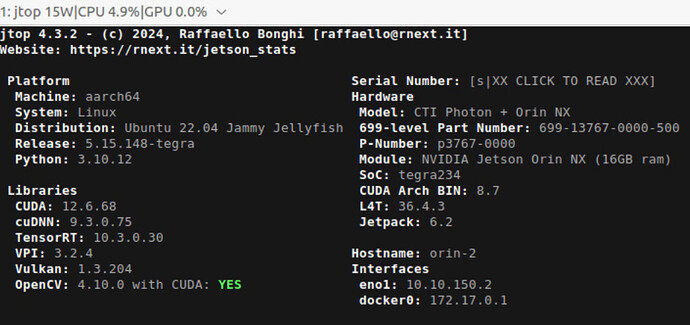

```text
[2025-09-10 03:55:38,959] [INFO] === System Information ===
[2025-09-10 03:55:38,959] [INFO] TensorRT version: 10.3.0
[2025-09-10 03:55:38,960] [INFO] pynvml not available, skipping GPU memory info
[2025-09-10 03:55:38,960] [INFO] ===============================
[2025-09-10 03:55:38,960] [INFO] Starting TensorRT engine conversion...
[2025-09-10 03:55:38,960] [INFO] Input ONNX: /home/intelliview/git/hvr7/SmrtHVR/3rdParty/YoloData/Sources/yolov8n_1_3_640_640.onnx
[2025-09-10 03:55:38,960] [INFO] Output Engine: yolov8n_1_3_640_640_fp16_jp62.engine
[2025-09-10 03:55:38,960] [INFO] Workspace Size: 512 MB
[2025-09-10 03:55:38,960] [INFO] Precision: FP16=True, INT8=False
[09/10/2025-03:55:39] [TRT] [I] [MemUsageChange] Init CUDA: CPU +13, GPU +0, now: CPU 35, GPU 4562 (MiB)
[09/10/2025-03:55:41] [TRT] [I] [MemUsageChange] Init builder kernel library: CPU +927, GPU +754, now: CPU 1005, GPU 5360 (MiB)
[2025-09-10 03:55:41,351] [INFO] Applying Jetson Orin NX memory optimizations...
[2025-09-10 03:55:41,351] [INFO] Workspace memory limit: 512 MB
[2025-09-10 03:55:41,351] [INFO] Tactic shared memory: 128 MB
[2025-09-10 03:55:41,351] [INFO] FP16 precision enabled
[2025-09-10 03:55:41,352] [INFO] Parsing ONNX model...
[2025-09-10 03:55:41,385] [INFO] ONNX model parsed successfully
[2025-09-10 03:55:41,385] [INFO] Network inputs: 1
[2025-09-10 03:55:41,385] [INFO] Network outputs: 1
[2025-09-10 03:55:41,385] [INFO] Input 0: images - Shape: (1, 3, 640, 640) - Dtype: DataType.FLOAT
[2025-09-10 03:55:41,386] [INFO] Output 0: output0 - Shape: (1, 84, 8400) - Dtype: DataType.FLOAT
```
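For scale, the input and output tensors reported in the log are small. The sketch below computes their dense sizes from the logged shapes (FP32 uses 4 bytes per element, FP16 uses 2); `TENSORS`, `BYTES_PER_ELEMENT`, and `tensor_bytes` are hypothetical names used only for illustration.

```python
from math import prod

# Shapes copied from the "Input 0" / "Output 0" lines of the log.
TENSORS = {
    "images": (1, 3, 640, 640),
    "output0": (1, 84, 8400),
}
# Bytes per element for the two precisions relevant here.
BYTES_PER_ELEMENT = {"FLOAT": 4, "HALF": 2}


def tensor_bytes(shape, dtype="FLOAT"):
    """Size in bytes of a dense tensor with the given shape and data type."""
    return prod(shape) * BYTES_PER_ELEMENT[dtype]


if __name__ == "__main__":
    for name, shape in TENSORS.items():
        nbytes = tensor_bytes(shape)
        print(f"{name}: {shape} -> {nbytes} bytes ({nbytes / 2**20:.2f} MiB)")
```

Both tensors come to under 5 MiB in FP32 (4,915,200 bytes for `images`, 2,822,400 bytes for `output0`), so the I/O buffers alone cannot account for the hundreds of MiB of GPU memory in play during the build.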

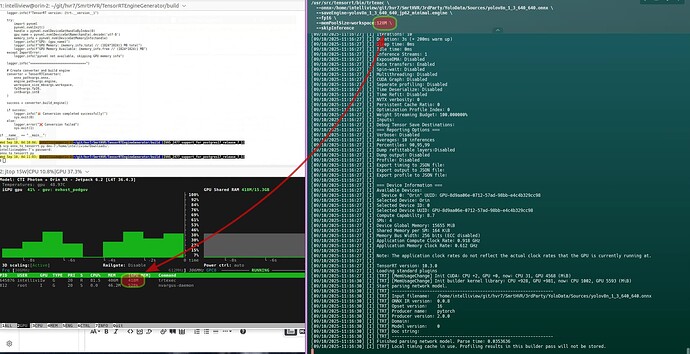

```text
[2025-09-10 03:55:41,386] [INFO] Building TensorRT engine... This may take several minutes.
[09/10/2025-03:55:41] [TRT] [I] Local timing cache in use. Profiling results in this builder pass will not be stored.
[09/10/2025-04:04:19] [TRT] [I] Detected 1 inputs and 3 output network tensors.
[09/10/2025-04:04:20] [TRT] [E] [defaultAllocator.cpp::allocateAsync::48] Error Code 1: Cuda Runtime (out of memory)
[09/10/2025-04:04:20] [TRT] [W] Requested amount of GPU memory (512 bytes) could not be allocated. There may not be enough free memory for allocation to succeed.
[09/10/2025-04:04:20] [TRT] [E] [wtsEngineRtUtils.cpp::executeWtsEngine::159] Error Code 2: OutOfMemory (Requested size was 512 bytes.)
[2025-09-10 04:04:20,371] [ERROR] Failed to build TensorRT engine
[2025-09-10 04:04:20,846] [ERROR] ❌ Conversion failed
```
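The `[MemUsageChange]` lines are TensorRT's own accounting of memory at each stage. In this run, the reported GPU figure is already 4562 MiB right after CUDA initialization and 5360 MiB once the builder kernel library is loaded, before any tactics are timed. A small parser like the following could make it easier to compare these figures between the JetPack 5.1.2 and 6.2 runs. It is a sketch: the regex assumes the exact line format shown in this log (and that the older TensorRT prints the same format), and `parse_mem_usage` is a hypothetical helper.

```python
import re

# Matches lines such as:
# [MemUsageChange] Init CUDA: CPU +13, GPU +0, now: CPU 35, GPU 4562 (MiB)
MEM_RE = re.compile(
    r"\[MemUsageChange\]\s+(?P<stage>[^:]+):\s+"
    r"CPU (?P<cpu_delta>[+-]?\d+), GPU (?P<gpu_delta>[+-]?\d+), "
    r"now: CPU (?P<cpu_now>\d+), GPU (?P<gpu_now>\d+) \(MiB\)"
)


def parse_mem_usage(log_text):
    """Return one dict per [MemUsageChange] line; numeric values are in MiB."""
    rows = []
    for m in MEM_RE.finditer(log_text):
        row = {k: int(v) for k, v in m.groupdict().items() if k != "stage"}
        row["stage"] = m.group("stage").strip()
        rows.append(row)
    return rows


SAMPLE = """\
[09/10/2025-03:55:39] [TRT] [I] [MemUsageChange] Init CUDA: CPU +13, GPU +0, now: CPU 35, GPU 4562 (MiB)
[09/10/2025-03:55:41] [TRT] [I] [MemUsageChange] Init builder kernel library: CPU +927, GPU +754, now: CPU 1005, GPU 5360 (MiB)
"""

if __name__ == "__main__":
    for row in parse_mem_usage(SAMPLE):
        print(f"{row['stage']}: GPU now {row['gpu_now']} MiB (delta {row['gpu_delta']:+d})")
```

Running it over the full output of a verbose build (for example `trtexec --verbose`) may surface additional `[MemUsageChange]` stages that show where memory grows before the failure.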

It appears that something is wrong with the memory allocation used by TensorRT: the build fails even though the failing request is only 512 bytes.

What are my options at this point?

The same C++ code performed this conversion successfully with JP 5.1.2 on exactly the same hardware. The Python script reproduces the same memory allocation failure with TensorRT 10.3.0:

```text
[09/10/2025-04:15:28] [TRT] [E] [defaultAllocator.cpp::allocateAsync::48] Error Code 1: Cuda Runtime (out of memory)
[09/10/2025-04:15:28] [TRT] [E] [wtsEngineRtUtils.cpp::executeWtsEngine::159] Error Code 2: OutOfMemory (Requested size was 512 bytes.)
```

Thanks,

Pablo

P.S.: I also tried the trtexec tool for the YOLOv8 ONNX-to-engine conversion and got the same OutOfMemory error. The command line is below. I also tried smaller and larger memory pool sizes, with no effect on the error.

```
/usr/src/tensorrt/bin/trtexec \
    --onnx=/home/intelliview/git/hvr7/SmrtHVR/3rdParty/YoloData/Sources/yolov8n_1_3_640_640.onnx \
    --saveEngine=yolov8n_1_3_640_640_jp62.engine \
    --fp16 \
    --memPoolSize=workspace:512M
```
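To make the `--memPoolSize` units explicit, the sketch below converts a pool spec to byte counts. It assumes the syntax described by `trtexec --help` in recent TensorRT releases: a comma-separated list of `pool:size` entries, where the size may carry a base-2 suffix (B, K, M, G) and is read as MiB when no suffix is given. `parse_mem_pool_size` is a hypothetical helper, not part of trtexec.

```python
import re

# Assumed base-2 suffixes for --memPoolSize sizes; a bare number is treated as MiB.
UNIT = {"B": 1, "K": 1 << 10, "M": 1 << 20, "G": 1 << 30}
POOL_RE = re.compile(r"^(?P<pool>\w+):(?P<size>\d+)(?P<unit>[BKMG]?)$")


def parse_mem_pool_size(spec):
    """Parse a spec such as 'workspace:512M' into {pool_name: limit_in_bytes}."""
    pools = {}
    for item in spec.split(","):
        m = POOL_RE.match(item.strip())
        if m is None:
            raise ValueError(f"unrecognized pool spec: {item!r}")
        scale = UNIT[m.group("unit") or "M"]  # default unit is MiB
        pools[m.group("pool")] = int(m.group("size")) * scale
    return pools


if __name__ == "__main__":
    for spec in ("workspace:512M", "workspace:128M"):
        print(spec, "->", parse_mem_pool_size(spec))
```

Under that reading, `workspace:512M` is a 536,870,912-byte upper bound on the workspace pool only; it is a limit rather than a reservation, and it does not cover the CUDA context, kernel library, or weights.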

How does the trtexec `memPoolSize` I request relate to the GPU memory actually used? Even when I lowered it to 128 MB, jtop reported much higher GPU memory usage (~420 MB), reaching around 520 MB when the build hits the error and stops.
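Since the Orin NX's integrated GPU shares system DRAM with the CPU, and pynvml was not available in the Python run, one rough way to see how much headroom the build really leaves is to poll `MemAvailable` from `/proc/meminfo` while trtexec runs. This is a sketch under those assumptions; `parse_meminfo`, `mem_available_mib`, and `run_and_watch` are hypothetical helpers, and a 1-second sampling interval can miss short spikes.

```python
import subprocess
import time


def parse_meminfo(text):
    """Parse /proc/meminfo content into {field_name: value_in_kB (or count)}."""
    info = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields and fields[0].isdigit():
            info[key.strip()] = int(fields[0])
    return info


def mem_available_mib(path="/proc/meminfo"):
    """Current MemAvailable in MiB (shared by CPU and iGPU on Jetson)."""
    with open(path) as f:
        return parse_meminfo(f.read())["MemAvailable"] // 1024


def run_and_watch(cmd, interval_s=1.0):
    """Run cmd; return (exit code, lowest MemAvailable in MiB seen while it ran)."""
    proc = subprocess.Popen(cmd)
    lowest = mem_available_mib()
    while proc.poll() is None:
        lowest = min(lowest, mem_available_mib())
        time.sleep(interval_s)
    return proc.returncode, lowest
```

For example, `run_and_watch(["/usr/src/tensorrt/bin/trtexec", "--onnx=yolov8n_1_3_640_640.onnx", "--fp16", "--memPoolSize=workspace:128M"])` would report whether available system memory actually approaches zero before the out-of-memory error, independently of what jtop shows for the GPU.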