Greetings to everyone!

First of all, please pardon my ignorance: I have only a basic understanding of networking, and my question might seem stupid. The hardware is actually OEM (HPE), but I’m posting my question here because I find this forum really helpful.

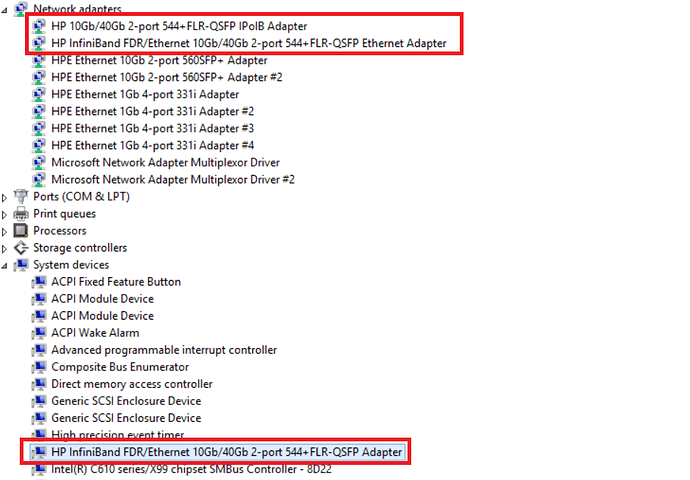

So, here is the thing: I need to connect two servers using ConnectX-3 Pro HCAs, without an IB switch, to run MPI applications with RDMA on Windows Server 2012 R2. To get the IB network running, I follow the normal procedure: install the driver and WinOF, then create the OpenSM service. At first everything works properly, and MPI tests show the expected latency (~2 µs) and bandwidth (~4500 MB/s).

The problems arise when the servers are rebooted: port 1 on both HCAs always negotiates the Ethernet protocol when the cable is plugged in during system boot, regardless of the per-port UEFI setting (which strictly corresponds to the mlxconfig setting). For example, when the port 1 cable is plugged in and port 2 is unplugged, the configuration becomes Port 1 - Eth, Port 2 - IB. This is how it looks in Device Manager:

I’m unable to change that, because the driver settings are grayed out:

The availability of the port protocol setting for both ports depends on the UEFI (= mlxconfig) setting. For the first port it also depends on cable presence: when the cable is plugged in during system boot, Port 1 is always grayed out with “Eth” selected, regardless of the UEFI (= mlxconfig) setting. Restarting the bus driver (disabling and re-enabling the IB adapter system device in Device Manager) doesn’t help. When the cable is unplugged and the port protocol is set to VPI, the setting is available. It also becomes available right after the cable is unplugged from a running system, and the IB network then works normally on the first port until the next reboot.

It seems that the Windows driver ignores the first port’s setting from the firmware. I would greatly appreciate any ideas as to why that may happen. Currently, the workaround is to unplug the cable from port 1 on both HCAs. I suspected that one of the cables was faulty, but swapping the cables had no effect, so I guess the cables are OK.

Here is some additional info on my configuration:

Part numbers for the HCAs and cables:

764285-B21 - HP IB FDR/EN 40Gb 2P 544+FLR-QSFP Adptr

808722-B21 - HP 3M IB FDR QSFP V-series Optical Cbl

Firmware version: 2.40.5072

Driver version: 5.35.12978.0

WinOF version: 5.35

OpenSM: 3.3.11 (comes with WinOF)

WinMFT version: 4.6.0.48

Output of mlxconfig -d mt4103_pci_cr0 query (note that LINK_TYPE_P1 is IB, while the driver shows Eth):

Device type: ConnectX3Pro

PCI device: mt4103_pci_cr0

Configurations: Next Boot

SRIOV_EN True(1)

NUM_OF_VFS 16

WOL_MAGIC_EN_P2 True(1)

LINK_TYPE_P1 IB(1)

LINK_TYPE_P2 IB(1)

LOG_BAR_SIZE 5

BOOT_PKEY_P1 0

BOOT_PKEY_P2 0

BOOT_OPTION_ROM_EN_P1 True(1)

BOOT_VLAN_EN_P1 False(0)

BOOT_RETRY_CNT_P1 0

LEGACY_BOOT_PROTOCOL_P1 PXE(1)

BOOT_VLAN_P1 1

BOOT_OPTION_ROM_EN_P2 True(1)

BOOT_VLAN_EN_P2 False(0)

BOOT_RETRY_CNT_P2 0

LEGACY_BOOT_PROTOCOL_P2 PXE(1)

BOOT_VLAN_P2 1

IP_VER_P1 IPv4(0)

IP_VER_P2 IPv4(0)
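In case it helps anyone reproduce the check after each reboot, here is a minimal Python sketch that pulls the LINK_TYPE values out of the query output above and compares them with what the driver shows. The function names and the hand-typed driver values are my own illustration, not part of any Mellanox tool, and the sample text contains only the relevant lines.

```python
import re

# Relevant lines copied from the mlxconfig query output above.
SAMPLE = """\
Device type: ConnectX3Pro
PCI device: mt4103_pci_cr0
LINK_TYPE_P1 IB(1)
LINK_TYPE_P2 IB(1)
"""

# Matches lines such as "LINK_TYPE_P1 IB(1)".
LINK_TYPE_RE = re.compile(r"^\s*LINK_TYPE_P(\d+)\s+(\w+)\(\d+\)\s*$", re.MULTILINE)


def parse_link_types(query_output):
    """Return {port_number: link_type_name} for every LINK_TYPE_Pn line."""
    return {int(port): name.upper()
            for port, name in LINK_TYPE_RE.findall(query_output)}


def find_mismatches(firmware, driver):
    """List ports whose driver-side protocol differs from the firmware setting."""
    mismatched = []
    for port in sorted(firmware):
        shown = driver.get(port)
        if shown is not None and shown.upper() != firmware[port]:
            mismatched.append(port)
    return mismatched


if __name__ == "__main__":
    fw = parse_link_types(SAMPLE)
    # Hypothetical input: typed in by hand from what Device Manager shows.
    drv = {1: "Eth", 2: "IB"}
    print("firmware:", fw)
    print("mismatched ports:", find_mismatches(fw, drv))
```

With the values from my setup, this flags only port 1, which is exactly the mismatch described above.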

ibv_devinfo -v output (when Port 1 is empty):

hca_id: ibv_device0

fw_ver: 2.40.5072

node_guid: 7010:6fff:ffa8:1870

sys_image_guid: 7010:6fff:ffa8:1873

vendor_id: 0x02c9

vendor_part_id: 4103

hw_ver: 0x0

phys_port_cnt: 2

max_mr_size: 0xffffffffffffffff

page_size_cap: 0x1000

max_qp: 65472

max_qp_wr: 16351

device_cap_flags: 0x00005876

max_sge: 32

max_sge_rd: 0

max_cq: 65408

max_cqe: 4194303

max_mr: 130816

max_pd: 32764

max_qp_rd_atom: 16

max_ee_rd_atom: 0

max_res_rd_atom: 0

max_qp_init_rd_atom: 128

max_ee_init_rd_atom: 0

atomic_cap: ATOMIC_HCA (1)

max_ee: 0

max_rdd: 0

max_mw: 0

max_raw_ipv6_qp: 0

max_raw_ethy_qp: 0

max_mcast_grp: 8192

max_mcast_qp_attach: 244

max_total_mcast_qp_attach: 1998848

max_ah: 0

max_fmr: 0

max_srq: 65472

max_srq_wr: 16383

max_srq_sge: 31

max_pkeys: 128

local_ca_ack_delay: 15

port: 1

state: PORT_DOWN (1)

max_mtu: 4096 (5)

active_mtu: 4096 (5)

sm_lid: 0

port_lid: 0

port_lmc: 0x00

transport: IB

max_msg_sz: 0x40000000

port_cap_flags: 0x00005890

max_vl_num: 2 (2)

bad_pkey_cntr: 0x0

qkey_viol_cntr: 0x0

sm_sl: 0

pkey_tbl_len: 16

gid_tbl_len: 128

subnet_timeout: 0

init_type_reply: 0

active_width: 4X (2)

active_speed: 10.0 Gbps (4)

phys_state: POLLING (2)

GID[ 0]: fe80:0000:0000:0000:7010:6fff:ffa8:1871

port: 2

state: PORT_ACTIVE (4)

max_mtu: 4096 (5)

active_mtu: 4096 (5)

sm_lid: 3

port_lid: 3

port_lmc: 0x00

transport: IB

max_msg_sz: 0x40000000

port_cap_flags: 0x00005890

max_vl_num: 2 (2)

bad_pkey_cntr: 0x0

qkey_viol_cntr: 0x0

sm_sl: 0

pkey_tbl_len: 16

gid_tbl_len: 128

subnet_timeout: 18

init_type_reply: 0

active_width: 4X (2)

active_speed: invalid speed (16)

phys_state: LINK_UP (5)

GID[ 0]: fe80:0000:0000:0000:7010:6fff:ffa8:1872

Here port 2 reports a wrong active speed (“invalid speed (16)”), and I haven’t figured out how to change it, but the fabric works. I’m not sure whether it has anything to do with the problem.
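For what it’s worth, my understanding is that the number in parentheses is the raw InfiniBand speed code, and that newer libibverbs builds print code 16 as 14.0 Gbps per lane (FDR), so the “invalid speed” text may simply come from an older ibv_devinfo that does not know this code. Below is a small sketch of that decoding. The table is an assumption based on my reading of the speed encoding, and the function name is only illustrative.

```python
# Assumed mapping of raw active_speed codes to per-lane rates.
# This reflects my reading of the encoding, not output from my system.
IB_SPEED_CODES = {
    1: "2.5 Gbps (SDR)",
    2: "5.0 Gbps (DDR)",
    4: "10.0 Gbps (QDR)",
    8: "10.0 Gbps (FDR10)",
    16: "14.0 Gbps (FDR)",
    32: "25.0 Gbps (EDR)",
}


def decode_active_speed(code):
    """Translate a raw active_speed code into a readable per-lane rate."""
    return IB_SPEED_CODES.get(code, "unknown speed ({})".format(code))


if __name__ == "__main__":
    # Codes taken from the ibv_devinfo output above: port 1 -> 4, port 2 -> 16.
    for port, code in ((1, 4), (2, 16)):
        print("port", port, "->", decode_active_speed(code))
```

If that reading is right, port 2 is running at the FDR rate with 4X width, which would be consistent with the FDR adapter and cable listed above.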

Thanks in advance!

Best wishes,

Dmitry