Please provide the following info (tick the boxes after creating this topic):

Software Version

DRIVE OS 6.0.10.0

DRIVE OS 6.0.8.1

DRIVE OS 6.0.6

DRIVE OS 6.0.5

DRIVE OS 6.0.4 (rev. 1)

DRIVE OS 6.0.4 SDK

other

Target Operating System

Linux

QNX

other

Hardware Platform

DRIVE AGX Orin Developer Kit (940-63710-0010-300)

DRIVE AGX Orin Developer Kit (940-63710-0010-200)

DRIVE AGX Orin Developer Kit (940-63710-0010-100)

DRIVE AGX Orin Developer Kit (940-63710-0010-D00)

DRIVE AGX Orin Developer Kit (940-63710-0010-C00)

DRIVE AGX Orin Developer Kit (not sure its number)

other

SDK Manager Version

2.1.0

other

Host Machine Version

native Ubuntu Linux 20.04 Host installed with SDK Manager

native Ubuntu Linux 20.04 Host installed with DRIVE OS Docker Containers

native Ubuntu Linux 18.04 Host installed with DRIVE OS Docker Containers

other

Issue Description

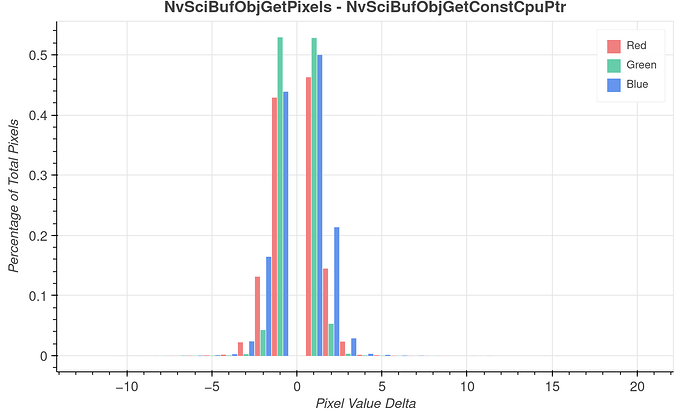

I am writing a pipeline in which some processing happens in YUV before the image is converted to RGB for export. I do the conversion with NvMedia2DCompose into a buffer whose NvSciBufAttrValColorFmt is NvSciColor_B8G8R8A8 and whose NvSciBufAttrValImageLayoutType is NvSciBufImage_PitchLinearType. As far as I can tell, there are two ways to get the buffer contents into userspace memory: NvSciBufObjGetPixels and NvSciBufObjGetConstCpuPtr. If I run both on the same buffer, the data come out slightly different. Side by side the images are hard to tell apart, but if I subtract one from the other, about 1.5% of the pixels have different values. The attached histogram is a typical example (the 0 column has been skipped for clarity). Most differing pixels are off by only 1 or 2, but the delta can be over 20. The result is the same regardless of which function I call first.
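
For reference, the comparison described above can be sketched roughly as follows. This is a minimal illustration, not the exact tool I used: `diffHistogram` and `differingPixelFraction` are hypothetical helper names, and both inputs are assumed to be tightly packed BGRA dumps of the same size, already loaded into memory.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <cstring>
#include <vector>

// Histogram of absolute per-byte differences between two buffers.
// Bin d counts the bytes whose values differ by exactly d (0..255).
// Hypothetical helper; assumes both buffers have the same size.
std::array<std::size_t, 256> diffHistogram(const std::vector<uint8_t>& a,
                                           const std::vector<uint8_t>& b)
{
    assert(a.size() == b.size());
    std::array<std::size_t, 256> hist{};
    for (std::size_t i = 0; i < a.size(); ++i) {
        const int d = std::abs(static_cast<int>(a[i]) - static_cast<int>(b[i]));
        ++hist[static_cast<std::size_t>(d)];
    }
    return hist;
}

// Fraction of BGRA pixels (4 bytes each) in which at least one channel differs.
double differingPixelFraction(const std::vector<uint8_t>& a,
                              const std::vector<uint8_t>& b)
{
    assert(a.size() == b.size());
    const std::size_t pixels = a.size() / 4;
    if (pixels == 0) {
        return 0.0;
    }
    std::size_t differing = 0;
    for (std::size_t p = 0; p < pixels; ++p) {
        if (std::memcmp(a.data() + 4 * p, b.data() + 4 * p, 4) != 0) {
            ++differing;
        }
    }
    return static_cast<double>(differing) / static_cast<double>(pixels);
}
```

Bin 0 of the histogram holds the matching bytes, which is the column left out of the plot.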

- Could someone explain why there is a difference between these two outputs?

- Is there a reason why NvSciBufObjGetPixels is slow to run? It takes about 40 ms for this 4K image, and over 500 ms if the buffer is in block linear layout.

Example code for grabbing the pixels using both functions is below (dstBuf is the BGRA buffer mentioned above).

    // 4K BGRA frame: 3840 x 2160 pixels, 4 bytes per pixel.
    const size_t ptrsize = (4U * 2160U * 3840U);
    uint8_t* bufptr = (uint8_t*)malloc(ptrsize);
    if (bufptr == NULL) {
        return 1;
    }

    // NvSciBufObjGetPixels takes one pointer, size, and pitch per plane;
    // the BGRA buffer has a single plane.
    void* dstPtrs[1] = { bufptr };
    uint32_t dstPtrSizes[1] = { (uint32_t)ptrsize };
    uint32_t dstPitches[1] = { 15360 }; // 3840 pixels * 4 bytes

    // note: SW caching is disabled.
    rc_sci = NvSciBufObjGetPixels(dstBuf, NULL, dstPtrs, dstPtrSizes, dstPitches);
    if (rc_sci != NvSciError_Success) {
        free(bufptr);
        return 1;
    }

    char rgbName[128];
    snprintf(rgbName, sizeof(rgbName), "/tmp/frame_%lu_get_pixels.bgra", frameCount);
    FILE* rgbFile = fopen(rgbName, "wb");
    if (rgbFile == NULL) {
        free(bufptr);
        return 1;
    }
    // fwrite returns the number of items written, so 1 means success here.
    if (fwrite(bufptr, ptrsize, 1U, rgbFile) != 1U) {
        fclose(rgbFile);
        free(bufptr);
        return 1;
    }
    fclose(rgbFile);
    free(bufptr);

    // Second path: get a read-only CPU pointer to the same buffer and dump it directly.
    const void* va_ptr = nullptr;
    rc_sci = NvSciBufObjGetConstCpuPtr(dstBuf, &va_ptr);
    if (rc_sci != NvSciError_Success) {
        return 1;
    }
    const uint8_t* basePtr = static_cast<const uint8_t*>(va_ptr);

    snprintf(rgbName, sizeof(rgbName), "/tmp/frame_%lu_get_pointer.bgra", frameCount);
    rgbFile = fopen(rgbName, "wb");
    if (rgbFile == NULL) {
        return 1;
    }
    // Assumes the mapped buffer is tightly packed (pitch of 15360 bytes).
    if (fwrite(basePtr, ptrsize, 1U, rgbFile) != 1U) {
        fclose(rgbFile);
        return 1;
    }
    fclose(rgbFile);
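
One caveat on the second dump: it writes the mapped memory as if each row were exactly 15360 bytes. I have not seen evidence that the pitch is different here, and a pitch mismatch would not explain deltas of 1 or 2, but to rule it out the rows could be copied one at a time using the buffer's real pitch. Below is a minimal sketch of that copy. `packRows` is a hypothetical helper, and `srcPitch` is assumed to come from the buffer's attributes (I believe the relevant key is NvSciBufImageAttrKey_PlanePitch, but I have not verified that in this setup).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Copies `height` rows of `rowBytes` bytes each from a pitched source buffer
// into a tightly packed vector, skipping any padding at the end of each row.
// Hypothetical helper; srcPitch is the distance in bytes between row starts.
std::vector<uint8_t> packRows(const uint8_t* src, std::size_t srcPitch,
                              std::size_t rowBytes, std::size_t height)
{
    assert(srcPitch >= rowBytes);
    std::vector<uint8_t> packed(rowBytes * height);
    if (packed.empty()) {
        return packed;
    }
    assert(src != nullptr);
    for (std::size_t y = 0; y < height; ++y) {
        std::memcpy(packed.data() + y * rowBytes, src + y * srcPitch, rowBytes);
    }
    return packed;
}
```

For the buffer above this would be called as `packRows(basePtr, pitch, 3840U * 4U, 2160U)`, and the returned vector written to the file instead of `basePtr`.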