Specifications:

- AGX Xavier

- JetPack 4.6

- TensorRT 8.0.1

- CUDA 10.2

- cuDNN 8.2.1

I am running a script that runs a face detection model, then a tracker, then a classifier model on the detected faces. I am facing the following issue:

- When I load the face detection model on its own, then load the face classifier model and run some tests, I get a stray -1.875 and -1. in my classifier output. My code:

    # Imports assumed by this snippet (not shown in the original post)
    import numpy as np
    import pycuda.autoinit  # assumed: creates the CUDA context
    import pycuda.driver as cuda
    import tensorrt as trt
    import torch
    from PIL import Image
    from torchvision import transforms

    # load in the models
    stream = cuda.Stream()
    TRT_LOGGER = trt.Logger()
    explicit_batch = 1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)

    # load in the face detector tensorrt model
    with open("weights/yolov5s-face-448x800.trt", "rb") as f, trt.Runtime(TRT_LOGGER) as runtime:
        fd_engine = runtime.deserialize_cuda_engine(f.read())

    # allocate one device input buffer and one host/device output buffer pair
    for binding in fd_engine:
        if fd_engine.binding_is_input(binding):
            fd_device_input = cuda.mem_alloc(trt.volume(fd_engine.get_binding_shape(binding)) * fd_engine.max_batch_size * np.dtype(np.float32).itemsize)
        else:
            fd_host_output = cuda.pagelocked_empty(trt.volume(fd_engine.get_binding_shape(binding)) * fd_engine.max_batch_size, dtype=np.float32)
            fd_device_output = cuda.mem_alloc(fd_host_output.nbytes)
    fd_context = fd_engine.create_execution_context()

    # load in the face classifier tensorrt model
    with open("weights/resnet34_fc_fp16.engine", "rb") as f, trt.Runtime(TRT_LOGGER) as runtime:
        fc_engine = runtime.deserialize_cuda_engine(f.read())

    # abs() is used because the reported volume is negative for this engine
    for binding in fc_engine:
        if fc_engine.binding_is_input(binding):
            fc_device_input = cuda.mem_alloc(abs(trt.volume(fc_engine.get_binding_shape(binding))) * fc_engine.max_batch_size * np.dtype(np.float32).itemsize)
        else:
            fc_host_output = cuda.pagelocked_empty(abs(trt.volume(fc_engine.get_binding_shape(binding))) * fc_engine.max_batch_size, dtype=np.float32)
            fc_device_output = cuda.mem_alloc(fc_host_output.nbytes)
    fc_context = fc_engine.create_execution_context()

    # Test face classifier
    test_batchsize = 3
    fc_context.set_binding_shape(0, (test_batchsize, 3, 224, 224))

    # build a batch from the same test image, repeated test_batchsize times
    batch_list = []
    for j in range(test_batchsize):
        img = Image.open("test_materials/turkish_coffee.jpg")
        img = img.resize((224, 224))
        if img.mode == "RGBA":
            img = img.convert("RGB")
        convert_to_tensor = transforms.ToTensor()
        normalize = transforms.Normalize(mean = [0.485, 0.456, 0.406], std = [0.229, 0.224, 0.225])
        batch_list.append(normalize(convert_to_tensor(img)))
    input = torch.stack(batch_list)
    fc_host_input = np.array(input.numpy(), dtype=np.float32, order='C')

    # copy the batch to the device, run inference, copy the result back
    cuda.memcpy_htod_async(fc_device_input, fc_host_input, stream)
    fc_context.execute_async_v2(bindings=[int(fc_device_input), int(fc_device_output)], stream_handle=stream.handle)
    cuda.memcpy_dtoh_async(fc_host_output, fc_device_output, stream)
    stream.synchronize()
    print(fc_host_output)

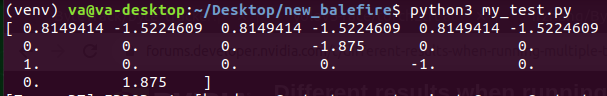

I get this result:

As can be seen the -1.875 and -1 are strays.
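To locate stray values without eyeballing printed arrays, the output of one run can be diffed against the output of a reference run. The following is only a minimal sketch: `find_strays` is a hypothetical helper, the tolerances are arbitrary guesses for an FP16 engine, and the arrays are made-up stand-ins for two saved copies of `fc_host_output`.

```python
import numpy as np

def find_strays(output, reference, rtol=1e-2, atol=1e-2):
    """Return (index, got, expected) for every element that differs
    from the reference by more than the given tolerances."""
    output = np.asarray(output, dtype=np.float32).ravel()
    reference = np.asarray(reference, dtype=np.float32).ravel()
    mismatch = ~np.isclose(output, reference, rtol=rtol, atol=atol)
    return [(int(i), float(output[i]), float(reference[i]))
            for i in np.flatnonzero(mismatch)]

# Illustrative values only, not real model output.
reference = np.array([0.12, -0.53, 0.88, 0.07], dtype=np.float32)
output = np.array([0.12, -1.875, 0.88, -1.0], dtype=np.float32)

strays = find_strays(output, reference)
for index, got, expected in strays:
    print(f"index {index}: got {got}, expected {expected}")
```

In practice the two arrays would come from saving `fc_host_output` in each run, for example with `np.save`, and loading both files afterwards.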

However, if I remove the loading of the face detector TensorRT model:

    # Imports assumed by this snippet (not shown in the original post)
    import numpy as np
    import pycuda.autoinit  # assumed: creates the CUDA context
    import pycuda.driver as cuda
    import tensorrt as trt
    import torch
    from PIL import Image
    from torchvision import transforms

    # load in the models
    stream = cuda.Stream()
    TRT_LOGGER = trt.Logger()
    explicit_batch = 1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)

    # load in the face classifier tensorrt model
    with open("weights/resnet34_fc_fp16.engine", "rb") as f, trt.Runtime(TRT_LOGGER) as runtime:
        fc_engine = runtime.deserialize_cuda_engine(f.read())

    # abs() is used because the reported volume is negative for this engine
    for binding in fc_engine:
        if fc_engine.binding_is_input(binding):
            fc_device_input = cuda.mem_alloc(abs(trt.volume(fc_engine.get_binding_shape(binding))) * fc_engine.max_batch_size * np.dtype(np.float32).itemsize)
        else:
            fc_host_output = cuda.pagelocked_empty(abs(trt.volume(fc_engine.get_binding_shape(binding))) * fc_engine.max_batch_size, dtype=np.float32)
            fc_device_output = cuda.mem_alloc(fc_host_output.nbytes)
    fc_context = fc_engine.create_execution_context()

    # Test face classifier
    test_batchsize = 3
    fc_context.set_binding_shape(0, (test_batchsize, 3, 224, 224))

    # build a batch from the same test image, repeated test_batchsize times
    batch_list = []
    for j in range(test_batchsize):
        img = Image.open("test_materials/turkish_coffee.jpg")
        img = img.resize((224, 224))
        if img.mode == "RGBA":
            img = img.convert("RGB")
        convert_to_tensor = transforms.ToTensor()
        normalize = transforms.Normalize(mean = [0.485, 0.456, 0.406], std = [0.229, 0.224, 0.225])
        batch_list.append(normalize(convert_to_tensor(img)))
    input = torch.stack(batch_list)
    fc_host_input = np.array(input.numpy(), dtype=np.float32, order='C')

    # copy the batch to the device, run inference, copy the result back
    cuda.memcpy_htod_async(fc_device_input, fc_host_input, stream)
    fc_context.execute_async_v2(bindings=[int(fc_device_input), int(fc_device_output)], stream_handle=stream.handle)
    cuda.memcpy_dtoh_async(fc_host_output, fc_device_output, stream)
    stream.synchronize()
    print(fc_host_output)

I instead get this:

Could someone advise why this is happening?
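One thing that may be worth checking is whether the classifier buffers are large enough for a batch of 3. The sketch below is plain arithmetic and makes no TensorRT calls. It assumes the engine reports the input shape as `(-1, 3, 224, 224)` (the `abs()` around `trt.volume` suggests a dynamic dimension) and that `max_batch_size` is 1, which is what explicit-batch engines are understood to report. `binding_nbytes` is a hypothetical helper, not a TensorRT API.

```python
import numpy as np

def binding_nbytes(shape, batch_size, dtype=np.float32):
    """Bytes needed for a binding once the dynamic (-1) dimension
    is replaced by the actual batch size."""
    resolved = [batch_size if dim == -1 else dim for dim in shape]
    return int(np.prod(resolved, dtype=np.int64)) * np.dtype(dtype).itemsize

# Assumed shape as reported by an engine with a dynamic batch dimension.
reported_shape = (-1, 3, 224, 224)
assumed_max_batch_size = 1  # assumption, see the paragraph above

# Size produced by the allocation expression used in the post.
allocated = (abs(int(np.prod(reported_shape)))
             * assumed_max_batch_size
             * np.dtype(np.float32).itemsize)

# Size needed for the test batch of 3 images.
needed = binding_nbytes(reported_shape, batch_size=3)

print(f"allocated: {allocated} bytes, needed: {needed} bytes")
```

If these assumptions hold, the device input buffer has room for one image while three are copied into it, and the output buffers are undersized in the same way. Writes past the end of a buffer can land in different memory depending on what else has been allocated, which might explain why loading the detector first changes the result.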