We are try to run tensorflow on virtualized gpus grid of Tesla T4 on virtual machine system Ubuntu 18.04, but when we checked the availability of vgpus, the following error popped up.

tf.test.is_gpu_available()

2019-11-07 20:54:08.422120: I tensorflow/core/platform/cpu_feature_guard.cc:142] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA

2019-11-07 20:54:08.440825: I tensorflow/stream_executor/platform/default/dso_loader.cc:42] Successfully opened dynamic library libcuda.so.1

2019-11-07 20:54:08.504290: W tensorflow/compiler/xla/service/platform_util.cc:256] unable to create StreamExecutor for CUDA:0: failed initializing StreamExecutor for CUDA device ordinal 0: Internal: failed call to cuDevicePrimaryCtxRetain: CUDA_ERROR_UNKNOWN: unknown error

2019-11-07 20:54:08.504434: F tensorflow/stream_executor/lib/statusor.cc:34] Attempting to fetch value instead of handling error Internal: no supported devices found for platform CUDA

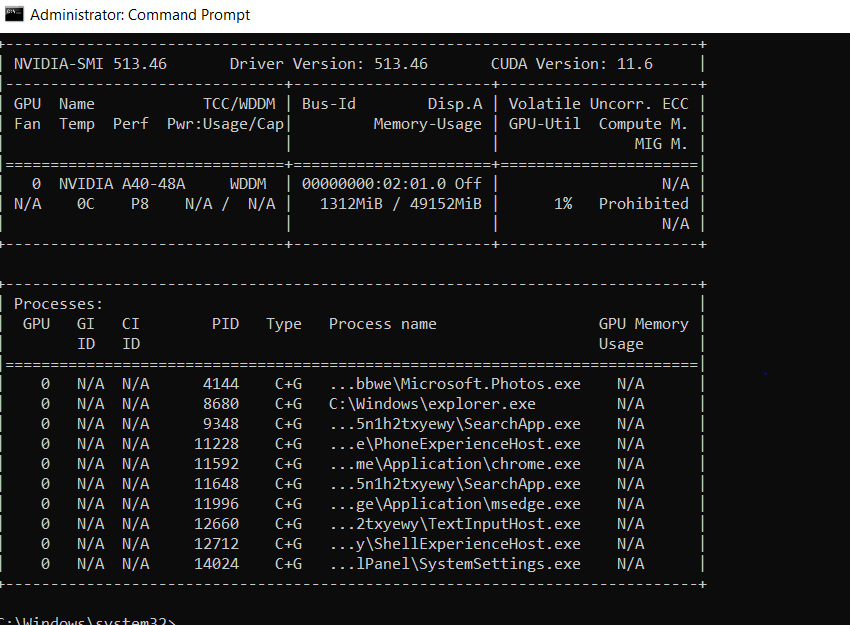

Our guess was that happened because the computing mode of the virtualized gpu was “prohibited”. We tried to change it back to default but failed, because ”Setting compute mode to DEFAULT is not supported.”

gpu@gpu-KVM:~$ nvidia-smi

Thu Nov 7 20:47:04 2019

±----------------------------------------------------------------------------+

| NVIDIA-SMI 430.30 Driver Version: 430.30 CUDA Version: 10.2 |

|-------------------------------±---------------------±---------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

|===============================+======================+======================|

| 0 GRID T4-1B On | 00000000:00:09.0 Off | N/A |

| N/A N/A P8 N/A / N/A | 80MiB / 1016MiB | 0% Prohibited |

±------------------------------±---------------------±---------------------+

±----------------------------------------------------------------------------+

| Processes: GPU Memory |

| GPU PID Type Process name Usage |

|=============================================================================|

| No running processes found |

±----------------------------------------------------------------------------+

gpu@gpu-KVM:~$ sudo nvidia-smi -i 0 -c 0

[sudo] password for gpu:

Setting compute mode to DEFAULT is not supported.

Treating as warning and moving on.

All done.