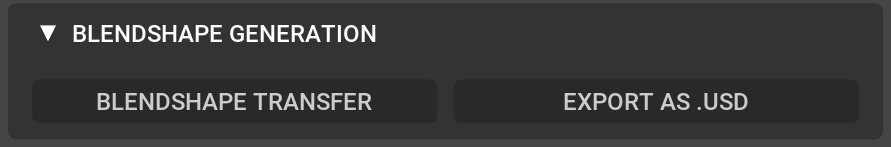

I want to “Export as USD SkelAnimation”, and for that I need to “Set Up BlendShape Solve”. BlendShape Solve only works on meshes that already have valid blendshapes. After importing a custom character and animating the skin, eyes, tongue and teeth, I need to generate shape keys for those meshes. When I press “BlendShape Transfer”, I only get the skin.

Is Blendshape Generation supposed to produce blendshapes for the skin only?

Is there a way to generate blendshapes for the eyes, tongue and teeth?

Will generating blendshapes for all meshes be a feature later on?

The Blendshape Generation tutorial covers only the skin, so I have no way of knowing whether there is a workflow for the eyes and teeth as well.

Hi @dmtzcain,

We are aware of some rough edges in our full-face workflow, and we are working to address them in the next fix or version.

In short:

- Blendshape transfer only works for the skin.

- The tongue can be solved with blendshapes and exported, but the process is convoluted.

- The eyes and teeth can be exported as caches or rigid xforms.

Let me go into more detail.

Blendshape creation

The eyes and teeth are animated through rigid transforms whereas the skin and tongue are mesh deformations.

Blendshape transfer is mainly intended for these mesh deformations. However, the tongue is currently not supported by blendshape transfer.

For things that move along with the skin (such as eyebrows or beards), @esusantolim showed how to set up the blendshapes here.

Exporting Full-face

Currently, the only way to export all the full-face elements is through caches. However, for the eyes and lower_teeth (i.e. the jaw motion), you can choose either a cache or rigid xforms.

The blendshape animation for the skin can be exported separately.

Exporting blendshape animation for tongue

Right now, having both a skin blendshape solve and a tongue blendshape solve is a bit convoluted. We have already fixed this and plan to release the fix soon.

One workaround to export both facial and tongue blendshape weights is to:

- Set up facial blendshape solve and export weights

- Delete the facial blendshape set up

- Set up tongue blendshape solve and export weights
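If a downstream importer expects one weight file instead of two, the separate exports from the workaround above could be combined afterwards. The sketch below assumes each export is a JSON file with the keys `exportFps`, `numFrames`, `numPoses`, `facsNames` and `weightMat` (one row of pose weights per frame); treat that layout as an assumption and check it against your own files. `merge_weight_exports` and `merge_files` are hypothetical helpers, not part of A2F.

```python
import json


def merge_weight_exports(skin: dict, tongue: dict) -> dict:
    """Combine two per-frame blendshape weight exports into one.

    ASSUMED layout (verify against your own export files):
      "exportFps": float, "numFrames": int, "numPoses": int,
      "facsNames": [pose names], "weightMat": [[weight per pose] per frame]
    """
    if skin["numFrames"] != tongue["numFrames"]:
        raise ValueError("exports cover a different number of frames")
    if skin["exportFps"] != tongue["exportFps"]:
        raise ValueError("exports were written at different frame rates")
    overlap = set(skin["facsNames"]) & set(tongue["facsNames"])
    if overlap:
        raise ValueError(f"duplicate pose names: {sorted(overlap)}")

    names = skin["facsNames"] + tongue["facsNames"]
    # Concatenate weight rows frame by frame: skin poses first, then tongue poses.
    weights = [a + b for a, b in zip(skin["weightMat"], tongue["weightMat"])]
    return {
        "exportFps": skin["exportFps"],
        "numFrames": skin["numFrames"],
        "numPoses": len(names),
        "facsNames": names,
        "weightMat": weights,
    }


def merge_files(skin_path: str, tongue_path: str, out_path: str) -> None:
    """Read two exported JSON files, merge them, and write the result."""
    with open(skin_path) as f:
        skin = json.load(f)
    with open(tongue_path) as f:
        tongue = json.load(f)
    with open(out_path, "w") as f:
        json.dump(merge_weight_exports(skin, tongue), f, indent=2)
```

Usage would be something like `merge_files("skin_weights.json", "tongue_weights.json", "all_weights.json")`, with hypothetical file names. The helper refuses to merge if frame counts, frame rates or pose names clash, since silently misaligned frames would be hard to spot later.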

Tongue blendshapes

In any case, you need a tongue model with blendshapes.

As the tongue motion is not too complex, a few simple shapes are enough.

For example, you can sculpt them, or build a simple rig and pose the tongue a few times to create the blendshapes.

We currently don’t have a template for tongue blendshapes, but some of the Reallusion characters include them, and those can serve as a guide for creating your own.
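To see why a handful of simple shapes is enough, it helps to recall the usual linear (delta) blendshape model: each frame, the solver outputs one weight per shape, and the mesh is the base plus the weighted offsets of each target, v = base + Σ wᵢ (targetᵢ − base). The sketch below evaluates that formula on plain tuples; whether A2F evaluates shapes exactly this way internally is an assumption, and the shape names in the example are hypothetical.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def apply_blendshapes(base: Sequence[Vec3],
                      targets: Sequence[Sequence[Vec3]],
                      weights: Sequence[float]) -> List[Vec3]:
    """Linear blendshape evaluation, per vertex:
    v = base + sum_i w_i * (target_i - base)."""
    if len(targets) != len(weights):
        raise ValueError("need exactly one weight per target shape")
    result = []
    for idx, (bx, by, bz) in enumerate(base):
        x, y, z = bx, by, bz
        # Add each target's offset from the base, scaled by its weight.
        for target, w in zip(targets, weights):
            tx, ty, tz = target[idx]
            x += w * (tx - bx)
            y += w * (ty - by)
            z += w * (tz - bz)
        result.append((x, y, z))
    return result


# Two-vertex "tongue tip" with two hypothetical shapes: tongueOut and tongueUp.
base = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
tongue_out = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
tongue_up = [(0.0, 0.0, 0.0), (1.0, 1.0, 0.0)]
print(apply_blendshapes(base, [tongue_out, tongue_up], [0.5, 0.5]))
# → [(0.0, 0.0, 0.0), (1.5, 0.5, 0.0)]
```

Because the shapes combine additively, a few extreme poses (out, up, curl, and so on) can be mixed by the solver into many intermediate tongue positions.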


Thank you for the clear explanation, I understand better now. I guess automating the lower teeth and eyes as shape keys is complex.

I’ll try exporting the tongue by itself.

What I have already done is set up blendshapes for the lower teeth and eyes in a DCC and attach them to the exported skin. This works because the skin already exports with some eye-movement weights.

What is not clear to me: even if I have a tongue with the correct blendshapes, I have noticed that the exported skin does not include any tongue weights, so the tongue wouldn’t move at all. I guess the only way is to “Set up tongue blendshape solve and export weights”.

Thanks

Yes, you are right.

Right now, the exporter is tied to a single blend shape solver, so the simplest way to deal with this limitation is to export separately as I mentioned in the previous post.

In the next version, A2F will be able to set up multiple blend shape solvers (e.g. one for the skin and one for the tongue) and export them as well.

Feel free to ask if anything is unclear, or if you have any suggestions!


Two questions.

- Since Audio2Face currently doesn’t generate tongue blendshapes, I need to import a tongue with the blendshapes and “Export As .USD” the tongue only, correct?

- Using the same logic, wouldn’t it be possible to also import the eyes and lower jaw with their respective blendshapes and drive those, even if each has to be exported separately? I understand from your previous post that the eyes and lower jaw are driven as rigid xforms; is blendshape deformation for the eyes and lower jaw a feature that may come in the future?

It seems to me that driving everything through blendshapes would make the workflow more streamlined, though I guess xforms help performance?

Thanks for your time and insight!

- Correct.

- In theory, that would work. I don’t think we will support blendshapes for the eyes or the lower denture; we currently plan to support joints. On a side note, the jaw motion is already part of the skin blendshapes. Supporting the lower denture would be possible.

The main reason for using joints or xforms is that eye and jaw motions are pure rotations, and blendshapes cannot represent these motions well because they are linear interpolations between shapes.

In many setups, the jaw itself is also skinned to a joint, with “corrective” blendshapes applied on top of it.
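The rotation-versus-interpolation point can be checked numerically. The sketch below takes a vertex on a unit sphere (standing in for the eyeball), builds a "look" target by rotating it 60°, and compares a linear blend toward that target with an actual rotation by the same fraction. The 60° angle and the unit radius are arbitrary illustration values.

```python
import math


def rotate_z(p, angle):
    """Rotate point p = (x, y, z) about the z axis by `angle` radians."""
    x, y, z = p
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, s * x + c * y, z)


def lerp(a, b, t):
    """Linear interpolation: what a single blendshape does to a vertex."""
    return tuple(ai + t * (bi - ai) for ai, bi in zip(a, b))


def compare(angle_deg, steps=5):
    """Return (t, blendshape_radius, rotation_radius) for t from 0 to 1."""
    point = (1.0, 0.0, 0.0)  # vertex on the front of a unit "eyeball"
    target = rotate_z(point, math.radians(angle_deg))  # blendshape target pose
    rows = []
    for i in range(steps):
        t = i / (steps - 1)
        blended = lerp(point, target, t)                        # blendshape
        rotated = rotate_z(point, t * math.radians(angle_deg))  # joint/xform
        rows.append((t, math.hypot(*blended), math.hypot(*rotated)))
    return rows


if __name__ == "__main__":
    for t, b, r in compare(60.0):
        print(f"t={t:.2f}  blendshape radius={b:.3f}  rotation radius={r:.3f}")
```

Halfway through the motion (t = 0.5), the blended vertex sits at cos(30°) ≈ 0.866 of the radius, so it sinks into the eyeball, while the rotated vertex stays on the surface. This is why rotations are better handled by joints or xforms, with blendshapes reserved for correctives.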


I see.

Thanks! I’ll keep exploring.


Hi, did you manage to find a workflow for bringing eye, lower jaw, eyebrow and tongue animation from A2F into Blender? Thanks.

I did.

Oddly enough, the data for eye movement and tongue movement is exported.

All you need to do is set up eye and tongue shape keys externally and join those meshes to the exported skin.

Then all works together.

Hope this helps.

So the data for eye and tongue movement is exported from A2F into Blender via the UsdSkel USD file? What do you mean by setting up the eyes and tongue externally? Do you mean I need to give them blendshapes in A2F using the prox UI and then export the UsdSkel file into Blender? Thank you.

Will there be a workflow for using the eye rigid xforms or cache in Blender? It is way too hard to understand how it works. I’ve exported both rigid xforms and a cache, but the file size is already about 50 MB, which makes this method unusable: I’m trying to make Audio2Face work for my game, and with file sizes like that the game will just show a slideshow.
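A rough back-of-the-envelope estimate shows why caches get large: a point cache stores every vertex position for every frame, so it grows with vertex count × frame count, while a rigid xform stores one matrix per frame regardless of mesh density. The sketch assumes uncompressed storage with float32 points and a double-precision 4×4 matrix per frame; real USD files add metadata and may compress data, so actual sizes will differ. The mesh size, clip length and pose count below are hypothetical.

```python
def cache_bytes(num_vertices: int, num_frames: int, bytes_per_float: int = 4) -> int:
    """Point cache: 3 floats (x, y, z) per vertex, per frame."""
    return num_vertices * 3 * bytes_per_float * num_frames


def xform_bytes(num_frames: int, bytes_per_value: int = 8) -> int:
    """Rigid xform: one 4x4 matrix (16 values) per frame."""
    return 16 * bytes_per_value * num_frames


def weights_bytes(num_poses: int, num_frames: int, bytes_per_float: int = 4) -> int:
    """Blendshape animation: one weight per pose, per frame."""
    return num_poses * bytes_per_float * num_frames


if __name__ == "__main__":
    frames = 30 * 60  # hypothetical 60-second clip at 30 fps
    print(f"cache, 5,000-vertex mesh: {cache_bytes(5_000, frames) / 1e6:.1f} MB")
    print(f"xform, one eye:           {xform_bytes(frames) / 1e6:.2f} MB")
    print(f"weights, 52 poses:        {weights_bytes(52, frames) / 1e6:.2f} MB")
```

Under these assumptions the cache comes to about 108 MB, versus roughly 0.23 MB for one eye's xforms and 0.37 MB for 52 weight curves, which is why xforms or blendshape weights are the more practical choice for real-time use.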