Hello. First of all, I’m not familiar with English. I’m sorry.

I’m currently training a TensorFlow model on an RTX 4090. The environment is Windows with tensorflow-gpu 2.10.0, CUDA 11.8, and cuDNN 8.8.1.

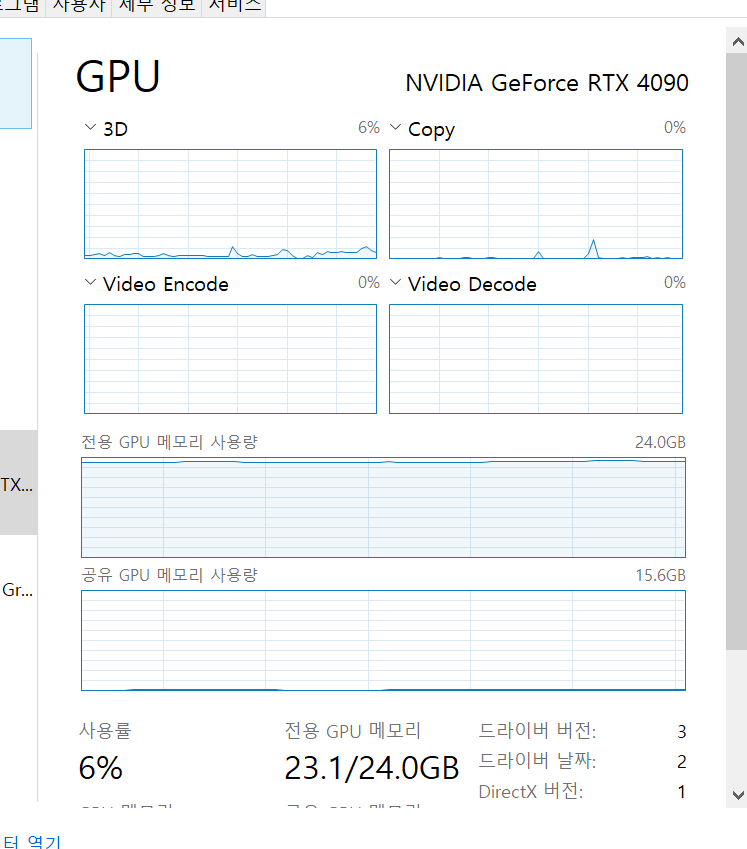

When I run training in this environment, it only seems to use the GPU’s VRAM, and the usage is not high. Is this normal? Shouldn’t the usage be higher?

Or should I switch to CUDA 12? It’s a new version that just came out, so I’m hesitant to upgrade.

I attach a reference picture. Thank you.

You can find dozens of questions like this on internet forums. Here is an example. They don’t all involve the 4090, so the problem is evidently not specific to that GPU. As indicated there, the cause most likely lies in the training workload itself, the batch sizes you are using, or TensorFlow settings that affect its greedy memory allocation. As long as TensorFlow is working and using the GPU at all, it’s unlikely that your choice of CUDA version has any effect on this. CUDA 11.8 is an acceptable version to use with the 4090.

I won’t be able to respond to further requests for help with TensorFlow. This forum may not be the best place to request help with TensorFlow. See here.
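For anyone checking the same thing, here is a minimal sketch of how one might confirm that TensorFlow sees the GPU and switch off its default greedy memory allocation. The function name `describe_gpu_setup` and the returned dictionary keys are made up for illustration; the `tf.config` and `tf.test` calls are standard TensorFlow 2.x APIs. It assumes the check runs before any model or tensor has been created, since memory growth can only be set before the GPU is initialized.

```python
import importlib.util


def describe_gpu_setup(enable_memory_growth=True):
    """Report what TensorFlow can see; returns None if TensorFlow is not installed.

    Hypothetical helper for illustration, not part of TensorFlow itself.
    """
    if importlib.util.find_spec("tensorflow") is None:
        return None
    import tensorflow as tf

    gpus = tf.config.list_physical_devices("GPU")

    growth_enabled = False
    if enable_memory_growth and gpus:
        try:
            # By default TensorFlow reserves nearly all VRAM up front.
            # With memory growth on, it allocates only as much as it needs.
            for gpu in gpus:
                tf.config.experimental.set_memory_growth(gpu, True)
            growth_enabled = True
        except RuntimeError:
            # Raised when the GPU has already been initialized.
            growth_enabled = False

    return {
        "tensorflow_version": tf.__version__,
        "built_with_cuda": tf.test.is_built_with_cuda(),
        "gpus": [gpu.name for gpu in gpus],
        "memory_growth_enabled": growth_enabled,
    }


if __name__ == "__main__":
    print(describe_gpu_setup())
```

If the `gpus` list is non-empty, TensorFlow is using the card, and high VRAM usage on its own is just the default allocation behavior rather than a sign of a CUDA version problem. Low GPU utilization is then more likely down to the model size, batch size, or input pipeline.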
