Hello, I am flashing a Jetson AGX Thor board. In SDK Manager 2.3.0.12626 (x86_64), when I select Jetson AGX Thor, the only option offered is JetPack 7.0. Why can't I select JetPack 6.2? Thanks in advance.

Hello,

Thanks for visiting the NVIDIA Developer Forums.

To ensure better visibility and support, I've moved your post to the Jetson category, where it is more appropriate.

Cheers,

Tom

JetPack 6.x is for the Jetson Orin family only; JetPack 7 supports Jetson Thor only.

See the JetPack Archive page on NVIDIA Developer for more information.

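Hello, thank you for your reply.

To confirm: can the Jetson AGX Thor T5000 not run JetPack 6.x at all? Is there any way to install a 6.x release?

If only JetPack 7 can be installed, can it use CUDA 12?

In the image, I see that Ubuntu 22 can install JetPack 6.x.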

There are low-level dependencies, so you can't install JetPack 6.x on Jetson Thor.

No, you can't downgrade. Why do you need CUDA 12?

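Mainly because CUDA 13 is too new and a lot of software is not compatible with it yet. For example, I want to install vLLM to run large language model inference, but it will not install. Is there any way around this?

Does the Jetson T5000 only support Ubuntu 24?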

Hi,

Please find our vLLM container for Thor below:

Below is an example to run gpt-oss-20b for your reference:

Thanks.

Hello, which inference frameworks are currently compatible?

Could you also provide links?

Does Jetson AGX Thor only support Ubuntu 24?

Let me emphasize again: Jetson AGX Thor supports only JetPack 7 with Ubuntu 24, and no other version.

I also cannot run Qwen/Qwen3-VL-8B-Instruct with TensorRT. Are there steps like the vLLM ones above? Thanks.

Hi,

Please find below the link for an example to run Qwen2.5-VL-3B:

Please update the container to nvcr.io/nvidia/vllm:25.09-py3 for the latest vLLM branch.

Thanks.

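I want to run Qwen3. Is there no example for Qwen3-VL-8B-Instruct?

Hello, when I start vllm serve Qwen/Qwen2.5-VL-3B-Instruct --gpu-memory-utilization 0.7, the CPU is almost fully loaded while the GPU is not used at all.

Question: why does it run only on the CPU and not on the GPU? Thanks for your reply.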

Hi,

We can run Qwen/Qwen2.5-VL-3B-Instruct without issues.

Serving

$ docker run -it --rm --gpus all \
    -p 9000:9000 \
    -e DOCKER_PULL=always --pull always \
    -e HF_HUB_CACHE=/root/.cache/huggingface \
    -v /home/nvidia/topic_348385/cache:/root/.cache \
    nvcr.io/nvidia/vllm:25.09-py3

# pip3 install "bitsandbytes>=0.46.1"

# vllm serve Qwen/Qwen2.5-VL-3B-Instruct \
    --host=0.0.0.0 \
    --port=9000 \
    --dtype=auto \
    --max-num-seqs=1 \
    --chat-template-content-format=openai \
    --trust-remote-code \
    --gpu-memory-utilization=0.75 \
    --uvicorn-log-level=debug \
    --quantization=bitsandbytes \
    --load-format=bitsandbytes
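Model loading can take a while after vllm serve starts, and requests sent before the server is listening will fail. The following is a minimal sketch of a readiness check, assuming the server exposes vLLM's /health route on the port used above. The function name wait_for_server and its default attempt count and delay are illustrative choices, not part of vLLM.

```shell
# Poll BASE_URL/health until it answers with a success status,
# or give up after a fixed number of attempts.
# Usage: wait_for_server BASE_URL [ATTEMPTS] [DELAY_SECONDS]
# (wait_for_server is a hypothetical helper name, not a vLLM command.)
wait_for_server() {
    wfs_url=$1
    wfs_attempts=${2:-60}
    wfs_delay=${3:-5}
    wfs_i=0
    while [ "$wfs_i" -lt "$wfs_attempts" ]; do
        # -f: fail on HTTP errors; -s: silent; --max-time: never hang on one try
        if curl -fs --max-time 5 -o /dev/null "$wfs_url/health"; then
            return 0
        fi
        wfs_i=$((wfs_i + 1))
        sleep "$wfs_delay"
    done
    return 1
}

# Example (run on the host once the container is serving):
#   wait_for_server http://0.0.0.0:9000 && echo "server is ready"
```

The function returns a non-zero status if the server never answers, so it can gate the request in a script.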

Call

$ curl http://0.0.0.0:9000/v1/chat/completions \
    -H "Content-Type: application/json" \
    -d '{
      "messages": [{
        "role": "user",
        "content": [{
          "type": "text",
          "text": "What is in this image?"
        },
        {
          "type": "image_url",
          "image_url": {
            "url": "https://upload.wikimedia.org/wikipedia/commons/thumb/d/dd/Gfp-wisconsin-madison-the-nature-boardwalk.jpg/2560px-Gfp-wisconsin-madison-the-nature-boardwalk.jpg"
          }
        }
      ]}],
      "max_tokens": 300
    }'

GPU utilization reaches 97% while the request is being processed:

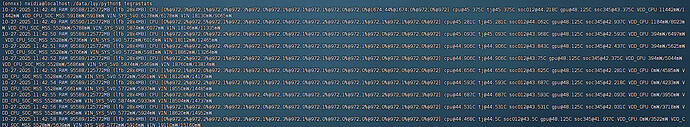

$ nvidia-smi
Thu Oct 23 04:47:46 2025
+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 580.00                 Driver Version: 580.00         CUDA Version: 13.0     |
+-----------------------------------------+------------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
|                                         |                        |               MIG M. |
|=========================================+========================+======================|
|   0  NVIDIA Thor                    Off |   00000000:01:00.0 Off |                  N/A |
| N/A   N/A  N/A              N/A  /  N/A |          Not Supported |     97%      Default |
|                                         |                        |             Disabled |
+-----------------------------------------+------------------------+----------------------+
+-----------------------------------------------------------------------------------------+
| Processes:                                                                              |
|  GPU   GI   CI              PID   Type   Process name                        GPU Memory |
|        ID   ID                                                               Usage      |
|=========================================================================================|
|    0   N/A  N/A            2443      G   /usr/lib/xorg/Xorg                        0MiB |
|    0   N/A  N/A            2677      G   /usr/bin/gnome-shell                      0MiB |
|    0   N/A  N/A           10253      C   VLLM::EngineCore                          0MiB |
+-----------------------------------------------------------------------------------------+

Thanks

I can also deploy Qwen/Qwen2.5-VL-3B-Instruct, but what I really want to deploy is Qwen/Qwen3-VL-8B-Instruct.

Thanks for your reply.

Hi,

Please note that the GPU is only active while an inference task is running.

This means the GPU can be idle right after vllm serve ... starts, because the server is waiting for a request.

Based on your log, both CPU and GPU usage are very low, so there might not be a job running.

Please capture the utilization while running the curl command instead.
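One way to capture utilization during a request is to sample in the background while the curl command runs. The sketch below assumes that nvidia-smi --query-gpu=utilization.gpu is supported on this platform; if it is not, another sampler such as tegrastats could be substituted. The names gpu_sample_once, sample_during, and GPU_SAMPLER are hypothetical and introduced only for this example.

```shell
# Print one GPU utilization sample (assumes this nvidia-smi query works here).
gpu_sample_once() {
    nvidia-smi --query-gpu=utilization.gpu --format=csv,noheader
}

# Run a command while a sampler appends one reading per second to LOGFILE.
# Usage: sample_during LOGFILE COMMAND [ARGS...]
# Set GPU_SAMPLER to the name of another command or function to replace
# the default sampler.
sample_during() {
    sd_log=$1
    shift
    # Background loop: take a sample, wait one second, repeat.
    while :; do
        "${GPU_SAMPLER:-gpu_sample_once}"
        sleep 1
    done > "$sd_log" 2>&1 &
    sd_pid=$!
    # Foreground workload, for example the curl request.
    "$@"
    sd_status=$?
    # Stop the sampling loop and collect it.
    kill "$sd_pid" 2>/dev/null
    wait "$sd_pid" 2>/dev/null
    return "$sd_status"
}

# Example (request.json is a hypothetical file holding the JSON body):
#   sample_during gpu.log curl http://0.0.0.0:9000/v1/chat/completions \
#       -H "Content-Type: application/json" -d @request.json
```

The log then holds the readings taken while the request was in flight, which is the window that matters for judging whether the GPU is used.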

vLLM added Qwen3-VL support in 0.11.0, but our latest container for Thor ships v0.10.1.1:

Please wait for a future container release to run Qwen3-VL.
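To check whether a given container is new enough, the installed vLLM version can be compared against 0.11.0. This is a rough sketch that assumes GNU sort with the -V (version sort) option and a python3 that can import vllm. The function names are illustrative, and a version string with a vendor suffix may need trimming before comparison.

```shell
# Succeed (exit 0) when version $1 is greater than or equal to version $2.
# Uses `sort -V`, which orders dotted version strings numerically.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Print the vLLM version installed in the current Python environment.
installed_vllm_version() {
    python3 -c 'import vllm; print(vllm.__version__)'
}

# Report whether the given vLLM version meets the 0.11.0 minimum
# for Qwen3-VL quoted in this thread.
check_qwen3_vl_support() {
    if version_ge "$1" "0.11.0"; then
        echo "vLLM $1: Qwen3-VL should be supported"
    else
        echo "vLLM $1: too old for Qwen3-VL (needs 0.11.0 or later)"
    fi
}

# Example (inside the container):
#   check_qwen3_vl_support "$(installed_vllm_version)"
```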

Thanks.
