• Hardware Platform (Jetson / GPU): Jetson Orin Nano

• JetPack Version: JetPack 6.1 (r36.4.0), JetPack 6.2 (r36.4.3)

• Issue Type( questions, new requirements, bugs): bug

We are experiencing a significant kernel memory leak when using Argus cameras on the platform detailed above.

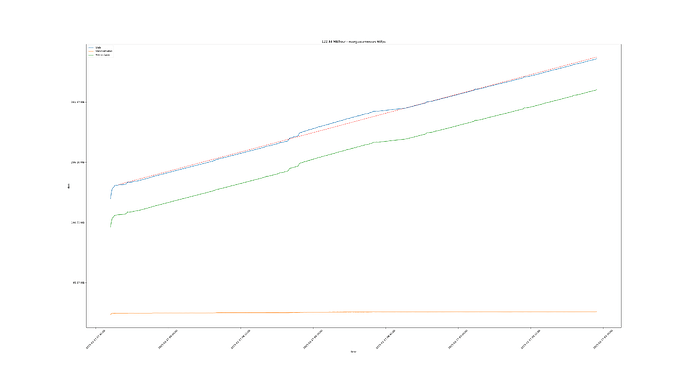

Its rate of growth appears to be dependent on frame rate:

- 122 MB per hour at 60 fps

- 27 MB per hour at 15 fps

which severely limits the uptime of applications using the camera on a 4GB Orin Nano.
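As a rough sanity check, both rates work out to a similar amount per frame, a little over two 256-byte objects (the object size kmemleak reports further down). This is only a sketch of the arithmetic, assuming "MB" means MiB and that the leak grows linearly with the number of frames; `bytes_per_frame` is just an illustrative helper name:

```python
MIB = 1024 * 1024  # assumption: the "MB" figures above are MiB


def bytes_per_frame(mb_per_hour: float, fps: float) -> float:
    """Average number of bytes leaked per captured frame."""
    frames_per_hour = fps * 3600
    return mb_per_hour * MIB / frames_per_hour


# The two measurements quoted above: (MB per hour, frames per second)
for rate, fps in [(122, 60), (27, 15)]:
    per_frame = bytes_per_frame(rate, fps)
    print(f"{fps} fps: {per_frame:.0f} B/frame, "
          f"~{per_frame / 256:.1f} objects of 256 bytes")
```

The two results being close to each other is consistent with a fixed per-frame leak rather than a time-based one, although two data points are not enough to be sure.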

This can be reproduced with any minimal example consuming images from the camera, for instance:

gst-launch-1.0 nvarguscamerasrc ! fakesink

and observing the Slab memory stats with either /proc/meminfo or slabtop.
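For anyone who wants to measure the growth rate rather than eyeball it, here is a minimal sketch that samples the slab counters from /proc/meminfo while the pipeline runs. The function names, the 60 second interval and the sample count are arbitrary choices of mine, not anything provided by JetPack:

```python
import time


def read_slab_kb(path="/proc/meminfo"):
    """Return Slab, SReclaimable and SUnreclaim (in kB) from a meminfo-style file."""
    wanted = {"Slab", "SReclaimable", "SUnreclaim"}
    stats = {}
    with open(path) as f:
        for line in f:
            key, _, rest = line.partition(":")
            if key in wanted:
                stats[key] = int(rest.split()[0])  # first field is the value in kB
    return stats


def growth_kb_per_hour(first_kb, last_kb, elapsed_s):
    """Extrapolate the growth between two samples to an hourly rate."""
    return (last_kb - first_kb) * 3600 / elapsed_s


def monitor(interval_s=60, samples=10):
    """Print SUnreclaim and its average growth rate since the first sample."""
    start = time.monotonic()
    first = read_slab_kb()["SUnreclaim"]
    for _ in range(samples):
        time.sleep(interval_s)
        current = read_slab_kb()["SUnreclaim"]
        elapsed = time.monotonic() - start
        rate = growth_kb_per_hour(first, current, elapsed)
        print(f"SUnreclaim: {current} kB ({rate:+.0f} kB/hour)")
```

Calling `monitor()` in a second terminal while the gst-launch-1.0 pipeline is running should show SUnreclaim climbing steadily if the leak is present.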

The leak seems to be memory allocated once per frame from the kmalloc-256 cache via kmem_cache_alloc_trace, as reported by the kmemleak tool:

unreferenced object 0xffff0000e55b3400 (size 256):

comm "EglStrmComm*321", pid 2013, jiffies 4295303604 (age 966.836s)

hex dump (first 32 bytes):

80 0a c0 8f 00 00 ff ff 18 4e e7 d6 bc bc ff ff .........N......

10 34 5b e5 00 00 ff ff 10 34 5b e5 00 00 ff ff .4[......4[.....

backtrace:

[<0000000016651fb6>] kmem_cache_alloc_trace+0x2bc/0x3c4

[<000000001eda80e9>] host1x_fence_create+0x44/0xe0 [host1x]

[<000000003b75d1b3>] 0xffffbcbcd6fba854

[<00000000b3180eef>] __arm64_sys_ioctl+0xb4/0x100

[<0000000060416e59>] invoke_syscall+0x5c/0x130

[<0000000079086437>] el0_svc_common.constprop.0+0x64/0x110

[<0000000060aeb6be>] do_el0_svc+0x74/0xa0

[<000000007995a0f6>] el0_svc+0x28/0x80

[<0000000056dff231>] el0t_64_sync_handler+0xa4/0x130

[<0000000083dd126d>] el0t_64_sync+0x1a4/0x1a8
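Since kmemleak reports thousands of these objects, it helps to group them instead of reading them one by one. Below is a small sketch that counts objects per size and allocation site. The "allocation site" is a heuristic of mine (the first backtrace frame that is not one of the generic allocator functions), and `summarize` is a hypothetical helper name:

```python
import re
from collections import Counter

HEADER = re.compile(r"unreferenced object 0x[0-9a-f]+ \(size (\d+)\)")
FRAME = re.compile(r"\[<[0-9a-f]+>\]\s+(\S+)")
# Heuristic: frames starting with these prefixes are treated as allocator internals.
ALLOCATOR_PREFIXES = ("kmem_cache_", "kmalloc", "__kmalloc")


def summarize(report: str) -> Counter:
    """Count leaked objects per (size, first non-allocator backtrace frame)."""
    counts = Counter()
    size = None  # size of the object currently being parsed, if any
    for line in report.splitlines():
        header = HEADER.search(line)
        if header:
            size = int(header.group(1))
            continue
        frame = FRAME.search(line)
        if frame and size is not None:
            symbol = frame.group(1).split("+")[0]  # drop the +offset/length part
            if not symbol.startswith(ALLOCATOR_PREFIXES):
                counts[(size, symbol)] += 1
                size = None  # ignore the remaining frames of this object
    return counts
```

With kmemleak enabled in the kernel, the report can be read as root from /sys/kernel/debug/kmemleak and passed to `summarize` as a string; `summarize(text).most_common(5)` then lists the most frequent allocation sites.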

Furthermore, /proc/meminfo shows that this leaked memory is unreclaimable, which, as far as I understand it, means it can only be freed by rebooting the device:

Slab: 460280 kB

SReclaimable: 50348 kB

SUnreclaim: 409932 kB

This problem might be related to (or the same as) the issue reported in "Kernel level memory leak 36.3 nvarguscamerasrc", however:

- That issue mentions an older version of JetPack

- No update on that issue has been given since October 2024

Is there any known workaround, or progress on resolving this issue?