By managed memory I was referring to what is described here:

http://docs.nvidia.com/cuda/cuda-c-programming-guide/index.html#um-unified-memory-programming-hd

I don’t think you are using it.

You haven’t actually provided a test case, so I created one: essentially the CUDA sample code vectorAdd, modified to run in a loop 1000 times.

I was able to reproduce the slower execution time in a multi-process/multi-GPU setting. A single instance of the app took about 2.8 seconds to run (1000 loops). With 4 instances running (each targeting a separate GPU), each instance took about 4 seconds.

For my test I only ran one instance on each GPU using taskset to select separate CPU cores for each instance, and also to manage CPU/GPU affinity.

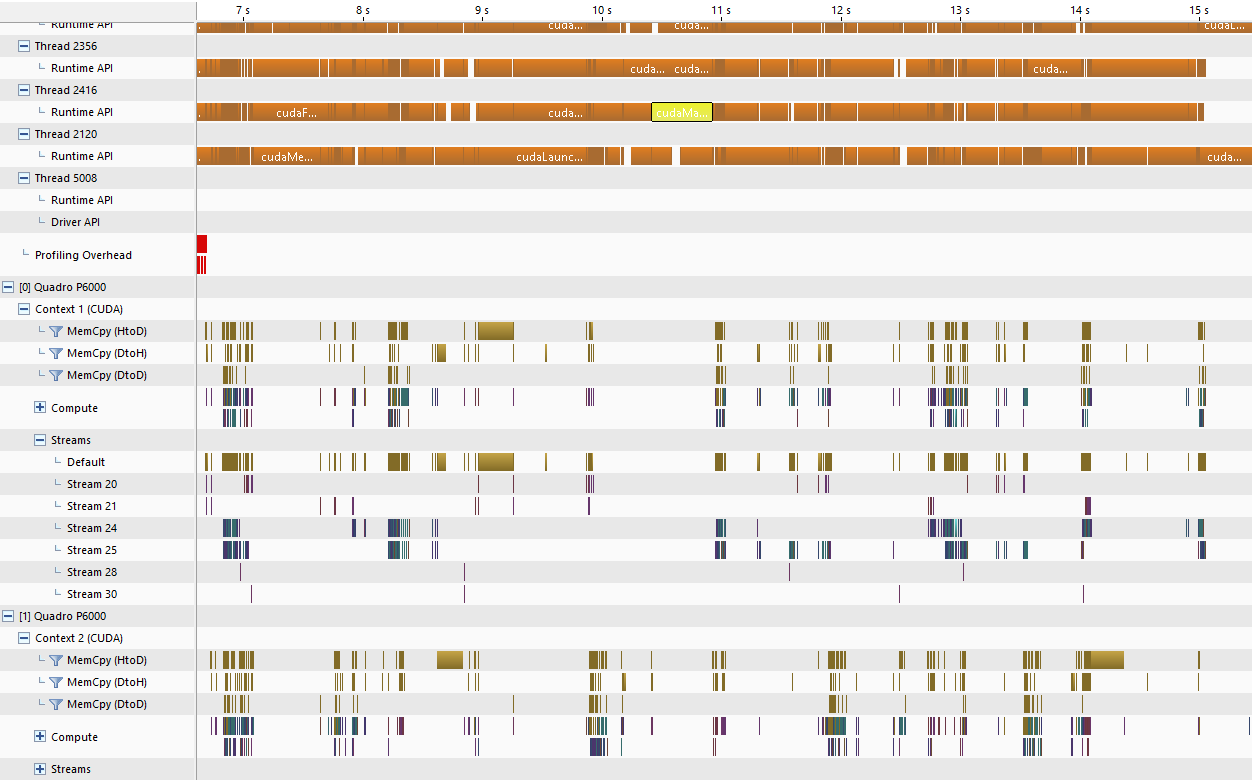

According to my observation via nvprof, the cudaMemcpy times were not worse (although I did not test pinned memory, nor did I test multiple processes per GPU). The main differences in runtime API behavior were in cudaMalloc and cudaFree. I haven’t done the necessary arithmetic to prove that these differences account for nearly all of the runtime difference, but eyeballing the data (below) suggests that may be the case, for my test case.

At the moment I cannot explain this, so I’ve filed a (performance) bug. I don’t have any further information at this time, and can’t speculate about when it may be looked at or make any forward-looking statements. These statements do not acknowledge a defect; at this time the bug is simply for tracking purposes. Although I can’t discuss details, it’s entirely possible that driver/GPU management dictates some sort of contention in such a scenario, although there is presumably always room for engineered improvement. The GPU driver is a single point of access (a single entity) for all GPUs in the system, so you can draw your own conclusions.

Here’s an example of my nvprof output. The first file is a single instance of the application, running on CPU socket 0 attached to GPU 0. (The GPU is a K80, the CPU is a 16-core Haswell CPU (dual socket), the OS is CentOS 6.6, with CUDA 7.5 and driver 352.68.) The second file is the same app running on the same GPU and CPU socket, but with 3 other instances of the program running, all 3 on CPU socket 1, talking to 3 other K80 GPUs (this node has 4 K80s, 8 devices total, one K80 on socket 0, 3 on socket 1):

$ cat t911.nvprof

==17927== NVPROF is profiling process 17927, command: ./t911 1000

==17927== Profiling application: ./t911 1000

==17927== Profiling result:

Time(%) Time Calls Avg Min Max Name

64.21% 66.303ms 2000 33.151us 29.855us 36.544us [CUDA memcpy HtoD]

30.42% 31.410ms 1000 31.409us 29.663us 33.119us [CUDA memcpy DtoH]

5.37% 5.5454ms 1000 5.5450us 5.0870us 6.0160us vectorAdd(float const *, float const *, float*, int)

==17927== API calls:

Time(%) Time Calls Avg Min Max Name

45.82% 468.49ms 3000 156.16us 3.1140us 235.27ms cudaMalloc

34.71% 354.91ms 3000 118.30us 3.4700us 826.99us cudaFree

18.00% 184.02ms 3000 61.339us 25.650us 188.76us cudaMemcpy

1.17% 12.008ms 1000 12.007us 11.207us 35.353us cudaLaunch

0.12% 1.2672ms 83 15.267us 97ns 570.40us cuDeviceGetAttribute

0.07% 701.96us 4000 175ns 125ns 2.5060us cudaSetupArgument

0.05% 513.99us 1000 513ns 431ns 2.9890us cudaConfigureCall

0.02% 247.69us 1000 247ns 185ns 4.2240us cudaGetLastError

0.01% 126.40us 1 126.40us 126.40us 126.40us cuDeviceTotalMem

0.01% 112.51us 1 112.51us 112.51us 112.51us cuDeviceGetName

0.00% 1.8670us 2 933ns 276ns 1.5910us cuDeviceGetCount

0.00% 480ns 2 240ns 174ns 306ns cuDeviceGet

$

$ cat t911_0.nvprof

==18445== NVPROF is profiling process 18445, command: ./t911 1000

==18445== Profiling application: ./t911 1000

==18445== Profiling result:

Time(%) Time Calls Avg Min Max Name

63.31% 60.864ms 2000 30.432us 29.887us 37.728us [CUDA memcpy HtoD]

30.93% 29.735ms 1000 29.734us 29.663us 35.391us [CUDA memcpy DtoH]

5.76% 5.5410ms 1000 5.5400us 5.0880us 6.0790us vectorAdd(float const *, float const *, float*, int)

==18445== API calls:

Time(%) Time Calls Avg Min Max Name

63.25% 1.26059s 3000 420.20us 3.2020us 898.11ms cudaMalloc

26.66% 531.33ms 3000 177.11us 3.4630us 1.2056ms cudaFree

9.04% 180.09ms 3000 60.029us 25.181us 174.36us cudaMemcpy

0.64% 12.681ms 1000 12.680us 11.110us 35.877us cudaLaunch

0.28% 5.6198ms 83 67.708us 101ns 2.5559ms cuDeviceGetAttribute

0.04% 783.42us 4000 195ns 122ns 11.902us cudaSetupArgument

0.03% 626.19us 1 626.19us 626.19us 626.19us cuDeviceTotalMem

0.03% 574.05us 1 574.05us 574.05us 574.05us cuDeviceGetName

0.03% 523.34us 1000 523ns 412ns 1.3630us cudaConfigureCall

0.01% 279.12us 1000 279ns 201ns 958ns cudaGetLastError

0.00% 8.2310us 2 4.1150us 347ns 7.8840us cuDeviceGetCount

0.00% 450ns 2 225ns 220ns 230ns cuDeviceGet

$
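As a rough check on the eyeballed claim, the total-time column of the two "API calls" tables above can be differenced directly (multi-instance run minus single-instance run). This is only a sketch: it uses a single profile of each case, and the wall-clock figures quoted earlier (about 2.8 s and about 4 s) are approximate.

```latex
\begin{align*}
\Delta_{\text{cudaMalloc}} &= 1260.59\,\text{ms} - 468.49\,\text{ms} = 792.10\,\text{ms} \\
\Delta_{\text{cudaFree}}   &= 531.33\,\text{ms} - 354.91\,\text{ms} = 176.42\,\text{ms} \\
\Delta_{\text{cudaMemcpy}} &= 180.09\,\text{ms} - 184.02\,\text{ms} = -3.93\,\text{ms} \\
\Delta_{\text{cudaMalloc}} + \Delta_{\text{cudaFree}} &= 792.10\,\text{ms} + 176.42\,\text{ms} = 968.52\,\text{ms}
\end{align*}
```

On these numbers, cudaMalloc and cudaFree together grow by roughly 0.97 s against a wall-clock gap of roughly 1.2 s, while cudaMemcpy is essentially unchanged.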

It’s evident that the worst offenders are cudaMalloc and cudaFree, and it’s also evident that the cudaMemcpy operations don’t (in my test case) suffer any degradation. Here’s the nvidia-smi output from my node and the test script I used:

$ nvidia-smi

Fri Mar 18 18:20:23 2016

+------------------------------------------------------+

| NVIDIA-SMI 352.68 Driver Version: 352.68 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

|===============================+======================+======================|

| 0 Tesla K80 On | 0000:04:00.0 Off | 0 |

| N/A 33C P8 28W / 175W | 23MiB / 11519MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

| 1 Tesla K80 On | 0000:05:00.0 Off | 0 |

| N/A 29C P8 43W / 175W | 23MiB / 11519MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

| 2 Tesla K80 On | 0000:84:00.0 Off | 0 |

| N/A 37C P8 28W / 175W | 23MiB / 11519MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

| 3 Tesla K80 On | 0000:85:00.0 Off | 0 |

| N/A 31C P8 42W / 175W | 23MiB / 11519MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

| 4 Tesla K80 On | 0000:8A:00.0 Off | 0 |

| N/A 29C P8 28W / 175W | 23MiB / 11519MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

| 5 Tesla K80 On | 0000:8B:00.0 Off | 0 |

| N/A 35C P8 40W / 175W | 23MiB / 11519MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

| 6 Tesla K80 On | 0000:8E:00.0 Off | 0 |

| N/A 30C P8 28W / 175W | 23MiB / 11519MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

| 7 Tesla K80 On | 0000:8F:00.0 Off | 0 |

| N/A 41C P8 41W / 175W | 23MiB / 11519MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

$ cat t911.run

#!/bin/bash

CUDA_VISIBLE_DEVICES="0" taskset -c 0 nvprof --log-file t911_%q{CUDA_VISIBLE_DEVICES}.nvprof ./t911 1000 &

CUDA_VISIBLE_DEVICES="2" taskset -c 16 nvprof --log-file t911_%q{CUDA_VISIBLE_DEVICES}.nvprof ./t911 1000 &

CUDA_VISIBLE_DEVICES="4" taskset -c 17 nvprof --log-file t911_%q{CUDA_VISIBLE_DEVICES}.nvprof ./t911 1000 &

CUDA_VISIBLE_DEVICES="6" taskset -c 18 nvprof --log-file t911_%q{CUDA_VISIBLE_DEVICES}.nvprof ./t911 1000 &

Your intuition about a workaround seems valid to me. In general, even apart from this issue, we want to avoid, as much as possible, cudaMalloc and cudaFree in performance-sensitive areas of the code. Ideally, do the minimum number of cudaMalloc operations once, at the beginning of your code. Reusing allocations is beneficial in this regard. Reusing pinned allocations is especially recommended, as the pinning process approximately doubles the allocation time cost.
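To illustrate the reuse idea, here is a minimal sketch of a vectorAdd-style loop with the device allocations hoisted out of the loop. It is not the t911 test code itself: the helper name reuseLoop, the element count of 50000, and the block size of 256 are assumptions made for illustration.

```cuda
#include <cmath>
#include <cstdio>
#include <cstdlib>
#include <vector>
#include <cuda_runtime.h>

// Abort with a message if a CUDA runtime call fails.
#define CHECK(call)                                                  \
  do {                                                               \
    cudaError_t err_ = (call);                                       \
    if (err_ != cudaSuccess) {                                       \
      fprintf(stderr, "%s:%d: %s\n", __FILE__, __LINE__,             \
              cudaGetErrorString(err_));                             \
      exit(EXIT_FAILURE);                                            \
    }                                                                \
  } while (0)

__global__ void vectorAdd(const float *a, const float *b, float *c, int n) {
  int i = blockDim.x * blockIdx.x + threadIdx.x;
  if (i < n) c[i] = a[i] + b[i];
}

// Hypothetical helper: runs `loops` iterations of copy-in, add, copy-out
// on n elements and returns the largest absolute error in the last result.
float reuseLoop(int loops, int n) {
  const size_t bytes = static_cast<size_t>(n) * sizeof(float);
  std::vector<float> h_a(n, 1.0f), h_b(n, 2.0f), h_c(n, 0.0f);

  // Allocate the device buffers once, before the loop.
  float *d_a = nullptr, *d_b = nullptr, *d_c = nullptr;
  CHECK(cudaMalloc(&d_a, bytes));
  CHECK(cudaMalloc(&d_b, bytes));
  CHECK(cudaMalloc(&d_c, bytes));

  const int threads = 256;  // assumed block size
  const int blocks = (n + threads - 1) / threads;

  for (int iter = 0; iter < loops; ++iter) {
    // Only copies and the kernel launch remain inside the loop.
    CHECK(cudaMemcpy(d_a, h_a.data(), bytes, cudaMemcpyHostToDevice));
    CHECK(cudaMemcpy(d_b, h_b.data(), bytes, cudaMemcpyHostToDevice));
    vectorAdd<<<blocks, threads>>>(d_a, d_b, d_c, n);
    CHECK(cudaGetLastError());
    CHECK(cudaMemcpy(h_c.data(), d_c, bytes, cudaMemcpyDeviceToHost));
  }

  // Free once, after the loop.
  CHECK(cudaFree(d_a));
  CHECK(cudaFree(d_b));
  CHECK(cudaFree(d_c));

  float maxErr = 0.0f;
  for (int i = 0; i < n; ++i) maxErr = fmaxf(maxErr, fabsf(h_c[i] - 3.0f));
  return maxErr;
}

int main(int argc, char **argv) {
  const int loops = (argc > 1) ? atoi(argv[1]) : 1000;
  const float maxErr = reuseLoop(loops, 50000);  // 50000 elements is an assumption
  printf("loops = %d, max error = %f\n", loops, maxErr);
  return maxErr == 0.0f ? EXIT_SUCCESS : EXIT_FAILURE;
}
```

With this structure the loop issues 3 cudaMalloc and 3 cudaFree calls in total rather than 3 of each per iteration. Pinned host buffers obtained from cudaMallocHost could be hoisted out of the loop in the same way.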