Please provide the following info (check/uncheck the boxes after clicking “+ Create Topic”):

Software Version

[ x] DRIVE OS Linux 5.2.0

DRIVE OS Linux 5.2.0 and DriveWorks 3.5

NVIDIA DRIVE™ Software 10.0 (Linux)

NVIDIA DRIVE™ Software 9.0 (Linux)

other DRIVE OS version

other

Target Operating System

Linux

QNX

other

Hardware Platform

NVIDIA DRIVE™ AGX Xavier DevKit (E3550)

NVIDIA DRIVE™ AGX Pegasus DevKit (E3550)

other

SDK Manager Version

1.6.0.8170

1.5.1.7815

1.5.0.7774

other

Host Machine Version

native Ubuntu 18.04

other

Hello, I had profiled our application with two approaches: multiple process + multithreaded vs single process with multithreading on Pegasus, the performance difference is huge.

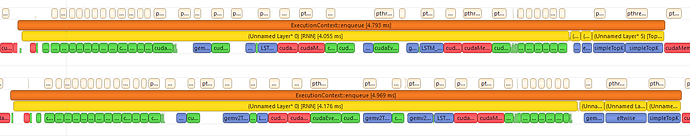

There are 7 threads with tensorRT, each one with a separate execute context, a separate cuda stream. The single thread gpu time (including async memory transfer and kernel launch) is all around 20ms. one of them are trivial and not included here.

With multiple process, we had 1,3,1,1 threads in 5 processes, the execution time is 59, (95, 95, 119), 57, 57ms. However if we put all of them on a single process, the time would be 190, (112, 112, 124), 190, 190ms.

I read many doc about the best practice and also did some simple tests on multithreading. The multithreading concurrent is quite unpredictable, sometimes it has very good concurrent overlap (with <=3 threads), sometimes no at all (even with 2 threads), sometimes could be worse than serialization.

We are not using MPS since it is not available on pegasus (not sure about it). Per my understanding, the multiprocess cannot share the GPU and it uses context switching. Multithreading using separate stream share the GPU, but it seems that the sharing is very poor. My simple test program with Nsight nsys shows that with 4 or 8 threads the overlap takes quite a bit time and also it all depends (you cannot guarantee similar overlaps for the same code).

I also tested limiting the number of threads accessing the GPU at the same time, so far the best is limiting 1 thread a time, which yield about 110ms for each threads, which is still worse than the multiprocess approach)

So my question is: how to improve the multithreading performance? What is the best practice anyway? Why sharing the GPU is worse than not sharing? As stated in Nvidia document, multistream sharing is automatic and avoids event synchronization and could be better, but in most cases, it is on the contrary side.

Any input? Thanks