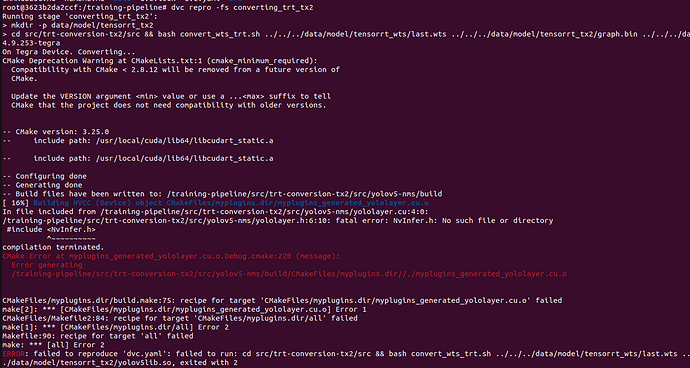

I am using l4t-base:r32.6.1 to build a Docker image. The NVIDIA container runtime should mount all the necessary CUDA and TensorRT files from the host machine into the image, but the mount fails, which causes build errors in my code.

`NvInfer.h` exists on my host machine. Running `sudo find / -name NvInfer.h` on the host returns:

/usr/include/aarch64-linux-gnu/NvInfer.h

Running `find / -name NvInfer.h` inside the Docker image returns nothing.

The mapping files in `/etc/nvidia-container-runtime/host-files-for-container.d` are `cuda.csv`, `cudnn.csv`, `l4t.csv`, and `tensorrt.csv`. Here is the output of `cat /etc/nvidia-container-runtime/host-files-for-container.d/tensorrt.csv`:

lib, /usr/lib/aarch64-linux-gnu/libnvinfer.so.8.0.1

lib, /usr/lib/aarch64-linux-gnu/libnvinfer_plugin.so.8.0.1

lib, /usr/lib/aarch64-linux-gnu/libnvparsers.so.8.0.1

lib, /usr/lib/aarch64-linux-gnu/libnvonnxparser.so.8.0.1

sym, /usr/lib/aarch64-linux-gnu/libnvinfer.so.8

sym, /usr/lib/aarch64-linux-gnu/libnvinfer_plugin.so.8

sym, /usr/lib/aarch64-linux-gnu/libnvparsers.so.8

sym, /usr/lib/aarch64-linux-gnu/libnvonnxparser.so.8

sym, /usr/lib/aarch64-linux-gnu/libnvinfer.so

sym, /usr/lib/aarch64-linux-gnu/libnvinfer_plugin.so

sym, /usr/lib/aarch64-linux-gnu/libnvparsers.so

sym, /usr/lib/aarch64-linux-gnu/libnvonnxparser.so

lib, /usr/include/aarch64-linux-gnu/NvInfer.h

lib, /usr/include/aarch64-linux-gnu/NvInferRuntime.h

lib, /usr/include/aarch64-linux-gnu/NvInferRuntimeCommon.h

lib, /usr/include/aarch64-linux-gnu/NvInferVersion.h

lib, /usr/include/aarch64-linux-gnu/NvInferImpl.h

lib, /usr/include/aarch64-linux-gnu/NvInferLegacyDims.h

lib, /usr/include/aarch64-linux-gnu/NvUtils.h

lib, /usr/include/aarch64-linux-gnu/NvInferPlugin.h

lib, /usr/include/aarch64-linux-gnu/NvInferPluginUtils.h

lib, /usr/include/aarch64-linux-gnu/NvCaffeParser.h

lib, /usr/include/aarch64-linux-gnu/NvUffParser.h

lib, /usr/include/aarch64-linux-gnu/NvOnnxConfig.h

lib, /usr/include/aarch64-linux-gnu/NvOnnxParser.h

dir, /usr/lib/python3.6/dist-packages/tensorrt

dir, /usr/lib/python3.6/dist-packages/graphsurgeon

dir, /usr/lib/python3.6/dist-packages/uff

dir, /usr/lib/python3.6/dist-packages/onnx_graphsurgeon

dir, /usr/src/tensorrt
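
To see exactly which of these entries are missing, rather than checking only `NvInfer.h`, a small script can parse the CSV and test each path. This is a minimal sketch, assuming every non-blank line has the form `<type>, <path>` as in the `tensorrt.csv` listing above; the script itself is hypothetical and not part of any NVIDIA tool.

```python
"""Report which entries of a host-files-for-container.d CSV exist here.

Assumption: each non-blank line looks like "<type>, <path>", where <type>
is lib, sym or dir (as in tensorrt.csv). Lines starting with '#' are skipped.
"""
import os
import sys
from typing import Callable, List, Tuple

DEFAULT_CSV = "/etc/nvidia-container-runtime/host-files-for-container.d/tensorrt.csv"


def parse_mount_csv(text: str) -> List[Tuple[str, str]]:
    """Return (type, path) pairs, skipping blank lines and '#' comments."""
    entries = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        kind, _, path = line.partition(",")
        entries.append((kind.strip(), path.strip()))
    return entries


def missing_entries(entries: List[Tuple[str, str]],
                    exists: Callable[[str], bool] = os.path.lexists):
    """Return entries whose path is absent (lexists also accepts broken symlinks)."""
    return [(kind, path) for kind, path in entries if not exists(path)]


def main(argv: List[str]) -> int:
    csv_path = argv[1] if len(argv) > 1 else DEFAULT_CSV
    with open(csv_path) as f:
        entries = parse_mount_csv(f.read())
    missing = missing_entries(entries)
    for kind, path in missing:
        print(f"MISSING {kind:4} {path}")
    print(f"{len(entries) - len(missing)}/{len(entries)} entries present")
    return 1 if missing else 0  # non-zero exit so a Dockerfile RUN step fails


if __name__ == "__main__":
    sys.exit(main(sys.argv))
```

Since the CSV lives on the host, one option is to copy `tensorrt.csv` into the build context and run the script both on the host and from a `RUN` step in the Dockerfile; comparing the two outputs shows whether the mounts are applied during the build at all.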

According to `mount_plugins.md` on the `jetson` branch of NVIDIA/libnvidia-container on GitHub, the runtime should mount all of these files from the host into the container, but they are still missing.
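
One thing that may be worth checking, assuming these CSV mounts are applied only to containers created with the `nvidia` runtime: `docker build` uses the Docker daemon's default runtime, which is `runc` unless `"default-runtime"` is set in `/etc/docker/daemon.json`. The sketch below (a hypothetical helper, not an NVIDIA tool) reads that file and reports the configured default.

```python
"""Report Docker's configured default runtime from daemon.json.

Assumption: when "default-runtime" is absent, Docker uses runc, so
`docker build` steps would not get the nvidia-container-runtime mounts.
"""
import json
import sys

DAEMON_JSON = "/etc/docker/daemon.json"


def default_runtime(config: dict) -> str:
    """Return the configured default runtime, falling back to runc."""
    return config.get("default-runtime", "runc")


def main(path: str = DAEMON_JSON) -> int:
    try:
        with open(path) as f:
            config = json.load(f)
    except FileNotFoundError:
        config = {}  # no daemon.json means Docker's built-in defaults apply
    runtime = default_runtime(config)
    registered = sorted(config.get("runtimes", {}))
    print(f"default runtime: {runtime}; registered runtimes: {registered}")
    return 0 if runtime == "nvidia" else 1


if __name__ == "__main__":
    sys.exit(main(*sys.argv[1:2]))
```

If it reports `runc`, adding `"default-runtime": "nvidia"` alongside the existing `"runtimes"` entry in `daemon.json` and restarting the daemon (`sudo systemctl restart docker`) is a change that may make the files visible during `docker build`; for `docker run`, passing `--runtime nvidia` has the same effect per container.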

Does anyone know how to solve this problem?