I am developing with the OptiX 6.5 SDK in Visual Studio 2017 with CUDA 10. My machine has an i5 6500 CPU and an RTX 2060 Super, running at 1080p resolution.

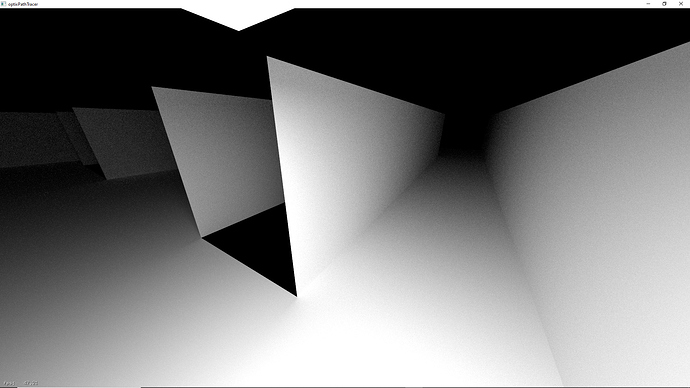

Running the Path Tracing demo, I saw that it was consuming around 400 megabytes of RAM, which seems odd considering how simple the scene geometry is. When I scaled the window to full screen, the frame rate more than halved, from 60 to <30 fps, while I was looking directly into the scene (as opposed to the void). The same large memory usage and slowdown happened with the Whitted demo.

I checked my RTX card's GPU utilization through Task Manager, and it was barely peaking at 3% usage. After installing Nsight, I ran a profiler and saw that none of the demos were using any GPU at all. CPU usage was really high during those demos.

All of the demos I tested are barely modified, and the same performance issue happens with the precompiled samples.

What could be the issue? Is the GPU tripping, or is the work somehow being assigned to the CPU? (Or does OptiX have that much RAM overhead, and is my RTX card that bad?)

The overall GPU load in Task Manager might not show the compute workload by default.

Please select Task Manager's Performance tab, click on the GPU icon to show the individual engines, and change one of the graphs to CUDA.

That should run at over 90% load when running the optixPathTracer. The 3D load inside the other graph there is the OpenGL texture blit of the rendered image.

It is normal that the OptiX 6.5.0 optixPathTracer example only runs at around 60 fps at its default window size and much slower in full screen. It implements an iterative global illumination path tracer which shoots multiple paths per pixel at once. That's expensive.
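To get a feel for why full screen is so much slower, here is a rough back-of-the-envelope sketch of how many camera paths one launch starts. The 512x512 default window size and the default sqrt_num_samples of 2 are from my memory of the SDK sample and should be treated as assumptions; check them in your copy. paths_per_launch is a hypothetical helper, not part of OptiX.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper, not an OptiX function.
// Counts the camera paths started by one launch, assuming the ray generation
// program traces sqrt_num_samples * sqrt_num_samples paths for every pixel.
std::uint64_t paths_per_launch(std::uint32_t width,
                               std::uint32_t height,
                               std::uint32_t sqrt_num_samples)
{
    assert(width > 0 && height > 0 && sqrt_num_samples > 0);
    const std::uint64_t pixels = static_cast<std::uint64_t>(width) * height;
    const std::uint64_t paths_per_pixel =
        static_cast<std::uint64_t>(sqrt_num_samples) * sqrt_num_samples;
    return pixels * paths_per_pixel;
}
```

With those assumed defaults, a 512x512 window starts about 1.05 million paths per launch, while 1920x1080 starts about 8.3 million, roughly 7.9 times as many, and each of those paths can bounce several times.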

The final display in the OptiX SDK examples is normally done with an OpenGL texture blit to the back buffer and a swap buffers call. By default that swap is synchronized to the monitor refresh rate, which gives a maximum of 60 fps if your monitor is running at 60 Hz.
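That synchronization can also make frame rate drops look more drastic than the actual change in render time. A minimal sketch, assuming strict double-buffered vsync with no adaptive sync or triple buffering (vsynced_fps is a hypothetical helper, and real drivers may behave differently):

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper: displayed frame rate when every buffer swap has to
// wait for the next monitor refresh (assumed strict double-buffered vsync).
double vsynced_fps(double render_ms, double refresh_hz)
{
    assert(render_ms > 0.0 && refresh_hz > 0.0);
    const double interval_ms = 1000.0 / refresh_hz;
    // A frame occupies a whole number of refresh intervals, rounded up.
    const double intervals = std::ceil(render_ms / interval_ms);
    return refresh_hz / intervals;
}
```

Under that assumption, a frame taking 5 ms shows up as 60 fps on a 60 Hz monitor, but a frame taking 20 ms shows up as 30 fps rather than 50, because the swap has to wait for the next refresh.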

For benchmarking it’s recommended to disable the vertical sync inside the NVIDIA Control Panel.

Right-click on the desktop, select NVIDIA Control Panel, go to 3D Settings → Manage 3D Settings → Settings → Vertical Sync and change the value to Off.

Then run the simple OptiX examples like the optixMeshViewer again. In a small window that should run well in the hundreds of frames per second. (On my Quadro RTX 6000 that runs at >650 fps at default window size.)

Memory usage on the device depends on what CUDA allocates for the CUDA context alone, plus all OptiX buffers, textures, acceleration structures, shader programs, etc.

Performance analyses with Nsight need to happen with the standalone programs Nsight Systems for the overall application behaviour and Nsight Compute for the individual CUDA device kernels you programmed in OptiX. (Nsight Graphics won’t help with CUDA compute workloads.)

Use the latest Nsight versions and display drivers and make sure your PTX code was compiled with --generate-lineinfo (-lineinfo) to be able to match the CUDA source code to the PTX and SASS assembly.

When starting new projects with OptiX, I would recommend using OptiX 7.0.0, which has a completely different, much more modern host API and is generally faster. Everything around the actual OptiX calls is handled in native CUDA Runtime or Driver API calls, which gives you much better control and flexibility.

OptiX 7 based examples implementing rather fast and flexible unidirectional path tracers can be found here.

https://forums.developer.nvidia.com/t/optix-advanced-samples-on-github/48410

They should generally be more interactive than the OptiX 6.5.0 optixPathTracer example.

This isn’t exactly on topic, but I just realized that the user-defined CUDA programs do everything, including bounding boxes and intersections. This made me wonder: what do the RT cores actually do? It seems like there isn’t any ASIC acceleration.

In OptiX 6 and newer the BVH traversal will always use the RT core hardware on RTX boards.

The additional hardware triangle intersection is used for the built-in triangle primitives only.

These do not have a developer-defined bounding box or intersection program as they are built-in.

Only custom geometric primitives have developer-defined bounding box and intersection programs.

The bounding box program is only called during acceleration structure builds; the intersection program is called during BVH traversal when the ray hits an AABB.

OptiX 7 doesn’t have bounding box programs at all. It’s your responsibility to calculate the AABB input for custom primitives, normally with a native CUDA kernel for performance reasons.
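For illustration, a minimal sketch of computing such AABBs for spheres. It runs on the host to keep it self-contained, whereas a real application would normally do this in a CUDA kernel as mentioned above. Aabb is a locally defined stand-in that follows the six-float min/max layout of OptixAabb as I understand it; Sphere and compute_sphere_aabbs are hypothetical names.

```cpp
#include <cassert>
#include <vector>

// Local stand-in (assumption): six floats, minimum corner first, then maximum
// corner. Defined here so the sketch compiles without the OptiX headers.
struct Aabb
{
    float minX, minY, minZ;
    float maxX, maxY, maxZ;
};

// Hypothetical custom primitive: a sphere given by center and radius.
struct Sphere
{
    float cx, cy, cz;
    float radius;
};

// Computes one axis-aligned bounding box per sphere, in input order.
std::vector<Aabb> compute_sphere_aabbs(const std::vector<Sphere>& spheres)
{
    std::vector<Aabb> aabbs;
    aabbs.reserve(spheres.size());
    for (const Sphere& s : spheres)
    {
        assert(s.radius >= 0.0f);
        aabbs.push_back(Aabb{ s.cx - s.radius, s.cy - s.radius, s.cz - s.radius,
                              s.cx + s.radius, s.cy + s.radius, s.cz + s.radius });
    }
    return aabbs;
}
```

The resulting array would then be copied to the device and referenced by the custom primitive build input; see the OptiX 7 programming guide for the exact fields.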

OptiX 6: https://raytracing-docs.nvidia.com/optix6/guide_6_5/index.html#host#triangles

OptiX 7: https://raytracing-docs.nvidia.com/optix7/guide/index.html#acceleration_structures#primitive-build-inputs

Please read the OptiX programming guides and these threads:

https://forums.developer.nvidia.com/t/enable-rtx-mode-in-optix-7-0/122620

https://forums.developer.nvidia.com/t/optix-6-5-wireframe-rendering/119619/10

OK, I have played around with OptiX geometry and materials and created my own simple scene. But the performance still strikes me as underwhelming for a hardware-accelerated API.

I ran a lot of GLSL path tracing demos, and scenes from as simple as Wolfenstein 3D to as complex as Minecraft RTX run in reasonable real time (>60 fps) on my 2060 Super. Why can’t this scene with only one area light run in real time, even after setting it to only one light sample per bounce? I am still basing this on the optixPathTracer demo.

Is it really the overhead of Optix?

Comparing ray tracing performance of completely different light transport algorithms is normally misleading. Different render algorithms use different amounts of rays. If you’re not comparing the same things, then you cannot expect reasonable outcomes.

In this case you’re comparing a full global illumination progressive path tracer with diffuse materials, which is basically the worst for ray convergence, against some specialized real-time ray tracers which handle shadows and reflections individually with custom temporal filtering to get rid of the noise and possibly work with deferred rendering off some rasterized G-buffer.

That means you’re comparing a simple final-frame rendering light transport algorithm from an SDK example against a highly optimized, special-case real-time implementation. The ray budgets of these approaches are vastly different.

Unless you set the variable sqrt_num_samples (or samples_per_launch in the OptiX 7 version of that example) to 1, or simply removed the whole loop over the number of paths inside the ray generation program, it is doing a lot more work per sub-frame than my path tracer examples.
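Tracing only one path per pixel per launch does not mean giving up image quality in a progressive renderer. A minimal sketch, assuming the usual scheme in which each launch contributes one sub-frame to a per-pixel running average (accumulate is a hypothetical helper, shown for a single color channel):

```cpp
#include <cassert>

// Hypothetical helper: folds the sample of sub-frame `frame_number`
// (1-based) into the running mean of all previous sub-frames.
float accumulate(float previous_mean, float new_sample, unsigned int frame_number)
{
    assert(frame_number >= 1);
    const float weight = 1.0f / static_cast<float>(frame_number);
    // Same as lerp(previous_mean, new_sample, weight).
    return previous_mean + weight * (new_sample - previous_mean);
}
```

Calling this once per sub-frame, with frame_number counting up from 1, keeps the displayed value equal to the mean of all samples so far, so the image refines over time while each individual launch stays cheap.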

OptiX 6 actually can have some internal overhead if you’re not careful. Some of that will become visible when enabling the usage report feature. Because of that I would really recommend using OptiX 7 for new developments. It’s an explicit API without some of the pitfalls in earlier OptiX versions.