Originally published at: https://developer.nvidia.com/blog/preparing-models-for-object-detection-with-real-and-synthetic-data-and-tlt/

The long, cumbersome slog of data procurement has been slowing down innovation in AI, especially in computer vision, which relies on labeled images and video for training. But now you can jumpstart your machine learning process by quickly generating synthetic data using AI.Reverie. With the AI.Reverie synthetic data platform, you can create the exact training…

Author here. I'm glad I had the opportunity to trial NVIDIA's latest TLT package to see what it could do in combination with the synthetic data we create at AI.Reverie. I'm still amazed at how easy it was to improve our model sizes and inference speeds by hundreds of times! TLT is definitely a handy tool to have at your disposal if you are deploying neural nets to production or to the edge.

If you have any questions or comments, please let us know.

When I run this in a Jupyter notebook:

download('s3://rareplanes-public/real/tarballs/metadata_annotations.tar.gz', 'metadata_annotations', 9)

I get this error:

Starting download

fatal error: Unable to locate credentials

Extracting…

pv: metadata_annotations.tar.gz: No such file or directory

gzip: stdin: unexpected end of file

tar: Child returned status 1

tar: Error is not recoverable: exiting now

Removing compressed file.

rm: cannot remove ‘data/real/tarballs/metadata_annotations.tar.gz’: No such file or directory
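In that log the helper keeps going after the download fails, so extraction and cleanup run against an archive that was never written, which buries the real error (the missing credentials). A minimal fail-fast sketch, assuming the notebook's `download` shells out to the AWS CLI (`aws s3 cp`); `download_and_extract` and its `run` parameter are hypothetical, and the original helper's third argument (`9`) is not modeled.

```python
import subprocess
import tarfile
from pathlib import Path


def download_and_extract(s3_uri, dest_dir, run=subprocess.run):
    """Hypothetical helper: copy a .tar.gz from S3 with the AWS CLI, then extract it.

    Stops at the first failure instead of trying to extract and delete an
    archive that was never written. `run` can be swapped out so the logic
    can be exercised without the AWS CLI installed.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    archive = dest / s3_uri.rsplit("/", 1)[-1]

    print("Starting download")
    result = run(["aws", "s3", "cp", s3_uri, str(archive)])
    if result.returncode != 0 or not archive.is_file():
        # e.g. "Unable to locate credentials": there is nothing to extract.
        raise RuntimeError(f"Download of {s3_uri} failed (exit code {result.returncode})")

    print("Extracting...")
    with tarfile.open(archive, "r:gz") as tar:
        tar.extractall(dest)

    print("Removing compressed file.")
    archive.unlink()
    return dest
```

With this shape, a credentials problem surfaces as a single `RuntimeError` instead of the cascade of `pv`, `gzip`, `tar`, and `rm` errors.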

I had to set up AWS credentials before I could download the images.
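"Unable to locate credentials" is the AWS CLI reporting that it found no keys. A quick pre-flight check, as a sketch: it looks only at the two most common sources (the `AWS_ACCESS_KEY_ID`/`AWS_SECRET_ACCESS_KEY` environment variables and the shared credentials file, `~/.aws/credentials` unless `AWS_SHARED_CREDENTIALS_FILE` points elsewhere) and ignores other providers such as SSO or instance roles, so a `False` is a hint rather than proof. `has_aws_credentials` is a hypothetical name.

```python
import configparser
import os
from pathlib import Path


def has_aws_credentials(environ=None, credentials_file=None, profile=None):
    """Return True if static AWS keys are visible via env vars or the shared file.

    Only checks AWS_ACCESS_KEY_ID/AWS_SECRET_ACCESS_KEY and the shared
    credentials file; other credential providers are ignored in this sketch.
    """
    env = os.environ if environ is None else environ
    if env.get("AWS_ACCESS_KEY_ID") and env.get("AWS_SECRET_ACCESS_KEY"):
        return True

    path = Path(credentials_file
                or env.get("AWS_SHARED_CREDENTIALS_FILE")
                or Path.home() / ".aws" / "credentials")
    profile = profile or env.get("AWS_PROFILE", "default")

    parser = configparser.ConfigParser()
    if not parser.read(path):
        return False  # file missing or unreadable
    # has_option() is False when the profile section itself is absent.
    return (parser.has_option(profile, "aws_access_key_id")
            and parser.has_option(profile, "aws_secret_access_key"))
```

If it returns `False`, running `aws configure` is the usual way to create that credentials file.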

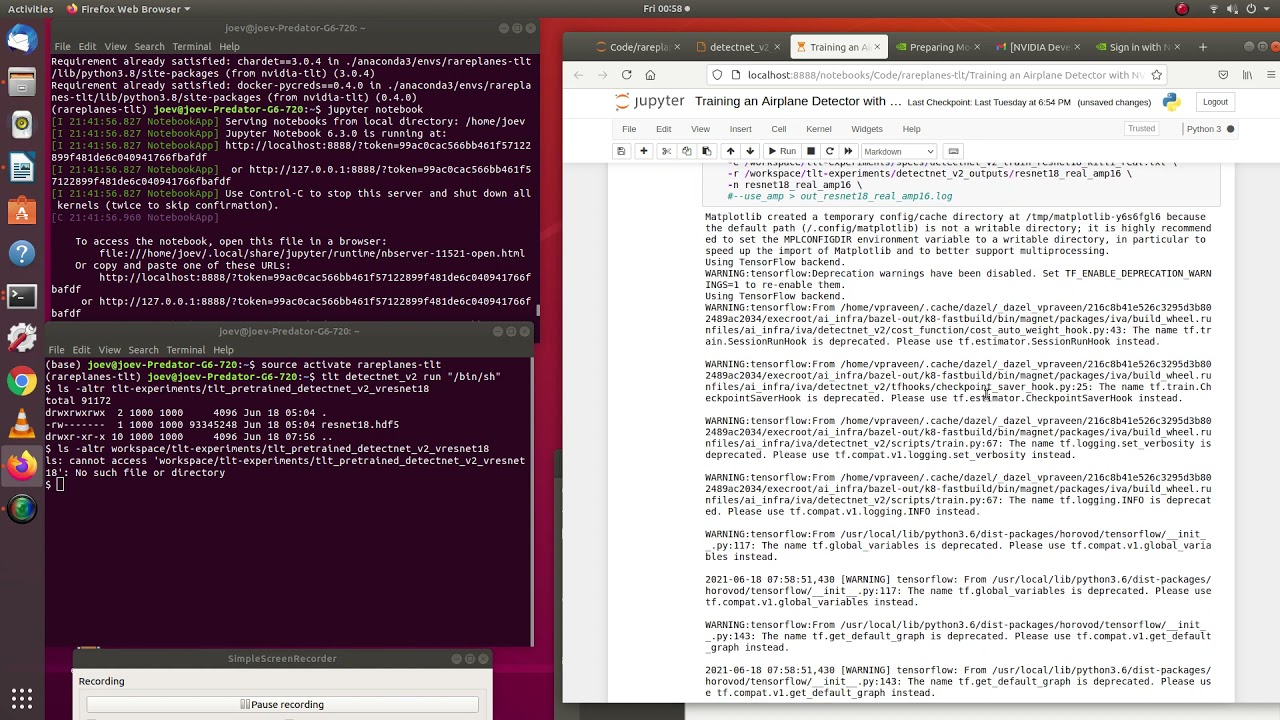

I'm running the TLT 3 Jupyter notebook, and I've gotten hung up because it can't find the path to "tlt_pretrained_detectnet_v2_vresnet18/resnet18.hdf5".

Here is a video that explains it better.

@adventuredaisy This is interesting. Could you try running:

tlt detectnet_v2 run "/bin/sh"

This lets you open a shell inside the Docker container. From there you can navigate the file system to check that everything is mounted properly, e.g.:

ls -altr tlt-experiments/tlt_pretrained_detectnet_v2_vresnet18

Let me know whether the file is accessible from inside the Docker container; then we can figure out which direction to take the debugging.
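When a file exists on the host but not inside the container, the usual culprit is the mount mapping. As far as I know, the TLT 3.0 launcher reads its bind mounts from `~/.tlt_mounts.json` as a `Mounts` list of `source`/`destination` pairs; assuming that layout, the sketch below translates a container path back to the host path it should come from, so you can check the host side directly. `load_mounts` and `container_to_host` are hypothetical helpers, not part of TLT.

```python
import json
from pathlib import PurePosixPath


def load_mounts(path):
    """Read the "Mounts" list from a launcher config such as ~/.tlt_mounts.json."""
    with open(path) as fh:
        return json.load(fh)["Mounts"]


def container_to_host(container_path, mounts):
    """Map a path inside the TLT container back to the host path it is mounted from.

    Assumes each mount is {"source": <host dir>, "destination": <container dir>}.
    The deepest matching destination wins. Returns None when the path is not
    under any mount, i.e. it would have to exist in the container image itself.
    """
    target = PurePosixPath(container_path)
    best = None
    for mount in mounts:
        dest = PurePosixPath(mount["destination"])
        if target == dest or dest in target.parents:
            if best is None or len(dest.parts) > len(PurePosixPath(best["destination"]).parts):
                best = mount
    if best is None:
        return None
    relative = target.relative_to(best["destination"])
    return str(PurePosixPath(best["source"]) / relative)
```

For example, pass `/workspace/tlt-experiments/tlt_pretrained_detectnet_v2_vresnet18/resnet18.hdf5` together with `load_mounts(os.path.expanduser("~/.tlt_mounts.json"))`, then run `ls -altr` on the host path it returns. A `None` result means no mount covers that directory at all.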

OK, it looks like this is working now:

!tlt detectnet_v2 train --key tlt --gpu_index 0 \
-e /workspace/tlt-experiments/specs/detectnet_v2_train_resnet18_kitti_real.txt \
-r /workspace/tlt-experiments/detectnet_v2_outputs/resnet18_real_amp16 \
-n resnet18_real_amp16 \
#--use_amp > out_resnet18_real_amp16.log

Either you guys fixed it, or I just didn't fat-finger anything on my end this time around.

I'm unable to use the "#--use_amp > out_resnet18_real_amp16.log" option, though.
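In that command the `#` starts a shell comment, so `--use_amp` and the `> out_resnet18_real_amp16.log` redirect are switched off together. A sketch that builds the same command as an argument list, so AMP and logging can be toggled independently; `build_train_cmd` is a hypothetical helper and uses only the flags from the command above.

```python
import shlex


def build_train_cmd(spec, results_dir, name, key="tlt", gpu_index=0, use_amp=False):
    """Assemble the `tlt detectnet_v2 train` call from the notebook as an argv list.

    --use_amp is appended only when requested, instead of being toggled with `#`.
    """
    cmd = [
        "tlt", "detectnet_v2", "train",
        "--key", key,
        "--gpu_index", str(gpu_index),
        "-e", spec,
        "-r", results_dir,
        "-n", name,
    ]
    if use_amp:
        cmd.append("--use_amp")
    return cmd


cmd = build_train_cmd(
    "/workspace/tlt-experiments/specs/detectnet_v2_train_resnet18_kitti_real.txt",
    "/workspace/tlt-experiments/detectnet_v2_outputs/resnet18_real_amp16",
    "resnet18_real_amp16",
    use_amp=True,
)
# Print a one-line shell form with the log redirect kept separate from the flag.
print(shlex.join(cmd) + " > out_resnet18_real_amp16.log")
```

The printed line can be pasted into a notebook cell after `!`; alternatively, `subprocess.run(cmd, stdout=log_file, stderr=subprocess.STDOUT)` writes the same log without going through the shell.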

I ran through the Jupyter notebook. It's quite on par with the TLT 3 Jupyter notebooks from NVIDIA. Nice work.

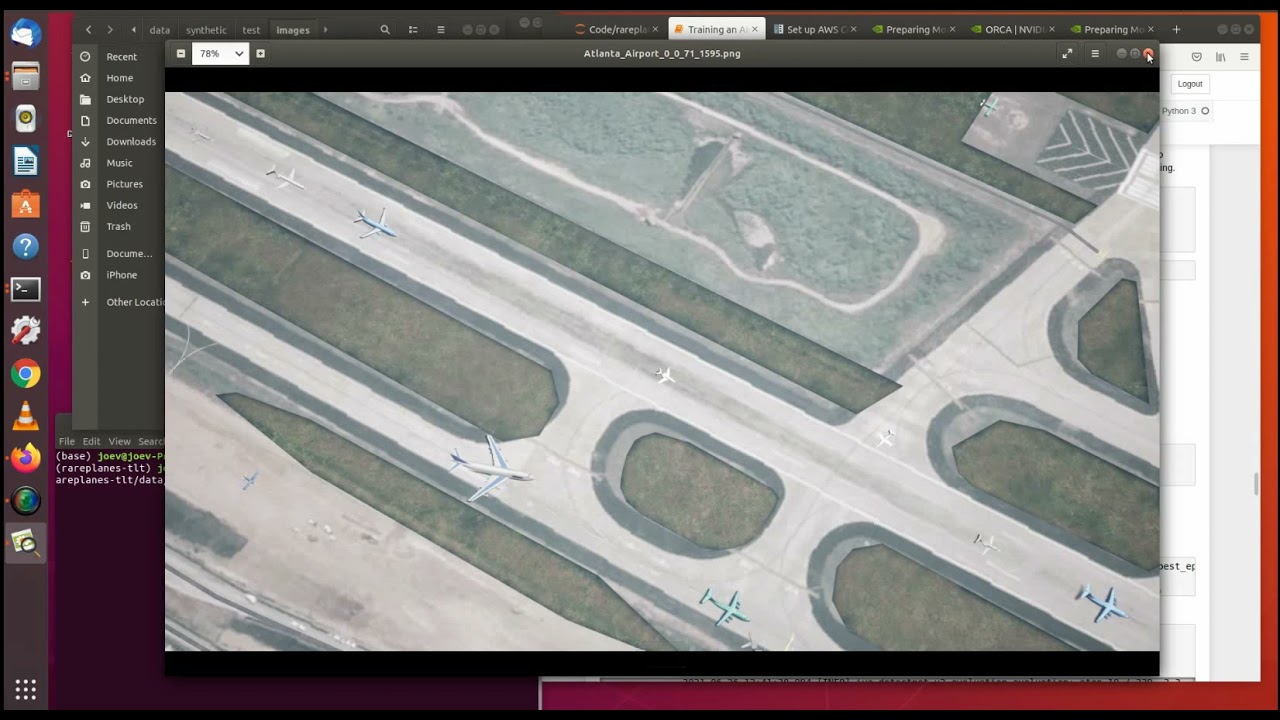

The only thing I came across that may be an issue is when I run this:

convert_split('kitti_synthetic_train')

It seems like it can't find an image:

Matplotlib created a temporary config/cache directory at /tmp/matplotlib-afk40id4 because the default path (/.config/matplotlib) is not a writable directory; it is highly recommended to set the MPLCONFIGDIR environment variable to a writable directory, in particular to speed up the import of Matplotlib and to better support multiprocessing.

Using TensorFlow backend.

WARNING:tensorflow:Deprecation warnings have been disabled. Set TF_ENABLE_DEPRECATION_WARNINGS=1 to re-enable them.

Using TensorFlow backend.

2021-06-21 12:28:10,764 - iva.detectnet_v2.dataio.build_converter - INFO - Instantiating a kitti converter

2021-06-21 12:28:16,463 - iva.detectnet_v2.dataio.kitti_converter_lib - INFO - Num images in

Train: 44550 Val: 450

2021-06-21 12:28:16,463 - iva.detectnet_v2.dataio.kitti_converter_lib - INFO - Validation data in partition 0. Hence, while choosing the validationset during training choose validation_fold 0.

2021-06-21 12:28:16,500 - iva.detectnet_v2.dataio.dataset_converter_lib - INFO - Writing partition 0, shard 0

WARNING:tensorflow:From /home/vpraveen/.cache/dazel/_dazel_vpraveen/216c8b41e526c3295d3b802489ac2034/execroot/ai_infra/bazel-out/k8-fastbuild/bin/magnet/packages/iva/build_wheel.runfiles/ai_infra/iva/detectnet_v2/dataio/dataset_converter_lib.py:142: The name tf.python_io.TFRecordWriter is deprecated. Please use tf.io.TFRecordWriter instead.

2021-06-21 12:28:16,500 - tensorflow - WARNING - From /home/vpraveen/.cache/dazel/_dazel_vpraveen/216c8b41e526c3295d3b802489ac2034/execroot/ai_infra/bazel-out/k8-fastbuild/bin/magnet/packages/iva/build_wheel.runfiles/ai_infra/iva/detectnet_v2/dataio/dataset_converter_lib.py:142: The name tf.python_io.TFRecordWriter is deprecated. Please use tf.io.TFRecordWriter instead.

/usr/local/lib/python3.6/dist-packages/iva/detectnet_v2/dataio/kitti_converter_lib.py:273: VisibleDeprecationWarning: Reading unicode strings without specifying the encoding argument is deprecated. Set the encoding, use None for the system default.

2021-06-21 12:28:19,905 - iva.detectnet_v2.dataio.dataset_converter_lib - INFO - Writing partition 0, shard 1

2021-06-21 12:28:22,604 - iva.detectnet_v2.dataio.dataset_converter_lib - INFO - Writing partition 0, shard 2

2021-06-21 12:28:25,107 - iva.detectnet_v2.dataio.dataset_converter_lib - INFO - Writing partition 0, shard 3

2021-06-21 12:28:27,343 - iva.detectnet_v2.dataio.dataset_converter_lib - INFO - Writing partition 0, shard 4

Traceback (most recent call last):

File "/home/vpraveen/.cache/dazel/_dazel_vpraveen/216c8b41e526c3295d3b802489ac2034/execroot/ai_infra/bazel-out/k8-fastbuild/bin/magnet/packages/iva/build_wheel.runfiles/ai_infra/iva/detectnet_v2/scripts/dataset_convert.py", line 90, in

File "/home/vpraveen/.cache/dazel/_dazel_vpraveen/216c8b41e526c3295d3b802489ac2034/execroot/ai_infra/bazel-out/k8-fastbuild/bin/magnet/packages/iva/build_wheel.runfiles/ai_infra/iva/detectnet_v2/scripts/dataset_convert.py", line 86, in main

File "/home/vpraveen/.cache/dazel/_dazel_vpraveen/216c8b41e526c3295d3b802489ac2034/execroot/ai_infra/bazel-out/k8-fastbuild/bin/magnet/packages/iva/build_wheel.runfiles/ai_infra/iva/detectnet_v2/dataio/dataset_converter_lib.py", line 71, in convert

File "/home/vpraveen/.cache/dazel/_dazel_vpraveen/216c8b41e526c3295d3b802489ac2034/execroot/ai_infra/bazel-out/k8-fastbuild/bin/magnet/packages/iva/build_wheel.runfiles/ai_infra/iva/detectnet_v2/dataio/dataset_converter_lib.py", line 105, in _write_partitions

File "/home/vpraveen/.cache/dazel/_dazel_vpraveen/216c8b41e526c3295d3b802489ac2034/execroot/ai_infra/bazel-out/k8-fastbuild/bin/magnet/packages/iva/build_wheel.runfiles/ai_infra/iva/detectnet_v2/dataio/dataset_converter_lib.py", line 146, in _write_shard

File "/home/vpraveen/.cache/dazel/_dazel_vpraveen/216c8b41e526c3295d3b802489ac2034/execroot/ai_infra/bazel-out/k8-fastbuild/bin/magnet/packages/iva/build_wheel.runfiles/ai_infra/iva/detectnet_v2/dataio/kitti_converter_lib.py", line 173, in _create_example_proto

File "/home/vpraveen/.cache/dazel/_dazel_vpraveen/216c8b41e526c3295d3b802489ac2034/execroot/ai_infra/bazel-out/k8-fastbuild/bin/magnet/packages/iva/build_wheel.runfiles/ai_infra/iva/detectnet_v2/dataio/kitti_converter_lib.py", line 212, in _example_proto

File "/home/vpraveen/.cache/dazel/_dazel_vpraveen/216c8b41e526c3295d3b802489ac2034/execroot/ai_infra/bazel-out/k8-fastbuild/bin/magnet/packages/iva/build_wheel.runfiles/ai_infra/iva/detectnet_v2/dataio/kitti_converter_lib.py", line 206, in _get_image_size

File "/usr/local/lib/python3.6/dist-packages/PIL/Image.py", line 2766, in open

fp = builtins.open(filename, "rb")

FileNotFoundError: [Errno 2] No such file or directory: '/workspace/tlt-experiments/data/kitti/synthetic_train/images/Miami_Airport_0_0_200_25266.png'

Traceback (most recent call last):

File "/usr/local/bin/detectnet_v2", line 8, in

sys.exit(main())

File "/home/vpraveen/.cache/dazel/_dazel_vpraveen/216c8b41e526c3295d3b802489ac2034/execroot/ai_infra/bazel-out/k8-fastbuild/bin/magnet/packages/iva/build_wheel.runfiles/ai_infra/iva/detectnet_v2/entrypoint/detectnet_v2.py", line 12, in main

File "/home/vpraveen/.cache/dazel/_dazel_vpraveen/216c8b41e526c3295d3b802489ac2034/execroot/ai_infra/bazel-out/k8-fastbuild/bin/magnet/packages/iva/build_wheel.runfiles/ai_infra/iva/common/entrypoint/entrypoint.py", line 296, in launch_job

AssertionError: Process run failed.

2021-06-21 05:28:30,000 [INFO] tlt.components.docker_handler.docker_handler: Stopping container.

--use_amp only works on GPUs that support mixed-precision training. Can I ask which GPU you are running this on?
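One way to answer that locally, as a minimal sketch: it assumes "supports mixed precision" means a GPU with Tensor Cores, which NVIDIA introduced with Volta (compute capability 7.0), and that your driver's `nvidia-smi` accepts the `compute_cap` query field (older drivers do not). The 7.0 cutoff is an approximation, since GTX 16-series Turing cards report 7.5 without Tensor Cores. All helper names are hypothetical.

```python
import subprocess


def query_compute_caps():
    """List each GPU's compute capability via nvidia-smi (needs a recent driver)."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=compute_cap", "--format=csv,noheader"],
        capture_output=True, text=True, check=True,
    ).stdout
    return [line.strip() for line in out.splitlines() if line.strip()]


def parse_compute_cap(text):
    """Turn a string like "7.5" into a comparable (major, minor) tuple."""
    major, minor = text.strip().split(".")
    return int(major), int(minor)


def gpu_supports_amp(compute_cap, min_cap=(7, 0)):
    """Assumed rule: treat Volta-or-newer GPUs (compute capability >= 7.0) as AMP-capable."""
    if isinstance(compute_cap, str):
        compute_cap = parse_compute_cap(compute_cap)
    return tuple(compute_cap) >= min_cap
```

For GPU 0, `gpu_supports_amp(query_compute_caps()[0])` suggests whether adding `--use_amp` is worthwhile; a Pascal card such as one reporting 6.1 comes back `False`.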

As for the image file, can you confirm that it was downloaded to your local disk? For example, I saved it here: ls -altr ~/Data/rareplanes-public/kitti/synthetic_train/images/Miami_Airport_0_0_200_25266.png

If it's not there, you might want to try re-downloading it from S3. If it is, we need to verify that the file is accessible inside the Docker container.

Open a shell in the Docker container as before: tlt detectnet_v2 run "/bin/sh"

Then check whether the file is available inside the container: ls -altr tlt-experiments/data/kitti/synthetic_train/images/Miami_Airport_0_0_200_25266.png

If it's not there, try to cd into the folders under tlt-experiments and see whether any of the images are making it through at all.
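To count what actually made it through, here is a sketch that pairs KITTI label files with images by file stem and lists labels whose image is missing, which is the situation that makes the converter stop with `FileNotFoundError`. It assumes `images/` and `labels/` subfolders (the traceback only confirms `images/`), `.txt` labels, and `.png`/`.jpg` images; `find_missing_images` is hypothetical. Running it on the host path and again on the container path shows where files drop out.

```python
from pathlib import Path

IMAGE_EXTS = {".png", ".jpg", ".jpeg"}


def find_missing_images(split_dir, image_dir="images", label_dir="labels"):
    """Return (num_images, num_labels, sorted stems of labels without an image).

    Assumes a KITTI-style split: <split_dir>/images/*.png and
    <split_dir>/labels/*.txt with matching file stems. A missing folder
    simply counts as empty.
    """
    root = Path(split_dir)
    images = {p.stem for p in (root / image_dir).glob("*") if p.suffix.lower() in IMAGE_EXTS}
    labels = {p.stem for p in (root / label_dir).glob("*.txt")}
    return len(images), len(labels), sorted(labels - images)


if __name__ == "__main__":
    n_img, n_lbl, missing = find_missing_images("tlt-experiments/data/kitti/synthetic_train")
    print(f"{n_img} images, {n_lbl} labels, {len(missing)} labels without an image")
    print(missing[:10])
```

If the host shows zero missing images but the container does not, the problem is the mount rather than the download.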