
**• Hardware Platform (Jetson / GPU):** Jetson Orin Nano

**• DeepStream Version:** 6.3

Good day, I am trying to process multiple streams on the Jetson Orin and send them over an IP network so that another computer can access and view the processed video.

I can process one video on my Jetson Orin and send it over the network, but I have not yet managed to do the same for multiple videos. Below is the section of code that creates the server:

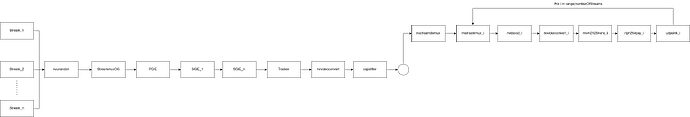

    server = GstRtspServer.RTSPServer.new()
    server.props.service = "%d" % 8554
    server.attach(None)

    nvstreamdemux = Gst.ElementFactory.make('nvstreamdemux', 'demux')
    pipeline.add(nvstreamdemux)
    queue.link(nvstreamdemux)
    queue = make_queue()
    pipeline.add(queue)

    ip_address = get_ip_address()

    for streamID in range(number_of_streams):
        srcPadName = "src_%u" % streamID
        sinkPadName = "sink_%u" % streamID
        demuxsrcpad = nvstreamdemux.get_request_pad(srcPadName)

        streammux = Gst.ElementFactory.make('nvstreammux', f'streammux_{streamID}')
        streammux.set_property('width', 1920)
        streammux.set_property('height', 1080)
        streammux.set_property('batch-size', 1)
        streammux.set_property('batched-push-timeout', 40000)
        if is_live:
            streammux.set_property('live-source', 1)
        else:
            streammux.set_property('live-source', 0)
        pipeline.add(streammux)
        streamMuxSinkPad = streammux.get_request_pad(sinkPadName)
        demuxsrcpad.link(streamMuxSinkPad)
        streammux.link(queue)

        nvosd = Gst.ElementFactory.make("nvdsosd", f"onscreendisplay_{streamID}")
        nvosd.set_property('process-mode', OSD_PROCESS_MODE)
        nvosd.set_property('display-text', OSD_DISPLAY_TEXT)
        pipeline.add(nvosd)
        queue.link(nvosd)
        queue = make_queue()
        pipeline.add(queue)
        nvosd.link(queue)

        nvvidconv_postosd = Gst.ElementFactory.make("nvvideoconvert", f"convertor_postosd_{streamID}")
        pipeline.add(nvvidconv_postosd)
        queue.link(nvvidconv_postosd)
        queue = make_queue()
        pipeline.add(queue)
        nvvidconv_postosd.link(queue)

        if is_aarch64():
            caps1 = Gst.Caps.from_string("video/x-raw, format=I420")
            filter1 = Gst.ElementFactory.make("capsfilter", f"capsfilter_{streamID}")
            filter1.set_property("caps", caps1)
            pipeline.add(filter1)
            queue.link(filter1)
            queue = make_queue()
            pipeline.add(queue)
            filter1.link(queue)

        if is_aarch64():
            encoder = Gst.ElementFactory.make("x264enc", f'encoder_{streamID}')
            encoder.set_property('speed-preset', 'medium')
            encoder.set_property('tune', 'zerolatency')
            encoder.set_property('pass', 'pass1')
            # encoder.set_property('bitrate', 999999)
            encoder.set_property('bitrate', 999999)
        else:
            encoder = Gst.ElementFactory.make("nvv4l2h264enc", f'encoder_{streamID}')
            encoder.set_property('bitrate', 2000000)
        pipeline.add(encoder)
        queue.link(encoder)
        queue = make_queue()
        pipeline.add(queue)
        encoder.link(queue)

        rtppay = Gst.ElementFactory.make("rtph264pay", f"rtppay_{streamID}")
        pipeline.add(rtppay)
        queue.link(rtppay)
        queue = make_queue()
        pipeline.add(queue)
        rtppay.link(queue)

        updsink_port_num = 5400
        codec = 'H264'
        sink = Gst.ElementFactory.make("udpsink", f"udpsink_{streamID}")
        sink.set_property('host', '224.224.255.255')  # Subnet mask
        sink.set_property('port', updsink_port_num)
        sink.set_property('async', False)
        sink.set_property('sync', 1)
        sink.set_property("qos", 0)
        pipeline.add(sink)
        queue.link(sink)

        factory = GstRtspServer.RTSPMediaFactory.new()
        factory.set_launch(
            "( udpsrc name=pay0 port=%d buffer-size=524288 caps=\"application/x-rtp, media=video, clock-rate=90000, encoding-name=(string)%s, payload=96 \" )"
            % (updsink_port_num, codec))
        factory.set_shared(True)
        server.get_mount_points().add_factory(f"/stream-{streamID}", factory)
        print(f"Stream {streamID} is reachable with this URL: rtsp://{ip_address}:{updsink_port_num}/stream-{streamID}")

    print('Then streaming service should be set up within the next 5 seconds')

When I process only one stream, I can access it through this URL: `rtsp://10.0.0.34:5400/stream-0`. But when I process two streams, I cannot access the streams…

The streams are still being processed, but I cannot access them, so I suspect the problem is in how the server is created…
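One thing worth checking, offered as a sketch and not a confirmed fix: in the code above every `udpsink` is given the same `updsink_port_num` (5400), and the printed URL uses that UDP port even though the RTSP server's `service` is set to 8554. Assuming each stream should get its own UDP port and that clients connect on the RTSP service port, the per-stream values could be derived as below. The helper name `stream_endpoints` and the `base_udp_port + stream_id` numbering are hypothetical, chosen only for illustration.

```python
def stream_endpoints(ip_address, number_of_streams,
                     rtsp_port=8554, base_udp_port=5400, codec="H264"):
    """Return per-stream UDP port, RTSP launch string and client URL.

    Hypothetical helper: assumes one UDP port per stream
    (base_udp_port + stream_id) and a single RTSP server on rtsp_port.
    """
    endpoints = []
    for stream_id in range(number_of_streams):
        udp_port = base_udp_port + stream_id
        # Same launch string as in the post, but with a per-stream port.
        launch = (
            '( udpsrc name=pay0 port=%d buffer-size=524288 '
            'caps="application/x-rtp, media=video, clock-rate=90000, '
            'encoding-name=(string)%s, payload=96 " )' % (udp_port, codec)
        )
        url = f"rtsp://{ip_address}:{rtsp_port}/stream-{stream_id}"
        endpoints.append({"udp_port": udp_port, "launch": launch, "url": url})
    return endpoints


for ep in stream_endpoints("10.0.0.34", 2):
    print(ep["udp_port"], ep["url"])
```

Inside the loop, `ep["udp_port"]` would be passed to `sink.set_property('port', ...)` and `ep["launch"]` to `factory.set_launch(...)`, so that each mount point reads from the port its own `udpsink` writes to.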

Here is the code for the `get_ip_address` function:

    import socket

    def get_ip_address():
        # Create a socket object
        s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
        # Connect to an external server (doesn't actually send data)
        s.connect(('8.8.8.8', 80))
        # Get the local IP address
        ip_address = s.getsockname()[0]
        return ip_address
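
As a side note, the function above never closes its socket and raises if the device has no route to `8.8.8.8`. A slightly more defensive variant might look like the sketch below; the name `get_local_ip` and the loopback fallback are assumptions for illustration, not part of the original code.

```python
import socket


def get_local_ip(probe_host="8.8.8.8", probe_port=80, fallback="127.0.0.1"):
    """Return the local IPv4 address used for outbound traffic.

    connect() on a UDP socket only selects a route; no packet is sent.
    """
    try:
        # The context manager closes the socket even if connect() fails.
        with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as s:
            s.connect((probe_host, probe_port))
            return s.getsockname()[0]
    except OSError:
        # No usable route (e.g. the device is offline): fall back to loopback.
        return fallback
```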