I want to retrain using my own previously trained, unpruned .tlt models as the initial weights. Is that possible? Or can TLT only be used with the original NVIDIA models (.hdf5)?

Yes, it is possible. In fact, the retraining spec file already sets a .tlt file as the pre-trained model.

How can we use a .tlt model that we have already trained? When I update the pretrained_model_file in the spec with a .tlt model I had previously trained, the mean average precision goes back to 0 as if it’s not loading in those weights.

Can you attach your spec file?

FYI: the precision is also lost when I retrain the pruned model, even when the pruned model had a pruning ratio of 1.0.

Here is the command I’m using for training:

!tlt-train detectnet_v2 -e $SPECS_DIR/detectnet_v2_train_mobilenet_v2_kitti.txt \
-r $USER_EXPERIMENT_DIR/experiment_dir_unpruned \
-k $KEY \
-n mobilenet_v2_detector

This is the training spec file:

random_seed: 42

dataset_config {

data_sources {

tfrecords_path: "/workspace/tlt-experiments/tfrecords/kitti_trainval/*"

image_directory_path: "/workspace/tlt-experiments/data/training"

}

image_extension: "png"

target_class_mapping {

key: "truck"

value: "truck"

}

target_class_mapping {

key: "valve_open"

value: "valve_open"

}

target_class_mapping {

key: "valve_closed"

value: "valve_closed"

}

target_class_mapping {

key: "hose_connected"

value: "hose_connected"

}

target_class_mapping {

key: "hose_disconnected"

value: "hose_disconnected"

}

validation_fold: 0

}

augmentation_config {

preprocessing {

output_image_width: 1280

output_image_height: 720

min_bbox_width: 1.0

min_bbox_height: 1.0

output_image_channel: 3

}

spatial_augmentation {

hflip_probability: 0.0

zoom_min: 0.9

zoom_max: 1.1

translate_max_x: 0.0

translate_max_y: 0.0

}

color_augmentation {

hue_rotation_max: 10.0

saturation_shift_max: 0.2

contrast_scale_max: 0.1

contrast_center: 0.5

}

}

postprocessing_config {

target_class_config {

key: "truck"

value {

clustering_config {

coverage_threshold: 0.005

dbscan_eps: 0.15

dbscan_min_samples: 0.05

minimum_bounding_box_height: 20

}

}

}

target_class_config {

key: "valve_open"

value {

clustering_config {

coverage_threshold: 0.005

dbscan_eps: 0.15

dbscan_min_samples: 0.05

minimum_bounding_box_height: 20

}

}

}

target_class_config {

key: "valve_closed"

value {

clustering_config {

coverage_threshold: 0.005

dbscan_eps: 0.15

dbscan_min_samples: 0.05

minimum_bounding_box_height: 20

}

}

}

target_class_config {

key: "hose_connected"

value {

clustering_config {

coverage_threshold: 0.005

dbscan_eps: 0.15

dbscan_min_samples: 0.05

minimum_bounding_box_height: 20

}

}

}

target_class_config {

key: "hose_disconnected"

value {

clustering_config {

coverage_threshold: 0.005

dbscan_eps: 0.15

dbscan_min_samples: 0.05

minimum_bounding_box_height: 20

}

}

}

}

model_config {

pretrained_model_file: "/workspace/tlt-experiments/experiment_dir_unpruned/weights/mobilenet_v2_detector.tlt"

load_graph: true

num_layers: 18

use_batch_norm: true

activation {

activation_type: "relu"

}

objective_set {

bbox {

scale: 35.0

offset: 0.5

}

cov {

}

}

training_precision {

backend_floatx: FLOAT32

}

arch: "mobilenet_v2"

}

evaluation_config {

validation_period_during_training: 5

first_validation_epoch: 5

minimum_detection_ground_truth_overlap {

key: "truck"

value: 0.5

}

minimum_detection_ground_truth_overlap {

key: "valve_open"

value: 0.5

}

minimum_detection_ground_truth_overlap {

key: "valve_closed"

value: 0.5

}

minimum_detection_ground_truth_overlap {

key: "hose_connected"

value: 0.5

}

minimum_detection_ground_truth_overlap {

key: "hose_disconnected"

value: 0.5

}

evaluation_box_config {

key: "truck"

value {

minimum_height: 200

maximum_height: 9999

minimum_width: 100

maximum_width: 9999

}

}

evaluation_box_config {

key: "valve_open"

value {

minimum_height: 20

maximum_height: 9999

minimum_width: 10

maximum_width: 9999

}

}

evaluation_box_config {

key: "valve_closed"

value {

minimum_height: 20

maximum_height: 9999

minimum_width: 10

maximum_width: 9999

}

}

evaluation_box_config {

key: "hose_connected"

value {

minimum_height: 20

maximum_height: 9999

minimum_width: 10

maximum_width: 9999

}

}

evaluation_box_config {

key: "hose_disconnected"

value {

minimum_height: 20

maximum_height: 9999

minimum_width: 10

maximum_width: 9999

}

}

average_precision_mode: INTEGRATE

}

cost_function_config {

target_classes {

name: "truck"

class_weight: 1.0

coverage_foreground_weight: 0.05

objectives {

name: "cov"

initial_weight: 1.0

weight_target: 1.0

}

objectives {

name: "bbox"

initial_weight: 1.0

weight_target: 1.0

}

}

target_classes {

name: "valve_open"

class_weight: 1.0

coverage_foreground_weight: 0.05

objectives {

name: "cov"

initial_weight: 1.0

weight_target: 1.0

}

objectives {

name: "bbox"

initial_weight: 1.0

weight_target: 1.0

}

}

target_classes {

name: "valve_closed"

class_weight: 1.0

coverage_foreground_weight: 0.05

objectives {

name: "cov"

initial_weight: 1.0

weight_target: 1.0

}

objectives {

name: "bbox"

initial_weight: 1.0

weight_target: 1.0

}

}

target_classes {

name: "hose_connected"

class_weight: 1.0

coverage_foreground_weight: 0.05

objectives {

name: "cov"

initial_weight: 1.0

weight_target: 1.0

}

objectives {

name: "bbox"

initial_weight: 1.0

weight_target: 1.0

}

}

target_classes {

name: "hose_disconnected"

class_weight: 1.0

coverage_foreground_weight: 0.05

objectives {

name: "cov"

initial_weight: 1.0

weight_target: 1.0

}

objectives {

name: "bbox"

initial_weight: 1.0

weight_target: 1.0

}

}

enable_autoweighting: true

max_objective_weight: 0.9999

min_objective_weight: 0.0001

}

training_config {

batch_size_per_gpu: 4

num_epochs: 500

learning_rate {

soft_start_annealing_schedule {

min_learning_rate: 5e-06

max_learning_rate: 5e-04

soft_start: 0.1

annealing: 0.7

}

}

regularizer {

type: L1

weight: 3.00000002618e-09

}

optimizer {

adam {

epsilon: 9.99999993923e-09

beta1: 0.899999976158

beta2: 0.999000012875

}

}

cost_scaling {

initial_exponent: 20.0

increment: 0.005

decrement: 1.0

}

checkpoint_interval: 5

}

bbox_rasterizer_config {

target_class_config {

key: "truck"

value {

cov_center_x: 0.5

cov_center_y: 0.5

cov_radius_x: 0.4

cov_radius_y: 0.4

bbox_min_radius: 1.0

}

}

target_class_config {

key: "valve_open"

value {

cov_center_x: 0.5

cov_center_y: 0.5

cov_radius_x: 0.4

cov_radius_y: 0.4

bbox_min_radius: 1.0

}

}

target_class_config {

key: "valve_closed"

value {

cov_center_x: 0.5

cov_center_y: 0.5

cov_radius_x: 0.4

cov_radius_y: 0.4

bbox_min_radius: 1.0

}

}

target_class_config {

key: "hose_connected"

value {

cov_center_x: 0.5

cov_center_y: 0.5

cov_radius_x: 0.4

cov_radius_y: 0.4

bbox_min_radius: 1.0

}

}

target_class_config {

key: "hose_disconnected"

value {

cov_center_x: 0.5

cov_center_y: 0.5

cov_radius_x: 0.4

cov_radius_y: 0.4

bbox_min_radius: 1.0

}

}

deadzone_radius: 0.5

}

Can you attach your training spec too? May I know more about your case?

This is what I assume; please correct me if I am wrong.

- You trained with the training spec, and the mAP was acceptable. (Please paste your result here.)

- You then retrained with the unpruned tlt model, using the retraining spec above, and the mAP is 0.

I attached the training spec in my previous post. Did you mean my retrain spec? They are actually the same, except that in my “Training Spec” I am using a previously trained, unpruned model as the pretrained_model_file and in the “Retrain spec” I am using the resulting pruned model as the pretrained_model_file.

Here are the situations I’ve tried:

- Train with the training spec, using the pretrained MobileNet V2 model from the NGC model registry (.hdf5 file type). The first 50 epochs all have 0% mAP, and eventually I was able to achieve 94% mAP after several hours of training.

- Prune the model.

- Retrain the pruned model. The first 50 epochs are all back at 0% mAP, and it takes several hours again to get back up to 94% mAP.

I’ve also tried:

- Train with the training spec, using my own previously trained model; this time it is a .tlt file. Again, the first 50 or so epochs are back to 0% mAP, even though I can confirm that this tlt model used as the pretrained_model_file has an mAP of around 94% when I evaluate it separately. I was eventually able to achieve around 94% mAP after several hours of training.

- Prune the model.

- Retrain the pruned model. The first 50 epochs are, again, all back at 0% mAP, and it takes several hours again to get back up to 94% mAP.

My theory is that the weights aren’t actually being initialized, but I cannot confirm this by looking at the logs during training.
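Separately from the question of whether the weights load, it may help to line up the "first 50 epochs" observation with the learning-rate and validation settings in the spec posted earlier. The following is only a rough sketch in Python, not TLT code: the constants are copied from that spec, but the shape of the schedule (a log-linear ramp from min to max, a plateau, then a log-linear decay) is an assumption about how soft_start_annealing_schedule behaves, and the function names are made up for illustration. It does not show what causes the 0% mAP.

```python
import math

# Values copied from training_config in the spec posted earlier.
NUM_EPOCHS = 500
MIN_LR = 5e-06
MAX_LR = 5e-04
SOFT_START = 0.1   # fraction of training at which the ramp-up ends
ANNEALING = 0.7    # fraction of training at which the decay begins

# Values copied from evaluation_config in the same spec.
FIRST_VALIDATION_EPOCH = 5
VALIDATION_PERIOD = 5


def soft_start_annealing_lr(progress, min_lr=MIN_LR, max_lr=MAX_LR,
                            soft_start=SOFT_START, annealing=ANNEALING):
    """Learning rate at a training progress in [0, 1].

    ASSUMPTION: the interpolation between min_lr and max_lr is linear in
    log space. This is a hypothetical stand-in, not the TLT implementation.
    """
    if progress < soft_start:
        t = progress / soft_start                 # ramp-up phase
    elif progress > annealing:
        t = (1.0 - progress) / (1.0 - annealing)  # decay phase
    else:
        t = 1.0                                   # plateau at max_lr
    log_min, log_max = math.log(min_lr), math.log(max_lr)
    return math.exp(log_min + t * (log_max - log_min))


def validation_epochs(up_to_epoch):
    """Epochs at which validation runs, per the evaluation_config values."""
    return list(range(FIRST_VALIDATION_EPOCH, up_to_epoch + 1,
                      VALIDATION_PERIOD))


if __name__ == "__main__":
    # Print the assumed learning rate at each validation checkpoint.
    for epoch in validation_epochs(60):
        lr = soft_start_annealing_lr(epoch / NUM_EPOCHS)
        print(f"epoch {epoch:3d}  lr ~ {lr:.2e}")
```

With num_epochs: 500 and soft_start: 0.1, the ramp-up ends at epoch 0.1 × 500 = 50, and with validation every 5 epochs there are 10 validation passes up to that point. Whether that coincidence with the 50 epochs of 0% mAP matters here is not something the sketch can confirm.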

Here is the training log, up to the first validation checkpoint, for the situation where I am trying to train an unpruned model using my previously trained model .tlt file that has a 94% mAP:

Using TensorFlow backend.

2020-03-20 18:15:14.574814: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA

2020-03-20 18:15:14.668492: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:998] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2020-03-20 18:15:14.668915: I tensorflow/compiler/xla/service/service.cc:150] XLA service 0x663eeb0 executing computations on platform CUDA. Devices:

2020-03-20 18:15:14.668937: I tensorflow/compiler/xla/service/service.cc:158] StreamExecutor device (0): GeForce GTX 1070 Ti, Compute Capability 6.1

2020-03-20 18:15:14.670438: I tensorflow/core/platform/profile_utils/cpu_utils.cc:94] CPU Frequency: 3600000000 Hz

2020-03-20 18:15:14.670735: I tensorflow/compiler/xla/service/service.cc:150] XLA service 0x66a9060 executing computations on platform Host. Devices:

2020-03-20 18:15:14.670753: I tensorflow/compiler/xla/service/service.cc:158] StreamExecutor device (0): <undefined>, <undefined>

2020-03-20 18:15:14.670908: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1433] Found device 0 with properties:

name: GeForce GTX 1070 Ti major: 6 minor: 1 memoryClockRate(GHz): 1.683

pciBusID: 0000:01:00.0

totalMemory: 7.92GiB freeMemory: 7.33GiB

2020-03-20 18:15:14.670926: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1512] Adding visible gpu devices: 0

2020-03-20 18:15:14.671460: I tensorflow/core/common_runtime/gpu/gpu_device.cc:984] Device interconnect StreamExecutor with strength 1 edge matrix:

2020-03-20 18:15:14.671473: I tensorflow/core/common_runtime/gpu/gpu_device.cc:990] 0

2020-03-20 18:15:14.671483: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1003] 0: N

2020-03-20 18:15:14.671613: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1115] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 7129 MB memory) -> physical GPU (device: 0, name: GeForce GTX 1070 Ti, pci bus id: 0000:01:00.0, compute capability: 6.1)

2020-03-20 18:15:14,672 [INFO] iva.detectnet_v2.scripts.train: Loading experiment spec at /workspace/examples/detectnet_v2/specs/detectnet_v2_train_mobilenet_v2_kitti.txt.

2020-03-20 18:15:14,672 [INFO] iva.detectnet_v2.spec_handler.spec_loader: Merging specification from /workspace/examples/detectnet_v2/specs/detectnet_v2_train_mobilenet_v2_kitti.txt

WARNING:tensorflow:From ./detectnet_v2/dataloader/utilities.py:114: tf_record_iterator (from tensorflow.python.lib.io.tf_record) is deprecated and will be removed in a future version.

Instructions for updating:

Use eager execution and:

`tf.data.TFRecordDataset(path)`

2020-03-20 18:15:14,678 [WARNING] tensorflow: From ./detectnet_v2/dataloader/utilities.py:114: tf_record_iterator (from tensorflow.python.lib.io.tf_record) is deprecated and will be removed in a future version.

Instructions for updating:

Use eager execution and:

`tf.data.TFRecordDataset(path)`

2020-03-20 18:15:14,712 [INFO] iva.detectnet_v2.scripts.train: Cannot iterate over exactly 394 samples with a batch size of 4; each epoch will therefore take one extra step.

WARNING:tensorflow:From /usr/local/lib/python2.7/dist-packages/tensorflow/python/framework/op_def_library.py:263: colocate_with (from tensorflow.python.framework.ops) is deprecated and will be removed in a future version.

Instructions for updating:

Colocations handled automatically by placer.

2020-03-20 18:15:14,716 [WARNING] tensorflow: From /usr/local/lib/python2.7/dist-packages/tensorflow/python/framework/op_def_library.py:263: colocate_with (from tensorflow.python.framework.ops) is deprecated and will be removed in a future version.

Instructions for updating:

Colocations handled automatically by placer.

WARNING:tensorflow:From /usr/local/lib/python2.7/dist-packages/horovod/tensorflow/__init__.py:91: div (from tensorflow.python.ops.math_ops) is deprecated and will be removed in a future version.

Instructions for updating:

Deprecated in favor of operator or tf.math.divide.

2020-03-20 18:15:14,725 [WARNING] tensorflow: From /usr/local/lib/python2.7/dist-packages/horovod/tensorflow/__init__.py:91: div (from tensorflow.python.ops.math_ops) is deprecated and will be removed in a future version.

Instructions for updating:

Deprecated in favor of operator or tf.math.divide.

/usr/local/lib/python2.7/dist-packages/keras/engine/saving.py:292: UserWarning: No training configuration found in save file: the model was *not* compiled. Compile it manually.

warnings.warn('No training configuration found in save file: '

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) (None, 3, 720, 1280) 0

__________________________________________________________________________________________________

conv1_pad (ZeroPadding2D) (None, 3, 722, 1282) 0 input_1[0][0]

__________________________________________________________________________________________________

conv1 (Conv2D) (None, 32, 360, 640) 864 conv1_pad[0][0]

__________________________________________________________________________________________________

bn_conv1 (BatchNormalization) (None, 32, 360, 640) 128 conv1[0][0]

__________________________________________________________________________________________________

re_lu_1 (ReLU) (None, 32, 360, 640) 0 bn_conv1[0][0]

__________________________________________________________________________________________________

expanded_conv_depthwise_pad (Ze (None, 32, 362, 642) 0 re_lu_1[0][0]

__________________________________________________________________________________________________

expanded_conv_depthwise (Depthw (None, 32, 360, 640) 288 expanded_conv_depthwise_pad[0][0]

__________________________________________________________________________________________________

expanded_conv_depthwise_bn (Bat (None, 32, 360, 640) 128 expanded_conv_depthwise[0][0]

__________________________________________________________________________________________________

re_lu_2 (ReLU) (None, 32, 360, 640) 0 expanded_conv_depthwise_bn[0][0]

__________________________________________________________________________________________________

expanded_conv_project (Conv2D) (None, 16, 360, 640) 512 re_lu_2[0][0]

__________________________________________________________________________________________________

expanded_conv_project_bn (Batch (None, 16, 360, 640) 64 expanded_conv_project[0][0]

__________________________________________________________________________________________________

block_1_expand (Conv2D) (None, 96, 360, 640) 1536 expanded_conv_project_bn[0][0]

__________________________________________________________________________________________________

block_1_expand_bn (BatchNormali (None, 96, 360, 640) 384 block_1_expand[0][0]

__________________________________________________________________________________________________

re_lu_3 (ReLU) (None, 96, 360, 640) 0 block_1_expand_bn[0][0]

__________________________________________________________________________________________________

block_1_depthwise_pad (ZeroPadd (None, 96, 362, 642) 0 re_lu_3[0][0]

__________________________________________________________________________________________________

block_1_depthwise (DepthwiseCon (None, 96, 180, 320) 864 block_1_depthwise_pad[0][0]

__________________________________________________________________________________________________

block_1_depthwise_bn (BatchNorm (None, 96, 180, 320) 384 block_1_depthwise[0][0]

__________________________________________________________________________________________________

re_lu_4 (ReLU) (None, 96, 180, 320) 0 block_1_depthwise_bn[0][0]

__________________________________________________________________________________________________

block_1_project (Conv2D) (None, 24, 180, 320) 2304 re_lu_4[0][0]

__________________________________________________________________________________________________

block_1_project_bn (BatchNormal (None, 24, 180, 320) 96 block_1_project[0][0]

__________________________________________________________________________________________________

block_2_expand (Conv2D) (None, 144, 180, 320 3456 block_1_project_bn[0][0]

__________________________________________________________________________________________________

block_2_expand_bn (BatchNormali (None, 144, 180, 320 576 block_2_expand[0][0]

__________________________________________________________________________________________________

re_lu_5 (ReLU) (None, 144, 180, 320 0 block_2_expand_bn[0][0]

__________________________________________________________________________________________________

block_2_depthwise_pad (ZeroPadd (None, 144, 182, 322 0 re_lu_5[0][0]

__________________________________________________________________________________________________

block_2_depthwise (DepthwiseCon (None, 144, 180, 320 1296 block_2_depthwise_pad[0][0]

__________________________________________________________________________________________________

block_2_depthwise_bn (BatchNorm (None, 144, 180, 320 576 block_2_depthwise[0][0]

__________________________________________________________________________________________________

re_lu_6 (ReLU) (None, 144, 180, 320 0 block_2_depthwise_bn[0][0]

__________________________________________________________________________________________________

block_2_project (Conv2D) (None, 24, 180, 320) 3456 re_lu_6[0][0]

__________________________________________________________________________________________________

block_2_project_bn (BatchNormal (None, 24, 180, 320) 96 block_2_project[0][0]

__________________________________________________________________________________________________

block_2_add (Add) (None, 24, 180, 320) 0 block_1_project_bn[0][0]

block_2_project_bn[0][0]

__________________________________________________________________________________________________

block_3_expand (Conv2D) (None, 144, 180, 320 3456 block_2_add[0][0]

__________________________________________________________________________________________________

block_3_expand_bn (BatchNormali (None, 144, 180, 320 576 block_3_expand[0][0]

__________________________________________________________________________________________________

re_lu_7 (ReLU) (None, 144, 180, 320 0 block_3_expand_bn[0][0]

__________________________________________________________________________________________________

block_3_depthwise_pad (ZeroPadd (None, 144, 182, 322 0 re_lu_7[0][0]

__________________________________________________________________________________________________

block_3_depthwise (DepthwiseCon (None, 144, 90, 160) 1296 block_3_depthwise_pad[0][0]

__________________________________________________________________________________________________

block_3_depthwise_bn (BatchNorm (None, 144, 90, 160) 576 block_3_depthwise[0][0]

__________________________________________________________________________________________________

re_lu_8 (ReLU) (None, 144, 90, 160) 0 block_3_depthwise_bn[0][0]

__________________________________________________________________________________________________

block_3_project (Conv2D) (None, 32, 90, 160) 4608 re_lu_8[0][0]

__________________________________________________________________________________________________

block_3_project_bn (BatchNormal (None, 32, 90, 160) 128 block_3_project[0][0]

__________________________________________________________________________________________________

block_4_expand (Conv2D) (None, 192, 90, 160) 6144 block_3_project_bn[0][0]

__________________________________________________________________________________________________

block_4_expand_bn (BatchNormali (None, 192, 90, 160) 768 block_4_expand[0][0]

__________________________________________________________________________________________________

re_lu_9 (ReLU) (None, 192, 90, 160) 0 block_4_expand_bn[0][0]

__________________________________________________________________________________________________

block_4_depthwise_pad (ZeroPadd (None, 192, 92, 162) 0 re_lu_9[0][0]

__________________________________________________________________________________________________

block_4_depthwise (DepthwiseCon (None, 192, 90, 160) 1728 block_4_depthwise_pad[0][0]

__________________________________________________________________________________________________

block_4_depthwise_bn (BatchNorm (None, 192, 90, 160) 768 block_4_depthwise[0][0]

__________________________________________________________________________________________________

re_lu_10 (ReLU) (None, 192, 90, 160) 0 block_4_depthwise_bn[0][0]

__________________________________________________________________________________________________

block_4_project (Conv2D) (None, 32, 90, 160) 6144 re_lu_10[0][0]

__________________________________________________________________________________________________

block_4_project_bn (BatchNormal (None, 32, 90, 160) 128 block_4_project[0][0]

__________________________________________________________________________________________________

block_4_add (Add) (None, 32, 90, 160) 0 block_3_project_bn[0][0]

block_4_project_bn[0][0]

__________________________________________________________________________________________________

block_5_expand (Conv2D) (None, 192, 90, 160) 6144 block_4_add[0][0]

__________________________________________________________________________________________________

block_5_expand_bn (BatchNormali (None, 192, 90, 160) 768 block_5_expand[0][0]

__________________________________________________________________________________________________

re_lu_11 (ReLU) (None, 192, 90, 160) 0 block_5_expand_bn[0][0]

__________________________________________________________________________________________________

block_5_depthwise_pad (ZeroPadd (None, 192, 92, 162) 0 re_lu_11[0][0]

__________________________________________________________________________________________________

block_5_depthwise (DepthwiseCon (None, 192, 90, 160) 1728 block_5_depthwise_pad[0][0]

__________________________________________________________________________________________________

block_5_depthwise_bn (BatchNorm (None, 192, 90, 160) 768 block_5_depthwise[0][0]

__________________________________________________________________________________________________

re_lu_12 (ReLU) (None, 192, 90, 160) 0 block_5_depthwise_bn[0][0]

__________________________________________________________________________________________________

block_5_project (Conv2D) (None, 32, 90, 160) 6144 re_lu_12[0][0]

__________________________________________________________________________________________________

block_5_project_bn (BatchNormal (None, 32, 90, 160) 128 block_5_project[0][0]

__________________________________________________________________________________________________

block_5_add (Add) (None, 32, 90, 160) 0 block_4_add[0][0]

block_5_project_bn[0][0]

__________________________________________________________________________________________________

block_6_expand (Conv2D) (None, 192, 90, 160) 6144 block_5_add[0][0]

__________________________________________________________________________________________________

block_6_expand_bn (BatchNormali (None, 192, 90, 160) 768 block_6_expand[0][0]

__________________________________________________________________________________________________

re_lu_13 (ReLU) (None, 192, 90, 160) 0 block_6_expand_bn[0][0]

__________________________________________________________________________________________________

block_6_depthwise_pad (ZeroPadd (None, 192, 92, 162) 0 re_lu_13[0][0]

__________________________________________________________________________________________________

block_6_depthwise (DepthwiseCon (None, 192, 45, 80) 1728 block_6_depthwise_pad[0][0]

__________________________________________________________________________________________________

block_6_depthwise_bn (BatchNorm (None, 192, 45, 80) 768 block_6_depthwise[0][0]

__________________________________________________________________________________________________

re_lu_14 (ReLU) (None, 192, 45, 80) 0 block_6_depthwise_bn[0][0]

__________________________________________________________________________________________________

block_6_project (Conv2D) (None, 64, 45, 80) 12288 re_lu_14[0][0]

__________________________________________________________________________________________________

block_6_project_bn (BatchNormal (None, 64, 45, 80) 256 block_6_project[0][0]

__________________________________________________________________________________________________

block_7_expand (Conv2D) (None, 384, 45, 80) 24576 block_6_project_bn[0][0]

__________________________________________________________________________________________________

block_7_expand_bn (BatchNormali (None, 384, 45, 80) 1536 block_7_expand[0][0]

__________________________________________________________________________________________________

re_lu_15 (ReLU) (None, 384, 45, 80) 0 block_7_expand_bn[0][0]

__________________________________________________________________________________________________

block_7_depthwise_pad (ZeroPadd (None, 384, 47, 82) 0 re_lu_15[0][0]

__________________________________________________________________________________________________

block_7_depthwise (DepthwiseCon (None, 384, 45, 80) 3456 block_7_depthwise_pad[0][0]

__________________________________________________________________________________________________

block_7_depthwise_bn (BatchNorm (None, 384, 45, 80) 1536 block_7_depthwise[0][0]

__________________________________________________________________________________________________

re_lu_16 (ReLU) (None, 384, 45, 80) 0 block_7_depthwise_bn[0][0]

__________________________________________________________________________________________________

block_7_project (Conv2D) (None, 64, 45, 80) 24576 re_lu_16[0][0]

__________________________________________________________________________________________________

block_7_project_bn (BatchNormal (None, 64, 45, 80) 256 block_7_project[0][0]

__________________________________________________________________________________________________

block_7_add (Add) (None, 64, 45, 80) 0 block_6_project_bn[0][0]

block_7_project_bn[0][0]

__________________________________________________________________________________________________

block_8_expand (Conv2D) (None, 384, 45, 80) 24576 block_7_add[0][0]

__________________________________________________________________________________________________

block_8_expand_bn (BatchNormali (None, 384, 45, 80) 1536 block_8_expand[0][0]

__________________________________________________________________________________________________

re_lu_17 (ReLU) (None, 384, 45, 80) 0 block_8_expand_bn[0][0]

__________________________________________________________________________________________________

block_8_depthwise_pad (ZeroPadd (None, 384, 47, 82) 0 re_lu_17[0][0]

__________________________________________________________________________________________________

block_8_depthwise (DepthwiseCon (None, 384, 45, 80) 3456 block_8_depthwise_pad[0][0]

__________________________________________________________________________________________________

block_8_depthwise_bn (BatchNorm (None, 384, 45, 80) 1536 block_8_depthwise[0][0]

__________________________________________________________________________________________________

re_lu_18 (ReLU) (None, 384, 45, 80) 0 block_8_depthwise_bn[0][0]

__________________________________________________________________________________________________

block_8_project (Conv2D) (None, 64, 45, 80) 24576 re_lu_18[0][0]

__________________________________________________________________________________________________

block_8_project_bn (BatchNormal (None, 64, 45, 80) 256 block_8_project[0][0]

__________________________________________________________________________________________________

block_8_add (Add) (None, 64, 45, 80) 0 block_7_add[0][0]

block_8_project_bn[0][0]

__________________________________________________________________________________________________

block_9_expand (Conv2D) (None, 384, 45, 80) 24576 block_8_add[0][0]

__________________________________________________________________________________________________

block_9_expand_bn (BatchNormali (None, 384, 45, 80) 1536 block_9_expand[0][0]

__________________________________________________________________________________________________

re_lu_19 (ReLU) (None, 384, 45, 80) 0 block_9_expand_bn[0][0]

__________________________________________________________________________________________________

block_9_depthwise_pad (ZeroPadd (None, 384, 47, 82) 0 re_lu_19[0][0]

__________________________________________________________________________________________________

block_9_depthwise (DepthwiseCon (None, 384, 45, 80) 3456 block_9_depthwise_pad[0][0]

__________________________________________________________________________________________________

block_9_depthwise_bn (BatchNorm (None, 384, 45, 80) 1536 block_9_depthwise[0][0]

__________________________________________________________________________________________________

re_lu_20 (ReLU) (None, 384, 45, 80) 0 block_9_depthwise_bn[0][0]

__________________________________________________________________________________________________

block_9_project (Conv2D) (None, 64, 45, 80) 24576 re_lu_20[0][0]

__________________________________________________________________________________________________

block_9_project_bn (BatchNormal (None, 64, 45, 80) 256 block_9_project[0][0]

__________________________________________________________________________________________________

block_9_add (Add) (None, 64, 45, 80) 0 block_8_add[0][0]

block_9_project_bn[0][0]

__________________________________________________________________________________________________

block_10_expand (Conv2D) (None, 384, 45, 80) 24576 block_9_add[0][0]

__________________________________________________________________________________________________

block_10_expand_bn (BatchNormal (None, 384, 45, 80) 1536 block_10_expand[0][0]

__________________________________________________________________________________________________

re_lu_21 (ReLU) (None, 384, 45, 80) 0 block_10_expand_bn[0][0]

__________________________________________________________________________________________________

block_10_depthwise_pad (ZeroPad (None, 384, 47, 82) 0 re_lu_21[0][0]

__________________________________________________________________________________________________

block_10_depthwise (DepthwiseCo (None, 384, 45, 80) 3456 block_10_depthwise_pad[0][0]

__________________________________________________________________________________________________

block_10_depthwise_bn (BatchNor (None, 384, 45, 80) 1536 block_10_depthwise[0][0]

__________________________________________________________________________________________________

re_lu_22 (ReLU) (None, 384, 45, 80) 0 block_10_depthwise_bn[0][0]

__________________________________________________________________________________________________

block_10_project (Conv2D) (None, 96, 45, 80) 36864 re_lu_22[0][0]

__________________________________________________________________________________________________

block_10_project_bn (BatchNorma (None, 96, 45, 80) 384 block_10_project[0][0]

__________________________________________________________________________________________________

block_11_expand (Conv2D) (None, 576, 45, 80) 55296 block_10_project_bn[0][0]

__________________________________________________________________________________________________

block_11_expand_bn (BatchNormal (None, 576, 45, 80) 2304 block_11_expand[0][0]

__________________________________________________________________________________________________

re_lu_23 (ReLU) (None, 576, 45, 80) 0 block_11_expand_bn[0][0]

__________________________________________________________________________________________________

block_11_depthwise_pad (ZeroPad (None, 576, 47, 82) 0 re_lu_23[0][0]

__________________________________________________________________________________________________

block_11_depthwise (DepthwiseCo (None, 576, 45, 80) 5184 block_11_depthwise_pad[0][0]

__________________________________________________________________________________________________

block_11_depthwise_bn (BatchNor (None, 576, 45, 80) 2304 block_11_depthwise[0][0]

__________________________________________________________________________________________________

re_lu_24 (ReLU) (None, 576, 45, 80) 0 block_11_depthwise_bn[0][0]

__________________________________________________________________________________________________

block_11_project (Conv2D) (None, 96, 45, 80) 55296 re_lu_24[0][0]

__________________________________________________________________________________________________

block_11_project_bn (BatchNorma (None, 96, 45, 80) 384 block_11_project[0][0]

__________________________________________________________________________________________________

block_11_add (Add) (None, 96, 45, 80) 0 block_10_project_bn[0][0]

block_11_project_bn[0][0]

__________________________________________________________________________________________________

block_12_expand (Conv2D) (None, 576, 45, 80) 55296 block_11_add[0][0]

__________________________________________________________________________________________________

block_12_expand_bn (BatchNormal (None, 576, 45, 80) 2304 block_12_expand[0][0]

__________________________________________________________________________________________________

re_lu_25 (ReLU) (None, 576, 45, 80) 0 block_12_expand_bn[0][0]

__________________________________________________________________________________________________

block_12_depthwise_pad (ZeroPad (None, 576, 47, 82) 0 re_lu_25[0][0]

__________________________________________________________________________________________________

block_12_depthwise (DepthwiseCo (None, 576, 45, 80) 5184 block_12_depthwise_pad[0][0]

__________________________________________________________________________________________________

block_12_depthwise_bn (BatchNor (None, 576, 45, 80) 2304 block_12_depthwise[0][0]

__________________________________________________________________________________________________

re_lu_26 (ReLU) (None, 576, 45, 80) 0 block_12_depthwise_bn[0][0]

__________________________________________________________________________________________________

block_12_project (Conv2D) (None, 96, 45, 80) 55296 re_lu_26[0][0]

__________________________________________________________________________________________________

block_12_project_bn (BatchNorma (None, 96, 45, 80) 384 block_12_project[0][0]

__________________________________________________________________________________________________

block_12_add (Add) (None, 96, 45, 80) 0 block_11_add[0][0]

block_12_project_bn[0][0]

__________________________________________________________________________________________________

output_bbox (Conv2D) (None, 20, 45, 80) 1940 block_12_add[0][0]

__________________________________________________________________________________________________

output_cov (Conv2D) (None, 5, 45, 80) 485 block_12_add[0][0]

==================================================================================================

Total params: 561,081

Trainable params: 544,953

Non-trainable params: 16,128

__________________________________________________________________________________________________

target/truncation is not updated to match the crop areaif the dataset contains target/truncation.

target/truncation is not updated to match the crop areaif the dataset contains target/truncation.

target/truncation is not updated to match the crop areaif the dataset contains target/truncation.

target/truncation is not updated to match the crop areaif the dataset contains target/truncation.

2020-03-20 18:15:18,274 [INFO] iva.detectnet_v2.scripts.train: Found 394 samples in training set

target/truncation is not updated to match the crop areaif the dataset contains target/truncation.

target/truncation is not updated to match the crop areaif the dataset contains target/truncation.

target/truncation is not updated to match the crop areaif the dataset contains target/truncation.

target/truncation is not updated to match the crop areaif the dataset contains target/truncation.

2020-03-20 18:15:23,069 [INFO] iva.detectnet_v2.scripts.train: Found 63 samples in validation set

INFO:tensorflow:Create CheckpointSaverHook.

2020-03-20 18:15:26,773 [INFO] tensorflow: Create CheckpointSaverHook.

INFO:tensorflow:Graph was finalized.

2020-03-20 18:15:27,678 [INFO] tensorflow: Graph was finalized.

2020-03-20 18:15:27.678562: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1512] Adding visible gpu devices: 0

2020-03-20 18:15:27.678589: I tensorflow/core/common_runtime/gpu/gpu_device.cc:984] Device interconnect StreamExecutor with strength 1 edge matrix:

2020-03-20 18:15:27.678596: I tensorflow/core/common_runtime/gpu/gpu_device.cc:990] 0

2020-03-20 18:15:27.678603: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1003] 0: N

2020-03-20 18:15:27.678741: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1115] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 7129 MB memory) -> physical GPU (device: 0, name: GeForce GTX 1070 Ti, pci bus id: 0000:01:00.0, compute capability: 6.1)

INFO:tensorflow:Running local_init_op.

2020-03-20 18:15:28,990 [INFO] tensorflow: Running local_init_op.

INFO:tensorflow:Done running local_init_op.

2020-03-20 18:15:29,211 [INFO] tensorflow: Done running local_init_op.

INFO:tensorflow:Saving checkpoints for step-0.

2020-03-20 18:15:36,307 [INFO] tensorflow: Saving checkpoints for step-0.

2020-03-20 18:16:01.212227: I tensorflow/stream_executor/dso_loader.cc:152] successfully opened CUDA library libcublas.so.10.0 locally

2020-03-20 18:16:01.318690: I tensorflow/core/kernels/cuda_solvers.cc:159] Creating CudaSolver handles for stream 0x66e4a60

2020-03-20 18:16:02.231869: W tensorflow/core/common_runtime/bfc_allocator.cc:211] Allocator (GPU_0_bfc) ran out of memory trying to allocate 3.09GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.

2020-03-20 18:16:02.238245: W tensorflow/core/common_runtime/bfc_allocator.cc:211] Allocator (GPU_0_bfc) ran out of memory trying to allocate 3.40GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.

2020-03-20 18:16:02.280204: W tensorflow/core/common_runtime/bfc_allocator.cc:211] Allocator (GPU_0_bfc) ran out of memory trying to allocate 3.08GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.

2020-03-20 18:16:02.283842: W tensorflow/core/common_runtime/bfc_allocator.cc:211] Allocator (GPU_0_bfc) ran out of memory trying to allocate 3.24GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.

2020-03-20 18:16:02.385723: W tensorflow/core/common_runtime/bfc_allocator.cc:211] Allocator (GPU_0_bfc) ran out of memory trying to allocate 3.07GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.

2020-03-20 18:16:02.386907: W tensorflow/core/common_runtime/bfc_allocator.cc:211] Allocator (GPU_0_bfc) ran out of memory trying to allocate 3.24GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.

2020-03-20 18:16:02.389052: W tensorflow/core/common_runtime/bfc_allocator.cc:211] Allocator (GPU_0_bfc) ran out of memory trying to allocate 3.05GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.

2020-03-20 18:16:02.390794: W tensorflow/core/common_runtime/bfc_allocator.cc:211] Allocator (GPU_0_bfc) ran out of memory trying to allocate 3.08GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.

2020-03-20 18:16:02.481579: W tensorflow/core/common_runtime/bfc_allocator.cc:211] Allocator (GPU_0_bfc) ran out of memory trying to allocate 3.06GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.

2020-03-20 18:16:02.484193: W tensorflow/core/common_runtime/bfc_allocator.cc:211] Allocator (GPU_0_bfc) ran out of memory trying to allocate 3.40GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.

INFO:tensorflow:epoch = 0.0, loss = 0.54143083, step = 0

2020-03-20 18:16:03,019 [INFO] tensorflow: epoch = 0.0, loss = 0.54143083, step = 0

2020-03-20 18:16:03,019 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/task_progress_monitor_hook.pyc: Epoch 0/500: loss: 0.54143 Time taken: 0:00:00 ETA: 0:00:00

INFO:tensorflow:epoch = 0.07070707070707072, loss = 0.547261, step = 7 (7.983 sec)

2020-03-20 18:16:11,001 [INFO] tensorflow: epoch = 0.07070707070707072, loss = 0.547261, step = 7 (7.983 sec)

INFO:tensorflow:global_step/sec: 0.804866

2020-03-20 18:16:14,201 [INFO] tensorflow: global_step/sec: 0.804866

INFO:tensorflow:epoch = 0.14141414141414144, loss = 0.5353711, step = 14 (5.714 sec)

2020-03-20 18:16:16,715 [INFO] tensorflow: epoch = 0.14141414141414144, loss = 0.5353711, step = 14 (5.714 sec)

INFO:tensorflow:global_step/sec: 1.99343

2020-03-20 18:16:18,716 [INFO] tensorflow: global_step/sec: 1.99343

2020-03-20 18:16:21,823 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/sample_counter_hook.pyc: Samples / sec: 4.383

INFO:tensorflow:epoch = 0.25252525252525254, loss = 0.5337452, step = 25 (5.615 sec)

2020-03-20 18:16:22,330 [INFO] tensorflow: epoch = 0.25252525252525254, loss = 0.5337452, step = 25 (5.615 sec)

INFO:tensorflow:global_step/sec: 1.95584

2020-03-20 18:16:23,317 [INFO] tensorflow: global_step/sec: 1.95584

INFO:tensorflow:epoch = 0.36363636363636365, loss = 0.52291155, step = 36 (5.520 sec)

2020-03-20 18:16:27,850 [INFO] tensorflow: epoch = 0.36363636363636365, loss = 0.52291155, step = 36 (5.520 sec)

INFO:tensorflow:global_step/sec: 1.98533

2020-03-20 18:16:27,851 [INFO] tensorflow: global_step/sec: 1.98533

INFO:tensorflow:global_step/sec: 1.96531

2020-03-20 18:16:32,430 [INFO] tensorflow: global_step/sec: 1.96531

INFO:tensorflow:epoch = 0.4747474747474748, loss = 0.5189539, step = 47 (5.587 sec)

2020-03-20 18:16:33,437 [INFO] tensorflow: epoch = 0.4747474747474748, loss = 0.5189539, step = 47 (5.587 sec)

2020-03-20 18:16:34,474 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/sample_counter_hook.pyc: Samples / sec: 7.905

INFO:tensorflow:global_step/sec: 1.97808

2020-03-20 18:16:36,980 [INFO] tensorflow: global_step/sec: 1.97808

INFO:tensorflow:epoch = 0.5858585858585859, loss = 0.51284915, step = 58 (5.570 sec)

2020-03-20 18:16:39,007 [INFO] tensorflow: epoch = 0.5858585858585859, loss = 0.51284915, step = 58 (5.570 sec)

INFO:tensorflow:global_step/sec: 1.92838

2020-03-20 18:16:41,647 [INFO] tensorflow: global_step/sec: 1.92838

INFO:tensorflow:epoch = 0.696969696969697, loss = 0.51052964, step = 69 (5.674 sec)

2020-03-20 18:16:44,680 [INFO] tensorflow: epoch = 0.696969696969697, loss = 0.51052964, step = 69 (5.674 sec)

INFO:tensorflow:global_step/sec: 1.98563

2020-03-20 18:16:46,180 [INFO] tensorflow: global_step/sec: 1.98563

2020-03-20 18:16:47,239 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/sample_counter_hook.pyc: Samples / sec: 7.835

INFO:tensorflow:epoch = 0.8080808080808082, loss = 0.508822, step = 80 (5.693 sec)

2020-03-20 18:16:50,374 [INFO] tensorflow: epoch = 0.8080808080808082, loss = 0.508822, step = 80 (5.693 sec)

INFO:tensorflow:global_step/sec: 1.91123

2020-03-20 18:16:50,889 [INFO] tensorflow: global_step/sec: 1.91123

INFO:tensorflow:global_step/sec: 1.92121

2020-03-20 18:16:55,573 [INFO] tensorflow: global_step/sec: 1.92121

INFO:tensorflow:epoch = 0.9191919191919192, loss = 0.50488627, step = 91 (5.703 sec)

2020-03-20 18:16:56,077 [INFO] tensorflow: epoch = 0.9191919191919192, loss = 0.50488627, step = 91 (5.703 sec)

INFO:tensorflow:global_step/sec: 1.85098

2020-03-20 18:17:00,436 [INFO] tensorflow: global_step/sec: 1.85098

2020-03-20 18:17:00,436 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/task_progress_monitor_hook.pyc: Epoch 1/500: loss: 0.00096 Time taken: 0:01:00.898557 ETA: 8:26:28.379943

2020-03-20 18:17:00,436 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/sample_counter_hook.pyc: Samples / sec: 7.577

INFO:tensorflow:epoch = 1.0303030303030305, loss = 0.0009843046, step = 102 (5.908 sec)

2020-03-20 18:17:01,985 [INFO] tensorflow: epoch = 1.0303030303030305, loss = 0.0009843046, step = 102 (5.908 sec)

INFO:tensorflow:global_step/sec: 1.93703

2020-03-20 18:17:05,082 [INFO] tensorflow: global_step/sec: 1.93703

INFO:tensorflow:epoch = 1.1414141414141414, loss = 0.00095792516, step = 113 (5.737 sec)

2020-03-20 18:17:07,722 [INFO] tensorflow: epoch = 1.1414141414141414, loss = 0.00095792516, step = 113 (5.737 sec)

INFO:tensorflow:global_step/sec: 1.90025

2020-03-20 18:17:09,818 [INFO] tensorflow: global_step/sec: 1.90025

INFO:tensorflow:epoch = 1.2525252525252526, loss = 0.0009852392, step = 124 (5.682 sec)

2020-03-20 18:17:13,404 [INFO] tensorflow: epoch = 1.2525252525252526, loss = 0.0009852392, step = 124 (5.682 sec)

2020-03-20 18:17:13,404 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/sample_counter_hook.pyc: Samples / sec: 7.711

INFO:tensorflow:global_step/sec: 1.9556

2020-03-20 18:17:14,420 [INFO] tensorflow: global_step/sec: 1.9556

INFO:tensorflow:epoch = 1.3636363636363638, loss = 0.00089812273, step = 135 (5.703 sec)

2020-03-20 18:17:19,107 [INFO] tensorflow: epoch = 1.3636363636363638, loss = 0.00089812273, step = 135 (5.703 sec)

INFO:tensorflow:global_step/sec: 1.92018

2020-03-20 18:17:19,107 [INFO] tensorflow: global_step/sec: 1.92018

INFO:tensorflow:global_step/sec: 1.91623

2020-03-20 18:17:23,804 [INFO] tensorflow: global_step/sec: 1.91623

INFO:tensorflow:epoch = 1.474747474747475, loss = 0.0009279676, step = 146 (5.738 sec)

2020-03-20 18:17:24,844 [INFO] tensorflow: epoch = 1.474747474747475, loss = 0.0009279676, step = 146 (5.738 sec)

2020-03-20 18:17:26,380 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/sample_counter_hook.pyc: Samples / sec: 7.707

INFO:tensorflow:global_step/sec: 1.93415

2020-03-20 18:17:28,457 [INFO] tensorflow: global_step/sec: 1.93415

INFO:tensorflow:epoch = 1.585858585858586, loss = 0.0009106908, step = 157 (5.671 sec)

2020-03-20 18:17:30,516 [INFO] tensorflow: epoch = 1.585858585858586, loss = 0.0009106908, step = 157 (5.671 sec)

INFO:tensorflow:global_step/sec: 1.93502

2020-03-20 18:17:33,108 [INFO] tensorflow: global_step/sec: 1.93502

INFO:tensorflow:epoch = 1.696969696969697, loss = 0.0009452889, step = 168 (5.735 sec)

2020-03-20 18:17:36,251 [INFO] tensorflow: epoch = 1.696969696969697, loss = 0.0009452889, step = 168 (5.735 sec)

INFO:tensorflow:global_step/sec: 1.92834

2020-03-20 18:17:37,776 [INFO] tensorflow: global_step/sec: 1.92834

2020-03-20 18:17:39,344 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/sample_counter_hook.pyc: Samples / sec: 7.714

INFO:tensorflow:epoch = 1.8080808080808082, loss = 0.0009398138, step = 179 (5.668 sec)

2020-03-20 18:17:41,919 [INFO] tensorflow: epoch = 1.8080808080808082, loss = 0.0009398138, step = 179 (5.668 sec)

INFO:tensorflow:global_step/sec: 1.92218

2020-03-20 18:17:42,458 [INFO] tensorflow: global_step/sec: 1.92218

INFO:tensorflow:global_step/sec: 1.9167

2020-03-20 18:17:47,153 [INFO] tensorflow: global_step/sec: 1.9167

INFO:tensorflow:epoch = 1.9191919191919193, loss = 0.0009455455, step = 190 (5.757 sec)

2020-03-20 18:17:47,676 [INFO] tensorflow: epoch = 1.9191919191919193, loss = 0.0009455455, step = 190 (5.757 sec)

INFO:tensorflow:global_step/sec: 1.91853

2020-03-20 18:17:51,844 [INFO] tensorflow: global_step/sec: 1.91853

2020-03-20 18:17:51,845 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/task_progress_monitor_hook.pyc: Epoch 2/500: loss: 0.00092 Time taken: 0:00:51.417653 ETA: 7:06:45.991194

2020-03-20 18:17:52,362 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/sample_counter_hook.pyc: Samples / sec: 7.682

INFO:tensorflow:epoch = 2.0303030303030303, loss = 0.0009290565, step = 201 (5.761 sec)

2020-03-20 18:17:53,438 [INFO] tensorflow: epoch = 2.0303030303030303, loss = 0.0009290565, step = 201 (5.761 sec)

INFO:tensorflow:global_step/sec: 1.8937

2020-03-20 18:17:56,597 [INFO] tensorflow: global_step/sec: 1.8937

INFO:tensorflow:epoch = 2.1414141414141414, loss = 0.0009679984, step = 212 (5.769 sec)

2020-03-20 18:17:59,207 [INFO] tensorflow: epoch = 2.1414141414141414, loss = 0.0009679984, step = 212 (5.769 sec)

INFO:tensorflow:global_step/sec: 1.91842

2020-03-20 18:18:01,288 [INFO] tensorflow: global_step/sec: 1.91842

INFO:tensorflow:epoch = 2.2525252525252526, loss = 0.0009079506, step = 223 (5.740 sec)

2020-03-20 18:18:04,946 [INFO] tensorflow: epoch = 2.2525252525252526, loss = 0.0009079506, step = 223 (5.740 sec)

2020-03-20 18:18:05,468 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/sample_counter_hook.pyc: Samples / sec: 7.630

INFO:tensorflow:global_step/sec: 1.91774

2020-03-20 18:18:05,981 [INFO] tensorflow: global_step/sec: 1.91774

INFO:tensorflow:epoch = 2.3636363636363638, loss = 0.000969926, step = 234 (5.768 sec)

2020-03-20 18:18:10,714 [INFO] tensorflow: epoch = 2.3636363636363638, loss = 0.000969926, step = 234 (5.768 sec)

INFO:tensorflow:global_step/sec: 1.90139

2020-03-20 18:18:10,715 [INFO] tensorflow: global_step/sec: 1.90139

INFO:tensorflow:global_step/sec: 1.90066

2020-03-20 18:18:15,450 [INFO] tensorflow: global_step/sec: 1.90066

INFO:tensorflow:epoch = 2.474747474747475, loss = 0.00086104346, step = 245 (5.783 sec)

2020-03-20 18:18:16,497 [INFO] tensorflow: epoch = 2.474747474747475, loss = 0.00086104346, step = 245 (5.783 sec)

2020-03-20 18:18:18,603 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/sample_counter_hook.pyc: Samples / sec: 7.613

INFO:tensorflow:global_step/sec: 1.91597

2020-03-20 18:18:20,147 [INFO] tensorflow: global_step/sec: 1.91597

INFO:tensorflow:epoch = 2.585858585858586, loss = 0.0009799077, step = 256 (5.711 sec)

2020-03-20 18:18:22,208 [INFO] tensorflow: epoch = 2.585858585858586, loss = 0.0009799077, step = 256 (5.711 sec)

INFO:tensorflow:global_step/sec: 1.91937

2020-03-20 18:18:24,836 [INFO] tensorflow: global_step/sec: 1.91937

INFO:tensorflow:epoch = 2.6969696969696972, loss = 0.00094006327, step = 267 (5.753 sec)

2020-03-20 18:18:27,961 [INFO] tensorflow: epoch = 2.6969696969696972, loss = 0.00094006327, step = 267 (5.753 sec)

INFO:tensorflow:global_step/sec: 1.92418

2020-03-20 18:18:29,514 [INFO] tensorflow: global_step/sec: 1.92418

2020-03-20 18:18:31,623 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/sample_counter_hook.pyc: Samples / sec: 7.681

INFO:tensorflow:epoch = 2.8080808080808084, loss = 0.000921318, step = 278 (5.777 sec)

2020-03-20 18:18:33,738 [INFO] tensorflow: epoch = 2.8080808080808084, loss = 0.000921318, step = 278 (5.777 sec)

INFO:tensorflow:global_step/sec: 1.89646

2020-03-20 18:18:34,259 [INFO] tensorflow: global_step/sec: 1.89646

INFO:tensorflow:global_step/sec: 1.91425

2020-03-20 18:18:38,961 [INFO] tensorflow: global_step/sec: 1.91425

INFO:tensorflow:epoch = 2.9191919191919196, loss = 0.0009311149, step = 289 (5.749 sec)

2020-03-20 18:18:39,486 [INFO] tensorflow: epoch = 2.9191919191919196, loss = 0.0009311149, step = 289 (5.749 sec)

INFO:tensorflow:global_step/sec: 1.89139

2020-03-20 18:18:43,719 [INFO] tensorflow: global_step/sec: 1.89139

2020-03-20 18:18:43,720 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/task_progress_monitor_hook.pyc: Epoch 3/500: loss: 0.00102 Time taken: 0:00:51.835348 ETA: 7:09:22.167956

2020-03-20 18:18:44,758 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/sample_counter_hook.pyc: Samples / sec: 7.614

INFO:tensorflow:epoch = 3.0303030303030307, loss = 0.00094757596, step = 300 (5.801 sec)

2020-03-20 18:18:45,287 [INFO] tensorflow: epoch = 3.0303030303030307, loss = 0.00094757596, step = 300 (5.801 sec)

INFO:tensorflow:global_step/sec: 1.91187

2020-03-20 18:18:48,427 [INFO] tensorflow: global_step/sec: 1.91187

INFO:tensorflow:epoch = 3.141414141414142, loss = 0.0009914932, step = 311 (5.777 sec)

2020-03-20 18:18:51,064 [INFO] tensorflow: epoch = 3.141414141414142, loss = 0.0009914932, step = 311 (5.777 sec)

INFO:tensorflow:global_step/sec: 1.91593

2020-03-20 18:18:53,124 [INFO] tensorflow: global_step/sec: 1.91593

INFO:tensorflow:epoch = 3.2525252525252526, loss = 0.0009799146, step = 322 (5.715 sec)

2020-03-20 18:18:56,779 [INFO] tensorflow: epoch = 3.2525252525252526, loss = 0.0009799146, step = 322 (5.715 sec)

INFO:tensorflow:global_step/sec: 1.90648

2020-03-20 18:18:57,845 [INFO] tensorflow: global_step/sec: 1.90648

2020-03-20 18:18:57,845 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/sample_counter_hook.pyc: Samples / sec: 7.641

INFO:tensorflow:epoch = 3.3636363636363638, loss = 0.00084329536, step = 333 (5.735 sec)

2020-03-20 18:19:02,513 [INFO] tensorflow: epoch = 3.3636363636363638, loss = 0.00084329536, step = 333 (5.735 sec)

INFO:tensorflow:global_step/sec: 1.92764

2020-03-20 18:19:02,514 [INFO] tensorflow: global_step/sec: 1.92764

INFO:tensorflow:global_step/sec: 1.91651

2020-03-20 18:19:07,210 [INFO] tensorflow: global_step/sec: 1.91651

INFO:tensorflow:epoch = 3.474747474747475, loss = 0.00087175716, step = 344 (5.763 sec)

2020-03-20 18:19:08,276 [INFO] tensorflow: epoch = 3.474747474747475, loss = 0.00087175716, step = 344 (5.763 sec)

2020-03-20 18:19:10,886 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/sample_counter_hook.pyc: Samples / sec: 7.668

INFO:tensorflow:global_step/sec: 1.90723

2020-03-20 18:19:11,929 [INFO] tensorflow: global_step/sec: 1.90723

INFO:tensorflow:epoch = 3.585858585858586, loss = 0.00095952966, step = 355 (5.780 sec)

2020-03-20 18:19:14,056 [INFO] tensorflow: epoch = 3.585858585858586, loss = 0.00095952966, step = 355 (5.780 sec)

INFO:tensorflow:global_step/sec: 1.90483

2020-03-20 18:19:16,654 [INFO] tensorflow: global_step/sec: 1.90483

INFO:tensorflow:epoch = 3.6969696969696972, loss = 0.0009282512, step = 366 (5.716 sec)

2020-03-20 18:19:19,772 [INFO] tensorflow: epoch = 3.6969696969696972, loss = 0.0009282512, step = 366 (5.716 sec)

INFO:tensorflow:global_step/sec: 1.91711

2020-03-20 18:19:21,348 [INFO] tensorflow: global_step/sec: 1.91711

2020-03-20 18:19:23,980 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/sample_counter_hook.pyc: Samples / sec: 7.637

INFO:tensorflow:epoch = 3.8080808080808084, loss = 0.0009802162, step = 377 (5.758 sec)

2020-03-20 18:19:25,530 [INFO] tensorflow: epoch = 3.8080808080808084, loss = 0.0009802162, step = 377 (5.758 sec)

INFO:tensorflow:global_step/sec: 1.92291

2020-03-20 18:19:26,029 [INFO] tensorflow: global_step/sec: 1.92291

INFO:tensorflow:global_step/sec: 1.91303

2020-03-20 18:19:30,733 [INFO] tensorflow: global_step/sec: 1.91303

INFO:tensorflow:epoch = 3.9191919191919196, loss = 0.0009512324, step = 388 (5.735 sec)

2020-03-20 18:19:31,265 [INFO] tensorflow: epoch = 3.9191919191919196, loss = 0.0009512324, step = 388 (5.735 sec)

INFO:tensorflow:global_step/sec: 1.9056

2020-03-20 18:19:35,456 [INFO] tensorflow: global_step/sec: 1.9056

2020-03-20 18:19:35,457 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/task_progress_monitor_hook.pyc: Epoch 4/500: loss: 0.00093 Time taken: 0:00:51.785088 ETA: 7:08:05.403648

INFO:tensorflow:epoch = 4.03030303030303, loss = 0.0009777864, step = 399 (5.765 sec)

2020-03-20 18:19:37,030 [INFO] tensorflow: epoch = 4.03030303030303, loss = 0.0009777864, step = 399 (5.765 sec)

2020-03-20 18:19:37,030 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/sample_counter_hook.pyc: Samples / sec: 7.663

INFO:tensorflow:global_step/sec: 1.91782

2020-03-20 18:19:40,149 [INFO] tensorflow: global_step/sec: 1.91782

INFO:tensorflow:epoch = 4.141414141414142, loss = 0.0009197957, step = 410 (5.788 sec)

2020-03-20 18:19:42,818 [INFO] tensorflow: epoch = 4.141414141414142, loss = 0.0009197957, step = 410 (5.788 sec)

INFO:tensorflow:global_step/sec: 1.8968

2020-03-20 18:19:44,894 [INFO] tensorflow: global_step/sec: 1.8968

INFO:tensorflow:epoch = 4.252525252525253, loss = 0.0009904406, step = 421 (5.740 sec)

2020-03-20 18:19:48,557 [INFO] tensorflow: epoch = 4.252525252525253, loss = 0.0009904406, step = 421 (5.740 sec)

INFO:tensorflow:global_step/sec: 1.91622

2020-03-20 18:19:49,591 [INFO] tensorflow: global_step/sec: 1.91622

2020-03-20 18:19:50,109 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/sample_counter_hook.pyc: Samples / sec: 7.646

INFO:tensorflow:epoch = 4.363636363636364, loss = 0.0009531719, step = 432 (5.767 sec)

2020-03-20 18:19:54,324 [INFO] tensorflow: epoch = 4.363636363636364, loss = 0.0009531719, step = 432 (5.767 sec)

INFO:tensorflow:global_step/sec: 1.90127

2020-03-20 18:19:54,324 [INFO] tensorflow: global_step/sec: 1.90127

INFO:tensorflow:global_step/sec: 1.91397

2020-03-20 18:19:59,026 [INFO] tensorflow: global_step/sec: 1.91397

INFO:tensorflow:epoch = 4.474747474747475, loss = 0.0009472327, step = 443 (5.742 sec)

2020-03-20 18:20:00,065 [INFO] tensorflow: epoch = 4.474747474747475, loss = 0.0009472327, step = 443 (5.742 sec)

2020-03-20 18:20:03,241 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/sample_counter_hook.pyc: Samples / sec: 7.615

INFO:tensorflow:global_step/sec: 1.89664

2020-03-20 18:20:03,772 [INFO] tensorflow: global_step/sec: 1.89664

INFO:tensorflow:epoch = 4.5858585858585865, loss = 0.00094064797, step = 454 (5.816 sec)

2020-03-20 18:20:05,882 [INFO] tensorflow: epoch = 4.5858585858585865, loss = 0.00094064797, step = 454 (5.816 sec)

INFO:tensorflow:global_step/sec: 1.90762

2020-03-20 18:20:08,490 [INFO] tensorflow: global_step/sec: 1.90762

INFO:tensorflow:epoch = 4.696969696969697, loss = 0.0009906166, step = 465 (5.740 sec)

2020-03-20 18:20:11,622 [INFO] tensorflow: epoch = 4.696969696969697, loss = 0.0009906166, step = 465 (5.740 sec)

INFO:tensorflow:global_step/sec: 1.91803

2020-03-20 18:20:13,182 [INFO] tensorflow: global_step/sec: 1.91803

2020-03-20 18:20:16,365 [INFO] /usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/tfhooks/sample_counter_hook.pyc: Samples / sec: 7.620

INFO:tensorflow:epoch = 4.808080808080809, loss = 0.00091935333, step = 476 (5.790 sec)

2020-03-20 18:20:17,411 [INFO] tensorflow: epoch = 4.808080808080809, loss = 0.00091935333, step = 476 (5.790 sec)

INFO:tensorflow:global_step/sec: 1.89198

2020-03-20 18:20:17,939 [INFO] tensorflow: global_step/sec: 1.89198

INFO:tensorflow:global_step/sec: 1.92182

2020-03-20 18:20:22,622 [INFO] tensorflow: global_step/sec: 1.92182

INFO:tensorflow:epoch = 4.91919191919192, loss = 0.00093365816, step = 487 (5.718 sec)

2020-03-20 18:20:23,129 [INFO] tensorflow: epoch = 4.91919191919192, loss = 0.00093365816, step = 487 (5.718 sec)

INFO:tensorflow:Saving checkpoints for step-495.

2020-03-20 18:20:26,800 [INFO] tensorflow: Saving checkpoints for step-495.

2020-03-20 18:20:26,955 [INFO] iva.detectnet_v2.evaluation.evaluation: step 0 / 15, 0.00s/step

/usr/local/lib/python2.7/dist-packages/iva/detectnet_v2/evaluation/metadata.py:38: UserWarning: One or more metadata field(s) are missing from ground_truth batch_data, and will be replaced with defaults: ['frame/camera_location']

2020-03-20 18:21:32,403 [INFO] iva.detectnet_v2.evaluation.evaluation: step 10 / 15, 6.54s/step

Epoch 5/500

=========================

Validation cost: 0.000688

Mean average_precision (in %): 0.0000

class name average precision (in %)

----------------- --------------------------

hose_connected 0

hose_disconnected 0

truck 0

valve_closed 0

valve_open 0

Median Inference Time: 0.029676

I have also tried setting load_graph to false in the train spec, and the initial mAP is still reverting back to 0%.

As you mentioned, your training or retraining results can reach about 94% in the end, so the mAP at an intermediate epoch does not matter.

It absolutely matters! When I use other frameworks like Keras + Tensorflow or Darknet, I can load the weights from a previously trained model and pick up from where I left off, which saves countless hours of training time. This library is the “Transfer Learning Toolkit” but it appears to not even be transferring weights from my own previously trained models.

Hi harryhsl8c,

In my own tests, the pre-trained weights do have an effect on retraining. Your case is very strange. It may be caused by your dataset, which has only 300+ training images.

Did you ever run the default KITTI dataset test in the Jupyter notebook? I tested it and found that starting from a tlt model quickly gives a good mAP during retraining.

I suggest you test with the KITTI dataset to check.

Also, if you pruned a tlt model and set it as the pre-trained model for retraining, it is necessary to set “load_graph” to true.

Yes I initially went through the notebooks using the provided datasets, but I only tried using the NGC pretrained models. I have not yet tried training a model using an already trained .tlt model. I will run some experiments tonight and see what the resulting mAP values are for both the standard KITTI dataset and my own custom dataset, using a fixed number of epochs.

During the first set of training with my own custom dataset, I understand that the mAP will be very low in the beginning. After about 50 epochs, the mAP starts to exceed 1% and slowly increases up to around 94% after 200 epochs, then fluctuates between 89-95% mAP until all epochs are complete. It makes no sense that when I take that completed model and train again, it goes back to 0% and takes another 150 epochs to get back up to 94%. Even allowing for the different learning rates from learning-rate annealing, having 0% mAP for the first 50+ epochs makes me think the weights are just not being initialized properly. I will continue to experiment unless you are able to point out something I am missing in my spec file or my training logs.
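For anyone who wants to inspect a log like the one above, here is a minimal Python sketch that pulls the epoch, loss, and step values out of the `INFO:tensorflow:` lines and reports the largest loss change between consecutive entries. The function names and the regular expression are my own, written against the line format in the log posted here; they are not part of TLT.

```python
import re

# Matches lines such as:
# INFO:tensorflow:epoch = 0.9191919191919192, loss = 0.50488627, step = 91 (5.703 sec)
# The timestamped duplicates ("2020-03-20 ... [INFO] tensorflow: epoch = ...")
# are skipped on purpose so each step is counted once.
LINE_RE = re.compile(
    r"^INFO:tensorflow:epoch = (?P<epoch>[0-9.]+), "
    r"loss = (?P<loss>[0-9.eE+-]+), step = (?P<step>\d+)"
)


def parse_loss_lines(log_text):
    """Return a list of (epoch, loss, step) tuples found in the log text."""
    rows = []
    for line in log_text.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            rows.append(
                (float(match.group("epoch")),
                 float(match.group("loss")),
                 int(match.group("step")))
            )
    return rows


def largest_loss_change(rows):
    """Return the consecutive pair of rows with the biggest absolute loss change."""
    if len(rows) < 2:
        return None
    pairs = zip(rows, rows[1:])
    return max(pairs, key=lambda pair: abs(pair[1][1] - pair[0][1]))


# A few lines copied from the log posted above.
sample = """\
INFO:tensorflow:epoch = 0.9191919191919192, loss = 0.50488627, step = 91 (5.703 sec)
2020-03-20 18:16:56,077 [INFO] tensorflow: epoch = 0.9191919191919192, loss = 0.50488627, step = 91 (5.703 sec)
INFO:tensorflow:epoch = 1.0303030303030305, loss = 0.0009843046, step = 102 (5.908 sec)
INFO:tensorflow:epoch = 1.1414141414141414, loss = 0.00095792516, step = 113 (5.737 sec)
"""

rows = parse_loss_lines(sample)
before, after = largest_loss_change(rows)
print("largest change: step %d (loss %.5f) -> step %d (loss %.5f)"
      % (before[2], before[1], after[2], after[1]))
```

In the posted log the largest change sits between step 91 and step 102, right at the epoch 0 to epoch 1 boundary, where the reported loss falls from about 0.505 to about 0.00098.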

I am going to run the following experiments and report results, with DetectNet_V2 and MobileNet_V2, on my own custom dataset, 200 epochs, with the only changes being:

1. Using the NGC registry (`.hdf5` model file) as `pretrained_model_file`
2. Using the resulting model from #1 above (`.tlt` model file) as `pretrained_model_file`
3. Using nothing for `pretrained_model_file`… (the documentation does not state that this parameter is required, so my assumption is that the weights will be initialized randomly if no value is given for `pretrained_model_file`)

Meanwhile, the other thought I have is if I’m trying to use one of my own previously-trained .tlt model files, should I be providing a value for freeze_blocks? That may be the next set of experiments I try after these 3.

Several comments here.

- For the training spec, please do not set “load_graph”. For the retraining spec, if you pruned a tlt model and set it as the pre-trained model, it is necessary to set “load_graph” to true.

- For training time, if you pruned the tlt model after training, the tlt model will be smaller, which saves time during retraining. My previous tests on the KITTI dataset confirm this.

- For pretrained weights loading: in my previous results on the KITTI dataset, I set 120 epochs for both training and retraining. The mAP at the 20th epoch of retraining was much better than the mAP at the 20th epoch of training, which shows that the pre-trained weights take effect.

- The model you get after training is a tlt model, not an etlt model.
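The “load_graph” rule in the first comment can be sketched as a small helper that writes the relevant spec fragment. This is only an illustration: the field names `pretrained_model_file` and `load_graph` come from this thread, their placement inside a `model_config` block is an assumption about the DetectNet_v2 spec layout, the function name and file paths are hypothetical, and all other fields a real `model_config` needs are omitted.

```python
def model_config_fragment(pretrained_model_file: str, pruned: bool) -> str:
    """Build a partial model_config block as spec text.

    Assumption: load_graph is only emitted (as true) when the pre-trained
    model is a pruned .tlt model used for retraining; for a normal training
    spec it is left out entirely, as advised in this thread.
    """
    lines = [
        "model_config {",
        f'  pretrained_model_file: "{pretrained_model_file}"',
    ]
    if pruned:
        # Retraining from a pruned model: load the pruned graph structure too.
        lines.append("  load_graph: true")
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical paths, for illustration only.
    print(model_config_fragment("/path/to/unpruned_model.tlt", pruned=False))
    print(model_config_fragment("/path/to/pruned_model.tlt", pruned=True))
```

The fragment would still need to be merged into a complete spec file before being passed to tlt-train.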

- For the 3 experiments I’m performing above, I will set `load_graph` to false

- The 3 experiments above are to be performed without any pruning

- Interesting, I’m curious to see what happens with my custom dataset, will know in approx 6 hours

- You are right, I keep saying `.etlt` when I mean `.tlt`. I will correct my previous posts.

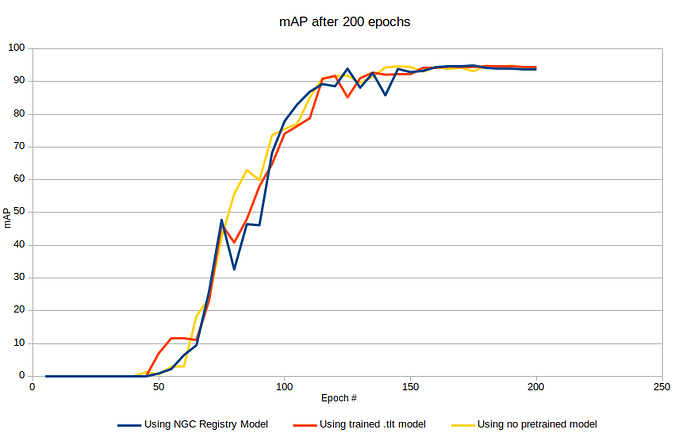

Ok, @Morganh I’ve completed the 3 experiments and the results appear to support the theory that the TLT isn’t initializing the model weights at all. I get the same training performance whether I use the NGC pre-trained model, my own trained model, or nothing for the pretrained_model_file parameter in the spec file. I have saved each of the spec files and the training logs and can attach them here if you would like to see them. They are all very similar to what I’ve already shared above.

Again, these were the 3 experiments I ran:

1. Using the NGC registry (`.hdf5` model file) as `pretrained_model_file`
2. Using the resulting model from #1 above (`.tlt` model file) as `pretrained_model_file`
3. Using nothing for `pretrained_model_file`… (the documentation does not state that this parameter is required, so my assumption is that the weights will be initialized randomly if no value is given for `pretrained_model_file`)

Here are the training results on a graph of mAP every 5 epochs:

Hi harryhsl8c,

Could you attach all the spec files along with the log here?

Also, did you try other TLT networks, such as SSD or Faster-RCNN?

I have not performed these experiments with other model types.

Spec and log files available here:

https://1drv.ms/u/s!Aq7sB_tNnHUGgY9djw1apz7v_ARhpw?e=nGi1th

Hi harryhsl8c,

I cannot access the folder for some unknown reason. Is it possible to attach a zip file here?

Hi harryhsl8c,

The first 50 epochs are all back at 0% mAP because of the soft_start parameter in the spec. You set the number of training epochs to 500, so for the first 0.1 * 500 = 50 epochs the mAP is not high.

soft_start: 0.1

Making soft_start smaller will let the mAP increase earlier.
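To make the arithmetic concrete, here is a minimal Python sketch of how soft_start relates to the number of warm-up epochs. The warm-up length follows directly from the 0.1 * 500 calculation above. The learning-rate curve is only an assumed shape (a log-scale ramp from a minimum to a maximum rate, a plateau, then a decay back down), not TLT's confirmed implementation, and the `min_lr`, `max_lr` and `annealing` values are hypothetical.

```python
import math


def soft_start_epochs(num_epochs: int, soft_start: float) -> float:
    """Epochs spent in the warm-up phase: soft_start is a fraction of training."""
    return num_epochs * soft_start


def learning_rate(progress: float, min_lr: float, max_lr: float,
                  soft_start: float, annealing: float) -> float:
    """Assumed soft-start annealing shape (illustrative, not TLT's exact code).

    progress is the fraction of training completed, in [0, 1].
    """
    if progress < soft_start:
        t = progress / soft_start            # ramp up from min_lr to max_lr
    elif progress < annealing or annealing >= 1.0:
        t = 1.0                              # plateau at max_lr
    else:
        t = (1.0 - progress) / (1.0 - annealing)  # decay back to min_lr
    # Interpolate on a log scale between the two rates.
    return math.exp(math.log(min_lr) + t * (math.log(max_lr) - math.log(min_lr)))


if __name__ == "__main__":
    for total_epochs in (500, 120):
        print(total_epochs, "epochs ->", soft_start_epochs(total_epochs, 0.1), "warm-up epochs")
    # Hypothetical rates, chosen only to show the shape of the curve.
    for epoch in (0, 10, 25, 50, 350, 500):
        lr = learning_rate(epoch / 500, min_lr=5e-6, max_lr=5e-4,
                           soft_start=0.1, annealing=0.7)
        print(epoch, lr)
```

Under this assumed shape, the learning rate during the first soft_start fraction of training is well below its maximum, which would fit the slow start seen in the early epochs of a long run.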

Also, from your previous test result graph, I can see that the mAP at epoch 50 with the pre-trained model is higher than with no pre-trained model. That indicates the pretrained model helps.