• Hardware Platform (Jetson / GPU): Jetson Xavier

• DeepStream Version: 6.0

• JetPack Version (valid for Jetson only): 4.6

• TensorRT Version: 8.0.1

• How to reproduce the issue?

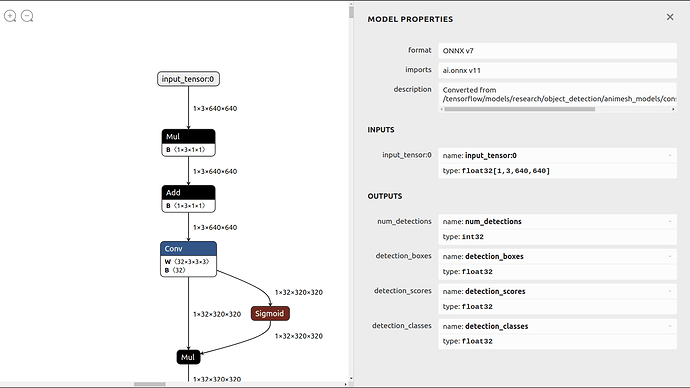

I have an SSD EfficientNet model trained with the TensorFlow Object Detection API 2.x. I converted it to ONNX with create_onnx.py from TensorRT/samples/python/efficientdet at main · NVIDIA/TensorRT · GitHub. The resulting graph uses a BatchedNMS_TRT layer and has four output layers, a layout that DeepStream supports. From this ONNX model I built a .engine file on my Xavier using build_engine.py, and that step succeeded. After that, I built the libnvds_infercustomparser.so plugin as described in the DeepStream documentation for custom model inference.

Following is my config file:

```ini
[property]
gpu-id=0
net-scale-factor=1.0
offsets=103.939;116.779;123.68
model-color-format=0
labelfile-path=/opt/nvidia/deepstream/deepstream-6.0/sources/deepstream_python_apps/apps/deepstream-ssd-parser/animesh_construction/excavator_class_list.txt
model-engine-file=/opt/nvidia/deepstream/deepstream-6.0/sources/deepstream_python_apps/apps/deepstream-ssd-parser/animesh_construction/saved_model_using_code_batched_NMS.engine
infer-dims=3;640;640
#uff-input-order=0
#force-implicit-batch-dim=1
maintain-aspect-ratio=1
#uff-input-blob-name=Input
batch-size=1
## 0=FP32, 1=INT8, 2=FP16 mode
network-mode=0
num-detected-classes=4
parse-bbox-func-name = NvDsInferParseCustomTfSSD
custom-lib-path=/opt/nvidia/deepstream/deepstream-6.0/sources/libs/nvdsinfer_customparser/libnvds_infercustomparser.so
output-blob-names=num_detections;detection_scores;detection_classes;detection_boxes
interval=0
gie-unique-id=1
is-classifier=0
#network-type=0

[class-attrs-all]
pre-cluster-threshold=0.3
roi-top-offset=0
roi-bottom-offset=0
detected-min-w=0
detected-min-h=0
detected-max-w=0
detected-max-h=0
```
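To double-check that the multi-valued keys in the config above are internally consistent (one offset per input channel in `infer-dims`, and the output names listed in `output-blob-names`), the `[property]` section can be read with Python's standard `configparser`. This is a minimal, hypothetical sanity-check sketch: `check_property_section` is not part of DeepStream, only an abbreviated copy of the relevant keys is used, and nvinfer reads the file with its own parser, so this only approximates how the values are interpreted.

```python
import configparser

# Abbreviated copy of the [property] keys from the config above (hypothetical subset).
CONFIG_TEXT = """
[property]
infer-dims=3;640;640
offsets=103.939;116.779;123.68
num-detected-classes=4
parse-bbox-func-name = NvDsInferParseCustomTfSSD
output-blob-names=num_detections;detection_scores;detection_classes;detection_boxes
"""


def check_property_section(text: str) -> dict:
    """Parse an nvinfer-style config and return a few derived values to sanity-check."""
    parser = configparser.ConfigParser()  # inline comments are off by default, so ';' survives
    parser.read_string(text)
    prop = parser["property"]

    # Multi-valued keys in this config are separated by ';'.
    infer_dims = [int(v) for v in prop["infer-dims"].split(";")]
    offsets = [float(v) for v in prop["offsets"].split(";")]
    outputs = prop["output-blob-names"].split(";")

    return {
        "infer_dims": infer_dims,
        # Expect one mean offset per input channel (first entry of infer-dims).
        "one_offset_per_channel": len(offsets) == infer_dims[0],
        "num_classes": prop.getint("num-detected-classes"),
        # configparser strips the spaces around '=' in this line.
        "parser_func": prop["parse-bbox-func-name"],
        "outputs": outputs,
    }


if __name__ == "__main__":
    for key, value in check_property_section(CONFIG_TEXT).items():
        print(f"{key}: {value}")
```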

I’m running this from a Python application.

This is my terminal output:

```
Creating Pipeline
Creating Source
Creating Video Converter
Creating EGLSink
Playing cam /dev/video0
Adding elements to Pipeline
Linking elements in the Pipeline
Starting pipeline
Using winsys: x11
0:00:00.416767011 20569 0x32e718f0 WARN nvinfer gstnvinfer.cpp:635:gst_nvinfer_logger:<primary-inference> NvDsInferContext[UID 1]: Warning from NvDsInferContextImpl::initialize() <nvdsinfer_context_impl.cpp:1161> [UID = 1]: Warning, OpenCV has been deprecated. Using NMS for clustering instead of cv::groupRectangles with topK = 20 and NMS Threshold = 0.5
0:00:00.418165461 20569 0x32e718f0 INFO nvinfer gstnvinfer.cpp:638:gst_nvinfer_logger:<primary-inference> NvDsInferContext[UID 1]: Info from NvDsInferContextImpl::buildModel() <nvdsinfer_context_impl.cpp:1914> [UID = 1]: Trying to create engine from model files
WARNING: [TRT]: onnx2trt_utils.cpp:364: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.
WARNING: [TRT]: builtin_op_importers.cpp:4552: Attribute scoreBits not found in plugin node! Ensure that the plugin creator has a default value defined or the engine may fail to build.
WARNING: [TRT]: Detected invalid timing cache, setup a local cache instead
0:02:50.662373034 20569 0x32e718f0 INFO nvinfer gstnvinfer.cpp:638:gst_nvinfer_logger:<primary-inference> NvDsInferContext[UID 1]: Info from NvDsInferContextImpl::buildModel() <nvdsinfer_context_impl.cpp:1947> [UID = 1]: serialize cuda engine to file: /opt/nvidia/deepstream/deepstream-6.0/sources/deepstream_python_apps/apps/deepstream-ssd-parser/animesh_construction/saved_model_using_code_batched_NMS.onnx_b1_gpu0_fp32.engine successfully
INFO: [Implicit Engine Info]: layers num: 5
0 INPUT kFLOAT input_tensor:0 3x640x640
1 OUTPUT kINT32 num_detections 0
2 OUTPUT kFLOAT detection_boxes 100x4
3 OUTPUT kFLOAT detection_scores 100
4 OUTPUT kFLOAT detection_classes 100
0:02:50.755151792 20569 0x32e718f0 ERROR nvinfer gstnvinfer.cpp:632:gst_nvinfer_logger:<primary-inference> NvDsInferContext[UID 1]: Error in NvDsInferContextImpl::allocateBuffers() <nvdsinfer_context_impl.cpp:1430> [UID = 1]: Failed to allocate cuda output buffer during context initialization
0:02:50.755272471 20569 0x32e718f0 ERROR nvinfer gstnvinfer.cpp:632:gst_nvinfer_logger:<primary-inference> NvDsInferContext[UID 1]: Error in NvDsInferContextImpl::initialize() <nvdsinfer_context_impl.cpp:1280> [UID = 1]: Failed to allocate buffers
0:02:50.840017746 20569 0x32e718f0 WARN nvinfer gstnvinfer.cpp:841:gst_nvinfer_start:<primary-inference> error: Failed to create NvDsInferContext instance
0:02:50.840110648 20569 0x32e718f0 WARN nvinfer gstnvinfer.cpp:841:gst_nvinfer_start:<primary-inference> error: Config file path: /opt/nvidia/deepstream/deepstream-6.0/sources/deepstream_python_apps/apps/deepstream-ssd-parser/ssd_efficientnet.txt, NvDsInfer Error: NVDSINFER_CUDA_ERROR
Error: gst-resource-error-quark: Failed to create NvDsInferContext instance (1): /dvs/git/dirty/git-master_linux/deepstream/sdk/src/gst-plugins/gst-nvinfer/gstnvinfer.cpp(841): gst_nvinfer_start (): /GstPipeline:pipeline0/GstNvInfer:primary-inference:
Config file path: /opt/nvidia/deepstream/deepstream-6.0/sources/deepstream_python_apps/apps/deepstream-ssd-parser/ssd_efficientnet.txt, NvDsInfer Error: NVDSINFER_CUDA_ERROR
```
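In the engine info in the log above, `num_detections` is reported with a dimension string of `0`, while every other layer has a non-zero shape. Assuming nvinfer sizes each buffer as the product of the reported dimensions times the element size times the batch size (an assumption, not something the log states), the sketch below reads those five lines and shows that `num_detections` would get a zero-byte buffer, which may be related to the `Failed to allocate cuda output buffer` error. The regex, `DTYPE_BYTES` table, and `buffer_sizes` helper are hypothetical illustrations, not DeepStream APIs.

```python
import math
import re

# The "Implicit Engine Info" layer lines copied from the log above.
ENGINE_INFO = """\
0 INPUT kFLOAT input_tensor:0 3x640x640
1 OUTPUT kINT32 num_detections 0
2 OUTPUT kFLOAT detection_boxes 100x4
3 OUTPUT kFLOAT detection_scores 100
4 OUTPUT kFLOAT detection_classes 100
"""

# Bytes per element for the data types that appear in the log.
DTYPE_BYTES = {"kFLOAT": 4, "kINT32": 4}

# index, direction, dtype, layer name, dims such as "100x4".
LINE_RE = re.compile(r"^(\d+)\s+(INPUT|OUTPUT)\s+(\w+)\s+(\S+)\s+([\dx]+)$")


def buffer_sizes(info: str, batch_size: int = 1) -> dict:
    """Return {layer_name: bytes}, ASSUMING size = product(dims) * dtype size * batch."""
    sizes = {}
    for line in info.strip().splitlines():
        match = LINE_RE.match(line.strip())
        if not match:
            continue  # skip anything that is not a layer line
        _, _, dtype, name, dims = match.groups()
        elements = math.prod(int(d) for d in dims.split("x"))
        sizes[name] = elements * DTYPE_BYTES[dtype] * batch_size
    return sizes


if __name__ == "__main__":
    for name, nbytes in buffer_sizes(ENGINE_INFO).items():
        flag = "  <-- zero-size buffer" if nbytes == 0 else ""
        print(f"{name}: {nbytes} bytes{flag}")
```

Under that assumption, `num_detections` is the only layer flagged as a zero-size buffer.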

What should be done to overcome this issue?