I use a simple hierarchy of 3D data structures to build a grid on the CPU and to search quickly for occupied cells.

    struct Voxel
    {
        uint8_t ID = 0;
        uint8_t hit_counter = 0;
    };

    struct Chunk_level_1
    {
        uint8_t counter = 0;
        Voxel voxel[10][10][10] = {0}; // 1 000
    };

    struct Chunk_level_2
    {
        uint8_t counter = 0;
        Chunk_level_1 Chk_Lv1[10][10][10] = {0}; // 1 000 000
    };

    Chunk_level_2 Chk_Lv2[10][10][10] = {0}; // 1 000 000 000
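To make the nesting concrete, here is a minimal sketch of how a global cell coordinate could be split across the three levels. It assumes each axis runs from 0 to 999 and that each level consumes one decimal digit of the coordinate. `AxisIndex` and `split_axis` are hypothetical names introduced for illustration, not part of the code above.

```cpp
#include <cstdint>

// Hypothetical helper: the per-axis index at each level of the hierarchy.
struct AxisIndex {
    uint8_t lv2;    // index into Chk_Lv2 (0..9)
    uint8_t lv1;    // index into Chk_Lv1 inside that chunk (0..9)
    uint8_t voxel;  // index into voxel inside that chunk (0..9)
};

// Assumption: g is a global coordinate along one axis, in the range [0, 1000).
// Hundreds digit -> level 2, tens digit -> level 1, ones digit -> voxel.
constexpr AxisIndex split_axis(uint32_t g) {
    return AxisIndex{
        static_cast<uint8_t>(g / 100),
        static_cast<uint8_t>((g / 10) % 10),
        static_cast<uint8_t>(g % 10)
    };
}
```

With `ix`, `iy`, `iz` computed this way for each axis, a cell would be reached as `Chk_Lv2[ix.lv2][iy.lv2][iz.lv2].Chk_Lv1[ix.lv1][iy.lv1][iz.lv1].voxel[ix.voxel][iy.voxel][iz.voxel]`, and a chunk whose `counter` is zero can be skipped without descending into it.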

I want to declare it as a global variable so that the entire grid lives in GPU memory, update it on the GPU from RGB-D data, and periodically synchronize the filled cells with a similar structure on the CPU. But when I try to compile the following, the build seems to loop forever until my SSD runs out of space.

    __device__ struct Voxel
    {
        uint8_t ID = 0;
        uint8_t hit_counter = 0;
    };

    __device__ struct Chunk_level_1
    {
        uint8_t counter = 0;
        Voxel voxel[10][10][10] = {0}; // 1 000
    };

    __device__ struct Chunk_level_2
    {
        uint8_t counter = 0;
        Chunk_level_1 Chk_Lv1[10][10][10] = {0}; // 1 000 000
    };

    __device__ Chunk_level_2 Chk_Lv2[10][10][10] = {0}; // 1 000 000 000
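For scale, here is a quick host-side check of how large that global array is. This is only a sketch: it assumes the usual layout in which `uint8_t` members need no padding (the `static_assert`s fail at compile time if that does not hold), and `grid_bytes` is a hypothetical name introduced here.

```cpp
#include <cstddef>
#include <cstdint>

// Same layout as the structs above, without the __device__ qualifiers.
struct Voxel {
    uint8_t ID = 0;
    uint8_t hit_counter = 0;
};

struct Chunk_level_1 {
    uint8_t counter = 0;
    Voxel voxel[10][10][10] = {};          // 1 000 voxels
};

struct Chunk_level_2 {
    uint8_t counter = 0;
    Chunk_level_1 Chk_Lv1[10][10][10] = {}; // 1 000 000 voxels
};

// Assumption: byte-sized members, so no padding is inserted.
static_assert(sizeof(Voxel) == 2, "unexpected padding in Voxel");
static_assert(sizeof(Chunk_level_1) == 1 + 1000 * 2, "unexpected padding");
static_assert(sizeof(Chunk_level_2) == 1 + 1000 * 2001, "unexpected padding");

// Total size of Chunk_level_2 Chk_Lv2[10][10][10] in bytes.
// Only sizeof is used, so no 2 GB object is ever instantiated here.
constexpr std::size_t grid_bytes() {
    return static_cast<std::size_t>(10) * 10 * 10 * sizeof(Chunk_level_2);
}
```

Under those assumptions `grid_bytes()` is 2 001 001 000, so the single `__device__` array is roughly 2 GB (about 1.86 GiB) of statically initialized data.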

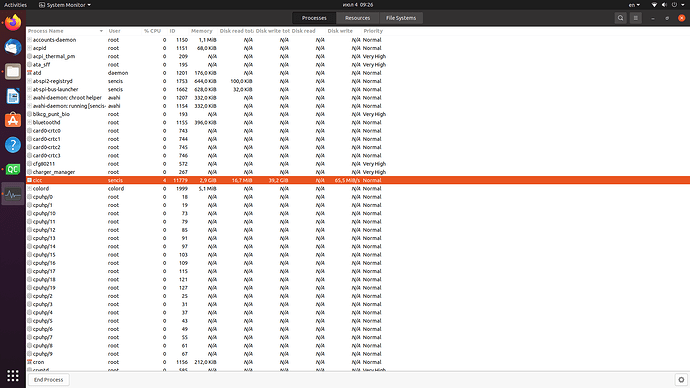

I am using CUDA Toolkit 12.0.1 on a mobile RTX 3050 Ti. My qmake config:

    # CUDA
    # nvcc flags (ptxas option verbose is always useful)
    NVCCFLAGS = --compiler-options -fno-strict-aliasing -use_fast_math --ptxas-options=-v

    # Path to cuda toolkit install
    CUDA_DIR = /usr/local/cuda-12.0

    # GPU architecture (ADJUST FOR YOUR GPU)
    CUDA_GENCODE = arch=compute_86,code=sm_86

    # manually add CUDA sources (ADJUST MANUALLY)
    CUDA_SOURCES += cudamap.cu

    # Path to header and libs files
    INCLUDEPATH += $$CUDA_DIR/include

    # libs used in your code
    LIBS += -L $$CUDA_DIR/lib64 -lcudart -lcuda

    cuda.commands = $$CUDA_DIR/bin/nvcc -c -gencode $$CUDA_GENCODE $$NVCCFLAGS -o ${QMAKE_FILE_OUT} ${QMAKE_FILE_NAME}
    cuda.dependency_type = TYPE_C
    cuda.depend_command = $$CUDA_DIR/bin/nvcc -M ${QMAKE_FILE_NAME} | sed \"s/^.*: //\" #For Qt 5.12.2
    cuda.input = CUDA_SOURCES
    cuda.output = ${OBJECTS_DIR}${QMAKE_FILE_BASE}_cuda.o

    # Tell Qt that we want add more stuff to the Makefile
    QMAKE_EXTRA_COMPILERS += cuda