I am trying to use cuda-gdb under WSL2. My driver version is 531.41, my CUDA version is 12.1, and my GPU is an RTX 2060S.

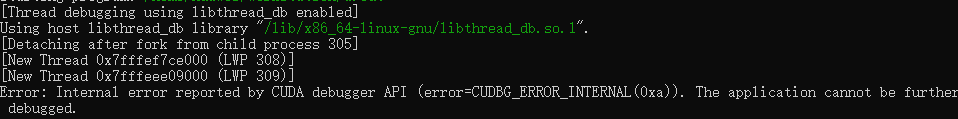

When I use cuda-gdb to debug my program, it reports “Error: Internal error reported by CUDA debugger API (error=CUDBG_ERROR_INTERNAL(0xa)). The application cannot be further debugged.” after executing the first CUDA function, and also after starting new threads.

Hello Hiao, could you please check if the registry key HKEY_LOCAL_MACHINE\SOFTWARE\NVIDIA Corporation\GPUDebugger\EnableInterface is present, with a value of (DWORD)1?

If not, create it and try again.
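From inside WSL2, the value can also be inspected with Windows' `reg.exe` through WSL interop. This is a minimal sketch, assuming interop is enabled (the WSL default) and that `reg query` prints the value as `EnableInterface    REG_DWORD    0x1`; the function and variable names are hypothetical helpers, not part of any NVIDIA tool.

```bash
#!/usr/bin/env bash
# Sketch: check the GPUDebugger EnableInterface value from WSL2 via reg.exe.
# Assumes WSL interop is enabled so Windows executables run from this shell.

GPU_DEBUGGER_KEY='HKLM\SOFTWARE\NVIDIA Corporation\GPUDebugger'

# Read `reg query` output on stdin and print the data field of the
# EnableInterface REG_DWORD value (e.g. 0x1). reg.exe emits CRLF line
# endings, so carriage returns are stripped first.
enable_interface_value() {
  tr -d '\r' | awk '$1 == "EnableInterface" && $2 == "REG_DWORD" { print $3 }'
}

# Query the registry and succeed only if the value is present and equal to 1.
check_enable_interface() {
  local value
  value=$(reg.exe query "$GPU_DEBUGGER_KEY" /v EnableInterface 2>/dev/null |
    enable_interface_value)
  if [ "$value" = "0x1" ]; then
    echo "EnableInterface = 1"
  else
    echo "EnableInterface missing or not 1 (got: ${value:-nothing})"
    return 1
  fi
}
```

If `check_enable_interface` reports the value as missing, it can be created from an Administrator Command Prompt or PowerShell with `reg add "HKLM\SOFTWARE\NVIDIA Corporation\GPUDebugger" /v EnableInterface /t REG_DWORD /d 1 /f`; writing under HKLM fails without elevation.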

Yes, I have checked. The registry key “EnableInterface” is already set to 1 (REG_DWORD).

Can you please provide the output of nvidia-smi?

Thank you @hiao. Can you run any CUDA app outside of the debugger?

Also, can you try the latest toolkit version, 12.1 Update 1?

Yes, I can run CUDA apps outside the debugger. I have also just tried the latest version, and the problem still exists.

I am having a similar issue. Was there ever a resolution, or do we just have to wait for CUDA 12.2?

The issue is under investigation. How did you set up your WSL environment with CUDA?

I am also having a similar issue. My GPU is an RTX 3050 Laptop GPU. I have tried different driver and CUDA versions, but none of them worked.

Similar issue here, on a freshly installed setup:

- WSL2
- CUDA Toolkit 12.3
- Windows 11 driver 546.33
- Running the vectorAdd sample in VS Code with the Nsight plugin

I can't stop at a breakpoint even in host C++ code (e.g. main). Is this not working yet in WSL2?
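One way to tell whether the problem lies in the VS Code/Nsight integration or in cuda-gdb itself is to set the same breakpoint from the command line. This sketch assumes the sample binary is `./vectorAdd`, that it was built with host debug info (e.g. `nvcc -g`), and that cuda-gdb, like upstream gdb, prints a line containing `Breakpoint 1, main` when the breakpoint is hit; both function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: check whether cuda-gdb stops at a host breakpoint on main,
# independently of VS Code. The default binary ./vectorAdd is an assumption.

# Succeed if gdb-style output on stdin shows breakpoint 1 being hit in main.
# gdb reports a hit as "Breakpoint 1, main (...)" or, for threaded programs,
# as 'Thread 1 "name" hit Breakpoint 1, main (...)'.
stopped_at_main() {
  grep -q 'Breakpoint 1, main'
}

# Batch mode: set the breakpoint, run, print a backtrace, then exit.
check_main_breakpoint() {
  local prog=${1:-./vectorAdd} out
  out=$(cuda-gdb -batch -ex 'break main' -ex run -ex bt "$prog" 2>&1)
  printf '%s\n' "$out"
  if printf '%s\n' "$out" | stopped_at_main; then
    echo "OK: stopped at main"
  else
    echo "FAIL: did not stop at main"
    return 1
  fi
}
```

If `check_main_breakpoint ./vectorAdd` stops at main in a WSL2 terminal while the VS Code session does not, the IDE setup is the more likely culprit; if it fails here too, the problem is in cuda-gdb on WSL2 itself.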

Hi, @drstriate

Please make sure the registry key HKEY_LOCAL_MACHINE\SOFTWARE\NVIDIA Corporation\GPUDebugger\EnableInterface is present, with a value of (DWORD)1.

Thanks for this. The key was already set properly, but after the Toolkit 12.3 update I did manage to get the samples to compile again by adding the following environment settings, found elsewhere on this forum:

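```bash
export PATH=/usr/local/cuda-12.3/bin:$PATH
# x86_64 Linux installs put the CUDA libraries in lib64; the ${VAR:+:...}
# form avoids a trailing empty entry when the variable was previously unset.
export LD_LIBRARY_PATH=/usr/local/cuda-12.3/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}
export CPATH=/usr/local/cuda-12.3/targets/x86_64-linux/include${CPATH:+:${CPATH}}
```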
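To confirm such settings actually took effect in the current shell, a check along these lines may help. It is a sketch that assumes the toolkit lives in /usr/local/cuda-12.3, as in the exports above, and it only inspects PATH, CPATH and where `nvcc` resolves; `path_has` and `check_cuda_env` are hypothetical names.

```bash
#!/usr/bin/env bash
# Sketch: verify that the current shell picks up the intended CUDA toolkit.
# The default toolkit directory /usr/local/cuda-12.3 is an assumption.

# Succeed if directory $2 is an exact entry of the colon-separated list $1.
path_has() {
  case ":$1:" in
    *":$2:"*) return 0 ;;
    *) return 1 ;;
  esac
}

check_cuda_env() {
  local cuda_home=${1:-/usr/local/cuda-12.3}
  local status=0
  path_has "$PATH" "$cuda_home/bin" ||
    { echo "PATH is missing $cuda_home/bin"; status=1; }
  path_has "${CPATH:-}" "$cuda_home/targets/x86_64-linux/include" ||
    { echo "CPATH is missing $cuda_home/targets/x86_64-linux/include"; status=1; }
  # An older toolkit earlier in PATH would shadow the intended nvcc.
  case "$(command -v nvcc)" in
    "$cuda_home/bin/nvcc") echo "nvcc -> $cuda_home/bin/nvcc" ;;
    *) echo "nvcc does not resolve to $cuda_home/bin/nvcc"; status=1 ;;
  esac
  return "$status"
}
```

Running `check_cuda_env` with no argument checks the 12.3 paths; passing another directory checks a different toolkit install.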

Thanks for the update!

So you now have the samples building and running successfully on WSL, right?

Does cuda-gdb ./vectorAdd work now? If not, can you tell us which GPU you have and paste the output of cuda-gdb?
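The details requested in this thread (nvidia-smi output, toolkit version, whether the app runs outside the debugger, and the cuda-gdb output) can be gathered in one go. This is a sketch that assumes the program is `./vectorAdd` and that `nvidia-smi`, `nvcc` and `cuda-gdb` are on PATH (it notes any that are not); `run_if_present` and `collect_cuda_debug_info` are hypothetical names.

```bash
#!/usr/bin/env bash
# Sketch: collect GPU/driver, toolkit and cuda-gdb output for a bug report.
# The default program ./vectorAdd is an assumption; pass another as $1.

# Run a command if it exists on PATH, otherwise say that it is missing.
run_if_present() {
  if command -v "$1" >/dev/null 2>&1; then
    "$@" 2>&1
  else
    echo "$1 not found in PATH"
  fi
}

collect_cuda_debug_info() {
  local prog=${1:-./vectorAdd}

  echo "== nvidia-smi =="
  run_if_present nvidia-smi

  echo "== nvcc --version =="
  run_if_present nvcc --version

  echo "== plain run of $prog (outside the debugger) =="
  "$prog" 2>&1
  echo "exit status: $?"

  echo "== cuda-gdb run of $prog =="
  # -batch exits after the -ex commands; bt shows where execution stopped.
  run_if_present cuda-gdb -batch -ex run -ex bt "$prog"
}
```

For example, `collect_cuda_debug_info ./vectorAdd | tee cuda-debug-info.txt` prints everything and keeps a copy to paste into a reply.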
