Hardware Platform (Jetson / GPU): Jetson NX

DeepStream Version: 5.0

JetPack Version (valid for Jetson only): JetPack 4.5

I am trying to insert LPDNet and LPRNet as two separate SGIEs into my existing DeepStream pipeline, and I have run into some problems.

In short, I am trying to reproduce what the GitHub repo shows: the PGIE locates the cars, its results are passed to the first SGIE, which detects the license plates, and those are then passed to the second SGIE, which recognizes the plate numbers.

Prework:

I have downloaded, converted, and written everything I use (engine files, label files, PGIE and SGIE configs, etc.). They are listed at the end of this post.

Then something bizarre happened, and after double-checking almost everything I still cannot see what went wrong. When I used LPD (License Plate Detector, which finds where the plate is) as the PGIE and LPR (License Plate Recognizer, which reads the characters) as the SGIE, everything worked fine: the OSD showed where the license plate was and what the characters were. The pipeline I used, in short (just FYI): streammux->pgie(LPD)->sgie(LPR)->sink

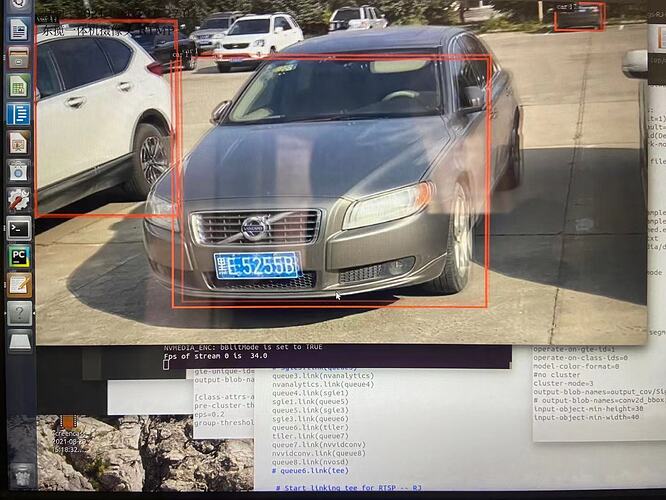

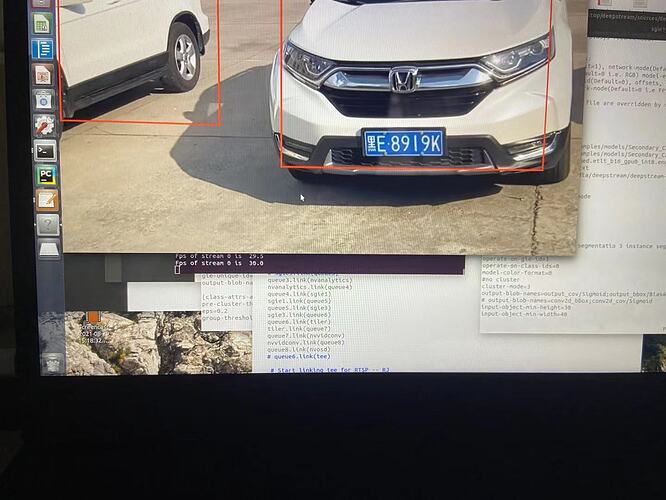

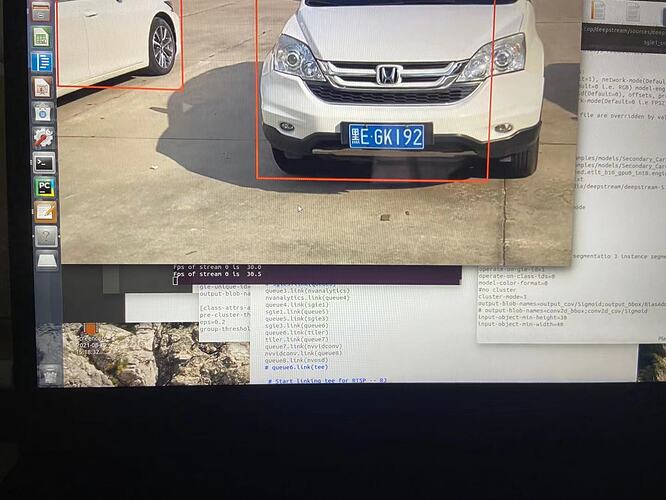

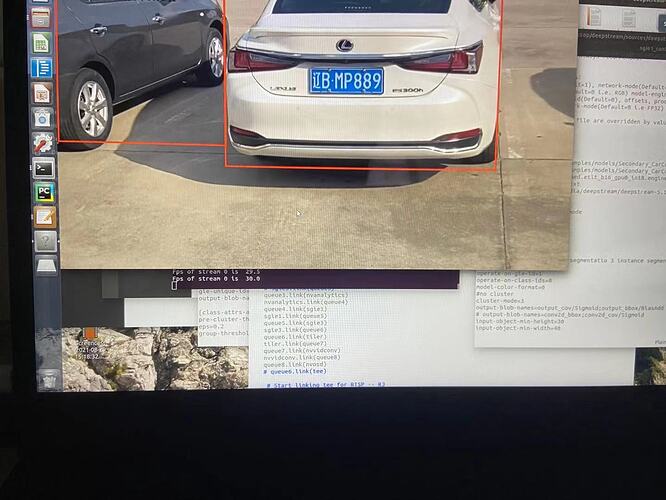

Next, I wanted to add LPD and LPR as SGIEs to the pipeline, with TrafficCamNet as the PGIE. The result I was hoping to get was:

But the result I got was:

As you can see, only the PGIE bounding boxes were shown on screen: no license plate bounding boxes and no numbers, even though I have added LPD and LPR as SGIEs in the pipeline. The main pipeline and all the inference configs are listed below. I am hoping to get some help here, thanks.
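One way to narrow this down is to count, in a buffer probe on the nvdsosd sink pad, how many objects each GIE attached to each frame. In pyds, every `NvDsObjectMeta` carries `unique_component_id` (the `gie-unique-id` of the nvinfer that created it) and `class_id`. The sketch below is only the counting part, kept free of GStreamer so it runs on its own; in a real probe the pairs would be collected while walking `frame_meta.obj_meta_list`. The name `count_objects_per_gie` is hypothetical. If no objects with ID 2 ever appear, SGIE1 (LPD) is not producing plate boxes at all, so the problem is upstream of the OSD. Note that LPR (`network-type=1`, a classifier) does not create new objects; its output would be attached to the plate objects as classifier metadata instead.

```python
from collections import Counter
from typing import Iterable, Tuple


def count_objects_per_gie(objects: Iterable[Tuple[int, int]]) -> Counter:
    """Tally objects by the gie-unique-id of the nvinfer that produced them.

    Each item is a (unique_component_id, class_id) pair. In a real pad probe
    these would be read from pyds.NvDsObjectMeta while walking
    frame_meta.obj_meta_list; here they are plain tuples.
    """
    return Counter(gie_id for gie_id, _class_id in objects)


if __name__ == "__main__":
    # Illustrative frame: two vehicles from the PGIE (gie-unique-id=1),
    # nothing from SGIE1 (LPD, gie-unique-id=2).
    counts = count_objects_per_gie([(1, 0), (1, 0)])
    print(dict(counts))  # {1: 2}
    print(counts[2])     # 0, i.e. no plate boxes from SGIE1 in this frame
```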

Pipeline:

```python
streammux.link(queue1)
queue1.link(pgie)
pgie.link(queue2)
queue2.link(tracker)
tracker.link(queue3)
queue3.link(nvanalytics)
nvanalytics.link(queue4)
queue4.link(sgie1)
sgie1.link(queue5)
queue5.link(sgie2)
sgie2.link(queue6)
queue6.link(tiler)
tiler.link(queue7)
queue7.link(nvvidconv)
nvvidconv.link(queue8)
queue8.link(nvosd)
nvosd.link(tee)
queue9.link(nvvidconv_postosd)
nvvidconv_postosd.link(caps)
caps.link(encoder)
encoder.link(codecparse)
codecparse.link(flvmux)
flvmux.link(sink2)
```
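Every `link()` call above returns a boolean, and the program ignores it, so a failed link (for example because a pad is already linked or the pad templates are incompatible) goes unnoticed. A small helper can link a chain in order and stop at the first failure. This is a sketch that assumes only that each element has `link()` and `get_name()`, which `Gst.Element` provides; the helper `link_chain` and the stand-in `FakeElement` class are hypothetical, used so the example runs without GStreamer.

```python
from typing import Sequence


def link_chain(elements: Sequence) -> None:
    """Link elements[0] -> elements[1] -> ... and raise on the first failure.

    Works with any object exposing link() -> bool and get_name(),
    e.g. Gst.Element in a real pipeline.
    """
    for upstream, downstream in zip(elements, elements[1:]):
        if not upstream.link(downstream):
            raise RuntimeError(
                f"Failed to link {upstream.get_name()} -> {downstream.get_name()}"
            )


class FakeElement:
    """Hypothetical stand-in for Gst.Element, only for this example."""

    def __init__(self, name: str, accepts_input: bool = True):
        self.name = name
        self.accepts_input = accepts_input
        self.downstream = None

    def get_name(self) -> str:
        return self.name

    def link(self, other: "FakeElement") -> bool:
        # Refuse a second downstream link or a peer that takes no input.
        if self.downstream is not None or not other.accepts_input:
            return False
        self.downstream = other
        return True


if __name__ == "__main__":
    names = ["streammux", "queue1", "pgie", "queue2", "tracker", "queue3",
             "nvanalytics", "queue4", "sgie1", "queue5", "sgie2", "queue6",
             "tiler", "queue7", "nvvidconv", "queue8", "nvosd", "tee"]
    chain = [FakeElement(n) for n in names]
    link_chain(chain)
    print(" -> ".join(e.get_name() for e in chain))
```

In the real program the same call would be `link_chain([streammux, queue1, pgie, ..., nvosd, tee])` with the actual Gst elements.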

PGIE config:

```ini
[property]
gpu-id=0
net-scale-factor=0.0039215697906911373
model-engine-file=ccpd_pruned.etlt_b16_gpu0_int8.engine
labelfile-path=labels.txt
int8-calib-file=../../../../samples/models/Primary_Detector/cal_trt.bin
force-implicit-batch-dim=1
batch-size=16
process-mode=1
model-color-format=0
network-mode=1
num-detected-classes=4
interval=0
gie-unique-id=1
output-blob-names=conv2d_bbox;conv2d_cov/Sigmoid

[class-attrs-all]
pre-cluster-threshold=0.2
eps=0.2
group-threshold=1
```

SGIE1 (LPD) config:

```ini
[property]
gpu-id=0
net-scale-factor=1
model-engine-file=ccpd_pruned.etlt_b16_gpu0_int8.engine
labelfile-path=labels_lpd.txt
force-implicit-batch-dim=1
batch-size=16
network-mode=1
num-detected-classes=1
##1 Primary 2 Secondary
process-mode=2
interval=0
gie-unique-id=2
#0 detector 1 classifier 2 segmentation 3 instance segmentation
network-type=0
operate-on-gie-id=1
operate-on-class-ids=0
model-color-format=0
#no cluster
cluster-mode=3
output-blob-names=output_cov/Sigmoid;output_bbox/BiasAdd
# output-blob-names=conv2d_bbox;conv2d_cov/Sigmoid
input-object-min-height=30
input-object-min-width=40

[class-attrs-all]
pre-cluster-threshold=0.3
roi-top-offset=0
roi-bottom-offset=0
detected-min-w=0
detected-min-h=0
detected-max-w=0
detected-max-h=0
```
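With `process-mode=2`, the LPD SGIE only runs on upstream objects that pass its input filters: objects produced by `operate-on-gie-id`, with a class listed in `operate-on-class-ids`, and at least `input-object-min-width` x `input-object-min-height` in size (presumably in streammux coordinates, 1920x1080 here). The function below is a simplified model of that selection, written from the config keys above rather than from nvinfer's source, so treat it as an approximation. `DetectedObject`, `sgie_would_process`, and the sample box sizes are hypothetical. It can help check whether the TrafficCamNet vehicle boxes are large enough to be handed to LPD at all.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class DetectedObject:
    gie_id: int     # unique_component_id of the GIE that produced the box
    class_id: int   # e.g. 0 = Vehicle for TrafficCamNet
    width: float    # bbox width, assumed to be in streammux pixels
    height: float   # bbox height, assumed to be in streammux pixels


def sgie_would_process(obj: DetectedObject,
                       operate_on_gie_id: Optional[int],
                       operate_on_class_ids: Optional[Iterable[int]],
                       min_width: float = 0,
                       min_height: float = 0) -> bool:
    """Approximate which upstream objects a secondary nvinfer infers on.

    None for operate_on_gie_id / operate_on_class_ids means "no filter".
    """
    if operate_on_gie_id is not None and obj.gie_id != operate_on_gie_id:
        return False
    if operate_on_class_ids is not None and obj.class_id not in set(operate_on_class_ids):
        return False
    return obj.width >= min_width and obj.height >= min_height


if __name__ == "__main__":
    # Filter values copied from the SGIE1 (LPD) config above
    lpd = dict(operate_on_gie_id=1, operate_on_class_ids=[0],
               min_width=40, min_height=30)
    far_car = DetectedObject(gie_id=1, class_id=0, width=36, height=28)
    near_car = DetectedObject(gie_id=1, class_id=0, width=220, height=160)
    print(sgie_would_process(far_car, **lpd))   # False
    print(sgie_would_process(near_car, **lpd))  # True
```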

SGIE2 (LPR) config:

```ini
[property]
gpu-id=0
model-engine-file=lpr_ch_onnx_b16.engine
labelfile-path=labels_ch.txt
batch-size=16
## 0=FP32, 1=INT8, 2=FP16 mode
network-mode=2
interval=0
num-detected-classes=67
gie-unique-id=4
output-blob-names=output_bbox/BiasAdd;output_cov/Sigmoid
#0=Detection 1=Classifier 2=Segmentation
network-type=1
parse-classifier-func-name=NvDsInferParseCustomNVPlate
custom-lib-path=libnvdsinfer_custom_impl_lpr.so
process-mode=2
operate-on-gie-id=2
net-scale-factor=0.00392156862745098
#net-scale-factor=1.0
#0=RGB 1=BGR 2=GRAY
model-color-format=0
maintain-aspect-ratio=0
#scaling-compute-hw=2

[class-attrs-all]
threshold=0.5
```
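Because the three configs have to agree with each other, a quick consistency check can catch copy-paste slips, such as an SGIE whose `operate-on-gie-id` matches no upstream `gie-unique-id`, or two GIEs pointing at the same `model-engine-file`. (The PGIE and SGIE1 configs above both list `ccpd_pruned.etlt_b16_gpu0_int8.engine`, which may be worth double-checking.) This is a stdlib-only sketch using `configparser`; `load_property_section` and `check_gie_chain` are hypothetical names, and the embedded config strings are trimmed copies of the relevant keys. With the real files you would call `parser.read(path)` instead of `read_string`.

```python
import configparser
from typing import Dict, List


def load_property_section(text: str) -> Dict[str, str]:
    """Parse an nvinfer-style config and return its [property] section."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    return dict(parser["property"])


def check_gie_chain(configs: Dict[str, Dict[str, str]]) -> List[str]:
    """Return problems found across nvinfer configs given in pipeline order."""
    problems = []
    seen_ids = set()
    engines = {}
    for name, props in configs.items():
        gie_id = int(props.get("gie-unique-id", "-1"))
        if gie_id in seen_ids:
            problems.append(f"{name}: duplicate gie-unique-id {gie_id}")
        # A secondary GIE should operate on an ID produced earlier in the pipeline.
        if props.get("process-mode") == "2" and "operate-on-gie-id" in props:
            target = int(props["operate-on-gie-id"])
            if target not in seen_ids:
                problems.append(f"{name}: operate-on-gie-id {target} has no upstream GIE")
        seen_ids.add(gie_id)
        engine = props.get("model-engine-file")
        if engine in engines:
            problems.append(f"{name}: same model-engine-file as {engines[engine]} ({engine})")
        elif engine:
            engines[engine] = name
    return problems


PGIE = "[property]\ngie-unique-id=1\nprocess-mode=1\nmodel-engine-file=ccpd_pruned.etlt_b16_gpu0_int8.engine\n"
SGIE1 = ("[property]\ngie-unique-id=2\nprocess-mode=2\noperate-on-gie-id=1\n"
         "model-engine-file=ccpd_pruned.etlt_b16_gpu0_int8.engine\n")
SGIE2 = ("[property]\ngie-unique-id=4\nprocess-mode=2\noperate-on-gie-id=2\n"
         "model-engine-file=lpr_ch_onnx_b16.engine\n")

if __name__ == "__main__":
    configs = {name: load_property_section(text)
               for name, text in [("pgie", PGIE), ("sgie1", SGIE1), ("sgie2", SGIE2)]}
    for problem in check_gie_chain(configs):
        print(problem)
```

With the trimmed values above it reports only the shared engine file between `pgie` and `sgie1`; the `operate-on-gie-id` chain (1 -> 2 -> 4) is consistent.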

Main program (adapted from deepstream-lpr-app.c and preronamajumder's Python code):

```python
import argparse
import sys
sys.path.append('../')
import gi
gi.require_version('Gst', '1.0')
gi.require_version('GstRtspServer', '1.0')
import configparser
from gi.repository import GObject, Gst, GstRtspServer
from gi.repository import GLib
from ctypes import *
import time
import math
import platform
from common.is_aarch_64 import is_aarch64
from common.bus_call import bus_call
from common.FPS import GETFPS
import pyds

fps_streams={}

MAX_DISPLAY_LEN=64
PGIE_CLASS_ID_VEHICLE = 0
PGIE_CLASS_ID_BICYCLE = 1
PGIE_CLASS_ID_PERSON = 2
PGIE_CLASS_ID_ROADSIGN = 3
MUXER_OUTPUT_WIDTH=1920
MUXER_OUTPUT_HEIGHT=1080
MUXER_BATCH_TIMEOUT_USEC=4000000
TILED_OUTPUT_WIDTH=1280
TILED_OUTPUT_HEIGHT=720
GST_CAPS_FEATURES_NVMM="memory:NVMM"
OSD_PROCESS_MODE= 0
OSD_DISPLAY_TEXT= 1
pgie_classes_str= ["Vehicle", "TwoWheeler", "Person","RoadSign"]

# nvanalytics_src_pad_buffer_probe will extract metadata received on nvtiler sink pad
# and update params for drawing rectangle, object information etc.

def cb_newpad(decodebin, decoder_src_pad,data):
    print("In cb_newpad\n")
    caps=decoder_src_pad.get_current_caps()
    gststruct=caps.get_structure(0)
    gstname=gststruct.get_name()
    source_bin=data
    features=caps.get_features(0)

    # Need to check if the pad created by the decodebin is for video and not
    # audio.
    print("gstname=",gstname)
    if(gstname.find("video")!=-1):
        # Link the decodebin pad only if decodebin has picked nvidia
        # decoder plugin nvdec_*. We do this by checking if the pad caps contain
        # NVMM memory features.
        print("features=",features)
        if features.contains("memory:NVMM"):
            # Get the source bin ghost pad
            bin_ghost_pad=source_bin.get_static_pad("src")
            if not bin_ghost_pad.set_target(decoder_src_pad):
                sys.stderr.write("Failed to link decoder src pad to source bin ghost pad\n")
        else:
            sys.stderr.write(" Error: Decodebin did not pick nvidia decoder plugin.\n")

def decodebin_child_added(child_proxy,Object,name,user_data):
    print("Decodebin child added:", name, "\n")
    if(name.find("decodebin") != -1):
        Object.connect("child-added",decodebin_child_added,user_data)
    if(is_aarch64() and name.find("nvv4l2decoder") != -1):
        print("Setting bufapi_version\n")
        Object.set_property("bufapi-version",True)

def create_source_bin(index,uri):
    print("Creating source bin")

    # Create a source GstBin to abstract this bin's content from the rest of the
    # pipeline
    bin_name="source-bin-%02d" %index
    print(bin_name)
    nbin=Gst.Bin.new(bin_name)
    if not nbin:
        sys.stderr.write(" Unable to create source bin \n")

    # Source element for reading from the uri.
    # We will use decodebin and let it figure out the container format of the
    # stream and the codec and plug the appropriate demux and decode plugins.
    uri_decode_bin=Gst.ElementFactory.make("uridecodebin", "uri-decode-bin")
    if not uri_decode_bin:
        sys.stderr.write(" Unable to create uri decode bin \n")
    # We set the input uri to the source element
    uri_decode_bin.set_property("uri",uri)
    # Connect to the "pad-added" signal of the decodebin which generates a
    # callback once a new pad for raw data has been created by the decodebin
    uri_decode_bin.connect("pad-added",cb_newpad,nbin)
    uri_decode_bin.connect("child-added",decodebin_child_added,nbin)

    # We need to create a ghost pad for the source bin which will act as a proxy
    # for the video decoder src pad. The ghost pad will not have a target right
    # now. Once the decode bin creates the video decoder and generates the
    # cb_newpad callback, we will set the ghost pad target to the video decoder
    # src pad.
    Gst.Bin.add(nbin,uri_decode_bin)
    bin_pad=nbin.add_pad(Gst.GhostPad.new_no_target("src",Gst.PadDirection.SRC))
    if not bin_pad:
        sys.stderr.write(" Failed to add ghost pad in source bin \n")
        return None
    return nbin

def main(args):
    # Check input arguments
    args.append("file:/home/nvidia/Desktop/plate.MP4")
    if len(args) < 2:
        sys.stderr.write("usage: %s <uri1> [uri2] ... [uriN]\n" % args[0])
        sys.exit(1)

    for i in range(0,len(args)-1):
        fps_streams["stream{0}".format(i)]=GETFPS(i)
    number_sources=len(args)-1

    # Standard GStreamer initialization
    GObject.threads_init()
    Gst.init(None)

    # Create gstreamer elements
    # Create Pipeline element that will form a connection of other elements
    print("Creating Pipeline \n ")
    pipeline = Gst.Pipeline()
    is_live = False

    if not pipeline:
        sys.stderr.write(" Unable to create Pipeline \n")
    print("Creating streamux \n ")

    # Create nvstreammux instance to form batches from one or more sources.
    streammux = Gst.ElementFactory.make("nvstreammux", "Stream-muxer")
    if not streammux:
        sys.stderr.write(" Unable to create NvStreamMux \n")

    pipeline.add(streammux)
    #############################################################################################
    for i in range(number_sources):
        print("Creating source_bin ", i, " \n ")
        uri_name = args[i + 1]
        if uri_name.find("rtsp://") == 0:
            is_live = True
        source_bin = create_source_bin(i, uri_name)
        if not source_bin:
            sys.stderr.write("Unable to create source bin \n")
        pipeline.add(source_bin)
        padname = "sink_%u" % i
        sinkpad = streammux.get_request_pad(padname)
        if not sinkpad:
            sys.stderr.write("Unable to create sink pad bin \n")
        srcpad = source_bin.get_static_pad("src")
        if not srcpad:
            sys.stderr.write("Unable to create src pad bin \n")
        srcpad.link(sinkpad)
    #########################################################################
    queue1=Gst.ElementFactory.make("queue","queue1")
    queue2=Gst.ElementFactory.make("queue","queue2")
    queue3=Gst.ElementFactory.make("queue","queue3")
    queue4=Gst.ElementFactory.make("queue","queue4")
    queue5=Gst.ElementFactory.make("queue","queue5")
    queue6=Gst.ElementFactory.make("queue","queue6")
    queue7=Gst.ElementFactory.make("queue","queue7")
    queue8=Gst.ElementFactory.make("queue","queue8")
    queue9=Gst.ElementFactory.make("queue","rtsp_queue")
    queue10=Gst.ElementFactory.make("queue","rtmp_queue")
    pipeline.add(queue1)
    pipeline.add(queue2)
    pipeline.add(queue3)
    pipeline.add(queue4)
    pipeline.add(queue5)
    pipeline.add(queue6)
    pipeline.add(queue7)
    pipeline.add(queue8)
    pipeline.add(queue9)
    pipeline.add(queue10)

    print("Creating Pgie \n ")
    pgie = Gst.ElementFactory.make("nvinfer", "primary-inference")
    if not pgie:
        sys.stderr.write(" Unable to create pgie \n")

    print("Creating nvtracker \n ")
    tracker = Gst.ElementFactory.make("nvtracker", "tracker")
    if not tracker:
        sys.stderr.write(" Unable to create tracker \n")

    sgie1 = Gst.ElementFactory.make("nvinfer", "secondary1-nvinference-engine")
    if not sgie1:
        sys.stderr.write(" Unable to make sgie1 \n")

    sgie2 = Gst.ElementFactory.make("nvinfer", "secondary2-nvinference-engine")
    if not sgie2:
        sys.stderr.write(" Unable to make sgie2 \n")

    print("Creating nvdsanalytics \n ")
    nvanalytics = Gst.ElementFactory.make("nvdsanalytics", "analytics")
    if not nvanalytics:
        sys.stderr.write(" Unable to create nvanalytics \n")
    nvanalytics.set_property("config-file", "config_nvdsanalytics.txt")

    print("Creating tiler \n ")
    tiler=Gst.ElementFactory.make("nvmultistreamtiler", "nvtiler")
    if not tiler:
        sys.stderr.write(" Unable to create tiler \n")

    print("Creating nvvidconv \n ")
    nvvidconv = Gst.ElementFactory.make("nvvideoconvert", "convertor")
    if not nvvidconv:
        sys.stderr.write(" Unable to create nvvidconv \n")

    print("Creating nvosd \n ")
    nvosd = Gst.ElementFactory.make("nvdsosd", "onscreendisplay")
    if not nvosd:
        sys.stderr.write(" Unable to create nvosd \n")
    nvosd.set_property('process-mode',OSD_PROCESS_MODE)
    nvosd.set_property('display-text',OSD_DISPLAY_TEXT)

    if(is_aarch64()):
        print("Creating transform \n ")
        transform=Gst.ElementFactory.make("nvegltransform", "nvegl-transform")
        if not transform:
            sys.stderr.write(" Unable to create transform \n")

    if is_live:
        print("At least one of the sources is live")
        streammux.set_property('live-source', 1)

    streammux.set_property('width', 1920)
    streammux.set_property('height', 1080)
    streammux.set_property('batch-size', number_sources)
    streammux.set_property('batched-push-timeout', 4000000)

    # set properties of pgie and sgie
    pgie.set_property('config-file-path', "dsnvanalytics_pgie_config.txt")
    sgie1.set_property('config-file-path', "sgie1_config.txt")
    sgie2.set_property('config-file-path', "sgie2_config.txt")
    pgie_batch_size=pgie.get_property("batch-size")
    if(pgie_batch_size != number_sources):
        print("WARNING: Overriding infer-config batch-size",pgie_batch_size," with number of sources ", number_sources," \n")
        pgie.set_property("batch-size",number_sources)

    tiler_rows=int(math.sqrt(number_sources))
    tiler_columns=int(math.ceil((1.0*number_sources)/tiler_rows))
    tiler.set_property("rows",tiler_rows)
    tiler.set_property("columns",tiler_columns)
    tiler.set_property("width", TILED_OUTPUT_WIDTH)
    tiler.set_property("height", TILED_OUTPUT_HEIGHT)
    # sink.set_property("qos",0)

    #Set properties of tracker
    config = configparser.ConfigParser()
    config.read('dsnvanalytics_tracker_config.txt')
    config.sections()

    # Newly coded-in pipeline add -- RJ
    nvvidconv_postosd = Gst.ElementFactory.make("nvvideoconvert", "convertor_postosd")
    if not nvvidconv_postosd:
        sys.stderr.write(" Unable to create nvvidconv_postosd \n")

    # Create a caps filter --RJ
    caps = Gst.ElementFactory.make("capsfilter", "filter")
    caps.set_property("caps", Gst.Caps.from_string("video/x-raw(memory:NVMM), format=I420"))

    # Make the encoder --RJ
    if codec == "H264":
        encoder = Gst.ElementFactory.make("nvv4l2h264enc", "encoder")
        print("Creating H264 Encoder")
    elif codec == "H265":
        encoder = Gst.ElementFactory.make("nvv4l2h265enc", "encoder")
        print("Creating H265 Encoder")
    if not encoder:
        sys.stderr.write(" Unable to create encoder")
    encoder.set_property('bitrate', bitrate)
    if is_aarch64():
        encoder.set_property('preset-level', 1)
        encoder.set_property('insert-sps-pps', 1)
        encoder.set_property('bufapi-version', 1)

    # Make the payload-encode video into RTP packets -- RJ
    if codec == "H264":
        rtppay = Gst.ElementFactory.make("rtph264pay", "rtppay")
        print("Creating H264 rtppay")
    elif codec == "H265":
        rtppay = Gst.ElementFactory.make("rtph265pay", "rtppay")
        print("Creating H265 rtppay")
    if not rtppay:
        sys.stderr.write(" Unable to create rtppay")

    # Make the parser -- RJ
    codecparse = Gst.ElementFactory.make("h264parse", "h264-parser")
    if not codecparse:
        sys.stderr.write(" Unable to create h264parse")

    # flvmux
    flvmux = Gst.ElementFactory.make("flvmux", "flvmux")
    if not flvmux:
        sys.stderr.write(" Unable to create flvmux")

    # Make the tee -- RJ
    tee=Gst.ElementFactory.make("tee", "nvsink-tee")
    if not tee:
        sys.stderr.write(" Unable to create tee \n")

    # Make the UDP sink
    # updsink_port_num = 5400
    # sink = Gst.ElementFactory.make("udpsink", "udpsink")
    # if not sink:
    #     sys.stderr.write(" Unable to create udpsink")
    #
    # sink.set_property('host', '224.224.255.255')
    # sink.set_property('port', updsink_port_num)
    # sink.set_property('async', False)
    # sink.set_property('sync', 1)

    # Make the rtmp sink
    sink2 = Gst.ElementFactory.make("rtmpsink", "rtmpsink")
    if not sink2:
        sys.stderr.write(" Unable to create rtmpsink")
    sink2.set_property('location', 'rtmp://localhost:1935/hls/url')

    # Make the eglsink
    sink1 = Gst.ElementFactory.make("nveglglessink", "nvvideo-renderer")
    if not sink1:
        sys.stderr.write(" Unable to create egl sink \n")
    sink1.set_property('sync', 0)

    for key in config['tracker']:
        if key == 'tracker-width' :
            tracker_width = config.getint('tracker', key)
            tracker.set_property('tracker-width', tracker_width)
        if key == 'tracker-height' :
            tracker_height = config.getint('tracker', key)
            tracker.set_property('tracker-height', tracker_height)
        if key == 'gpu-id' :
            tracker_gpu_id = config.getint('tracker', key)
            tracker.set_property('gpu_id', tracker_gpu_id)
        if key == 'll-lib-file' :
            tracker_ll_lib_file = config.get('tracker', key)
            tracker.set_property('ll-lib-file', tracker_ll_lib_file)
        if key == 'll-config-file' :
            tracker_ll_config_file = config.get('tracker', key)
            tracker.set_property('ll-config-file', tracker_ll_config_file)
        if key == 'enable-batch-process' :
            tracker_enable_batch_process = config.getint('tracker', key)
            tracker.set_property('enable_batch_process', tracker_enable_batch_process)
        if key == 'enable-past-frame' :
            tracker_enable_past_frame = config.getint('tracker', key)
            tracker.set_property('enable_past_frame', tracker_enable_past_frame)

    print("Adding elements to Pipeline \n")
    pipeline.add(pgie)
    pipeline.add(tracker)
    pipeline.add(sgie1)
    pipeline.add(sgie2)
    pipeline.add(nvanalytics)
    pipeline.add(tiler)
    pipeline.add(nvvidconv)
    pipeline.add(nvosd)
    pipeline.add(nvvidconv_postosd)
    pipeline.add(caps)
    pipeline.add(encoder)
    pipeline.add(tee)
    # pipeline.add(sink)
    pipeline.add(flvmux)
    pipeline.add(codecparse)
    pipeline.add(sink2)
    # transform only exists on Jetson (aarch64)
    if is_aarch64():
        pipeline.add(transform)
    pipeline.add(sink1)

    # We link elements in the following order:
    # sourcebin -> streammux -> nvinfer (pgie) -> nvtracker -> nvdsanalytics ->
    # nvinfer (sgie1, LPD) -> nvinfer (sgie2, LPR) -> nvtiler -> nvvideoconvert ->
    # nvdsosd -> tee -> {RTMP branch, EGL display branch}
    streammux.link(queue1)
    queue1.link(pgie)
    pgie.link(queue2)
    queue2.link(tracker)
    tracker.link(queue3)
    queue3.link(nvanalytics)
    nvanalytics.link(queue4)
    queue4.link(sgie1)
    sgie1.link(queue5)
    queue5.link(sgie2)
    sgie2.link(queue6)
    queue6.link(tiler)
    tiler.link(queue7)
    queue7.link(nvvidconv)
    nvvidconv.link(queue8)
    queue8.link(nvosd)
    nvosd.link(tee)
    queue9.link(nvvidconv_postosd)
    nvvidconv_postosd.link(caps)
    caps.link(encoder)
    encoder.link(codecparse)
    codecparse.link(flvmux)
    flvmux.link(sink2)

    # Start linking tee for egl -- RJ
    if is_aarch64():
        queue10.link(transform)
        transform.link(sink1)
    else:
        queue10.link(sink1)

    # Manually link the tee, which has request pads.
    # (Only two branches: queue7 and queue8 are already linked in the main chain.)
    tee_rtsp_pad = tee.get_request_pad("src_%u")
    print("rtsp tee pad branch obtained")
    queue9_pad = queue9.get_static_pad("sink")
    tee_elg_pad = tee.get_request_pad("src_%u")
    print("egl tee pad branch obtained")
    queue10_pad = queue10.get_static_pad("sink")
    tee_rtsp_pad.link(queue9_pad)
    tee_elg_pad.link(queue10_pad)

    # create an event loop and feed gstreamer bus messages to it
    loop = GObject.MainLoop()
    bus = pipeline.get_bus()
    bus.add_signal_watch()
    bus.connect ("message", bus_call, loop)

    print("\n *** DeepStream: Launched RTMP Streaming at rtmp://localhost:1935/live/file")
    print("Starting pipeline \n")

    # start playback and listen to events
    pipeline.set_state(Gst.State.PLAYING)
    try:
        loop.run()
    except:
        pass

    # cleanup
    print("Exiting app\n")
    pipeline.set_state(Gst.State.NULL)

def parse_args():
    global codec
    global bitrate
    codec = "H264"
    bitrate = 2000000
    return 0

if __name__ == '__main__':
    parse_args()
    sys.exit(main(sys.argv))
```
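The tracker section of the program repeats the same read-and-set pattern for every key. A table-driven version keeps the key-to-property mapping in one place. This is a sketch: `TRACKER_KEYS` and `tracker_properties` are hypothetical names, the property names are the ones the program already uses, and the example config values are placeholders for illustration only. In the real program each returned pair would be applied with `tracker.set_property(name, value)`.

```python
import configparser
from typing import List, Tuple

# Hypothetical mapping: config key -> (nvtracker property name, value type),
# using the same property names as the original loop.
TRACKER_KEYS = {
    "tracker-width": ("tracker-width", int),
    "tracker-height": ("tracker-height", int),
    "gpu-id": ("gpu_id", int),
    "ll-lib-file": ("ll-lib-file", str),
    "ll-config-file": ("ll-config-file", str),
    "enable-batch-process": ("enable_batch_process", int),
    "enable-past-frame": ("enable_past_frame", int),
}


def tracker_properties(config: configparser.ConfigParser) -> List[Tuple[str, object]]:
    """Translate the [tracker] section into (property, value) pairs, skipping unknown keys."""
    pairs = []
    for key, raw in config["tracker"].items():
        if key in TRACKER_KEYS:
            prop, convert = TRACKER_KEYS[key]
            pairs.append((prop, convert(raw)))
    return pairs


if __name__ == "__main__":
    # Placeholder values; the real file is dsnvanalytics_tracker_config.txt
    example = "\n".join([
        "[tracker]",
        "tracker-width=640",
        "tracker-height=384",
        "gpu-id=0",
        "ll-lib-file=path/to/tracker_lib.so",
        "enable-batch-process=1",
    ])
    config = configparser.ConfigParser()
    config.read_string(example)
    for prop, value in tracker_properties(config):
        print(prop, value)  # in the app: tracker.set_property(prop, value)
```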