- I am trying to train a UNet model with the TAO Toolkit, following this notebook (run in Colab): nvidia-tao/unet_isbi.ipynb at main · NVIDIA-AI-IOT/nvidia-tao · GitHub

- After training, I exported the .tlt model weights to .etlt with the following command (run in Colab):

!tao unet export

-e $SPECS_DIR/unet_train_resnet_unet_isbi.txt

-m $EXPERIMENT_DIR/isbi_experiment_unpruned/weights/model_isbi.tlt

-o $EXPERIMENT_DIR/isbi_experiment_unpruned/model_isbi.etlt

–engine_file $EXPERIMENT_DIR/isbi_experiment_unpruned/model_isbi.engine

–gen_ds_config

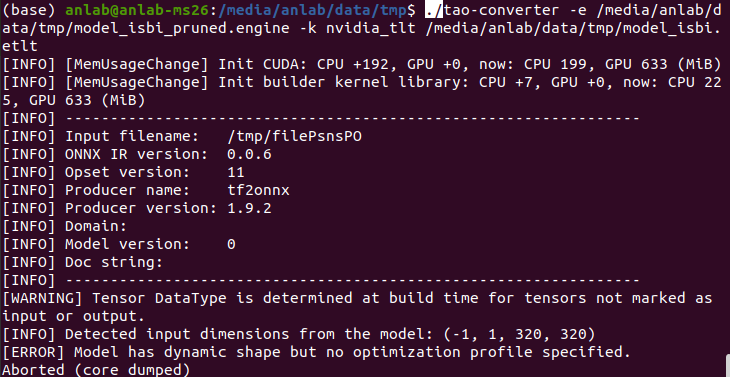

-k $KEY - I using Tao-Converter to gen file .engine, but got error “Model has dynamic shape but no optimization profile specified” (Run in my device):
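For context: the error message says the exported .etlt has a dynamic input dimension (usually the batch size) and no optimization profile was given. tao-converter accepts such a profile through `-p`, written as `<input_name>,<min_shape>,<opt_shape>,<max_shape>` with each shape as NxCxHxW. The Python sketch below only assembles that argument and a converter command line; it does not run anything. The input name `input_1`, the 3x320x320 input shape, the batch range 1..4 and the helper names (`shape_str`, `optimization_profile`, `converter_args`) are assumptions for illustration only. The real values should come from the training spec (model input height, width and channels) and from `tao-converter -h` for the installed version.

```python
from typing import List, Sequence


def shape_str(shape: Sequence[int]) -> str:
    """Format a shape such as (1, 3, 320, 320) as '1x3x320x320'."""
    return "x".join(str(dim) for dim in shape)


def optimization_profile(input_name: str, channels: int, height: int, width: int,
                         min_batch: int = 1, opt_batch: int = 1,
                         max_batch: int = 1) -> str:
    """Build '<input_name>,<min_shape>,<opt_shape>,<max_shape>' (NxCxHxW shapes).

    Only the batch dimension varies here; C, H and W stay fixed.
    """
    if not 1 <= min_batch <= opt_batch <= max_batch:
        raise ValueError("batch sizes must satisfy 1 <= min <= opt <= max")
    shapes = [(batch, channels, height, width)
              for batch in (min_batch, opt_batch, max_batch)]
    return ",".join([input_name] + [shape_str(s) for s in shapes])


def converter_args(key: str, etlt_path: str, engine_path: str,
                   profile: str, data_type: str = "fp32") -> List[str]:
    """Hypothetical helper: assemble a tao-converter argument list."""
    return [
        "tao-converter",
        "-k", key,          # same key that was used for export
        "-p", profile,      # optimization profile for the dynamic input
        "-t", data_type,    # engine precision (fp32 / fp16 / int8)
        "-e", engine_path,  # where to write the TensorRT engine
        etlt_path,          # the exported .etlt model (positional argument)
    ]


if __name__ == "__main__":
    # Assumed values: input name "input_1", 3x320x320 input, batch 1..4.
    profile = optimization_profile("input_1", 3, 320, 320,
                                   min_batch=1, opt_batch=1, max_batch=4)
    print(profile)  # input_1,1x3x320x320,1x3x320x320,4x3x320x320
    print(" ".join(converter_args("$KEY", "model_isbi.etlt",
                                  "model_isbi.engine", profile)))
```

If the spec uses single-channel (grayscale) input, `channels` would be 1 instead of 3; a profile that disagrees with the model's fixed C, H or W dimensions is expected to make the engine build fail.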

Thanks

• Hardware (T4/V100/Xavier/Nano/etc)

• Network Type (Detectnet_v2/Faster_rcnn/Yolo_v4/LPRnet/Mask_rcnn/Classification/etc)

• TLT Version (Please run “tlt info --verbose” and share “docker_tag” here)

• Training spec file (if available, please share here)

• How to reproduce the issue? (This is for errors. Please share the command line and the detailed log here.)