I’m now having some extremely weird bug where my lights receive wrong shadows from other lights… it’s random, seemingly dependent on shader/geometry complexity and the number of samples, though it looks the same as long as the input data doesn’t change. I triple-checked my structures, payload usage, etc, but I can’t figure it out. The code worked fine in OptiX 6. Main part of raygen looks roughly like this:

for (uint i = 0; i < num_lights; i++)

{

LocalLight lightData = localLights[i];

ColorData localColorData;

InitColorData(localColorData);

if (LightPoint(lightData, pos, normal, inputData, localColorData)) // compute unshadowed lighting

{

// Generate shadows

float shadow = 0;

bool shadowmaskFalloff = false;

float lightRadius = lightData.rangeParams.x;

float3 lightPos = lightData.position;

int lightSamples = lightData.lightSamples;

for (int j = 0; j < lightSamples; ++j)

{

float3 randomPos = GenRandomPos() * lightRadius + lightPos;

float3 nndir = normalize(randomPos - worldPos);

Ray occRay = make_Ray(rayOrigin, nndir, 1, epsilonFloatT(rayOrigin, nndir), length(randomPos - worldPos));

RayData occData;

occData.result = 1.0f;

unsigned int _payloadX = __float_as_uint(occData.result);

optixTrace( (OptixPayloadTypeID)0, // there is only 1

root,

occRay.origin,

occRay.direction,

occRay.tmin,

occRay.tmax,

0.0f,

(OptixVisibilityMask)1,

OPTIX_RAY_FLAG_NONE,

occRay.ray_type,

2, // there are 2 ray types total

occRay.ray_type,

_payloadX);

occData.result = __uint_as_float(_payloadX);

shadow += occData.result;

}

shadow /= lightSamples;

AddColorData(colorData, localColorData, shadow);

}

}

And the anyhit function is basically:

if (triAlpha == 0.0f)

{

optixIgnoreIntersection();

return;

}

optixSetPayload_0( __float_as_uint(0.0f) );

optixTerminateRay();

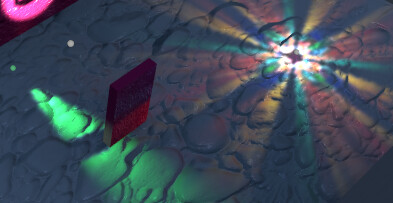

What happens is that suddenly light 2 may start using shadow from light 1 and so on - if I create another light, it shuffles differently again:

(The green spotlight is wrongly using the colored point light’s shadow)

It feels as if the optixTrace / hit programs are not synchronous (??) and I’m not getting the correct payload…

The payloads are configured like this:

// Only one float4 payload type for now

const int numPayloadValues = 4;

unsigned int basePayloadFlags = OPTIX_PAYLOAD_SEMANTICS_TRACE_CALLER_READ_WRITE |

OPTIX_PAYLOAD_SEMANTICS_CH_READ_WRITE |

OPTIX_PAYLOAD_SEMANTICS_AH_READ_WRITE |

OPTIX_PAYLOAD_SEMANTICS_MS_WRITE |

OPTIX_PAYLOAD_SEMANTICS_IS_NONE;

const unsigned int payloadSemantics[numPayloadValues] =

{

basePayloadFlags,

basePayloadFlags,

basePayloadFlags,

basePayloadFlags

};

OptixPayloadType payloadType = {};

payloadType.numPayloadValues = numPayloadValues;

payloadType.payloadSemantics = payloadSemantics;

OptixModuleCompileOptions moduleCompileOptions = {};

moduleCompileOptions.numPayloadTypes = 1;

moduleCompileOptions.payloadTypes = &payloadType;

(Basically everything is read-write for now except for IS which I don’t have and MS which never reads)

…I’m only using 1 value out of the declared 4 here. Just in case, I tried forcing numPayloadValues to 1 and it didn’t help.

…unrolling the samples look actually makes it work, but I don’t want to unroll it…