Hello guys,

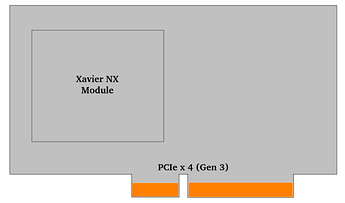

I made a PCIe board with a Xavier NX to transfer camera image data from the NX to a host PC.

The Xavier NX is set to EP (endpoint) mode and connects to the host PC (RP, root port) through virtual Ethernet over the PCIe port.

The connection works well, and I can now transfer camera image data from the NX to the host PC.

I have 4 cameras. Each camera's image data needs 1.3 Gbps, so a total of 5.2 Gbps of camera image data is transferred with a GStreamer UDP stream.

The GStreamer UDP stream is stable without any data loss.

So right now I can transfer a total of 5.2 Gbps of camera image data from the NX to the host PC with the GStreamer UDP stream.

This was tested on JetPack 4.4.1 and 4.6, with CPU performance set to maximum on both sides.

I have some questions about the PCIe EP virtual Ethernet bandwidth.

1. In the topic "Virtual Ethernet Performance for Jetson Xavier NX" (#8 by WayneWWW), an NVIDIA moderator says that he got around 5 Gbps with the EP interface.

He also says that his test environment was link width x8, link speed Gen4.

But the Xavier NX SoC has only a x4 PCIe link, so how could he test that?

If I use only a x4 PCIe link, can I still get around 5 Gbps, or only half?

2. Following from question 1: if I can only get half of 5 Gbps, how am I able to transfer 5.2 Gbps of camera image data in total with the GStreamer UDP stream? Is that even possible?
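To make questions 1 and 2 more concrete, here is a rough sketch of the raw PCIe link bandwidth for a few generation and lane combinations. It assumes the standard per-lane transfer rates and line encodings, ignores packet and protocol overhead, and says nothing about the virtual Ethernet driver itself, which may well be the real limit. The function name `raw_link_gbps` is just my own helper.

```python
# Rough raw PCIe link bandwidth per direction.
# Assumption: standard per-lane rates (GT/s) and line-encoding efficiency;
# TLP/DLLP overhead and driver limits are NOT modelled.
PCIE_GEN = {
    1: (2.5, 8 / 10),      # 8b/10b encoding
    2: (5.0, 8 / 10),      # 8b/10b encoding
    3: (8.0, 128 / 130),   # 128b/130b encoding
    4: (16.0, 128 / 130),  # 128b/130b encoding
}


def raw_link_gbps(gen: int, lanes: int) -> float:
    """Return the raw link bandwidth in Gbit/s for one direction."""
    rate_gt_s, efficiency = PCIE_GEN[gen]
    return rate_gt_s * efficiency * lanes


if __name__ == "__main__":
    for gen, lanes in [(4, 8), (4, 4), (3, 4)]:
        print(f"Gen{gen} x{lanes}: {raw_link_gbps(gen, lanes):.1f} Gbit/s raw")
```

Under these assumptions even a x4 link has raw bandwidth far above 5 Gbps, so I am not sure the lane count alone explains the numbers.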

-

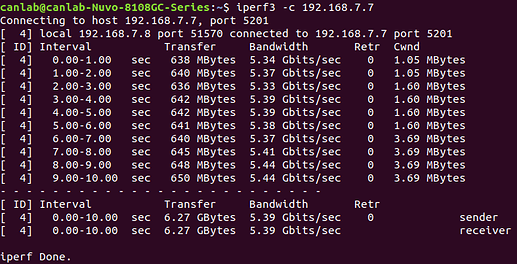

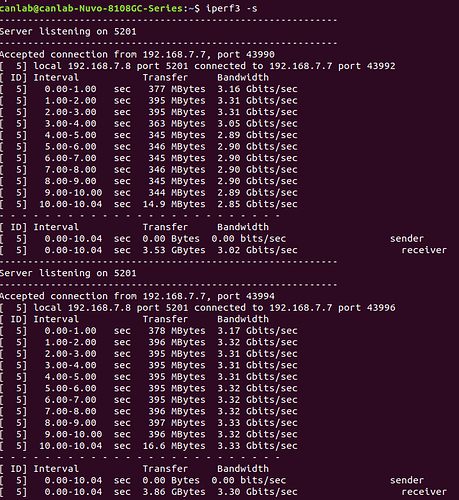

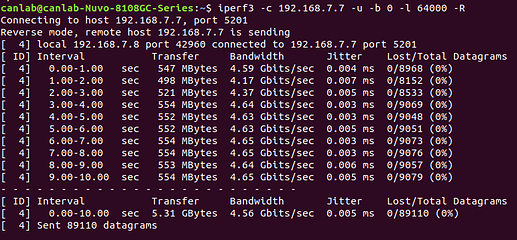

I tested bandwidth using iperf3, but result is odd.

It is slow in one direction.

Test is right? Why one direction is slow than other?

[ Case 1, use TCP ]

- Xavier NX (server) <== Host PC (client): this is fast. Bandwidth is around 5 to 6 Gbps.

- Host PC (server) <== Xavier NX (client): this is slow. Bandwidth is around 2 to 3 Gbps.

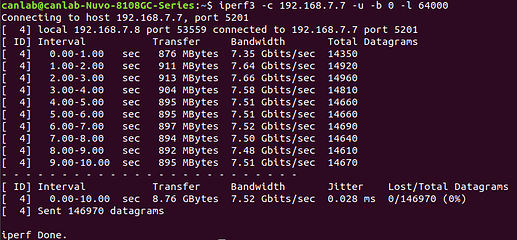

[ Case 2, use UDP ]

- Xavier NX (server) <== Host PC (client): this is fast. Bandwidth is around 7 to 8 Gbps.

- Host PC (server) <== Xavier NX (client): this is slow. Bandwidth is around 4 to 5 Gbps.
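To put the numbers from both cases next to the 5.2 Gbps I need, here is a small sketch. The ranges are only the rough iperf3 figures quoted in this post; the `verdict` helper and its labels are my own illustration, not part of any tool.

```python
# Compare the rough iperf3 ranges from this post with the required camera rate.
REQUIRED_GBPS = 4 * 1.3  # four cameras at 1.3 Gbps each

# (protocol, direction) -> (low, high) measured range in Gbps, as quoted in the post
MEASURED = {
    ("TCP", "Host PC -> Xavier NX"): (5.0, 6.0),
    ("TCP", "Xavier NX -> Host PC"): (2.0, 3.0),
    ("UDP", "Host PC -> Xavier NX"): (7.0, 8.0),
    ("UDP", "Xavier NX -> Host PC"): (4.0, 5.0),
}


def verdict(low: float, high: float, required: float) -> str:
    """Classify a measured range against the required rate."""
    if low >= required:
        return "enough"
    if high < required:
        return "not enough"
    return "borderline"


if __name__ == "__main__":
    for (proto, direction), (low, high) in MEASURED.items():
        result = verdict(low, high, REQUIRED_GBPS)
        print(f"{proto} {direction}: {low}-{high} Gbps -> {result}")
```

The direction that matters for my cameras is Xavier NX to host PC, and that is exactly the one where iperf3 reports less than what GStreamer apparently manages to send.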

4. Following from question 3, the bandwidth from the Xavier NX to the host PC is lower than 5 Gbps.

So how can I transfer 5.2 Gbps of camera image data in total with the GStreamer UDP stream?

Is iperf3 giving wrong results? Which application is good for measuring the exact bandwidth?
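One thing I could try is an iperf3 run that looks more like my real traffic: four parallel UDP streams at 1.3 Gbps each. Below is a sketch that only builds the command lines. It uses the iperf3 options `-c`, `-u`, `-b`, `-P`, `-t` and `-R`; the IP address and the helper name `iperf3_udp_cmd` are placeholders of mine, and I have not confirmed that this gives a more accurate number.

```python
import shlex


def iperf3_udp_cmd(server_ip, streams=4, per_stream_mbps=1300,
                   seconds=30, reverse=False):
    """Build an iperf3 client command for parallel UDP streams.

    -b is applied per stream when -P is used, so 4 x 1300M targets 5.2 Gbps.
    With reverse=True, -R makes the server send and the client receive.
    """
    cmd = [
        "iperf3", "-c", server_ip,
        "-u",                          # UDP instead of TCP
        "-b", f"{per_stream_mbps}M",   # target bitrate per stream
        "-P", str(streams),            # number of parallel streams
        "-t", str(seconds),            # test duration in seconds
    ]
    if reverse:
        cmd.append("-R")
    return cmd


if __name__ == "__main__":
    HOST_PC_IP = "192.168.2.1"  # placeholder address, not my real setup
    # Run on the Xavier NX with `iperf3 -s` already running on the host PC.
    print(shlex.join(iperf3_udp_cmd(HOST_PC_IP)))                # NX sends
    print(shlex.join(iperf3_udp_cmd(HOST_PC_IP, reverse=True)))  # host sends
```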

Also, has performance improved since that topic (Virtual Ethernet Performance for Jetson Xavier NX, #8 by WayneWWW)?

I would like to know the exact bandwidth and performance of the EP virtual Ethernet.

I am confused: if the bandwidth is under 5 Gbps, how can I be transferring 5.2 Gbps of data?

I am also confused about why my measured bandwidth is lower than what the NVIDIA moderator reported.

If I have misunderstood something or tested incorrectly, please let me know.

Thank you guys.