Dear NVIDIA team,

I’m working on generating a custom dataset with labeled pointclouds. As stated in the Range-Sensor API, the get_semantic_data function returns the semantic id of the hit for each beam in uint16.

To test that I build a very easy scene with a LiDAR and a camera inside the warehouse sample environment. The only semantic class is given to a cube. Here is a picture of the scene:

The camera view is as follow, on the right the lidar parameters are visible:

I added a transform component to the cube that changes the x,y and z coordinate. Not the rotation tho. To extract the Information I need I utilize the Python API. I randomize the scene in total 10 times and after each iteration I wait 5 seconds for the scene to fully load. This was stated to might be a problem here. Furthermore I included a time.sleep() as proposed in this forum entry.

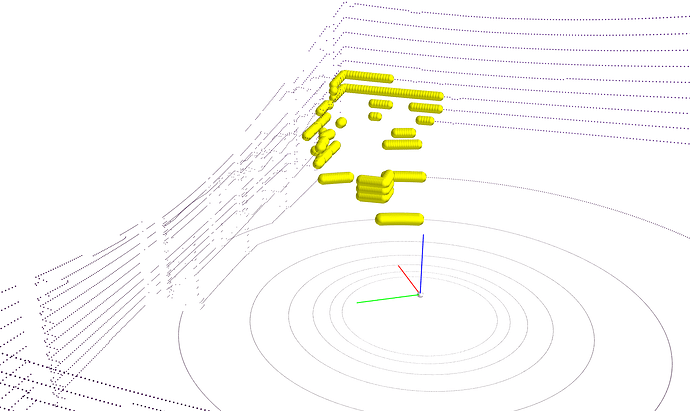

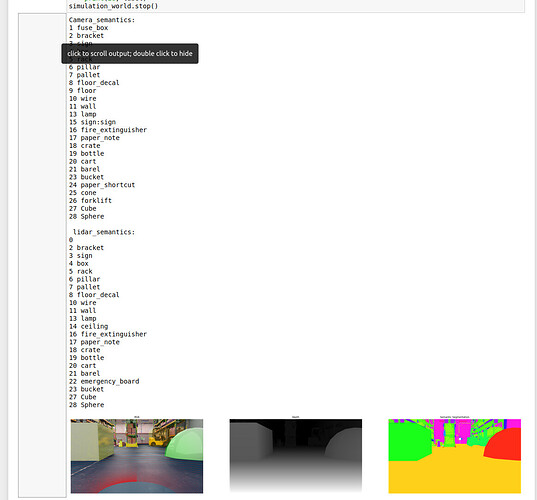

The problem I am facing is that the semantic data that is returned does not entirely match the cube! To show what I mean I plotted the pointcloud, (which looks very promising) and emphasized the specific points that are labeled to be “on the cube” in color yellow.

First of all the bbox2D & semantic groundtruth for scene 1 and 9

BBox-Scene1

Semantic-Scene 1

BBox-Scene 9

Semantic-Scene 9

Everything looks very nice. Now the plotted Pointcloud with emphasized labels in yellow for both scenes:

Pointcloud + labels for class cube in yellow - Scene 1:

Pointcloud + labels for class cube in yellow - Scene 2:

Finally the code that I am using to generate the data:

# Copyright (c) 2020, NVIDIA CORPORATION. All rights reserved.

#

# NVIDIA CORPORATION and its licensors retain all intellectual property

# and proprietary rights in and to this software, related documentation

# and any modifications thereto. Any use, reproduction, disclosure or

# distribution of this software and related documentation without an express

# license agreement from NVIDIA CORPORATION is strictly prohibited.

"""Generate offline synthetic dataset

"""

import asyncio

import copy

import os

import torch

import signal

import numpy as np

import carb

import omni

import time

from omni.isaac.python_app import OmniKitHelper

import random

import math

# Default rendering parameters

RENDER_CONFIG = {

"renderer": "RayTracedLighting",

"samples_per_pixel_per_frame": 12,

"headless": False,

"experience": f'{os.environ["EXP_PATH"]}/omni.isaac.sim.python.kit',

"width": 1280,

"height": 720,

}

class RandomScenario(torch.utils.data.IterableDataset):

def __init__(self, scenario_path, writer_mode, data_dir, max_queue_size, train_size, classes):

self.kit = OmniKitHelper(config=RENDER_CONFIG)

#Imports AFTER OmniKitHelper is initialized! -> https://docs.omniverse.nvidia.com/app_isaacsim/app_isaacsim/python_environment.html#omnikithelper

from omni.isaac.synthetic_utils import SyntheticDataHelper, DataWriter, KittiWriter, DomainRandomization

# import the python bindings to interact with lidar sensor

from omni.isaac.range_sensor import _range_sensor

self.sd_helper = SyntheticDataHelper()

self.dr_helper = DomainRandomization()

self.writer_mode = writer_mode

self.writer_helper = KittiWriter if writer_mode == "kitti" else DataWriter

self.dr_helper.toggle_manual_mode()

self.result = True

self.scenario_path = "/home/jan-oliver/Documents/Bachelor_Thesis/IsaacSim/ARIBIC_scene/sensorrack_test_labels.usd"

scenario_path = self.scenario_path

self.max_queue_size = max_queue_size

self.data_writer = None

self.data_dir = data_dir

self.train_size = train_size

self.classes = classes

self._setup_world(scenario_path)

self.cur_idx = 0

self.exiting = False

self._sensor_settings = {}

#Interface to communicate with the lidar

self.lidarInterface = _range_sensor.acquire_lidar_sensor_interface()

self.timeline = omni.timeline.get_timeline_interface()

self.stage = self.kit.get_stage()

signal.signal(signal.SIGINT, self._handle_exit)

def _handle_exit(self, *args, **kwargs):

print("exiting dataset generation...")

self.exiting = True

async def load_stage(self, path):

await omni.usd.get_context().open_stage_async(path)

def _setup_world(self, scenario_path):

# Load scenario and start playing

print("Loading scene from: ", scenario_path)

setup_task = asyncio.ensure_future(self.load_stage(scenario_path))

while not setup_task.done():

self.kit.update()

self.kit.setup_renderer()

self.kit.update()

self.kit.play()

def __iter__(self):

return self

def __next__(self):

''' Randomize the scene once '''

# step once and then wait for materials to load

self.dr_helper.randomize_once()

self.kit.update()

while self.kit.is_loading():

self.kit.update()

#Keep waiting for a couple more seconds.

update_task = asyncio.ensure_future(self.async_sleep(5))

print("Waiting for scene to fully load!")

while not update_task.done():

self.kit.update()

print("Finished waiting")

'''

....

Default implementation to obtain other sensor data using gt = self.sd_helper.get_groundtruth(gt_list, viewport)

...

'''

viewport_iface = omni.kit.viewport.get_viewport_interface()

viewport_name = "Viewport"

viewport = viewport_iface.get_viewport_window(viewport_iface.get_instance(viewport_name))

''' Get the Lidar data and combine it in one array. '''

#LiDAR setup for interaction

self.lidar_path = "/Root/ARIBIC/velodyne_puck"

if not self.lidarInterface.is_lidar_sensor(self.lidar_path):

print("ERROR: Lidar sensor is not valid!")

self.kit.shutdown()

# Note:

# get_intensity_data() -> Only returns a value of 255, if it was a hit or 0 if it was no hit. It is mostly a hit because the range is at 100 meters.

# Therefore this information is not very useful to us.

# get_depth_data -> The distance from the sensor to the hit for each beam in uint16 and scaled by min and max distance

# Dividing by 65535 and multiplying by 255 returns the intensitiy for a visualization in an image

#Sleep to copy gpu buffers? -> https://forums.developer.nvidia.com/t/get-sensor-data-from-isaac-sim/165169/2

#Keep waiting for a couple more seconds.

time.sleep(0.1)

pointcloud = self.lidarInterface.get_point_cloud_data(self.lidar_path) #In cm = Unit that is used in Isaac

pointcloud = self.get_meters_per_unit() * pointcloud

labels = self.lidarInterface.get_semantic_data(self.lidar_path)

intensities = self.lidarInterface.get_depth_data(self.lidar_path)

lidar_data = self.reshapeAndCombineLidarData(pointcloud,labels,intensities)

print("Point Cloud dimensions:", pointcloud.shape)

print("Labels array dimensions: ", labels.shape)

print("Intensities array dimensions: ", intensities.shape)

print("Combined array dimensions: ", lidar_data.shape)

#Store the Lidar data

lidar_data_storage_folder = self._output_folder + "/" + viewport_name +"/lidar"

self.storeLidarData(lidar_data, lidar_data_storage_folder)

'''

....

Calculate and store the camera extrinsic and intrinsic matrices

....

'''

self.cur_idx += 1

self.kit.update()

return image

async def async_sleep(self, duration):

await asyncio.sleep(duration)

def get_meters_per_unit(self):

from pxr import UsdGeom

stage = omni.usd.get_context().get_stage()

return UsdGeom.GetStageMetersPerUnit(stage)

'''

Stores the lidar data as .npy file in the specified folder

Input:

path -> Path to the !Folder! where the files are stored in. Do not specify the name for the file!

lidar_data -> Array containing the lidar data

Output:

None -> Just stores the data as path/{curent_iteration_index}.npy

'''

def storeLidarData(self, lidar_data, path):

# Create directory

try:

os.mkdir(path)

print("Directory " , path , " created ")

except FileExistsError:

print("Directory to store lidar data - " , path , " - already exists")

#Store the data

lidar_data_file_name = path + "/" + str(self.cur_idx) + ".npy"

with open(lidar_data_file_name, 'wb') as f:

np.save(f, lidar_data)

f.close()

print("Saved lidar data successfully as: ", lidar_data_file_name)

'''

Takes the lidar data and returns a combined 2d array

Input:

pointcloud [a,b,c] -> a = amount of horizontal sensor readings,

-> b = amount of vertical sensor readings,

-> c = amount of dimensions per point - x,y,z

labels [a,b] -> a = amount of horizontal sensor readings,

-> b = amount of vertical sensor readings

intensities [a,b] -> a = amount of horizontal sensor readings,

-> b = amount of vertical sensor readings

Output: [d,e] -> d = a * b , total amount of sensor readings

-> e = c + 1 + 1, namely x,y,z,intensity,label for specific reading

'''

def reshapeAndCombineLidarData(self, pointcloud, labels, intensities):

pc_resh = self.reshapePointcloud(pointcloud)

labl_resh = self.reshapeLabelsAndIntensity(labels)

int_resh = self.reshapeLabelsAndIntensity(intensities)

data = np.concatenate((pc_resh,int_resh, labl_resh),axis=1)

return data

'''

Takes the lidar pointcloud and returns reshaped array

Input:

pc [a,b,c] -> a = amount of horizontal sensor readings,

-> b = amount of vertical sensor readings,

-> c = amount of dimensions per point - x,y,z

Output: [d,e] -> d = a * b, amount of total sensor readings

-> e = c, amount of dimensions per point - x,y,z

'''

def reshapePointcloud(self, pc):

total_points = pc.shape[0] * pc.shape[1]

points_dimension = pc.shape[2]

pc_2d = np.reshape(pc, (total_points,points_dimension))

return pc_2d

'''

Takes the lidar labels or intensities and returns reshaped array

Input: [a,b] -> a = amount of horizontal sensor readings,

-> b = amount of vertical sensor readings

Output: [c,1] -> c = a * b, amount of total sensor readings

'''

def reshapeLabelsAndIntensity(self, array_2d):

total_points = array_2d.shape[0] * array_2d.shape[1]

pc_2d = np.reshape(array_2d, (total_points,1))

return pc_2d

if __name__ == "__main__":

"Typical usage"

import argparse

parser = argparse.ArgumentParser("Dataset generator")

parser.add_argument("--scenario", type=str, help="Scenario to load from omniverse server")

parser.add_argument("--num_frames", type=int, default=10, help="Number of frames to record")

parser.add_argument("--writer_mode", type=str, default="npy", help="Specify output format - npy or kitti")

parser.add_argument(

"--data_dir", type=str, default=os.getcwd() + "/output", help="Location where data will be output"

)

parser.add_argument("--max_queue_size", type=int, default=500, help="Max size of queue to store and process data")

parser.add_argument(

"--train_size", type=int, default=8, help="Number of frames for training set, works when writer_mode is kitti"

)

parser.add_argument(

"--classes",

type=str,

nargs="+",

default=[],

help="Which classes to write labels for, works when writer_mode is kitti. Defaults to all classes",

)

args = parser.parse_args()

dataset = RandomScenario(

args.scenario, args.writer_mode, args.data_dir, args.max_queue_size, args.train_size, args.classes

)

if dataset.result:

# Iterate through dataset and visualize the output

print("Loading materials. Will generate data soon...")

for image in dataset:

print("ID: ", dataset.cur_idx)

if dataset.cur_idx == args.num_frames:

break

if dataset.exiting:

break

# cleanup

dataset.kit.shutdown()

I really hope you can point out to me what I am missing :/. Thank you so much!