Hello,

I ran some iperf3 benchmarks between the Orin and a desktop PC with a 10GbE NIC, and found that when sending packets from the desktop to the Orin, CPU usage for ksoftirqd/0 shot up to 96% and bandwidth was limited to ~5.3Gb/s.
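A ksoftirqd/0 thread near 100% may indicate that receive-side softirq work (NET_RX) is landing almost entirely on CPU0. One way to check is to diff the NET_RX row of /proc/softirqs across two reads while the iperf3 test runs. The minimal Python sketch below assumes the standard Linux /proc/softirqs layout (a CPU header row, then one row per softirq type). The helper names `net_rx_per_cpu` and `sample_net_rx` are hypothetical.

```python
"""Sketch: per-CPU NET_RX softirq counts from /proc/softirqs (hypothetical helpers)."""
import time


def net_rx_per_cpu(softirqs_text: str) -> dict:
    """Parse the NET_RX row of /proc/softirqs into {"CPU0": count, ...}."""
    lines = softirqs_text.strip().splitlines()
    cpus = lines[0].split()  # header row: CPU0 CPU1 ...
    for line in lines[1:]:
        name, _, rest = line.partition(":")
        if name.strip() == "NET_RX":
            return dict(zip(cpus, (int(v) for v in rest.split())))
    raise ValueError("no NET_RX row found")


def sample_net_rx(interval: float = 2.0, path: str = "/proc/softirqs") -> dict:
    """Read the file twice, `interval` seconds apart, and return per-CPU deltas."""
    with open(path) as f:
        before = net_rx_per_cpu(f.read())
    time.sleep(interval)
    with open(path) as f:
        after = net_rx_per_cpu(f.read())
    return {cpu: after[cpu] - before[cpu] for cpu in after}
```

Running `print(sample_net_rx())` on the Orin during the desktop-to-Orin test would show whether nearly all NET_RX activity falls on CPU0.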

I sampled /proc/interrupts every 2 seconds and also collected data from top.
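To see which IRQs fire most between two of those samples, and on which CPUs, the snapshots can be diffed. The Python sketch below assumes each snapshot has been saved as its own text with the usual /proc/interrupts layout: a CPU header row, then one row per IRQ with per-CPU counts followed by a description. The function names are hypothetical, and some rows (such as ERR) carry fewer count columns, which the parser tolerates.

```python
"""Sketch: find the busiest IRQs between two /proc/interrupts snapshots."""


def parse_interrupts(text):
    """Return (cpu_names, {irq_label: (per_cpu_counts, description)})."""
    lines = text.strip("\n").splitlines()
    cpus = lines[0].split()  # header row: CPU0 CPU1 ...
    table = {}
    for line in lines[1:]:
        label, sep, rest = line.partition(":")
        if not sep:
            continue  # not an IRQ row
        fields = rest.split()
        counts = []
        for tok in fields[: len(cpus)]:
            if not tok.isdigit():
                break  # some rows (e.g. ERR) have fewer per-CPU columns
            counts.append(int(tok))
        table[label.strip()] = (counts, " ".join(fields[len(counts):]))
    return cpus, table


def busiest_irqs(before, after, top=5):
    """Rank IRQs by how many times they fired between the two snapshots."""
    cpus, t0 = parse_interrupts(before)
    _, t1 = parse_interrupts(after)
    rows = []
    for label, (c1, desc) in t1.items():
        c0 = t0.get(label, ([0] * len(c1), desc))[0]
        deltas = [b - a for a, b in zip(c0, c1)]
        rows.append((label, sum(deltas), dict(zip(cpus, deltas)), desc))
    rows.sort(key=lambda r: r[1], reverse=True)
    return rows[:top]
```

Each returned row is `(irq, total_delta, {cpu: delta}, description)`. If one IRQ's delta sits entirely on CPU0 during the receive test, that would be consistent with the ksoftirqd/0 load.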

recvtop.txt (137.7 KB)

recv interrupts.txt (897.2 KB)

When sending packets in the other direction, from the Orin to the desktop, I was able to get ~9.4Gb/s with only 10% CPU usage from the process irq/297-1-0008.

send interrupts.txt (299.1 KB)

sendtop.txt (138.3 KB)

Are these values expected? I imagine performance would be reduced further on carrier boards that have additional 10GbE ports.


Could you provide the steps you used to reproduce this issue?

I connected the Orin Dev Kit’s 10GbE port to an x86-64 desktop PC with its own 10GbE port, then used iperf3 to run some benchmarks.

To test the Orin’s maximum receive bandwidth, I ran iperf3 -s on the Orin and iperf3 -c [orin IP] on the desktop.

To test the Orin’s maximum send bandwidth, I ran iperf3 -s on the desktop and iperf3 -c [desktop IP] on the Orin.

I used top to view CPU usage and cat /proc/interrupts to view the interrupt counters.
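To make runs in both directions easier to repeat and compare, the client side can be scripted. The Python sketch below assumes iperf3 is on PATH and a server (iperf3 -s) is already running on the other machine. It uses the real iperf3 flags -c, -t, -J (JSON output) and -R (reverse mode: the server sends, the client receives), and reads `end.sum_received.bits_per_second` from the TCP JSON report. The helper names are hypothetical.

```python
import json
import subprocess


def received_gbps(iperf3_json: str) -> float:
    """Receiver-side TCP throughput in Gb/s from `iperf3 -J` output."""
    result = json.loads(iperf3_json)
    return result["end"]["sum_received"]["bits_per_second"] / 1e9


def run_client(server_ip: str, seconds: int = 10, reverse: bool = False) -> float:
    """Run an iperf3 client (iperf3 must be on PATH and a server running)."""
    cmd = ["iperf3", "-c", server_ip, "-t", str(seconds), "-J"]
    if reverse:
        cmd.append("-R")  # server sends, client receives
    out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    return received_gbps(out)
```

For example, with iperf3 -s running on the Orin, `run_client("192.0.2.10")` on the desktop would measure the Orin's receive rate, and `run_client("192.0.2.10", reverse=True)` its send rate. The address is a placeholder.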

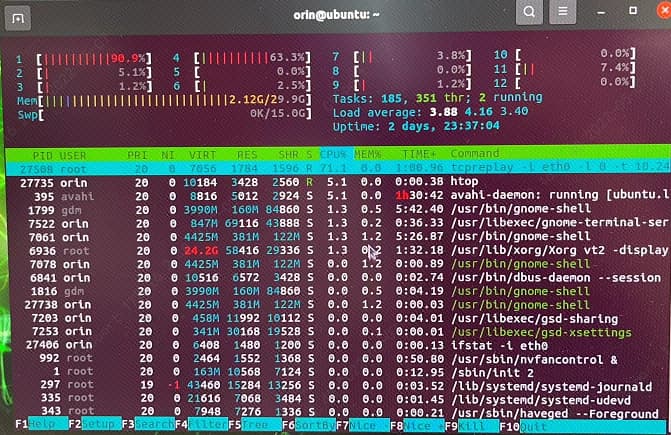

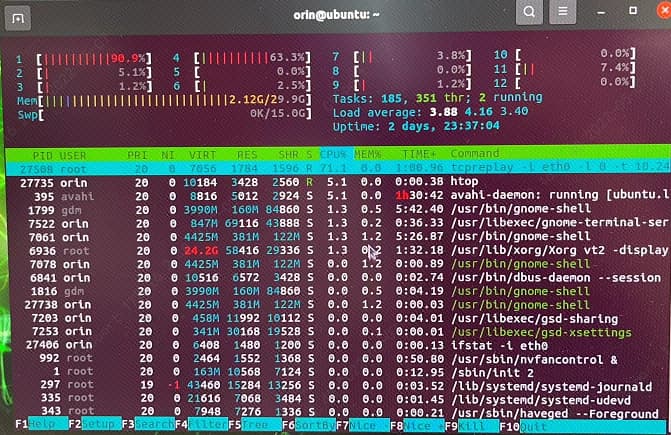

I have the same problem. With two Orin Dev Kits connected to each other, the iperf benchmark only reaches 5Gb/s, and CPU 1 usage is 90%.

Hello, I'm seeing the same problem. Did you find a solution?


No solutions yet.

@WayneWWW

Is this issue being tracked? What bandwidth has Nvidia measured during internal tests, including with multiple 10GbE interfaces in parallel?

I am having the same problem. Any update on this issue would be appreciated.