Hello Everyone!

I’m trying to stream video from virtual cameras placed inside an Omniverse scene of a virtual warehouse. I’ve tried using the ROS2 bridge to publish the camera data to topics, and that works.

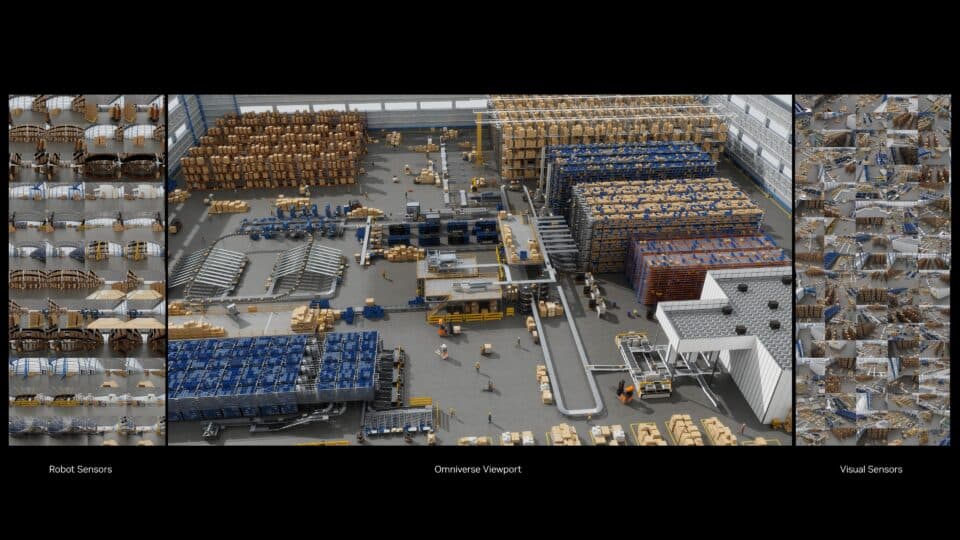

My task is multi-view object detection with Metropolis, and possibly its Video Search and Summarization (VSS) feature: identifying and tracking multiple objects from multiple camera angles in the factory, similar to the videos showing Omniverse digital twins and the Mega blueprint. Some cameras are statically placed to give an overview of the factory, and others are mounted on robots and move with them.

Is it recommended to publish camera data via ROS2 with multiple cameras, as in the example image attached? Would the processing overhead be too high with ROS2? And to integrate Metropolis, would Metropolis subscribe to the camera topics to perform real-time processing on the camera feeds?
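For a sense of scale on the overhead question, here is a rough sketch of the raw (uncompressed) image bandwidth that several cameras would produce. The resolution, pixel format, frame rate, and camera count are hypothetical placeholders rather than my actual settings, and the estimate ignores ROS2 serialization and transport overhead.

```python
def raw_stream_mb_per_s(width, height, bytes_per_pixel, fps, num_cameras):
    """Estimate uncompressed image bandwidth in MB/s (1 MB = 10**6 bytes)."""
    bytes_per_frame = width * height * bytes_per_pixel
    return bytes_per_frame * fps * num_cameras / 1e6


# Hypothetical setup: four 1280x720 cameras, 3 bytes per pixel (e.g. RGB8), 30 fps.
total = raw_stream_mb_per_s(width=1280, height=720, bytes_per_pixel=3,
                            fps=30, num_cameras=4)
print(f"{total:.1f} MB/s")  # prints "331.8 MB/s"
```

Even with these modest assumed settings, the raw data rate is in the hundreds of MB/s, which is why I’m asking whether ROS2 is the right transport here.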

Or would you recommend the web-viewer-sample (https://github.com/NVIDIA-Omniverse/web-viewer-sample) as a template that I could modify for my requirements? Would additional code, libraries (such as the Viewport API in Omniverse Kit), and extensions be needed to build something like the attached image?

Also, can you recommend ways to extract frames directly from the GPU, since virtual camera renders are ultimately stored in GPU memory as images/textures? Would this be a good way to bypass the limitations of ROS and WebRTC for multi-camera real-time streaming from Omniverse/Isaac Sim?

For Omniverse digital twin development, is it recommended to run Metropolis modules as extensions or plugins, or to host them on the same cloud server as the Omniverse/Isaac Sim digital twin? Are there ways to stream the camera feeds via WebSocket or other methods so that Metropolis modules running elsewhere can easily access them? I would appreciate pointers to any materials on these problems, such as the connection/communication between Omniverse and Metropolis for digital twin development, since I couldn’t find direct answers.