Hi everyone,

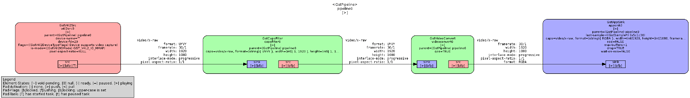

Here I describe a workaround for the HDMI2CSI (tc358840) module that fetches frames from the source and converts them from YUV to RGBA on the GPU. For this purpose we use the OpenMAX implementation for the TX1. We added a new class, GStreamerCameraOpenMAXFrameSourceImpl, to GStreamerCameraFrameSourceImpl.cpp. This class implements a GStreamer path that fetches the frame in YUV and converts it to RGBA via nvvidconv. For now it works with VisionWorks 1.4.3 and a 1920x1080@30fps camera source (BlackMagic 4k Micro Camera).
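For readers unfamiliar with the formats involved, here is a minimal CPU-side sketch of what a UYVY to RGBA conversion computes. It is only an illustration: on the TX1 the conversion is done by the GStreamer path described in this post, and the color matrix used there is not stated here. The BT.601 limited-range coefficients and the helper names (`clampToByte`, `yuvToRgba`, `uyvyToRgba`) are assumptions made for this example.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Clamp an intermediate value into the 0..255 range of one channel.
static std::uint8_t clampToByte(int v) {
    return static_cast<std::uint8_t>(std::min(255, std::max(0, v)));
}

// Convert one YUV sample to RGBA (alpha fixed to 255).
// Assumption: BT.601 limited range, common 8-bit fixed-point coefficients.
static void yuvToRgba(int y, int u, int v, std::uint8_t* out) {
    const int c = y - 16, d = u - 128, e = v - 128;
    out[0] = clampToByte((298 * c + 409 * e + 128) / 256);           // R
    out[1] = clampToByte((298 * c - 100 * d - 208 * e + 128) / 256); // G
    out[2] = clampToByte((298 * c + 516 * d + 128) / 256);           // B
    out[3] = 255;                                                    // A
}

// UYVY packs two pixels into four bytes: U0 Y0 V0 Y1.
// Both pixels share the same U and V samples.
std::vector<std::uint8_t> uyvyToRgba(const std::vector<std::uint8_t>& uyvy) {
    std::vector<std::uint8_t> rgba(uyvy.size() * 2);
    for (std::size_t i = 0; i + 3 < uyvy.size(); i += 4) {
        const int u = uyvy[i], y0 = uyvy[i + 1];
        const int v = uyvy[i + 2], y1 = uyvy[i + 3];
        yuvToRgba(y0, u, v, &rgba[i * 2]);
        yuvToRgba(y1, u, v, &rgba[i * 2 + 4]);
    }
    return rgba;
}
```

Under these assumptions, the four bytes `{128, 16, 128, 235}` describe a black pixel followed by a white pixel. Doing this per pixel on the CPU for 1920x1080@30fps is expensive, which is why the workaround keeps the conversion on the GPU side.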

We modify three files:

- ~/VisionWorks/libvisionworks-nvxio-/src/FrameSource/GStreamer/GStreamerCameraFrameSourceImpl.hpp

- ~/VisionWorks/libvisionworks-nvxio-/src/FrameSource/GStreamer/GStreamerCameraFrameSourceImpl.cpp

- ~/VisionWorks/libvisionworks-nvxio-/src/FrameSource/FrameSource.cpp

The modifications are shown below:

- GStreamerCameraFrameSourceImpl.hpp

34a35

> #include "GStreamerEGLStreamSinkFrameSourceImpl.hpp"

- GStreamerCameraFrameSourceImpl.cpp - added a new class that uses nvvidconv, as well as the needed headers. This part should do the same as the command:

gst-launch-1.0 v4l2src ! 'video/x-raw, width=1920, height=1080, framerate=30/1, format=UYVY' ! nvvidconv ! 'video/x-raw(memory:NVMM), width=1920, height=1080, framerate=30/1, format=I420' ! nvvideosink

> #include "GStreamerOpenMAXFrameSourceImpl.hpp" //added include for OpenMAX

>

> #include "NVXIO/Utility.hpp" //added

> #include "NVXIO/Application.hpp" //added

>

>

> //------Added camera /dev/video0 for HDMI2CSI--------

> GStreamerCameraOpenMAXFrameSourceImpl::GStreamerCameraOpenMAXFrameSourceImpl(vx_context vxcontext) :

> GStreamerEGLStreamSinkFrameSourceImpl(vxcontext, FrameSource::VIDEO_SOURCE, "GStreamerCameraOpenMAXFrameSource", true)

> //fileName(filename)

> {

> }

>

> //-----------Added camera /dev/video0 for HDMI2CSI-----------

>

> bool GStreamerCameraOpenMAXFrameSourceImpl::InitializeGstPipeLine()

> {

> GstStateChangeReturn status;

> end = true;

>

> pipeline = GST_PIPELINE(gst_pipeline_new(NULL));

> if (pipeline == NULL)

> {

> NVXIO_PRINT("Cannot create Gstreamer pipeline");

> return false;

> }

>

> bus = gst_pipeline_get_bus(GST_PIPELINE (pipeline));

>

> // create v4l2src

> GstElement * v4l2src = gst_element_factory_make("v4l2src", NULL);

> if (v4l2src == NULL)

> {

> NVXIO_PRINT("Cannot create v4l2src");

> FinalizeGstPipeLine();

>

> return false;

> }

>

> std::ostringstream cameraDev;

> cameraDev << "/dev/video0";

> g_object_set(G_OBJECT(v4l2src), "device", cameraDev.str().c_str(), NULL);

>

> gst_bin_add(GST_BIN(pipeline), v4l2src);

>

>

> // create nvvidconv

> GstElement * nvvidconv = gst_element_factory_make("nvvidconv", NULL);

> if (nvvidconv == NULL)

> {

> NVXIO_PRINT("Cannot create nvvidconv");

> FinalizeGstPipeLine();

>

> return false;

> }

>

> gst_bin_add(GST_BIN(pipeline), nvvidconv);

>

> // create nvvideosink element

> GstElement * nvvideosink = gst_element_factory_make("nvvideosink", NULL);

> if (nvvideosink == NULL)

> {

> NVXIO_PRINT("Cannot create nvvideosink element");

> FinalizeGstPipeLine();

> return false;

> }

>

> g_object_set(G_OBJECT(nvvideosink), "display", context.display, NULL);

> g_object_set(G_OBJECT(nvvideosink), "stream", context.stream, NULL);

> g_object_set(G_OBJECT(nvvideosink), "fifo", fifoMode, NULL);

>

> gst_bin_add(GST_BIN(pipeline), nvvideosink);

>

> //HDMI2CSI

> std::ostringstream stream;

> stream << "video/x-raw, format=UYVY, width=1920, height=1080, framerate=30/1;";

> //TODO: format, resolution and framerate should be configurable

>

> std::unique_ptr<GstCaps, GStreamerObjectDeleter> caps_v42lsrc(gst_caps_from_string(stream.str().c_str()));

>

> if (!caps_v42lsrc)

> {

> NVXIO_PRINT("Failed to create caps");

> FinalizeGstPipeLine();

>

> return false;

> }

>

> // link elements

> if (!gst_element_link_filtered(v4l2src, nvvidconv, caps_v42lsrc.get()))

> {

> NVXIO_PRINT("GStreamer: cannot link v4l2src -> nvvidconv using caps");

> FinalizeGstPipeLine();

>

> return false;

> }

>

>

> std::unique_ptr<GstCaps, GStreamerObjectDeleter> caps_nvvidconv(

> //HDMI2CSI

> gst_caps_from_string("video/x-raw(memory:NVMM), format=(string){I420}, width=1920, height=1080, framerate=30/1"));

> //TODO: framerate and resolution should be configurable

>

> // link nvvidconv using caps

> if (!gst_element_link_filtered(nvvidconv, nvvideosink, caps_nvvidconv.get()))

> {

> NVXIO_PRINT("GStreamer: cannot link nvvidconv -> nvvideosink");

> FinalizeGstPipeLine();

>

> return false;

> }

>

> // Force pipeline to play video as fast as possible, ignoring system clock

> gst_pipeline_use_clock(pipeline, NULL);

>

> status = gst_element_set_state(GST_ELEMENT(pipeline), GST_STATE_PLAYING);

>

> handleGStreamerMessages();

> if (status == GST_STATE_CHANGE_ASYNC)

> {

> // wait for status update

> status = gst_element_get_state(GST_ELEMENT(pipeline), NULL, NULL, GST_CLOCK_TIME_NONE);

> }

> if (status == GST_STATE_CHANGE_FAILURE)

> {

> NVXIO_PRINT("GStreamer: unable to start playback");

> FinalizeGstPipeLine();

>

> return false;

> }

>

> // GST_DEBUG_BIN_TO_DOT_FILE(GST_BIN(pipeline), GST_DEBUG_GRAPH_SHOW_ALL, "gst_pipeline");

>

> if (!updateConfiguration(nvvidconv, configuration))

> {

> FinalizeGstPipeLine();

> return false;

> }

>

> end = false;

>

> return true;

> }

> //--------------end HDMI2CSI-------------------
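The two TODO comments above note that format, resolution and framerate are hard-coded. A minimal sketch of one way to make them configurable is to build both caps strings from a small settings struct. The struct `CaptureSettings` and the helpers `makeSourceCaps` and `makeConverterCaps` are hypothetical names for this example, not part of VisionWorks or NVXIO; the defaults reproduce the strings used in the code above (without the trailing `;` of the first one).

```cpp
#include <sstream>
#include <string>

// Hypothetical settings struct (not part of VisionWorks).
// Defaults match the values hard-coded in the workaround.
struct CaptureSettings {
    std::string format = "UYVY";
    unsigned width = 1920;
    unsigned height = 1080;
    unsigned fps = 30;
};

// Caps for the v4l2src -> nvvidconv link (frames in system memory).
std::string makeSourceCaps(const CaptureSettings& s) {
    std::ostringstream caps;
    caps << "video/x-raw, format=" << s.format
         << ", width=" << s.width
         << ", height=" << s.height
         << ", framerate=" << s.fps << "/1";
    return caps.str();
}

// Caps for the nvvidconv -> nvvideosink link (I420 in NVMM memory).
std::string makeConverterCaps(const CaptureSettings& s) {
    std::ostringstream caps;
    caps << "video/x-raw(memory:NVMM), format=(string){I420}"
         << ", width=" << s.width
         << ", height=" << s.height
         << ", framerate=" << s.fps << "/1";
    return caps.str();
}
```

The resulting strings could then be passed to gst_caps_from_string() in place of the literals, for example `gst_caps_from_string(makeSourceCaps(settings).c_str())`. Whether the tc358840 driver accepts other resolutions or framerates would still need to be checked on the device.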

- FrameSource.cpp - in order to activate our workaround implementation, we change this file:

306c306

< return makeUP<GStreamerCameraFrameSourceImpl>(context, static_cast<uint>(idx));

> return makeUP<GStreamerCameraOpenMAXFrameSourceImpl>(context); //index is already 0 (/dev/video0) in the nvvidconv implementation

After this, rebuild VisionWorks.

Greetings and

Merry Christmas and Happy New Year :)