jwson3

July 20, 2022, 8:00am


I am using Isaac Sim on AWS.

First, I run the Isaac Sim container with an interactive Bash session (Terminal 1 on AWS):

```shell
$ sudo docker run --name isaac-sim --entrypoint bash -it --gpus all -e "ACCEPT_EULA=Y" --rm --network=host nvcr.io/nvidia/isaac-sim:2022.1.0
```

Then I start Isaac Sim in native livestream mode with ./runheadless.native.sh (Terminal 1 on AWS).

Then I launch the Omniverse Streaming Client on my local machine.

Then I open another terminal into the running Docker container (Terminal 2 on AWS):

```shell
$ sudo docker exec -it isaac-sim /bin/bash
```

But when I start a Python script inside the container (Terminal 2 on AWS), it fails to run.

Is it possible to run a Python environment (not the Script Editor) in a Docker container?

Hi. Does the Python script work if you do not run ./runheadless.native.sh first, or if you run the Python script in a second container?

jwson3

July 23, 2022, 8:29am


After several attempts, I was able to run a Python script. But I have a question: I am following the Creating New RL Environment tutorial in the Isaac Gym tutorials. After RL training of the cartpole, headless=False should be set when running inference in order to visualize it. In this case, how can I visualize the cartpole policy running? I tried to connect to the Docker container on AWS with the Omniverse Streaming Client, but I failed to connect.

To view the Isaac Sim UI when using a container, set headless=True and call enable_extension("omni.kit.livestream.native"). Take a look at livestream.py as an example.
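A minimal sketch of a standalone script along those lines, assuming Isaac Sim 2022.1 launched via ./python.sh inside the container, where `omni.isaac.kit.SimulationApp` is importable and `enable_extension` can only be imported once the app exists. This is not the shipped livestream.py (which sets more options); `launch_livestream_app` and `_enable_extension` are hypothetical helper names, and the app class is passed in so the start-up order can be checked without Isaac Sim.

```python
import importlib.util

# Extension named in the reply above.
LIVESTREAM_EXTENSION = "omni.kit.livestream.native"


def launch_livestream_app(simulation_app_cls, enable_extension):
    """Start Kit headless, then enable native livestreaming (hypothetical helper)."""
    # The app has to exist before other omni.* modules are imported,
    # so it is created first and the extension is enabled afterwards.
    app = simulation_app_cls({"headless": True})
    enable_extension(LIVESTREAM_EXTENSION)
    return app


def _enable_extension(name):
    # Imported lazily: omni.isaac.core only loads once a SimulationApp is running.
    from omni.isaac.core.utils.extensions import enable_extension

    enable_extension(name)


def main():
    from omni.isaac.kit import SimulationApp

    app = launch_livestream_app(SimulationApp, _enable_extension)
    # Keep updating the app so frames are rendered and sent to the streaming client.
    while app.is_running():
        app.update()
    app.close()


# Only launch when run with Isaac Sim's Python, where the omni package is importable.
if __name__ == "__main__" and importlib.util.find_spec("omni") is not None:
    main()
```

With the script running, connect the Omniverse Streaming Client to the instance as before. In the RL case, VecEnvBase already owns a SimulationApp (exposed as env._simulation_app), which may be why adding a second one fails; there, only the enable_extension call should be needed.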


jwson3

July 23, 2022, 4:28pm


Thanks so much. I will try it now.

jwson3

July 24, 2022, 1:28am


Finally, I can run the Python script and visualize the simulation. Thanks so much.


jwson3

July 25, 2022, 2:49pm


I have one more question. I am trying to use the RL extension in the Docker container. The tutorial code is below:

```python
# create isaac environment
from omni.isaac.gym.vec_env import VecEnvBase

env = VecEnvBase(headless=False)

# create task and register task
from cartpole_task import CartpoleTask

task = CartpoleTask(name="Cartpole")
env.set_task(task, backend="torch")

# import stable baselines
from stable_baselines3 import PPO

# Run inference on the trained policy
model = PPO.load("ppo_cartpole")
env._world.reset()
obs = env.reset()
while env._simulation_app.is_running():
    action, _states = model.predict(obs)
    obs, rewards, dones, info = env.step(action)

env.close()
```

In this case, how can I enable livestreaming for remote access?

For livestreaming, SimulationApp should be used, so I tried to add a SimulationApp, but it failed.

Sorry for bothering you. I tried to read the RL extension API documentation, but a detailed explanation is missing.

Hi.

Try running it headless:

```python
env = VecEnvBase(headless=True)
```

Then also enable the livestream server:

```python
from omni.isaac.core.utils.extensions import enable_extension

enable_extension("omni.kit.livestream.native")
```


jwson3

July 25, 2022, 4:22pm


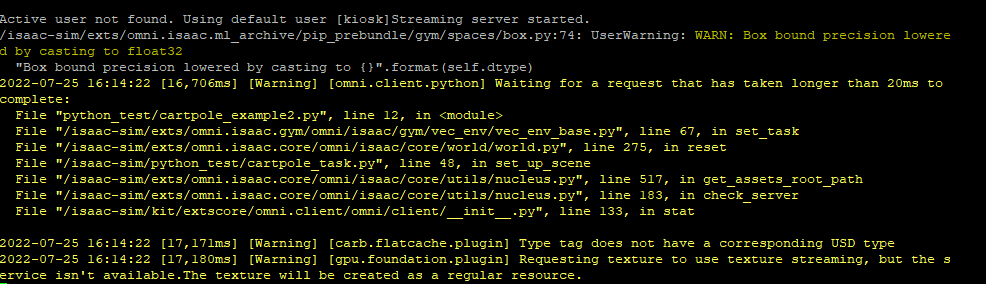

I tried it, but it is not working properly.

The Omniverse Streaming Client connects, but the objects are not loaded and the “start” button cannot be pressed.

The terminal output is as below.

But when I press Ctrl-C in the terminal, the objects appear on the display. However, nothing works after that because the app shuts down.

Is there any solution?

Hi. Did you get the issue resolved yet?

jwson3

August 2, 2022, 2:27am


Hi, I haven’t solved it yet.

The same problem still occurs.

How can I get your email?

My code is below:

```python
# create isaac environment
from omni.isaac.gym.vec_env import VecEnvBase

env = VecEnvBase(headless=True)

from omni.isaac.core.utils.extensions import enable_extension

enable_extension("omni.kit.livestream.native")

# create task and register task
from cartpole_task import CartpoleTask

task = CartpoleTask(name="Cartpole")
env.set_task(task, backend="torch")

# import stable baselines
from stable_baselines3 import PPO

# Run inference on the trained policy
model = PPO.load("ppo_cartpole")
env._world.reset()
obs = env.reset()
while env._simulation_app.is_running():
    action, _states = model.predict(obs)
    obs, rewards, dones, info = env.step(action)

env.close()
```

Thanks. Is this the edited cartpole_task.py from the RL example?

I’ll try this and file a bug.

jwson3

August 2, 2022, 2:15pm


Thank you, as always, for your hard work.


I think I found what’s missing: the update() call.

```python
while env._simulation_app.is_running():
    action, _states = model.predict(obs)
    obs, rewards, dones, info = env.step(action)
    env._simulation_app.update()

env.close()
```
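Why the extra call seems to matter: with headless=True, env.step() presumably advances the simulation without rendering, so nothing new reaches the streaming client until the Kit app itself is updated (this matches the symptom of the scene only appearing on Ctrl-C). Below is a minimal sketch of the same rollout loop as a reusable function; `run_policy` is a hypothetical name, and `env`, `model`, and `app` are duck-typed stand-ins for the VecEnvBase environment, the loaded PPO model, and `env._simulation_app`.

```python
def run_policy(env, model, app):
    """Roll out a trained policy, updating the Kit app after every step.

    Returns the number of environment steps taken.
    """
    obs = env.reset()
    steps = 0
    try:
        while app.is_running():
            action, _states = model.predict(obs)
            obs, _rewards, _dones, _info = env.step(action)
            # Pump the app so the streamed viewport shows the new state.
            app.update()
            steps += 1
    finally:
        # Shut the environment down even if the loop raises.
        env.close()
    return steps
```

With the objects from the code above, this would be called as env._world.reset() followed by run_policy(env, model, env._simulation_app).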


jwson3

August 3, 2022, 1:10am


It works for me now. Thanks so much!


system

August 17, 2022, 1:10am


This topic was automatically closed 14 days after the last reply. New replies are no longer allowed.