Hi all,

I’m working on a robotics project where I’m integrating NVBlox with vSLAM (using isaac_ros_visual_slam) and robot_localization to improve pose estimation. The main goal is to compensate for odometry drift in the NVBlox-generated map.

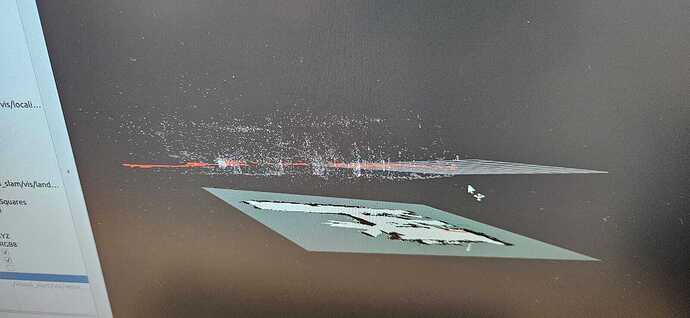

Everything launches and runs as expected, and the map initially builds correctly. However, over time, significant drift occurs—particularly an unstable map→odom transform—which causes the entire map to rotate or shift independently of the robot’s actual motion. I also tried using only the robot’s odometry, but the issue persisted. Additionally, I tested with two different RealSense cameras, the D435i and the D455, one at a time.

I am using the NVIDIA container on a Jetson AGX Orin. I started by running the RealSense + NVBlox example from the official repository, but I experienced some drift in the map. Because of this, I would like to fuse visual odometry with the robot’s odometry to improve accuracy.

I’ve attached my launch and config files below.

Thanks in advance for your help!

vSLAM launch

import launch

from launch_ros.actions import ComposableNodeContainer, Node

from launch_ros.descriptions import ComposableNode

def generate_launch_description():

"""Launch file which brings up visual slam node configured for RealSense."""

realsense_camera_node = Node(

name='camera',

namespace='camera',

package='realsense2_camera',

executable='realsense2_camera_node',

parameters=[{

'enable_infra1': True,

'enable_infra2': True,

'enable_color': True,

'enable_depth': True,

'pointcloud.enable': True,

'depth_module.emitter_enabled': 0,

'depth_module.emitter_on_off': False,

'depth_module.profile': '848x480x90',

'enable_gyro': True,

'enable_accel': True,

'gyro_fps': 200,

'accel_fps': 200,

'unite_imu_method': 2

}],

)

visual_slam_node = ComposableNode(

name='visual_slam_node',

package='isaac_ros_visual_slam',

plugin='nvidia::isaac_ros::visual_slam::VisualSlamNode',

parameters=[{

'initial_reset': True,

'enable_localization_n_mapping': True,

'enable_image_denoising': False,

'enable_ground_constraint_in_slam': True,

'enable_ground_constraint_in_odometry': True,

'publish_odom_to_base_tf': False,

'publish_map_to_odom_tf': True,

'rectified_images': True,

'enable_imu_fusion': True,

'gyro_noise_density': 0.000244,

'gyro_random_walk': 0.000019393,

'accel_noise_density': 0.001862,

'accel_random_walk': 0.003,

'calibration_frequency': 200.0,

'image_jitter_threshold_ms': 22.00,

'base_frame': 'base_link',

'imu_frame': 'camera_gyro_optical_frame',

'enable_slam_visualization': True,

'enable_landmarks_view': True,

'enable_observations_view': True,

'camera_optical_frames': [

'camera_infra1_optical_frame',

'camera_infra2_optical_frame',

],

}],

remappings=[

('visual_slam/image_0', '/realsense_splitter_node/output/infra_1'),

('visual_slam/camera_info_0', 'camera/infra1/camera_info'),

('visual_slam/image_1', '/realsense_splitter_node/output/infra_2'),

('visual_slam/camera_info_1', 'camera/infra2/camera_info'),

('visual_slam/imu', 'camera/imu')

],

)

visual_slam_launch_container = ComposableNodeContainer(

name='visual_slam_launch_container',

namespace='',

package='rclcpp_components',

executable='component_container',

composable_node_descriptions=[visual_slam_node],

output='screen',

)

static_tf_node = Node(

package='tf2_ros',

executable='static_transform_publisher',

name='static_tf_base_to_camera',

arguments=['0.1', '0.075', '0.125', '0', '0', '0', 'base_link', 'camera_link'],

output='screen'

)

return launch.LaunchDescription([visual_slam_launch_container, static_tf_node, realsense_camera_node])

NVBLOX launch

from typing import List

from launch import Action, LaunchDescription

from launch_ros.descriptions import ComposableNode

import isaac_ros_launch_utils as lu

from nvblox_ros_python_utils.nvblox_constants import NVBLOX_CONTAINER_NAME

def add_nodes(args: lu.ArgumentContainer) -> List[Action]:

# Get configuration files

base_config = lu.get_path('visual_odometry', 'cfg/nvblox_base.yaml')

# Create nvblox node

nvblox_node = ComposableNode(

name='nvblox_node',

package='nvblox_ros',

plugin='nvblox::NvbloxNode',

remappings=[

('camera_0/depth/image', '/camera/depth/image_rect_raw'),

('camera_0/depth/camera_info', '/camera/depth/camera_info'),

('camera_0/color/image', '/camera/color/image_raw'),

('camera_0/color/camera_info', '/camera/color/camera_info'),

],

parameters=[base_config],

)

actions = []

if args.run_standalone:

actions.append(lu.component_container(args.container_name))

actions.append(lu.load_composable_nodes(args.container_name, [nvblox_node]))

return actions

def generate_launch_description() -> LaunchDescription:

args = lu.ArgumentContainer()

args.add_arg('container_name', NVBLOX_CONTAINER_NAME)

args.add_arg('run_standalone', 'True')

args.add_opaque_function(add_nodes)

return LaunchDescription(args.get_launch_actions())

NVBLOX config

ros__parameters:

# cuda stream setting

cuda_stream_type: 1 # 0: cuda default stream, 1: blocking async stream, 2: non blocking async stream, 3: per-thread default stream.

# miscellaneous

voxel_size: 0.05

num_cameras: 1

use_tf_transforms: true

mapping_type: "static_tsdf" # ["static_tsdf", "static_occupancy"]

# Parameters governing frequency of different processing steps in the reconstruction pipeline.

# Processing happens every n:th tick_period. <=0 means that no processing take place

tick_period_ms: 10

integrate_depth_rate_hz: 40.0

integrate_color_rate_hz: 5.0

integrate_lidar_rate_hz: 40.0

update_mesh_rate_hz: 5.0

update_esdf_rate_hz: 10.0

publish_layer_rate_hz: 5.0

publish_debug_vis_rate_hz: 2.0

decay_tsdf_rate_hz: 0.0 #5.0

decay_dynamic_occupancy_rate_hz: 10.0

clear_map_outside_radius_rate_hz: 0.0

after_shutdown_map_save_path: "/workspaces/isaac_ros-dev/maps/map"

# printing statistics on console

print_rates_to_console: false

print_timings_to_console: false

print_delays_to_console: false

print_queue_drops_to_console: false

print_statistics_on_console_period_ms: 10000

# esdf settings

esdf_mode: "2d" # ["2d", "3d"]

publish_esdf_distance_slice: true

# color settings

use_color: true

# depth settings

use_depth: true

# lidar settings

use_lidar: true

lidar_width: 1800

lidar_height: 31

lidar_min_valid_range_m: 0.1

lidar_max_valid_range_m: 50.0

use_non_equal_vertical_fov_lidar_params: false

# Input queues

maximum_input_queue_length: 10

# Map clearing settings

map_clearing_radius_m: 6.0 # no map clearing if < 0.0

map_clearing_frame_id: "base_link"

# QoS settings

input_qos: "SYSTEM_DEFAULT"

# Visualization

esdf_slice_bounds_visualization_attachment_frame_id: "base_link"

esdf_slice_bounds_visualization_side_length: 10.0

workspace_height_bounds_visualization_attachment_frame_id: "base_link"

workspace_height_bounds_visualization_side_length: 10.0

layer_visualization_min_tsdf_weight: 0.1

layer_visualization_exclusion_height_m: 0.5 #2.0

layer_visualization_exclusion_radius_m: 6.0 #7.0

layer_visualization_undo_gamma_correction: false

max_back_projection_distance: 6.0 #7.0

back_projection_subsampling: 1 # no subsampling if == 1

layer_streamer_bandwidth_limit_mbps: -1.0 # unlimited

multi_mapper:

connected_mask_component_size_threshold: 2000

remove_small_connected_components: true

static_mapper:

# mapper

do_depth_preprocessing: false

depth_preprocessing_num_dilations: 3

# projective integrator (tsdf/color/occupancy)

projective_integrator_max_integration_distance_m: 5.0

projective_integrator_truncation_distance_vox: 4.0

projective_integrator_weighting_mode: "inverse_square_tsdf_distance_penalty"

projective_integrator_max_weight: 5.0

projective_tsdf_integrator_invalid_depth_decay_factor: -1.0

# occupancy integrator

free_region_occupancy_probability: 0.45

occupied_region_occupancy_probability: 0.55

unobserved_region_occupancy_probability: 0.5

occupied_region_half_width_m: 0.1

# view calculator

raycast_subsampling_factor: 4

workspace_bounds_type: "unbounded" # ["unbounded", "height_bounds", "bounding_box"]

workspace_bounds_min_corner_x_m: 0.0

workspace_bounds_min_corner_y_m: 0.0

workspace_bounds_min_height_m: -0.5

workspace_bounds_max_corner_x_m: 0.0

workspace_bounds_max_corner_y_m: 0.0

workspace_bounds_max_height_m: 2.0

# esdf integrator

esdf_integrator_min_weight: 0.1

esdf_integrator_max_site_distance_vox: 2.0

esdf_integrator_max_distance_m: 2.0

# mesh integrator

mesh_integrator_min_weight: 0.1

mesh_integrator_weld_vertices: true

# tsdf decay integrator

tsdf_decay_factor: 0.95

tsdf_decayed_weight_threshold: 0.001

exclude_last_view_from_decay: true

tsdf_set_free_distance_on_decayed: false

tsdf_decayed_free_distance_vox: 4.0

decay_integrator_deallocate_decayed_blocks: true

# mesh streamer

layer_streamer_exclusion_height_m: 2.0

layer_streamer_exclusion_radius_m: 7.0