Leveraging NVIDIA Isaac Sim with YOLOv8: Advanced Object Detection and Segmentation in Warehouse Robotics

Introduction to NVIDIA Isaac Sim

In the rapidly evolving world of robotics, simulation plays a pivotal role in accelerating development and testing. NVIDIA Isaac Sim is at the forefront of this revolution, offering a robust platform for simulating AI-powered robots in high-fidelity environments. Whether you’re working on autonomous navigation, manipulation, or perception, Isaac Sim provides a rich set of tools to create, test, and refine your robotic applications.

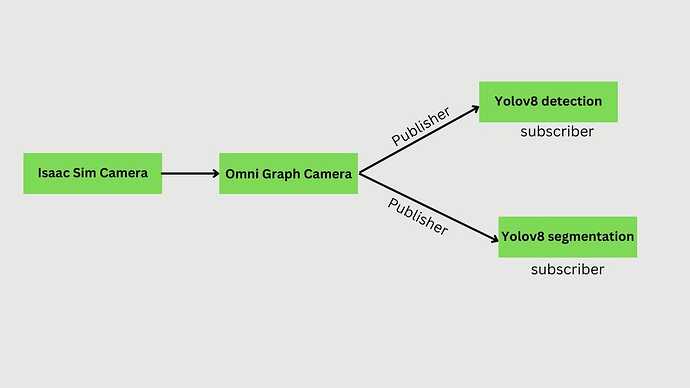

In this post, we integrate NVIDIA Isaac Sim with YOLOv8 for object detection and segmentation, focusing on a warehouse use case. We’ll set up Isaac Sim’s camera using Action Graph, process the camera feed with YOLOv8, and visualize the results with ROS2 tools such as RViz and rqt.

Setting Up the Isaac Sim Camera with Action Graph

Isaac Sim’s Action Graph is a versatile, node-based system that lets users create complex behaviors and data pipelines without extensive coding. The Action Graph can be used to control robot behavior, simulate sensor outputs, and, in our case, publish camera images.

Steps to Configure the Camera Publisher:

1. Creating the Camera Node:

- Start by launching Isaac Sim and setting up your simulation environment.

- Add a camera to your robot or place it at a fixed position in the warehouse environment.

- Use the Action Graph to create a node that captures the camera feed and publishes it as a ROS2 topic.

2. Setting Up the Action Graph:

- Within the Action Graph, link the camera node to a ROS2 publisher node.

- Configure the publisher to stream images on a specific topic, such as `/warehouse_camera/image_raw`.

3. Testing the Camera Feed:

- Before proceeding, confirm that the camera is publishing images, either by viewing the topic in a ROS2 tool such as `rqt_image_view` or by echoing it (`ros2 topic echo /warehouse_camera/image_raw`) to verify the data stream.
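Echoing the topic confirms that messages arrive; a further sanity check is that each image buffer is laid out consistently, i.e. `step` is at least `width` times the bytes per pixel and the data length equals `step * height`. The sketch below assumes a hypothetical `ImageMsg` dataclass that mirrors the core `sensor_msgs/Image` fields (it omits `header` and `is_bigendian`); in a real subscriber you would pass the received message instead. The bytes-per-pixel table covers only a few common encodings and is not exhaustive.

```python
from __future__ import annotations

from dataclasses import dataclass

# Bytes per pixel for a few common sensor_msgs/Image encodings (not exhaustive).
BYTES_PER_PIXEL = {
    "mono8": 1,
    "mono16": 2,
    "rgb8": 3,
    "bgr8": 3,
    "rgba8": 4,
    "bgra8": 4,
    "32FC1": 4,
}


@dataclass
class ImageMsg:
    """Hypothetical stand-in for the core fields of sensor_msgs/Image."""

    height: int
    width: int
    encoding: str
    step: int  # bytes per row, may include padding
    data: bytes


def check_image_msg(msg) -> list[str]:
    """Return layout problems found in an image message; empty means consistent.

    Works with ImageMsg or any object exposing the same attribute names,
    such as a received sensor_msgs/Image.
    """
    bpp = BYTES_PER_PIXEL.get(msg.encoding)
    if bpp is None:
        return [f"unknown encoding {msg.encoding!r}"]
    problems = []
    if msg.height <= 0 or msg.width <= 0:
        problems.append("image has zero size")
    if msg.step < msg.width * bpp:
        problems.append(f"step {msg.step} is smaller than width * bpp = {msg.width * bpp}")
    if len(msg.data) != msg.step * msg.height:
        problems.append(f"data length {len(msg.data)} != step * height = {msg.step * msg.height}")
    return problems


# Example: a 2x3 rgb8 frame with no row padding.
frame = ImageMsg(height=2, width=3, encoding="rgb8", step=9, data=bytes(18))
print(check_image_msg(frame))  # []
```

Checking `step` with `<` rather than `!=` is deliberate: some publishers pad each row, so a larger `step` is still valid.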

Introduction to YOLOv8

YOLO (You Only Look Once) is a family of object detection models that have set benchmarks for real-time detection. YOLOv8, released by Ultralytics in 2023, brings several enhancements that make it well suited to real-time applications, particularly in robotics.

Key Differences from Previous YOLO Models:

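- Improved Backbone: YOLOv8 replaces the C3 modules used in YOLOv5 with C2f modules, which concatenate the outputs of all internal bottleneck blocks for richer gradient flow and feature extraction.

- Anchor-Free Detection Head: YOLOv8 uses an anchor-free, decoupled head that predicts object centers directly, which reduces the number of candidate boxes that non-max suppression (NMS) has to filter and simplifies post-processing.

- Modular Design: A single Ultralytics API covers detection, segmentation, classification, and pose estimation, making YOLOv8 easy to customize and adapt to specific tasks.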
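The non-max suppression step mentioned above can be illustrated with the classic greedy algorithm: sort candidate boxes by confidence, then keep a box only if its intersection-over-union (IoU) with every already-kept box is at or below a threshold. This is a plain-Python sketch for intuition, not Ultralytics’ implementation, which uses a vectorized, per-class variant; the 0.7 default mirrors the `iou` default of Ultralytics’ predict mode but should be treated as a value to tune.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], iou_threshold: float = 0.7) -> List[int]:
    """Greedy, class-agnostic NMS; returns indices of kept boxes, best score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # A box survives only if it does not overlap any already-kept box too much.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Two heavily overlapping "person" boxes and one separate box.
boxes = [(0, 0, 10, 10), (1, 1, 10, 10), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]: box 1 has IoU 0.81 with box 0 and is suppressed
```

Running the same function separately on each class’s boxes gives per-class NMS, so a person standing next to a forklift is not suppressed by the forklift’s box.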

YOLOv8 Node Code Explanation

The provided Python code integrates the YOLOv8 model into a ROS2 node, allowing the model to process images from a camera feed and publish the results, including object detection and segmentation, on ROS topics for further use in the robotics ecosystem.

Key Components of the Code

- ROS2 Node Initialization:

- The `Yolov8Node` class inherits from `Node`, the base class for all ROS2 nodes in `rclpy`.

- The node is initialized with the name `'yolov8_node'`.

- Subscription to Camera Topic:

- The node subscribes to the camera feed topic (e.g., `/camera/image_raw`) with `create_subscription`, storing the handle in `self.subscription`. This topic supplies the input images that the YOLOv8 model processes, so it must match the topic configured in Isaac Sim’s Action Graph.

- The `camera_callback` function is invoked whenever a new image arrives on this topic.

- Publishers for Segmentation and Detection:

- Two publishers of ROS Image messages are created: one for the segmentation masks and one for the annotated detection image, so each output can be viewed or consumed independently.

- Model Loading:

- The YOLOv8 model is loaded using the `YOLO` class from the Ultralytics package. The model file is specified explicitly (e.g., `yolov8n-seg.pt` for a segmentation-enabled model).

- The model is moved to the appropriate device (`cuda` for GPU or `cpu` for CPU) based on availability.

- Image Processing in `camera_callback`:

- The input image is converted from the ROS Image message format to an OpenCV image using `CvBridge`.

- The image is passed through the YOLOv8 model to obtain detection and segmentation results.

- Segmentation Handling:

- If segmentation masks are available (`results[0].masks`), they are processed and published on a separate ROS topic.

- Each mask is moved from GPU to CPU with `.cpu()`, converted to a NumPy array, and then converted back into a ROS Image message using `CvBridge`.

- Detection Handling:

- The bounding boxes, class labels, and confidence scores are drawn onto the original image using `results[0].plot()`, which returns the annotated frame as a BGR NumPy array.

- The annotated image is then converted to a ROS Image message and published on a separate topic.

- Main Function:

- The `main` function initializes the ROS2 node and keeps it running with `rclpy.spin()` until the node is shut down.
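The segmentation-handling step above (GPU tensor to NumPy to ROS Image) hinges on a detail that is easy to miss: binary masks hold 0/1 values, which render as an almost black image in the `mono8` encoding unless they are scaled to 0/255. The sketch below merges several instance masks into one `mono8` buffer in plain Python so it runs anywhere; in the actual node the input would come from `results[0].masks.data.cpu().numpy()`, and the NumPy one-liner in the docstring does the same merge. The function name and the choice to merge all instances into a single image are assumptions for illustration; the node described above may publish each mask separately. Also note that `masks.data` is at the model’s inference resolution unless `retina_masks=True` is passed at prediction time, so its size may differ from the camera image.

```python
from typing import Sequence, Tuple


def masks_to_mono8(
    masks: Sequence[Sequence[Sequence[float]]], threshold: float = 0.5
) -> Tuple[int, int, bytes]:
    """Merge N HxW instance masks into one mono8 buffer (255 = object, 0 = background).

    Returns (height, width, data), suitable for a sensor_msgs/Image with
    encoding="mono8" and step=width. A NumPy equivalent for an (N, H, W) array is:
        (masks.max(axis=0) > threshold).astype(np.uint8) * 255
    """
    if len(masks) == 0:
        raise ValueError("no masks to merge")
    height, width = len(masks[0]), len(masks[0][0])
    out = bytearray(height * width)  # zero-initialized, i.e. all background
    for mask in masks:
        for y, row in enumerate(mask):
            for x, value in enumerate(row):
                if value > threshold:
                    # 255 rather than 1, so the mask is clearly visible in image viewers.
                    out[y * width + x] = 255
    return height, width, bytes(out)


two_masks = [
    [[0.0, 1.0], [0.0, 0.0]],  # instance 1 covers the top-right pixel
    [[0.0, 0.0], [1.0, 0.0]],  # instance 2 covers the bottom-left pixel
]
print(masks_to_mono8(two_masks))  # (2, 2, b'\x00\xff\xff\x00')
```

Publishing a single merged mask keeps the topic count fixed regardless of how many objects are in view; publishing per-instance masks preserves which pixels belong to which object.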

YOLOv8 Detection

The image above shows the results of deploying a YOLOv8 object detection model in a simulated warehouse environment in NVIDIA Isaac Sim. The model detects and classifies objects, in this case multiple people, within a cluttered scene.

Each bounding box is labeled “person” along with a confidence score, which reflects how confident the model is in that detection. The detections are the output of YOLOv8 running in the ROS2 environment described above.
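Downstream code usually needs detections like these as data rather than as an annotated image, for example to count the people in view. The sketch below filters detections by class name and confidence; the `Detection` dataclass, the `filter_detections` function, and the 0.5 threshold are hypothetical choices for illustration. The commented lines show one way the list could be filled from an Ultralytics `Results` object via its `boxes.cls`, `boxes.conf`, `boxes.xyxy`, and `names` attributes.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Detection:
    """Hypothetical flattened detection: class name, confidence, and (x1, y1, x2, y2) box."""

    label: str
    confidence: float
    box: Tuple[float, float, float, float]


def filter_detections(
    dets: Sequence[Detection], label: str = "person", min_conf: float = 0.5
) -> List[Detection]:
    """Keep detections of one class at or above a confidence threshold, best first."""
    kept = [d for d in dets if d.label == label and d.confidence >= min_conf]
    return sorted(kept, key=lambda d: d.confidence, reverse=True)


# With Ultralytics, the inputs could be built from a Results object like this
# (not executed here, since it needs a loaded model):
#   r = results[0]
#   dets = [Detection(r.names[int(c)], float(p), tuple(b.tolist()))
#           for c, p, b in zip(r.boxes.cls, r.boxes.conf, r.boxes.xyxy)]

dets = [
    Detection("person", 0.62, (10, 20, 50, 120)),
    Detection("person", 0.31, (200, 40, 230, 110)),
    Detection("truck", 0.88, (300, 50, 420, 160)),
    Detection("person", 0.91, (120, 30, 160, 140)),
]
people = filter_detections(dets)
print(len(people), [d.confidence for d in people])  # 2 [0.91, 0.62]
```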

YOLOv8 Segmentation

Future Work: NVIDIA DeepStream with YOLOv8 for Video Analytics

In an upcoming post, we’ll explore how to integrate YOLOv8 with NVIDIA DeepStream for video analytics. DeepStream processes video feeds in real time using the hardware acceleration of NVIDIA GPUs, which makes it well suited to applications in surveillance, retail, and smart cities.

Conclusion

The integration of YOLOv8 with NVIDIA Isaac Sim offers a powerful combination for developing, testing, and deploying AI-powered robotics solutions. By leveraging Isaac Sim’s high-fidelity simulation capabilities and YOLOv8’s advanced object detection and segmentation, developers can create robust, efficient, and scalable robotics systems tailored to complex real-world environments like warehouses. As we continue to explore the potential of these tools, the possibilities for innovation in robotics and AI are boundless. Stay tuned for our next exploration into video analytics with NVIDIA DeepStream!