When I generate point clouds using RGB and depth data, I observe distortions in the resulting point cloud. I suspect that the depth data, which represents the distance to the camera, might be inaccurate or misinterpreted during the conversion process.

There are two depth outputs, distance_to_camera and distance_to_image_plane. Could it be that I used the wrong one?

Hi @Weylon2023, have you resolved the problem? I have a similar one.

I’m trying to simulate an Intel RealSense D455 camera (I use the standard D455 camera rig from Isaac). I want to publish point clouds from the camera to ROS2 using OmniGraph, see the screenshot below:

When I visualise the point cloud in RViz, it looks distorted:

It seems like I should add a rectification node before the ROS2 camera helper, but I have no idea how to do it.

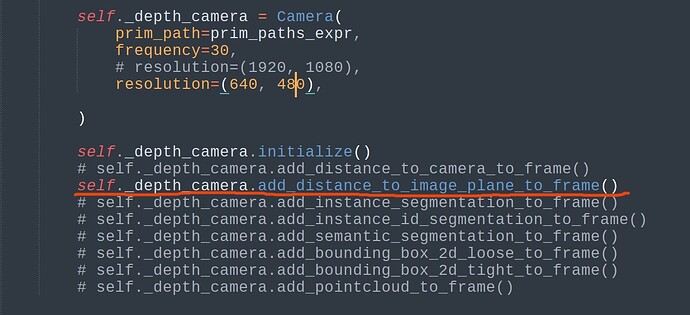

Hi @pavel.butov, I resolved the problem by replacing distance_to_camera with distance_to_image_plane. With that change I get correct RGB-D heightmaps and correct point clouds.
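For anyone wondering why this matters: distance_to_camera is the straight-line range from the camera centre to each point, while distance_to_image_plane is the depth along the optical axis, which is what the usual pinhole back-projection expects. Below is a minimal NumPy sketch of the relationship, not the code from this thread. The function names and the intrinsics (fx, fy, cx, cy) are made up for illustration, and it assumes an ideal pinhole camera with no lens distortion.

```python
import numpy as np


def range_to_plane_depth(distance_to_camera, fx, fy, cx, cy):
    """Convert per-pixel range (distance to camera centre) to z-depth.

    Assumes an ideal pinhole model; fx, fy, cx, cy are in pixels.
    """
    h, w = distance_to_camera.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    x_n = (u - cx) / fx  # normalised image coordinates
    y_n = (v - cy) / fy
    # Length of the ray direction (x_n, y_n, 1) for every pixel.
    ray_length = np.sqrt(1.0 + x_n**2 + y_n**2)
    return distance_to_camera / ray_length


def backproject(depth_z, fx, fy, cx, cy):
    """Back-project a z-depth image to an (H, W, 3) array of camera-frame points."""
    h, w = depth_z.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    x = (u - cx) / fx * depth_z
    y = (v - cy) / fy * depth_z
    return np.stack([x, y, depth_z], axis=-1)


if __name__ == "__main__":
    # Hypothetical intrinsics and a synthetic flat wall 2 m in front of the camera.
    fx = fy = 100.0
    cx, cy = 32.0, 24.0
    u, v = np.meshgrid(np.arange(64), np.arange(48))
    ray = np.sqrt(1.0 + ((u - cx) / fx) ** 2 + ((v - cy) / fy) ** 2)
    wall_range = 2.0 * ray  # what a range-style depth image would contain

    wrong = backproject(wall_range, fx, fy, cx, cy)  # range used as if it were z
    right = backproject(range_to_plane_depth(wall_range, fx, fy, cx, cy), fx, fy, cx, cy)
    print("z spread, range used directly:", np.ptp(wrong[..., 2]))
    print("z spread, after conversion:   ", np.ptp(right[..., 2]))
```

If the range image is fed straight into the back-projection, a flat wall comes out bowed towards the edges of the image, which matches the kind of distortion described above. Using distance_to_image_plane directly avoids the conversion altogether.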

@Weylon2023 thank you for your feedback! Could you please give me some more details? Where should I replace it (would you be so kind as to attach a screenshot or something)?

Hi @pavel.butov, I made the change in my camera settings. After the camera is initialized, you can set up the APIs that retrieve data from it.
