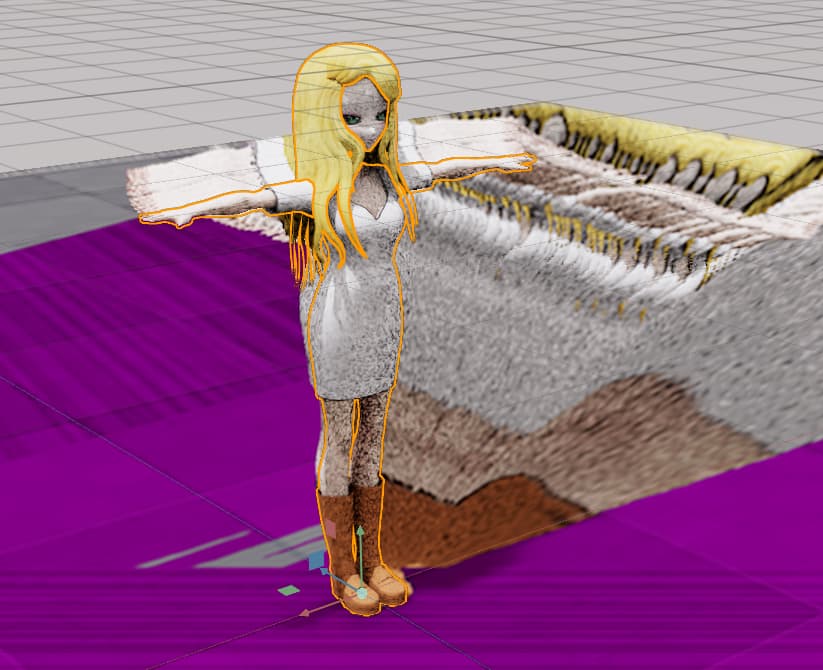

I was trying the retargeting approach. It was just looking strange, but you made the comment that the skeleton was in the wrong place, which makes sense. It looked like the retargeting was pulling the hips down to the ground.

Note: GLTF->Blender->OBJ->USD seems to lose the bones (OBJ has no concept of a skeleton, so that is expected). With FBX I have tried different settings, but the result does not load with textures in Omniverse. The skeleton was at the right layer, though.

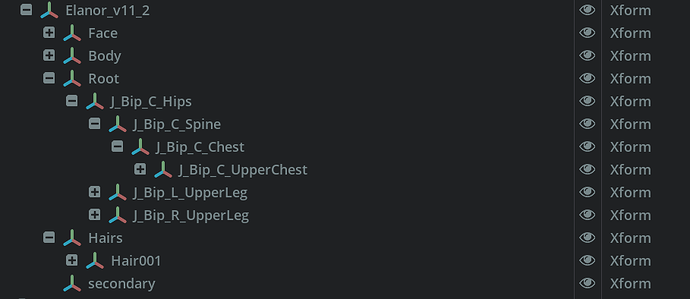

So the next idea is a “fix it up” Python script that restructures the USD file: load the USD, reorganize the layers, write it back out. I hope I can compare the FBX and GLTF versions to work out the correct structure. The skeleton was a bit interesting - it's a flat list of tags and layer names (I think). I will probably have a go in USDA by hand first with a text editor, and if I get it right, then try to automate it with a Python script. Good learning exercise. (I keep telling myself that.)
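As a first step towards comparing the two exports, here is a rough sketch that lists the prim hierarchy of a `.usda` text file so the FBX and GLTF versions can be diffed. It is plain text parsing, not the real USD API, and it assumes double-quoted strings, no multi-line strings, and no `#` or braces inside asset paths. The function names `outline_usda` and `only_in_first` are made up for this example.

```python
import re

# Matches prim declarations such as:  def Skeleton "Skel"   or   over "Root"
PRIM_RE = re.compile(r'^\s*(def|over|class)\s+(?:(\w+)\s+)?"([^"]+)"')
# Matches double-quoted strings so braces inside them can be ignored.
STRING_RE = re.compile(r'"(?:[^"\\]|\\.)*"')


def outline_usda(text):
    """Return [(prim_path, type_name), ...] for the prims declared in USDA text."""
    prims = []
    stack = []        # one entry per open "{": a prim name, or None for a dictionary
    pending = None    # (name, type) of a declared prim still waiting for its "{"
    paren_depth = 0   # > 0 while inside a "( ... )" metadata block
    for line in text.splitlines():
        match = PRIM_RE.match(line)
        if match:
            pending = (match.group(3), match.group(2) or "")
        # Blank out string contents and drop comments before counting brackets.
        code = STRING_RE.sub('""', line).split("#", 1)[0]
        for ch in code:
            if ch == "(":
                paren_depth += 1
            elif ch == ")":
                paren_depth -= 1
            elif ch == "{":
                if pending and paren_depth == 0:
                    # This brace opens the body of the pending prim.
                    stack.append(pending[0])
                    path = "/" + "/".join(name for name in stack if name)
                    prims.append((path, pending[1]))
                    pending = None
                else:
                    # A dictionary value (e.g. customData), not a prim body.
                    stack.append(None)
            elif ch == "}" and stack:
                stack.pop()
    return prims


def only_in_first(first, second):
    """Prims (path and type) present in the first outline but not the second."""
    return sorted(set(first) - set(second))
```

Running `outline_usda` on the text of each export (hypothetical file names like `from_fbx.usda` and `from_gltf.usda`) and passing the results to `only_in_first` in both directions should show where the Skeleton and Mesh prims sit differently. A binary `.usd` file would need converting to `.usda` first, for example with `usdcat`.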

I need to fix other things anyway, so I probably want such a script regardless. E.g. restructure the mesh layers to what Audio2Face wants. I have a long list of topics still to work out in Omniverse. How to blend animation clips? (I don’t think the Sequencer supports this at present - you can only put one after the other.) How to combine procedural animation (e.g. “look at target”) with animation clips (including blending between them)? I think everything is technically feasible - but some of it looks like a lot of work (probably too much for a hobby project).

The other “fun” I will have is with Unity (where all my existing animation clips are), which converts animation clips to “humanoid” animations. Rather than retargeting from one character to another, it converts animation clips to “humanoid” (generic), then retargets that to bone names. Each character has an “avatar description” which maps the generic bone names to the bones for that character. So it's kinda the same, but different enough to make bringing them across… interesting. (It looks technically possible, just more work.) Fun times!
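To make the avatar idea concrete, here is a minimal sketch of just the name indirection. The bone names, the `AVATAR_A` / `AVATAR_B` tables, and the clip layout (bone name to a list of key values) are all made up for illustration. As far as I understand it, Unity's real humanoid conversion does more than rename tracks, so this is not how Unity stores the data.

```python
# Hypothetical avatar descriptions: generic humanoid slot -> this character's bone name.
AVATAR_A = {"Hips": "pelvis", "Spine": "spine_01", "Head": "head"}
AVATAR_B = {"Hips": "J_Bip_C_Hips", "Spine": "J_Bip_C_Spine", "Head": "J_Bip_C_Head"}


def to_humanoid(clip, avatar):
    """Rename a clip's tracks from character bone names to generic humanoid slots.

    Tracks for bones the avatar does not describe (tails, props, ...) are dropped.
    """
    bone_to_slot = {bone: slot for slot, bone in avatar.items()}
    return {bone_to_slot[bone]: keys for bone, keys in clip.items() if bone in bone_to_slot}


def from_humanoid(clip, avatar):
    """Rename a generic humanoid clip's tracks to one character's bone names."""
    return {avatar[slot]: keys for slot, keys in clip.items() if slot in avatar}


# A clip authored on character A, played on character B via the generic form.
clip_a = {"pelvis": [0.0, 0.1], "head": [0.2], "tail": [0.5]}
clip_b = from_humanoid(to_humanoid(clip_a, AVATAR_A), AVATAR_B)
```

Renaming alone ignores differences in rest pose and proportions between characters, which is presumably where most of the extra work in bringing clips across would be.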

I really should get a scene working with the retargeting approach. I have a library of animation clips from many different original characters collected over time. So I have to wrap my head around what to do in Omniverse. Maybe convert all animation clips to a standard character, then retarget that one character to everyone else. Retargeting many source character animations to many target characters feels inefficient.
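A quick back-of-the-envelope check on why the standard-character route appeals. The character counts are invented, and `retarget_setups` is just a helper for this example.

```python
def retarget_setups(num_sources, num_targets, via_standard):
    """Count the retargeting setups needed for every source to reach every target.

    Direct: one setup per (source, target) pair.
    Via a standard character: one setup per source (to the standard character)
    plus one per target (from the standard character).
    """
    if via_standard:
        return num_sources + num_targets
    return num_sources * num_targets


# Hypothetical library: clips from 10 source characters, 8 characters to animate.
direct = retarget_setups(10, 8, via_standard=False)
hub = retarget_setups(10, 8, via_standard=True)
```

The catch is that every clip then goes through two retargeting steps instead of one, so any quality loss could add up.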

But I also have to work out hair bounces, hair in wind, cloth simulation for clothes, texture tiling for different skins, facial expressions, … it’s a big enough job that the logical part of my brain tells me “give up now, you will never finish it”. So this is currently more of a learning exercise.